Perceptual Conditional Generative Adversarial Networks for End-to-End Image Colourization

Paper and Code

Nov 27, 2018

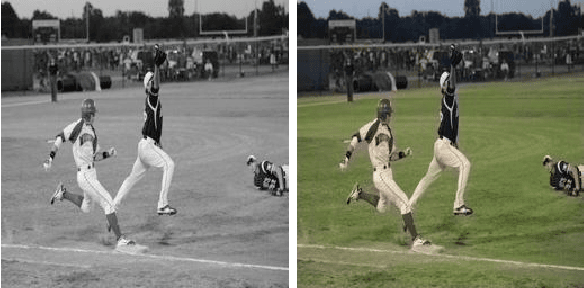

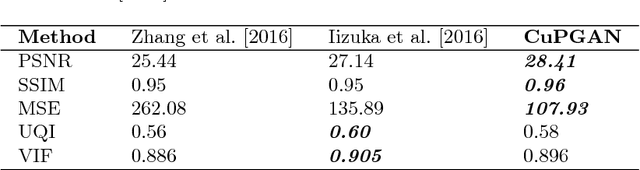

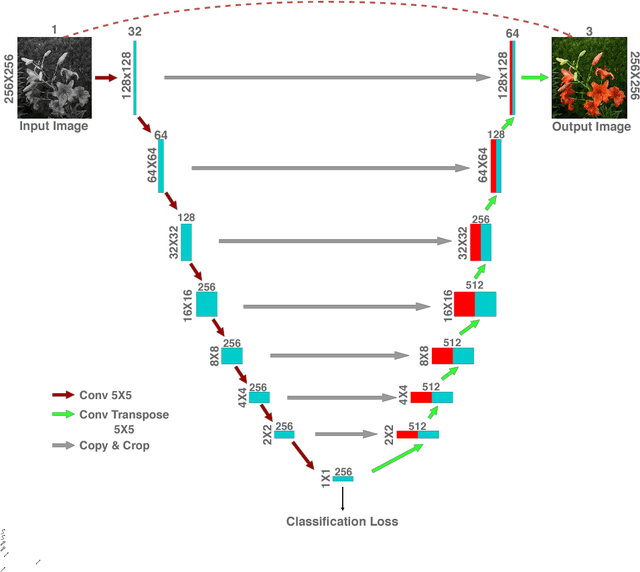

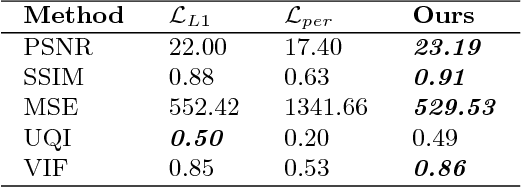

Colours are everywhere. They embody a significant part of human visual perception. In this paper, we explore the paradigm of hallucinating colours from a given gray-scale image. The problem of colourization has been dealt in previous literature but mostly in a supervised manner involving user-interference. With the emergence of Deep Learning methods numerous tasks related to computer vision and pattern recognition have been automatized and carried in an end-to-end fashion due to the availability of large data-sets and high-power computing systems. We investigate and build upon the recent success of Conditional Generative Adversarial Networks (cGANs) for Image-to-Image translations. In addition to using the training scheme in the basic cGAN, we propose an encoder-decoder generator network which utilizes the class-specific cross-entropy loss as well as the perceptual loss in addition to the original objective function of cGAN. We train our model on a large-scale dataset and present illustrative qualitative and quantitative analysis of our results. Our results vividly display the versatility and proficiency of our methods through life-like colourization outcomes.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge