Maxime Tremblay

Finite-rate sparse quantum codes aplenty

Jul 18, 2022

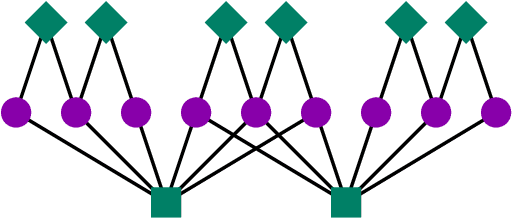

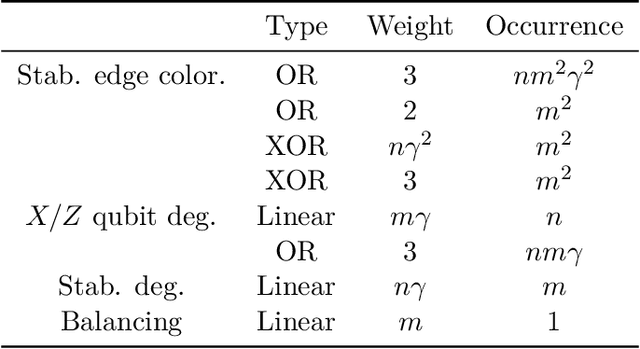

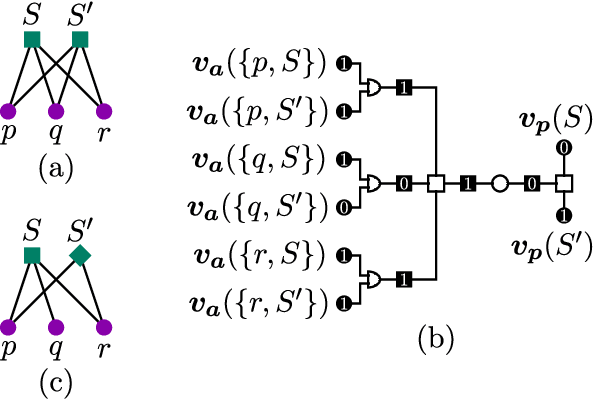

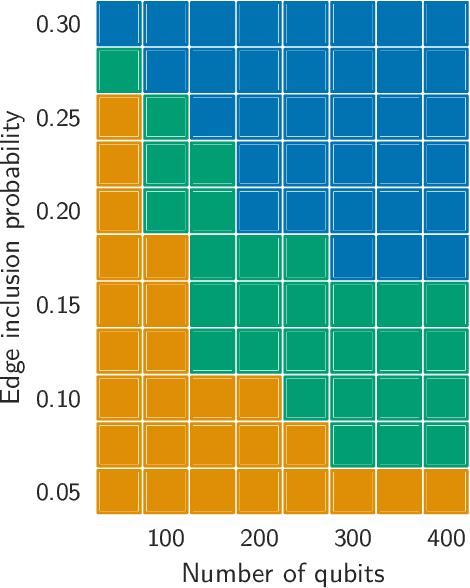

Abstract: We introduce a methodology for generating random multi-qubit stabilizer codes based on solving a constraint satisfaction problem (CSP) on random bipartite graphs. This framework allows us to enforce stabilizer commutation, X/Z balancing, finite rate, sparsity, and maximum-degree constraints simultaneously in a CSP that we can then solve numerically. Using a state-of-the-art CSP solver, we obtain convincing evidence for the existence of a satisfiability threshold. Furthermore, the extent of the satisfiable phase increases with the number of qubits. In that phase, finding sparse codes becomes an easy problem. Moreover, we observe that the sparse codes found in the satisfiable phase practically achieve the channel capacity for erasure noise. Our results show that intermediate-size finite-rate sparse quantum codes are easy to find, while also demonstrating a flexible methodology for generating good codes with custom properties. We therefore establish a complete and customizable pipeline for random quantum code discovery that can be geared towards near- to mid-term quantum processor layouts.
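As a rough illustration of three of the constraints named in the abstract (stabilizer commutation, sparsity, and maximum degree), the sketch below checks them on a given set of stabilizer generators. It is not the authors' CSP formulation or solver: it only verifies a candidate code, and the function names `paulis_commute` and `check_code` and the bounds `max_weight` and `max_qubit_degree` are hypothetical names chosen for this example. It relies on the standard fact that two Pauli strings commute exactly when they anticommute on an even number of qubits.

```python
from itertools import combinations


def paulis_commute(p, q):
    """Return True if two Pauli strings (over 'I', 'X', 'Y', 'Z') commute.

    Single-qubit Paulis anticommute when both are non-identity and differ,
    so the strings commute iff that happens on an even number of qubits.
    """
    anticommuting = sum(
        1 for a, b in zip(p, q) if a != "I" and b != "I" and a != b
    )
    return anticommuting % 2 == 0


def check_code(stabilizers, max_weight, max_qubit_degree):
    """Check commutation, sparsity, and degree bounds (hypothetical helper)."""
    n = len(stabilizers[0])
    # Weight of a generator: number of qubits it acts on non-trivially.
    weights = [sum(c != "I" for c in s) for s in stabilizers]
    # Degree of a qubit: number of generators acting on it non-trivially.
    degrees = [sum(s[i] != "I" for s in stabilizers) for i in range(n)]
    return {
        "commuting": all(
            paulis_commute(p, q) for p, q in combinations(stabilizers, 2)
        ),
        "sparse": max(weights) <= max_weight,
        "bounded_degree": max(degrees) <= max_qubit_degree,
    }


# Generators of the well-known five-qubit code, used here only as test data.
five_qubit = ["XZZXI", "IXZZX", "XIXZZ", "ZXIXZ"]
report = check_code(five_qubit, max_weight=4, max_qubit_degree=4)
```

In a CSP setting, conditions like these would be imposed as constraints on unknown Pauli labels rather than checked after the fact; the checker is simply the easiest way to see what each constraint means.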

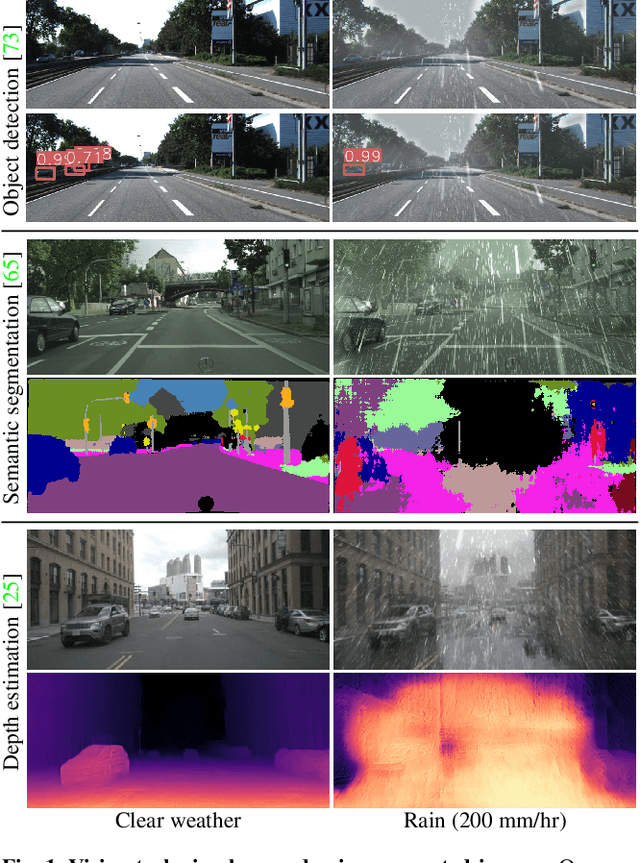

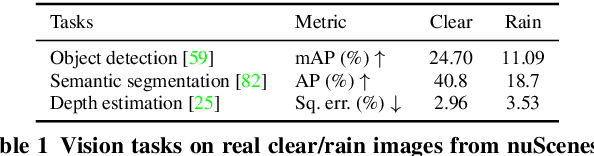

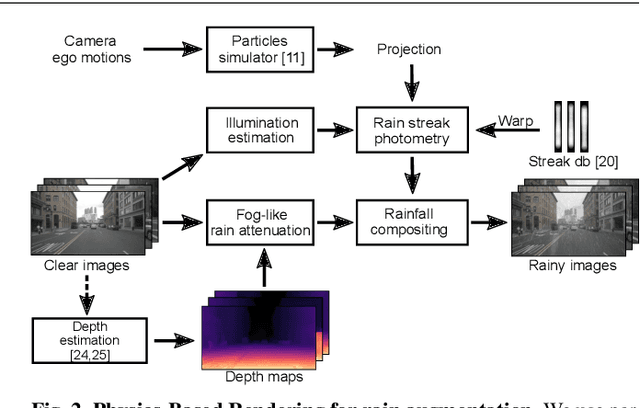

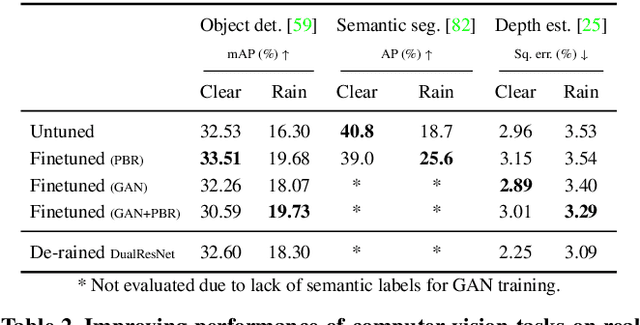

Rain rendering for evaluating and improving robustness to bad weather

Sep 06, 2020

Abstract: Rain fills the atmosphere with water particles, which breaks the common assumption that light travels unaltered from the scene to the camera. While it is well-known that rain affects computer vision algorithms, quantifying its impact is difficult. In this context, we present a rain rendering pipeline that enables the systematic evaluation of common computer vision algorithms under controlled amounts of rain. We present three different ways to add synthetic rain to existing image datasets: completely physics-based; completely data-driven; and a combination of both. The physics-based rain augmentation combines a physical particle simulator and accurate rain photometric modeling. We validate our rendering methods with a user study, demonstrating our rain is judged as much as 73% more realistic than the state-of-the-art. Using our generated rain-augmented KITTI, Cityscapes, and nuScenes datasets, we conduct a thorough evaluation of object detection, semantic segmentation, and depth estimation algorithms and show that their performance decreases in degraded weather, on the order of 15% for object detection, 60% for semantic segmentation, and a 6-fold increase in depth estimation error. Finetuning on our augmented synthetic data results in improvements of 21% on object detection, 37% on semantic segmentation, and 8% on depth estimation.

Learning to segment on tiny datasets: a new shape model

Aug 07, 2017

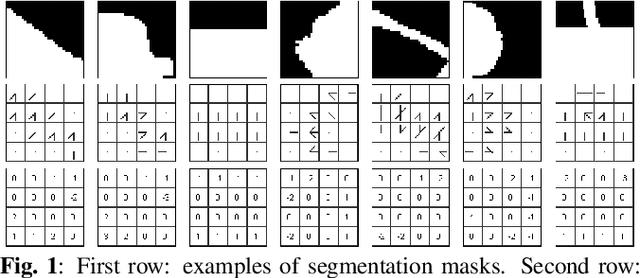

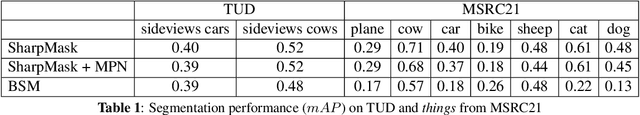

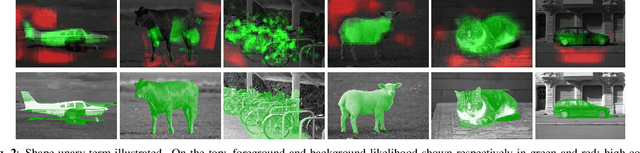

Abstract: Current object segmentation algorithms are based on the hypothesis that one has access to a very large amount of data. In this paper, we aim to segment objects using only tiny datasets. To this end, we propose a new automatic part-based object segmentation algorithm for non-deformable and semi-deformable objects in natural backgrounds. We have developed a novel shape descriptor which models the local boundaries of an object's part. This shape descriptor is used in a bag-of-words approach for object detection. Once the detection process is performed, we use the background and foreground likelihood given by our trained shape model, and the information from the image content, to define a dense CRF model. We use a mean field approximation to solve it and thus segment the object of interest. Performance evaluated on different datasets shows that our approach can sometimes achieve results near state-of-the-art techniques based on big data while requiring only a tiny training set.
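The mean field step mentioned in the abstract can be illustrated on a toy problem. The sketch below is a generic mean field update for a small, fully connected CRF with a Potts pairwise term. It is not the paper's model: the unary costs, the affinity matrix `weights`, the `potts_penalty` parameter, and the function names are all assumptions made for this example, whereas in the paper the unaries come from the trained shape model and the pairwise terms from the image content.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mean_field(unary, weights, potts_penalty=1.0, iterations=10):
    """Approximate per-pixel label marginals of a dense CRF.

    unary[i][l]   : cost of assigning label l to pixel i (lower is better)
    weights[i][j] : non-negative affinity between pixels i and j
    """
    n, num_labels = len(unary), len(unary[0])
    # Initialise the marginals from the unary costs alone.
    q = [softmax([-u for u in row]) for row in unary]
    for _ in range(iterations):
        new_q = []
        for i in range(n):
            scores = []
            for l in range(num_labels):
                # Expected Potts penalty: affinity-weighted probability
                # that another pixel takes a label different from l.
                msg = sum(
                    weights[i][j] * (1.0 - q[j][l])
                    for j in range(n)
                    if j != i
                )
                scores.append(-unary[i][l] - potts_penalty * msg)
            new_q.append(softmax(scores))
        q = new_q  # parallel update of all pixels
    return q


# Toy data: label 0 = background, label 1 = foreground.
# Pixels 0 and 1 clearly prefer foreground; pixel 2 slightly prefers background.
unary = [[2.0, 0.0], [2.0, 0.0], [0.0, 0.2]]
weights = [[0.0, 1.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
marginals = mean_field(unary, weights)
labels = [max(range(2), key=lambda l: row[l]) for row in marginals]
```

In this toy case the pairwise term pulls the ambiguous third pixel towards the label of its strongly connected neighbours, which is the smoothing effect a dense CRF is meant to provide.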
