Shamik Roy

Multi-Faceted Evaluation of Tool-Augmented Dialogue Systems

Oct 22, 2025

Abstract:Evaluating conversational AI systems that use external tools is challenging, as errors can arise from complex interactions among user, agent, and tools. While existing evaluation methods assess either user satisfaction or agents' tool-calling capabilities, they fail to capture critical errors in multi-turn tool-augmented dialogues, such as when agents misinterpret tool results yet appear satisfactory to users. We introduce TRACE, a benchmark of systematically synthesized tool-augmented conversations covering diverse error cases, and SCOPE, an evaluation framework that automatically discovers diverse error patterns and evaluation rubrics in tool-augmented dialogues. Experiments show SCOPE significantly outperforms the baseline, particularly on challenging cases where user satisfaction signals are misleading.

When Facts Change: Probing LLMs on Evolving Knowledge with evolveQA

Oct 22, 2025

Abstract:LLMs often fail to handle temporal knowledge conflicts, that is, contradictions arising when facts evolve over time within their training data. Existing studies evaluate this phenomenon through benchmarks built on structured knowledge bases like Wikidata, but they focus on widely covered, easily memorized popular entities and lack the dynamic structure needed to fairly evaluate LLMs with different knowledge cut-off dates. We introduce evolveQA, a benchmark specifically designed to evaluate LLMs on temporally evolving knowledge, constructed from three real-world, time-stamped corpora: AWS updates, Azure changes, and WHO disease outbreak reports. Our framework identifies naturally occurring knowledge evolution and generates questions with gold answers tailored to different LLM knowledge cut-off dates. Through extensive evaluation of 12 open- and closed-source LLMs across three knowledge probing formats, we demonstrate significant performance drops of up to 31% on evolveQA compared to static knowledge questions.

Constrained Decoding with Speculative Lookaheads

Dec 09, 2024

Abstract:Constrained decoding with lookahead heuristics (CDLH) is a highly effective method for aligning LLM generations to human preferences. However, the extensive lookahead roll-out operations for each generated token make CDLH prohibitively expensive, resulting in low adoption in practice. In contrast, common decoding strategies such as greedy decoding are extremely efficient, but achieve very low constraint satisfaction. We propose constrained decoding with speculative lookaheads (CDSL), a technique that significantly improves upon the inference efficiency of CDLH without experiencing the drastic performance reduction seen with greedy decoding. CDSL is motivated by the recently proposed idea of speculative decoding, which uses a much smaller draft LLM for generation and a larger target LLM for verification. In CDSL, the draft model is used to generate lookaheads, which are verified by a combination of the target LLM and task-specific reward functions. This process accelerates decoding by reducing the computational burden while maintaining strong performance. We evaluate CDSL on two constrained decoding tasks with three LLM families and achieve 2.2x to 12.15x speedup over CDLH without significant performance reduction.
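The draft-then-verify idea in the abstract above can be illustrated with a toy loop. This is only a minimal sketch under stated assumptions, not the paper's algorithm: the "models" are hypothetical scoring functions over a three-token vocabulary, and the names `draft_lookahead`, `accept_prefix`, `decode`, `min_prob`, and `min_reward` are invented for illustration. A cheap draft scorer proposes a short lookahead, a target scorer keeps the longest plausible prefix of it, and a reward function decides whether that prefix satisfies the constraint; otherwise the loop falls back to one token from the target.

```python
from typing import Callable, Dict, List, Sequence

Token = str
# Hypothetical stand-in for a language model: maps a prefix to next-token scores.
Scorer = Callable[[Sequence[Token]], Dict[Token, float]]
Reward = Callable[[Sequence[Token]], float]


def draft_lookahead(draft: Scorer, prefix: List[Token], k: int) -> List[Token]:
    """Greedily roll out k tokens with the cheap draft scorer."""
    out = list(prefix)
    for _ in range(k):
        scores = draft(out)
        out.append(max(scores, key=scores.get))
    return out[len(prefix):]


def accept_prefix(target: Scorer, reward: Reward, prefix: List[Token],
                  lookahead: List[Token], min_prob: float,
                  min_reward: float) -> List[Token]:
    """Keep the longest run of draft tokens the target finds plausible,
    but only if the reward on the resulting sequence is high enough."""
    accepted: List[Token] = []
    for tok in lookahead:
        if target(prefix + accepted).get(tok, 0.0) < min_prob:
            break
        accepted.append(tok)
    if accepted and reward(prefix + accepted) >= min_reward:
        return accepted
    return []


def decode(draft: Scorer, target: Scorer, reward: Reward, prefix: List[Token],
           steps: int, k: int = 3, min_prob: float = 0.2,
           min_reward: float = 1.0) -> List[Token]:
    """Generate `steps` tokens, accepting verified draft lookaheads when possible."""
    seq = list(prefix)
    while len(seq) - len(prefix) < steps:
        lookahead = draft_lookahead(draft, seq, k)
        accepted = accept_prefix(target, reward, seq, lookahead, min_prob, min_reward)
        if not accepted:
            # Fallback (an assumption of this sketch): take one greedy target token.
            scores = target(seq)
            accepted = [max(scores, key=scores.get)]
        seq.extend(accepted)
    return seq[len(prefix):][:steps]


# Toy scorers, purely illustrative.
def toy_draft(prefix: Sequence[Token]) -> Dict[Token, float]:
    return {"a": 0.6, "b": 0.3, "c": 0.1}


def toy_target(prefix: Sequence[Token]) -> Dict[Token, float]:
    if list(prefix).count("a") < 2:
        return {"a": 0.5, "b": 0.4, "c": 0.1}
    return {"a": 0.1, "b": 0.8, "c": 0.1}


def toy_reward(seq: Sequence[Token]) -> float:
    # Toy constraint: the sequence must contain the token "a".
    return 1.0 if "a" in seq else 0.0
```

With these toy scorers, `decode(toy_draft, toy_target, toy_reward, [], steps=4)` accepts two draft tokens in the first round and then falls back to the target twice, returning `["a", "a", "b", "b"]`.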

FLAP: Flow Adhering Planning with Constrained Decoding in LLMs

Mar 09, 2024

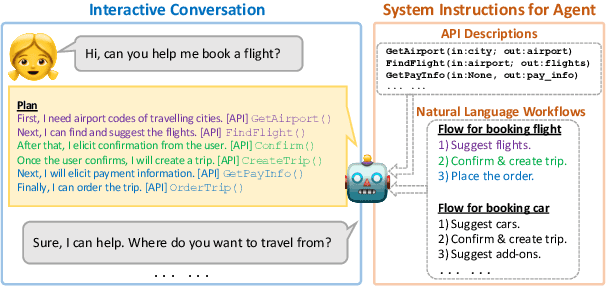

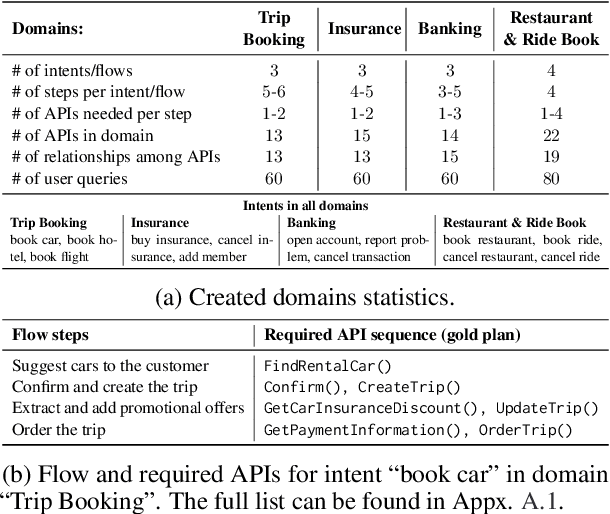

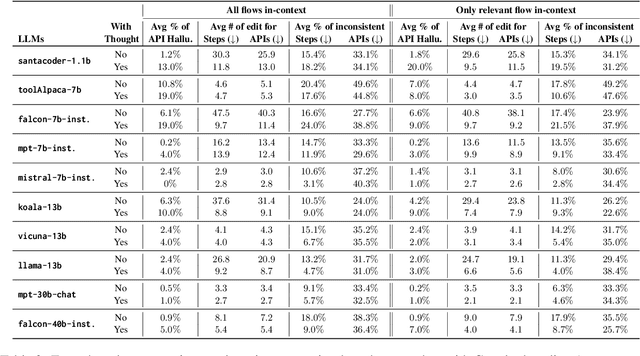

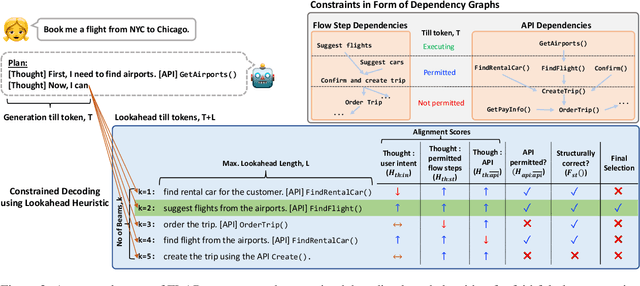

Abstract:Planning is a crucial task for agents in task-oriented dialogs (TODs). Human agents typically resolve user issues by following predefined workflows, decomposing workflow steps into actionable items, and performing actions by executing APIs in order, all of which require reasoning and planning. With the recent advances in LLMs, there have been increasing attempts to use LLMs for task planning and API usage. However, the faithfulness of the plans to predefined workflows and API dependencies is not guaranteed with LLMs because of their bias towards pretraining data. Moreover, in real life, workflows are custom-defined and prone to change, so quickly adapting agents to the changes is desirable. In this paper, we study faithful planning in TODs to resolve user intents by following predefined flows and preserving API dependencies. We propose a constrained decoding algorithm based on a lookahead heuristic for faithful planning. Our algorithm alleviates the need for finetuning LLMs using domain-specific data, outperforms other decoding and prompting-based baselines, and, when applied to smaller LLMs (7B), achieves performance comparable to larger LLMs (30B-40B).

"A Tale of Two Movements": Identifying and Comparing Perspectives in #BlackLivesMatter and #BlueLivesMatter Movements-related Tweets using Weakly Supervised Graph-based Structured Prediction

Oct 21, 2023

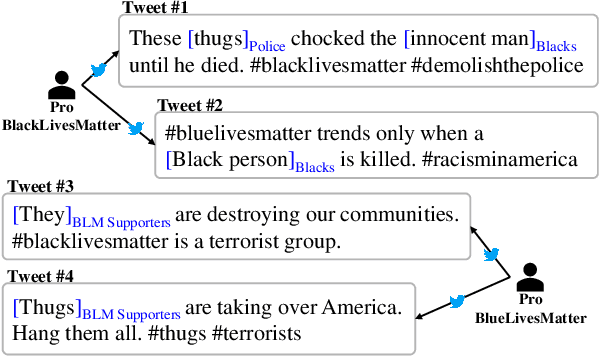

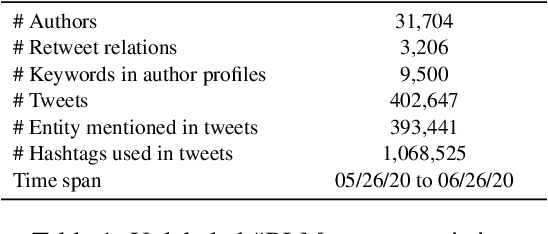

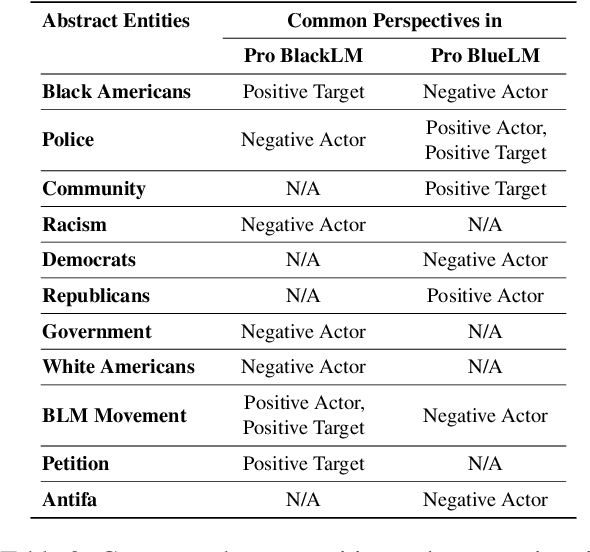

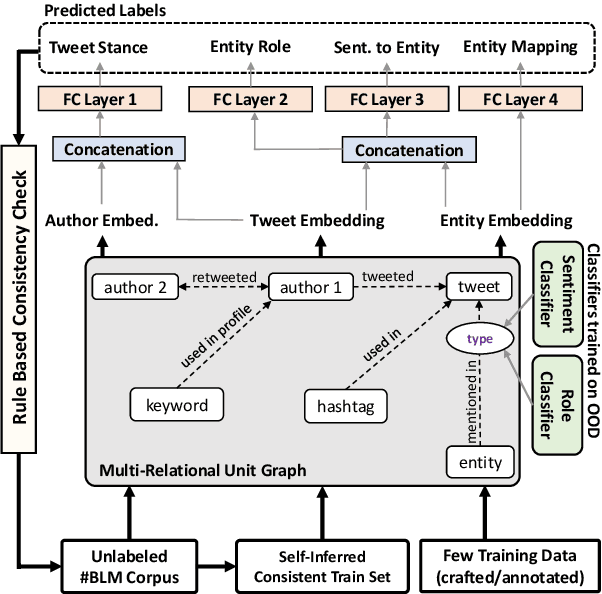

Abstract:Social media has become a major driver of social change by facilitating the formation of online social movements. Automatically understanding the perspectives driving a movement and the voices opposing it is a challenging task, as annotated data is difficult to obtain. We propose a weakly supervised graph-based approach that explicitly models perspectives in #BlackLivesMatter-related tweets. Our proposed approach utilizes a social-linguistic representation of the data. We convert the text to a graph by breaking it into structured elements and connecting it with the social network of authors; structured prediction is then done over the elements to identify perspectives. Our approach uses a small seed set of labeled examples. We experiment with large language models for generating artificial training examples, compare them to manual annotation, and find that they achieve comparable performance. We perform quantitative and qualitative analyses using a human-annotated test set. Our model outperforms multitask baselines by a large margin, successfully characterizing the perspectives supporting and opposing #BLM.

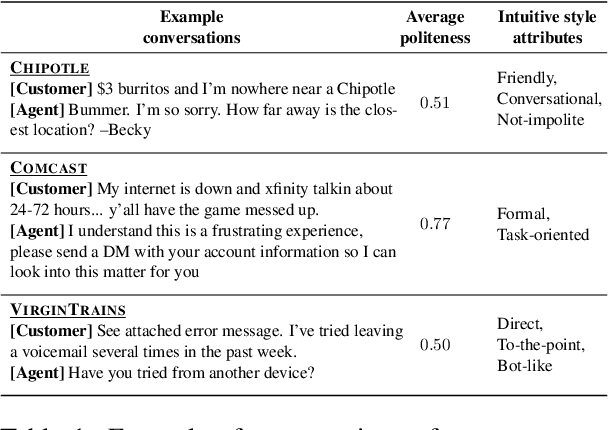

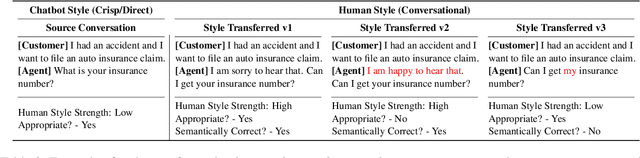

Conversation Style Transfer using Few-Shot Learning

Feb 16, 2023

Abstract:Conventional text style transfer approaches for natural language focus on sentence-level style transfer without considering contextual information, and the style is described with attributes (e.g., formality). When applying style transfer on conversations such as task-oriented dialogues, existing approaches suffer from these limitations as context can play an important role and the style attributes are often difficult to define in conversations. In this paper, we introduce conversation style transfer as a few-shot learning problem, where the model learns to perform style transfer by observing only the target-style dialogue examples. We propose a novel in-context learning approach to solve the task with style-free dialogues as a pivot. Human evaluation shows that by incorporating multi-turn context, the model is able to match the target style while having better appropriateness and semantic correctness compared to utterance-level style transfer. Additionally, we show that conversation style transfer can also benefit downstream tasks. Results on multi-domain intent classification tasks show improvement in F1 scores after transferring the style of training data to match the style of test data.

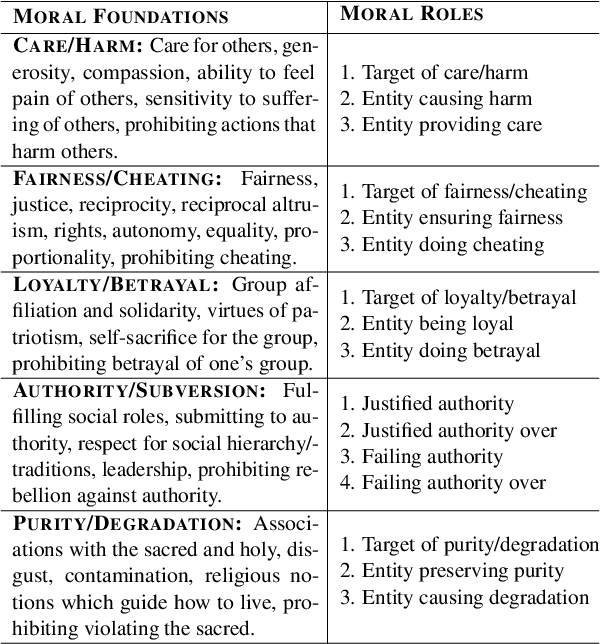

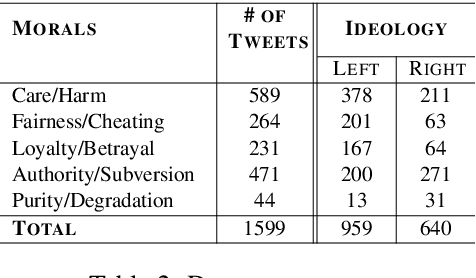

Towards Few-Shot Identification of Morality Frames using In-Context Learning

Feb 03, 2023

Abstract:Data scarcity is a common problem in NLP, especially when the annotation pertains to nuanced socio-linguistic concepts that require specialized knowledge. As a result, few-shot identification of these concepts is desirable. Few-shot in-context learning using pre-trained Large Language Models (LLMs) has recently been applied successfully in many NLP tasks. In this paper, we study few-shot identification of a psycho-linguistic concept, Morality Frames (Roy et al., 2021), using LLMs. Morality frames are a representation framework that provides a holistic view of the moral sentiment expressed in text, identifying the relevant moral foundation (Haidt and Graham, 2007) and, at a finer level of granularity, the moral sentiment expressed towards the entities mentioned in the text. Previous studies relied on human annotation to identify morality frames in text, which is expensive. In this paper, we propose prompting-based approaches using pretrained Large Language Models for identification of morality frames, relying only on few-shot exemplars. We compare our models' performance with few-shot RoBERTa and find promising results.

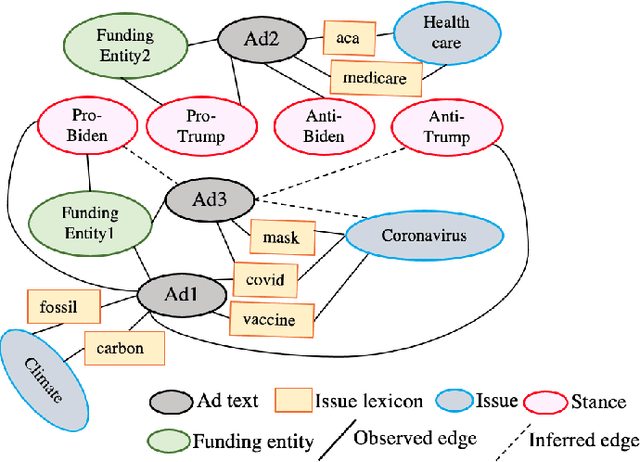

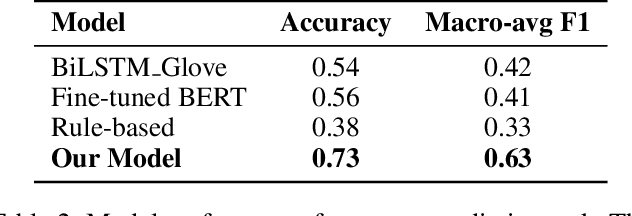

Weakly Supervised Learning for Analyzing Political Campaigns on Facebook

Oct 19, 2022

Abstract:Social media platforms are currently the main channel for political messaging, allowing politicians to target specific demographics and adapt based on their reactions. However, making this communication transparent is challenging, as the messaging is tightly coupled with its intended audience and often echoed by multiple stakeholders interested in advancing specific policies. Our goal in this paper is to take a first step towards understanding these highly decentralized settings. We propose a weakly supervised approach to identify the stance and issue of political ads on Facebook and analyze how political campaigns use demographic targeting by location, gender, or age. Furthermore, we analyze the temporal dynamics of the political ads on election polls.

Identifying Morality Frames in Political Tweets using Relational Learning

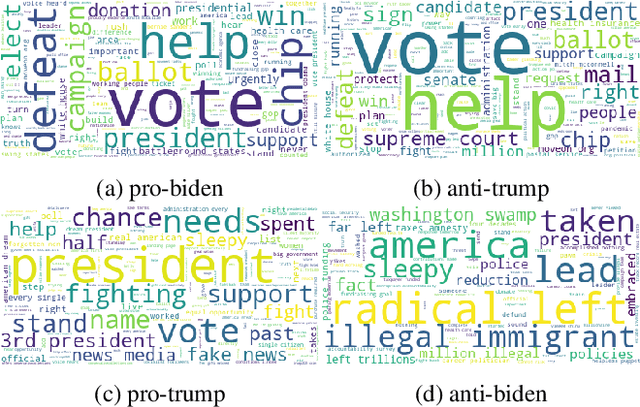

Sep 09, 2021

Abstract:Extracting moral sentiment from text is a vital component in understanding public opinion, social movements, and policy decisions. The Moral Foundation Theory identifies five moral foundations, each associated with a positive and negative polarity. However, moral sentiment is often motivated by its targets, which can correspond to individuals or collective entities. In this paper, we introduce morality frames, a representation framework for organizing moral attitudes directed at different entities, and build a novel, high-quality annotated dataset of tweets written by US politicians. Then, we propose a relational learning model to predict moral attitudes towards entities and moral foundations jointly. We perform qualitative and quantitative evaluations, showing that moral sentiment towards entities differs highly across political ideologies.

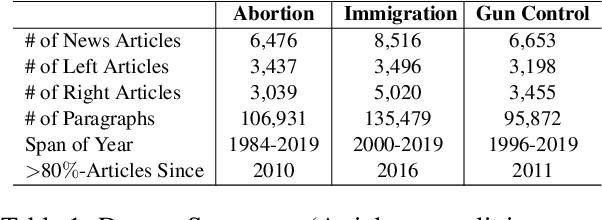

Weakly Supervised Learning of Nuanced Frames for Analyzing Polarization in News Media

Sep 21, 2020

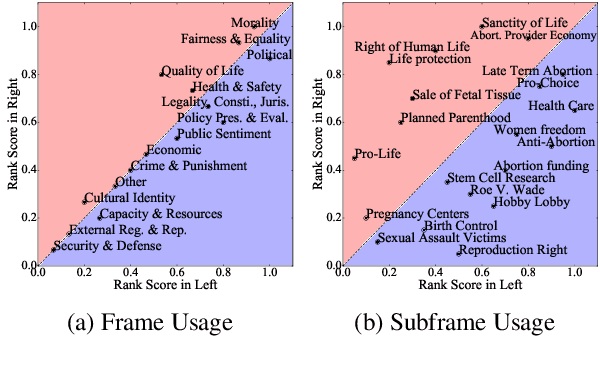

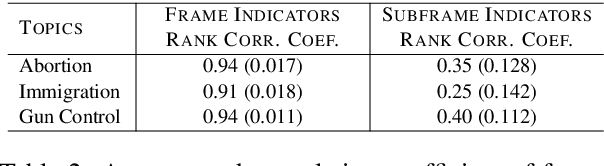

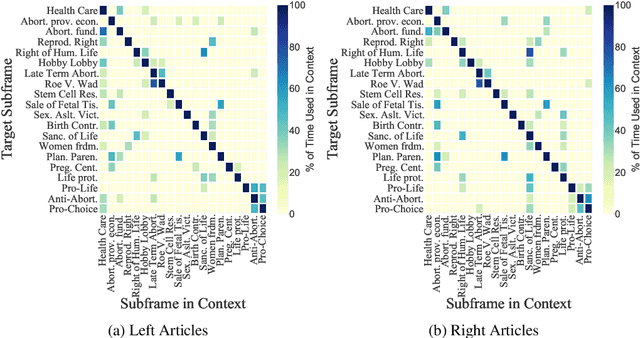

Abstract:In this paper, we propose a minimally supervised approach for identifying nuanced frames in news article coverage of politically divisive topics. We propose breaking the broad policy frames suggested by Boydstun et al. (2014) into fine-grained subframes that better capture differences in political ideology. We evaluate the suggested subframes and their embeddings, learned using minimal supervision, over three topics: immigration, gun control, and abortion. We demonstrate the ability of the subframes to capture ideological differences and analyze political discourse in news media.
