Shaheer Ullah Saeed

T2-Only Prostate Cancer Prediction by Meta-Learning from Bi-Parametric MR Imaging

Nov 11, 2024

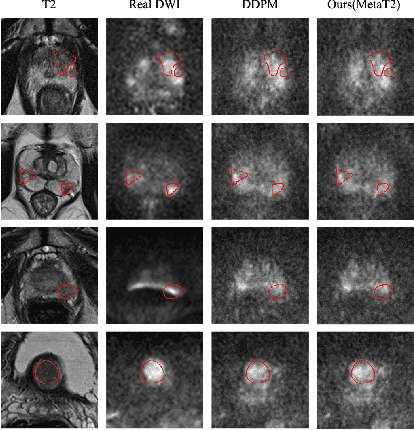

Abstract: Current imaging-based prostate cancer diagnosis requires both MR T2-weighted (T2w) and diffusion-weighted imaging (DWI) sequences, with additional sequences for potentially greater accuracy improvement. However, measuring diffusion patterns in DWI sequences can be time-consuming, prone to artifacts and sensitive to imaging parameters. While machine learning (ML) models have demonstrated radiologist-level accuracy in detecting prostate cancer from these two sequences, this study investigates the potential of ML-enabled methods using only the T2w sequence as input during inference time. We first discuss the technical feasibility of such a T2-only approach, and then propose a novel ML formulation, where DWI sequences - readily available for training purposes - are only used to train a meta-learning model, which subsequently only uses T2w sequences at inference. Using multiple datasets from more than 3,000 prostate cancer patients, we report superior or comparable performance in localising radiologist-identified prostate cancer using our proposed T2-only models, compared with alternative models using T2-only or both sequences as input. Real patient cases are presented and discussed to demonstrate, for the first time, the exclusively true-positive cases from models with different input sequences.

SAMReg: SAM-enabled Image Registration with ROI-based Correspondence

Oct 17, 2024

Abstract: This paper describes a new spatial correspondence representation based on paired regions-of-interest (ROIs), for medical image registration. The distinct properties of the proposed ROI-based correspondence are discussed, in the context of potential benefits in clinical applications following image registration, compared with alternative correspondence-representing approaches, such as those based on sampled displacements and spatial transformation functions. These benefits include a clear connection between learning-based image registration and segmentation, which in turn motivates two cases of image registration approaches using (pre-)trained segmentation networks. Based on the segment anything model (SAM), a vision foundation model for segmentation, we develop a new registration algorithm SAMReg, which does not require any training (or training data), gradient-based fine-tuning or prompt engineering. The proposed SAMReg models are evaluated across five real-world applications, including intra-subject registration tasks with cardiac MR and lung CT, challenging inter-subject registration scenarios with prostate MR and retinal imaging, and an additional evaluation with a non-clinical example with aerial image registration. The proposed methods outperform both intensity-based iterative algorithms and DDF-predicting learning-based networks across tested metrics including Dice and target registration errors on anatomical structures, and further demonstrate competitive performance compared to weakly-supervised registration approaches that rely on fully-segmented training data. Open source code and examples are available at: https://github.com/sqhuang0103/SAMReg.git.
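
The abstract above reports Dice and target registration error (TRE) on anatomical structures. As a minimal sketch of how such metrics are commonly computed on a pair of binary ROI masks, the following uses NumPy; the function names `dice` and `centroid_tre` are hypothetical, and the centroid distance is only a simple stand-in for TRE, not necessarily the definition used in the paper.

```python
import numpy as np

def dice(a, b):
    """Dice overlap between two binary masks (1.0 if both are empty)."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0
    return 2.0 * np.logical_and(a, b).sum() / denom

def centroid_tre(a, b, spacing=1.0):
    """Distance between mask centroids, scaled by voxel spacing.

    Assumption: the centroid distance is used here as a simple proxy for TRE.
    """
    ca = np.argwhere(a).mean(axis=0)
    cb = np.argwhere(b).mean(axis=0)
    return float(np.linalg.norm((ca - cb) * spacing))

# Toy example: a 4x4 square and the same square shifted down by one row.
fixed = np.zeros((8, 8), dtype=bool)
fixed[2:6, 2:6] = True
warped = np.zeros((8, 8), dtype=bool)
warped[3:7, 2:6] = True

print(dice(fixed, warped))          # overlap is 12 of 16 pixels per mask
print(centroid_tre(fixed, warped))  # centroids differ by one row
```

In this toy case the two masks share 12 of their 16 pixels, so Dice is 0.75, and the centroids are one pixel apart.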

One registration is worth two segmentations

May 17, 2024

Abstract: The goal of image registration is to establish spatial correspondence between two or more images, traditionally through dense displacement fields (DDFs) or parametric transformations (e.g., rigid, affine, and splines). Rethinking the existing paradigms of achieving alignment via spatial transformations, we uncover an alternative but more intuitive correspondence representation: a set of corresponding regions-of-interest (ROI) pairs, which we demonstrate to have representational capability as sufficient as that of other correspondence representation methods. Further, it is neither necessary nor sufficient for these ROIs to hold specific anatomical or semantic significance. In turn, we formulate image registration as searching for the same set of corresponding ROIs from both moving and fixed images - in other words, two multi-class segmentation tasks on a pair of images. For a general-purpose and practical implementation, we integrate the segment anything model (SAM) into our proposed algorithms, resulting in a SAM-enabled registration (SAMReg) that does not require any training data, gradient-based fine-tuning or engineered prompts. We experimentally show that the proposed SAMReg is capable of segmenting and matching multiple ROI pairs, which establish sufficiently accurate correspondences, in three clinical applications of registering prostate MR, cardiac MR and abdominal CT images. Based on metrics including Dice and target registration errors on anatomical structures, the proposed registration outperforms both intensity-based iterative algorithms and DDF-predicting learning-based networks, even yielding competitive performance with weakly-supervised registration which requires fully-segmented training data.
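
To illustrate the idea that a set of paired ROIs can carry spatial correspondence, the sketch below reduces each ROI pair to a pair of centroids and fits a least-squares affine map between them. This is an illustrative assumption, not the SAMReg algorithm: the helper names (`roi_centroids`, `fit_affine`, `apply_affine`, `box`) are hypothetical, and the paper does not state that it converts ROI pairs to an affine transform.

```python
import numpy as np

def roi_centroids(masks):
    """Centroid (in pixel coordinates) of each binary ROI mask."""
    return np.array([np.argwhere(m).mean(axis=0) for m in masks])

def fit_affine(src, dst):
    """Least-squares affine map P such that [src, 1] @ P approximates dst."""
    X = np.hstack([src, np.ones((src.shape[0], 1))])
    P, *_ = np.linalg.lstsq(X, dst, rcond=None)
    return P  # shape (d + 1, d); the last row is the translation

def apply_affine(P, pts):
    """Apply the fitted affine map to an array of points."""
    return np.hstack([pts, np.ones((len(pts), 1))]) @ P

def box(r, c, size=2, shape=(16, 16)):
    """Hypothetical helper: a small square ROI with top-left corner (r, c)."""
    m = np.zeros(shape, dtype=bool)
    m[r:r + size, c:c + size] = True
    return m

# Three non-collinear ROIs in the moving image, and the same ROIs
# shifted by (+2 rows, +1 column) in the fixed image.
corners = [(2, 2), (2, 10), (10, 4)]
moving_rois = [box(r, c) for r, c in corners]
fixed_rois = [box(r + 2, c + 1) for r, c in corners]

mov_c = roi_centroids(moving_rois)
fix_c = roi_centroids(fixed_rois)
P = fit_affine(mov_c, fix_c)
print(np.round(P, 3))  # identity linear part, translation in the last row
```

With three non-collinear ROI pairs in 2D the affine map is determined exactly; with more pairs the fit becomes a least-squares estimate, and denser transformations would need many more ROIs.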
