Seungsu Kim

Disentangled Object-Centric Image Representation for Robotic Manipulation

Mar 14, 2025

Abstract: Learning robotic manipulation skills from vision is a promising approach for developing robotics applications that can generalize broadly to real-world scenarios. As such, many approaches to enable this vision have been explored with fruitful results. In particular, object-centric representation methods have been shown to provide better inductive biases for skill learning, leading to improved performance and generalization. Nonetheless, we show that object-centric methods can struggle to learn simple manipulation skills in multi-object environments. Thus, we propose DOCIR, an object-centric framework that introduces a disentangled representation for objects of interest, obstacles, and robot embodiment. We show that this approach leads to state-of-the-art performance for learning pick-and-place skills from visual inputs in multi-object environments and generalizes at test time to changing objects of interest and distractors in the scene. Furthermore, we show its efficacy both in simulation and in zero-shot transfer to the real world.

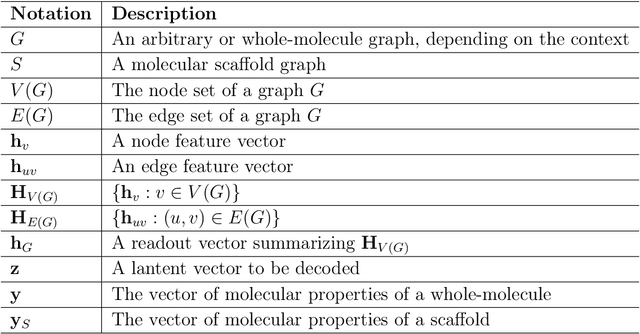

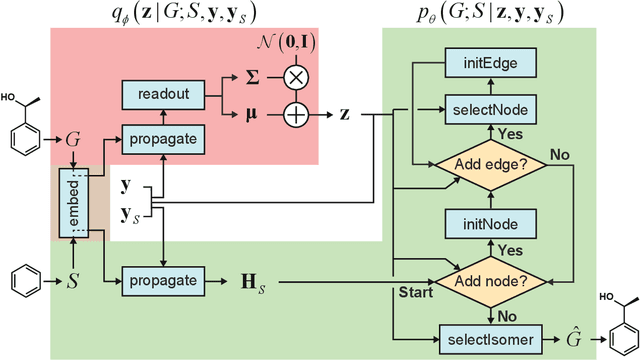

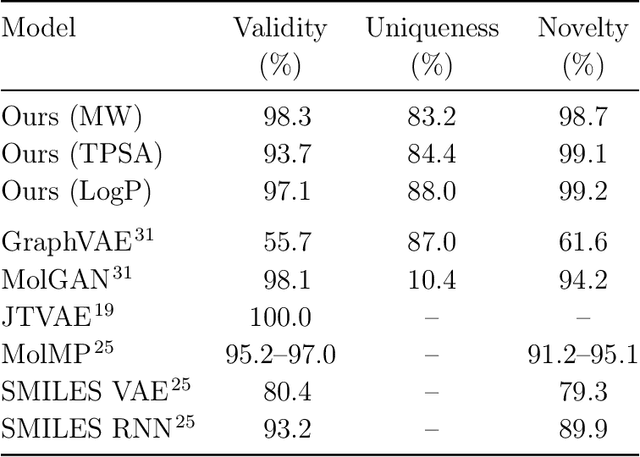

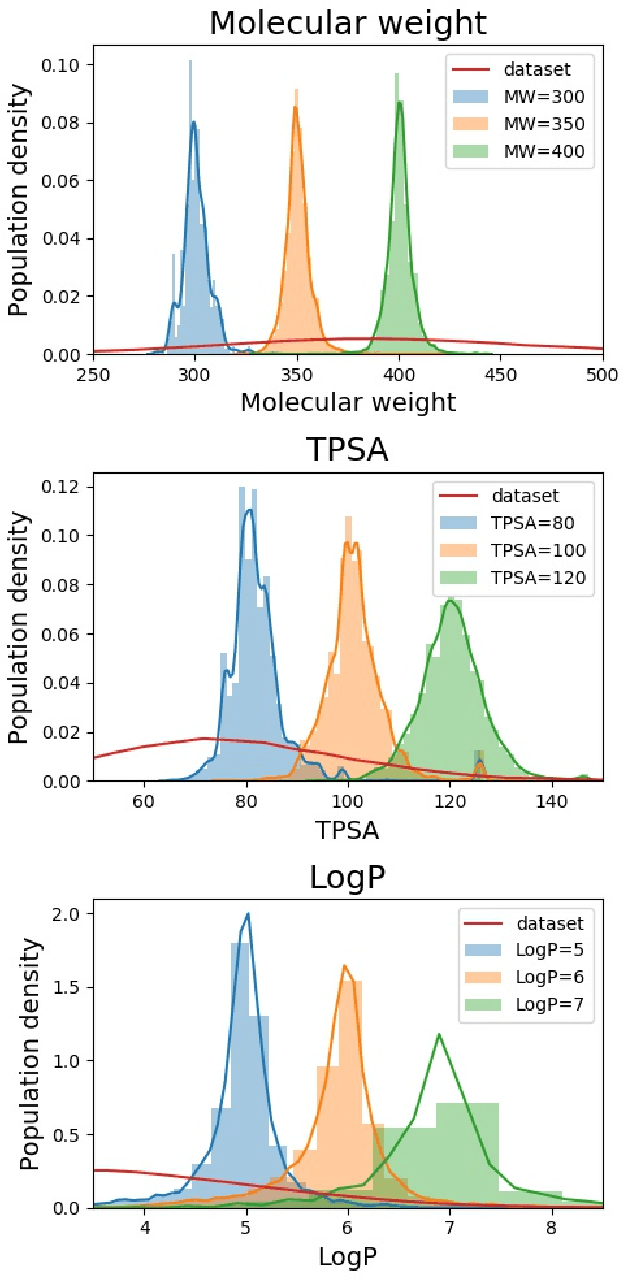

Scaffold-based molecular design using graph generative model

May 31, 2019

Abstract: Searching for new molecules in areas like drug discovery often starts from the core structures of candidate molecules to optimize the properties of interest. This practice calls for a strategy for designing molecules that retain a particular scaffold as a substructure. To this end, our present work proposes a scaffold-based molecular generative model. The model generates molecular graphs by extending the graph of a scaffold through sequential additions of vertices and edges. In contrast to previous related models, our model guarantees that the generated molecules retain the given scaffold. Our evaluation of the model using unseen scaffolds showed that the validity, uniqueness, and novelty of the generated molecules were as high as with seen scaffolds. This confirms that the model can generalize the learned chemical rules of adding atoms and bonds rather than simply memorizing the mapping from scaffolds to molecules during learning. Furthermore, despite the constraint of fixed core structures, our model could simultaneously control multiple molecular properties when generating new molecules.

From exploration to control: learning object manipulation skills through novelty search and local adaptation

Jan 03, 2019

Abstract: Programming a robot to deal with open-ended tasks remains a challenge, in particular if the robot has to manipulate objects. Launching, grasping, pushing, or any other object interaction can be simulated, but the corresponding models are not reversible, and the robot behavior thus cannot be directly deduced. These behaviors are hard to learn without a demonstration, as the search space is large and the reward sparse. We propose a method to autonomously generate a diverse repertoire of simple object interaction behaviors in simulation. Our goal is to bootstrap a robot learning and development process with limited information about what the robot has to achieve and how. This repertoire can be exploited to solve different tasks in reality thanks to a proposed adaptation method, or could be used as a training set for data-hungry algorithms. The proposed approach relies on the definition of a goal space and generates a repertoire of trajectories to reach attainable goals, thus allowing the robot to control this goal space. The repertoire is built with an off-the-shelf simulation thanks to a quality-diversity algorithm. The result is a set of solutions tested in simulation only. This may give rise to two different problems: (1) as the repertoire is discrete and finite, it may not contain the trajectory to deal with a given situation, or (2) some trajectories may lead to a behavior in reality that differs from simulation because of a reality gap. We propose an approach to deal with both issues by using a local linearization between the motion parameters and the observed effects. Furthermore, we present an approach to update the existing solution repertoire with the tests done on the real robot. The approach has been validated in two different experiments on the Baxter robot: a ball-launching task and a joystick-manipulation task.
