Seonkyu Lim

KFinEval-Pilot: A Comprehensive Benchmark Suite for Korean Financial Language Understanding

Apr 17, 2025

Abstract: We introduce KFinEval-Pilot, a benchmark suite specifically designed to evaluate large language models (LLMs) in the Korean financial domain. Addressing the limitations of existing English-centric benchmarks, KFinEval-Pilot comprises over 1,000 curated questions across three critical areas: financial knowledge, legal reasoning, and financial toxicity. The benchmark is constructed through a semi-automated pipeline that combines GPT-4-generated prompts with expert validation to ensure domain relevance and factual accuracy. We evaluate a range of representative LLMs and observe notable performance differences across models, with trade-offs between task accuracy and output safety across different model families. These results highlight persistent challenges in applying LLMs to high-stakes financial applications, particularly in reasoning and safety. Grounded in real-world financial use cases and aligned with the Korean regulatory and linguistic context, KFinEval-Pilot serves as an early diagnostic tool for developing safer and more reliable financial AI systems.

Bridging Dynamic Factor Models and Neural Controlled Differential Equations for Nowcasting GDP

Sep 13, 2024

Abstract: Gross domestic product (GDP) nowcasting is crucial for policy-making, as GDP growth is a key indicator of economic conditions. Dynamic factor models (DFMs) have been widely adopted by government agencies for GDP nowcasting due to their interpretability and their ability to handle irregular or missing macroeconomic indicators. However, DFMs face two main challenges: i) difficulty capturing economic uncertainties such as sudden recessions or booms, and ii) a limited ability to capture irregular dynamics from mixed-frequency data. To address these challenges, we introduce NCDENow, a novel GDP nowcasting framework that integrates neural controlled differential equations (NCDEs) with DFMs. This integration effectively handles the dynamics of irregular time series. NCDENow consists of three main modules: i) factor extraction leveraging DFM, ii) dynamic modeling using NCDE, and iii) GDP growth prediction through regression. We evaluate NCDENow against six baselines on two real-world GDP datasets from South Korea and the United Kingdom, demonstrating its enhanced predictive capability. Our empirical results favor our method, highlighting the significant potential of integrating NCDE into nowcasting models. Our code and dataset are available at https://github.com/sklim84/NCDENow_CIKM2024.

Long-term Time Series Forecasting based on Decomposition and Neural Ordinary Differential Equations

Nov 10, 2023

Abstract: Long-term time series forecasting (LTSF) is a challenging task that has been investigated in various domains such as financial investment, health care, traffic, and weather forecasting. In recent years, linear-based LTSF models have shown better performance, highlighting that Transformer-based approaches lose temporal information. However, linear-based approaches also have a limitation: the model is too simple to comprehensively exploit the characteristics of the dataset. To address this limitation, we propose LTSF-DNODE, which applies a model based on linear ordinary differential equations (ODEs) and a time series decomposition method chosen according to the statistical characteristics of the data. We show that LTSF-DNODE outperforms the baselines on various real-world datasets. In addition, for each dataset, we explore the impact of regularization in the neural ordinary differential equation (NODE) framework.

MadSGM: Multivariate Anomaly Detection with Score-based Generative Models

Aug 29, 2023

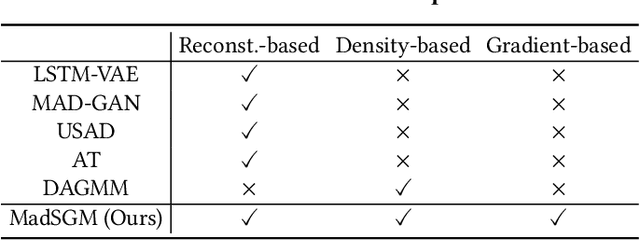

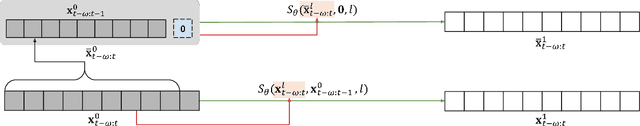

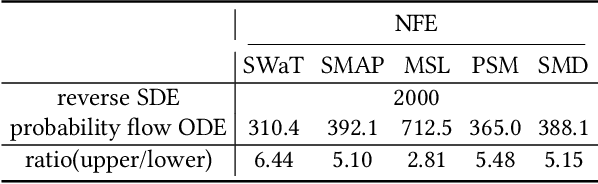

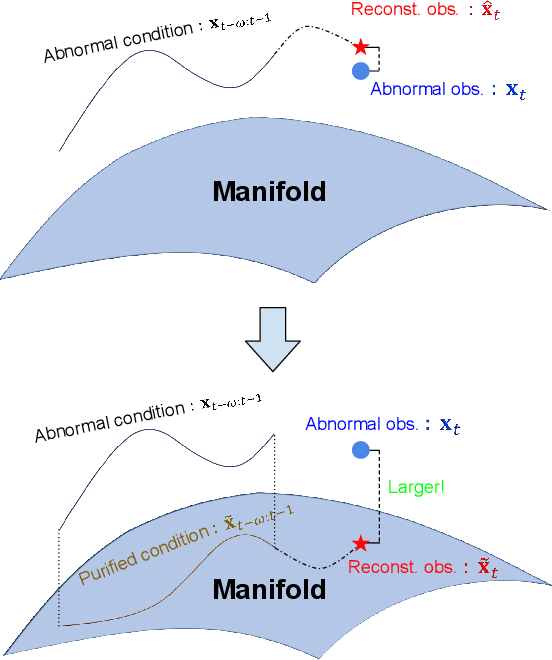

Abstract: Time-series anomaly detection is one of the most fundamental time-series tasks. Unlike time-series forecasting and classification, it typically requires unsupervised (or self-supervised) training, since collecting and labeling anomalous observations is difficult. In addition, most existing methods resort to limited forms of anomaly measurement, so it is not clear whether they are optimal in all circumstances. To this end, we present a multivariate time-series anomaly detector based on score-based generative models, called MadSGM, which considers the broadest set of anomaly measurement factors to date: i) reconstruction-based, ii) density-based, and iii) gradient-based anomaly measurements. We also design a conditional score network and its denoising score matching loss for time-series anomaly detection. Experiments on five real-world benchmark datasets show that MadSGM achieves the most robust and accurate predictions.
