Sean R. Moore

Hierarchical Deep Convolutional Neural Networks for Multi-category Diagnosis of Gastrointestinal Disorders on Histopathological Images

May 08, 2020

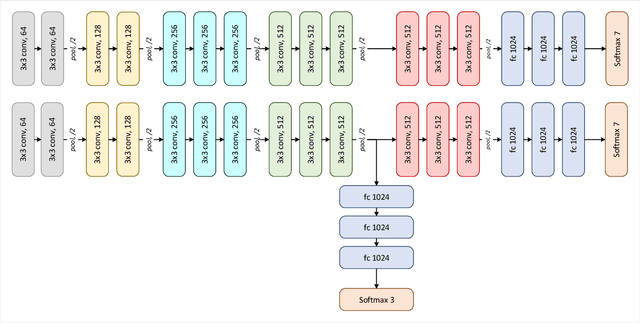

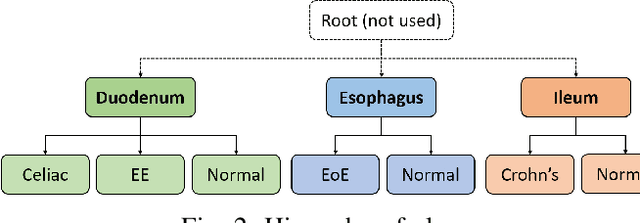

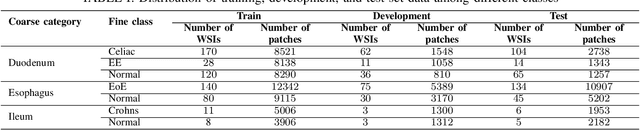

Abstract: Deep convolutional neural networks (CNNs) have been successful for a wide range of computer vision tasks, including image classification. A specific area of application lies in digital pathology for pattern recognition in tissue-based diagnosis of gastrointestinal (GI) diseases. This domain can utilize CNNs to translate histopathological images into precise diagnostics. The task is challenging because these complex biopsies are heterogeneous and require multiple levels of assessment, mainly owing to structural similarities in different parts of the GI tract and shared features among different gut diseases. Addressing this problem with a flat model, which assumes all classes (parts of the gut and their diseases) are equally difficult to distinguish, leads to an inadequate assessment of each class. Because a hierarchical model restricts classification error to each sub-class, it is more informative than a flat model. In this paper we propose to apply hierarchical classification of biopsy images from different parts of the GI tract and the respective diseases within each. We embedded a class hierarchy into the plain VGGNet to take advantage of the hierarchical structure of its layers. The proposed model was evaluated using an independent set of image patches from 373 whole slide images. The results indicate that the hierarchical model can achieve better results than the flat model for multi-category diagnosis of GI disorders using histopathological images.
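To make the contrast between flat and hierarchical classification concrete, the following is a minimal sketch of two-level prediction, in which the probability of a fine label is the product of the probability of its parent region and the conditional probability within that region. It is not the paper's VGGNet-based architecture: the `HIERARCHY` dictionary, its class names, and the function `hierarchical_predict` are illustrative assumptions, and the logits stand in for the outputs of a trained network.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical two-level hierarchy (placeholder names, not taken from the paper):
# coarse level = part of the GI tract, fine level = condition within that part.
HIERARCHY = {
    "duodenum": ["celiac", "enteropathy", "normal"],
    "esophagus": ["esophagitis", "normal"],
}

def hierarchical_predict(coarse_logits, fine_logits_by_region):
    """Combine coarse and fine scores: P(region, label) = P(region) * P(label | region)."""
    regions = list(HIERARCHY)
    p_region = softmax(coarse_logits)
    joint = {}
    for region, p_r in zip(regions, p_region):
        p_fine = softmax(fine_logits_by_region[region])
        for label, p_f in zip(HIERARCHY[region], p_fine):
            joint[(region, label)] = p_r * p_f
    best = max(joint, key=joint.get)
    return best, joint

# Example with made-up logits for a single image patch.
best, joint = hierarchical_predict(
    [2.0, 0.0],
    {"duodenum": [3.0, 0.0, 0.0], "esophagus": [0.0, 0.0]},
)
```

Because each fine-level softmax only covers the classes under one region, a mistake at the fine level cannot move a prediction into a different part of the gut; that is the sense in which the error is restricted to a sub-class.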

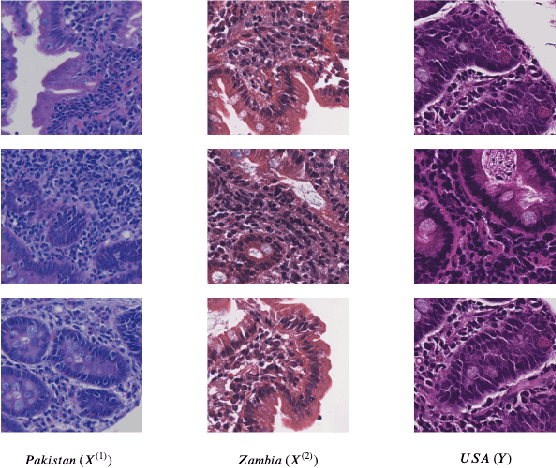

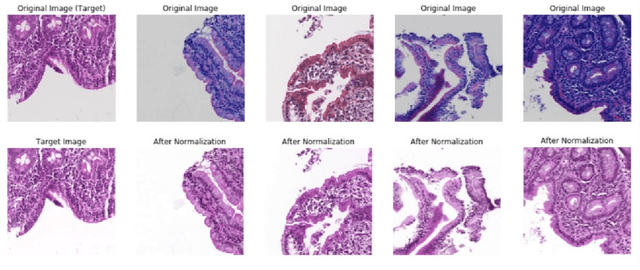

Self-Attentive Adversarial Stain Normalization

Sep 04, 2019

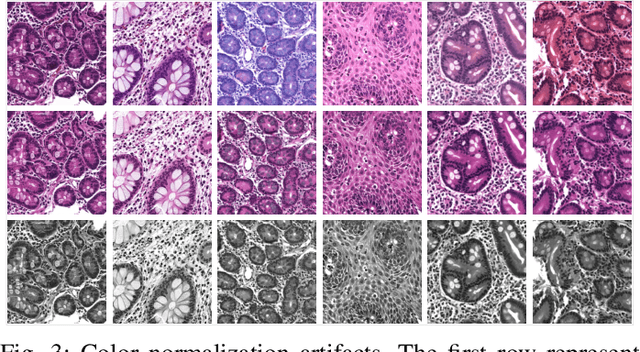

Abstract: Hematoxylin and Eosin (H&E) stained Whole Slide Images (WSIs) are utilized for biopsy visualization-based diagnostic and prognostic assessment of diseases. Variation in the H&E staining process across different lab sites can lead to significant variations in biopsy image appearance. These variations introduce an undesirable bias when the slides are examined by pathologists or used for training deep learning models. To reduce this bias, slides need to be translated to a common domain of stain appearance before analysis. We propose a Self-Attentive Adversarial Stain Normalization (SAASN) approach for the normalization of multiple stain appearances to a common domain. This unsupervised generative adversarial approach includes a self-attention mechanism for synthesizing images with finer detail while preserving the structural consistency of the biopsy features during translation. SAASN demonstrates consistent and superior performance compared to other popular stain normalization techniques on H&E stained duodenal biopsy image data.

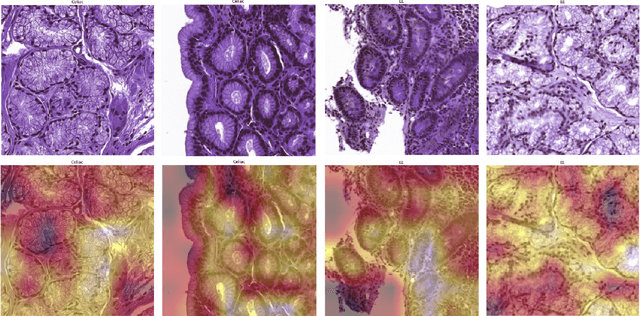

Deep Learning for Visual Recognition of Environmental Enteropathy and Celiac Disease

Aug 08, 2019

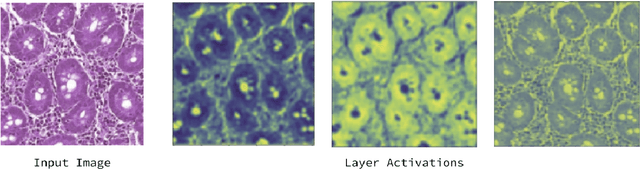

Abstract: Physicians use biopsies to distinguish between different but histologically similar enteropathies. The range of syndromes and pathologies that could cause different gastrointestinal conditions makes this a difficult problem. Recently, deep learning has been used successfully in helping diagnose cancerous tissues in histopathological images. These successes motivated the research presented in this paper, which describes a deep learning approach that distinguishes among Celiac Disease (CD), Environmental Enteropathy (EE), and normal tissue from digitized duodenal biopsies. Experimental results show accuracies of over 90% for this approach. We also interpret the neural network model using Gradient-weighted Class Activation Mappings and filter activations on input images to understand the visual explanations for the decisions made by the model.
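As a rough illustration of the Gradient-weighted Class Activation Mapping step mentioned above, the sketch below computes a heatmap from a set of feature maps and the gradients of a class score with respect to them. It is a minimal version under stated assumptions: the function name `grad_cam` is hypothetical, inputs are plain nested lists rather than tensors from a real network, and the final normalization to the range 0 to 1 is a common convenience for display rather than something stated in the abstract.

```python
def grad_cam(activations, gradients):
    """Compute a Grad-CAM style heatmap.

    activations, gradients: lists of K feature maps from one convolutional layer,
    each an H x W nested list of floats. gradients[k][i][j] is the derivative of
    the class score with respect to activations[k][i][j].
    """
    heatmap = None
    for fmap, grad in zip(activations, gradients):
        # Channel weight: the gradient averaged over all spatial positions.
        count = sum(len(row) for row in grad)
        alpha = sum(sum(row) for row in grad) / count
        if heatmap is None:
            heatmap = [[0.0] * len(row) for row in fmap]
        # Accumulate the weighted feature map.
        for i, row in enumerate(fmap):
            for j, value in enumerate(row):
                heatmap[i][j] += alpha * value
    # ReLU keeps only the regions that support the class.
    heatmap = [[max(0.0, v) for v in row] for row in heatmap]
    # Scale to [0, 1] for display when the map is not all zeros.
    peak = max(max(row) for row in heatmap)
    if peak > 0:
        heatmap = [[v / peak for v in row] for row in heatmap]
    return heatmap

# Toy example: two 2x2 feature maps with made-up gradients.
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
cam = grad_cam(acts, grads)
```

In practice the heatmap is upsampled to the input image size and overlaid on the biopsy patch, so that the highlighted tissue regions can be compared with what a pathologist would look at.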

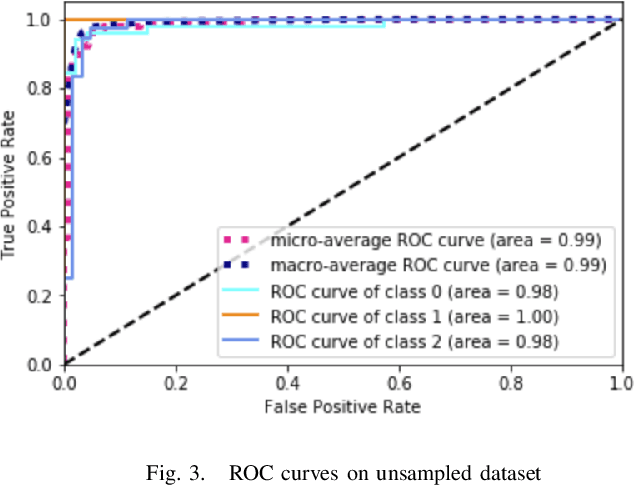

Diagnosis of Celiac Disease and Environmental Enteropathy on Biopsy Images Using Color Balancing on Convolutional Neural Networks

Apr 24, 2019

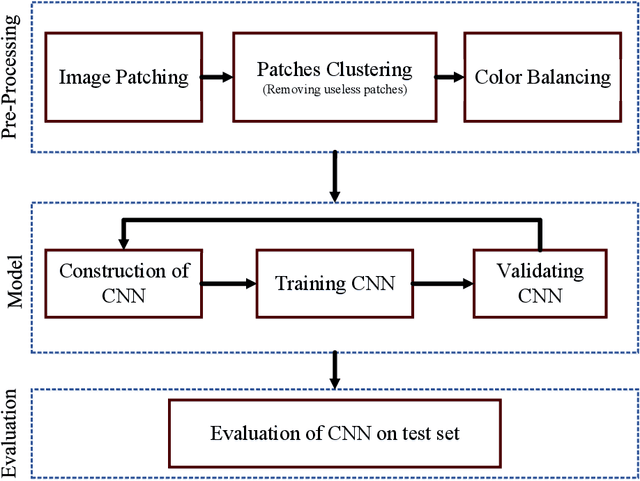

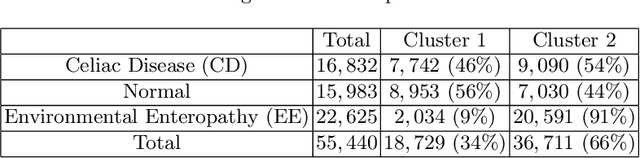

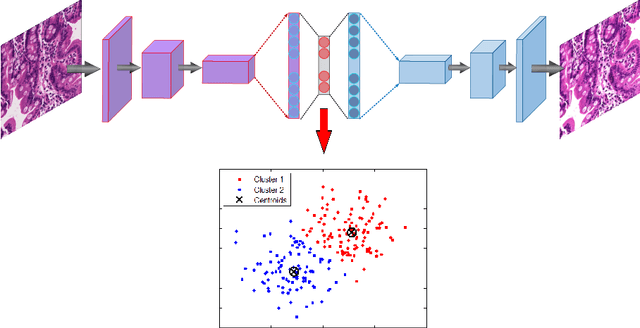

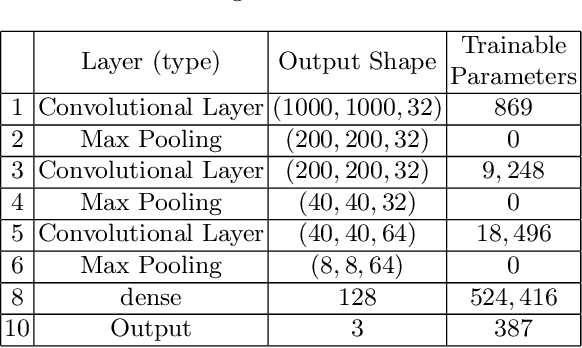

Abstract: Celiac Disease (CD) and Environmental Enteropathy (EE) are common causes of malnutrition and adversely impact normal childhood development. CD is an autoimmune disorder that is prevalent worldwide and is caused by an increased sensitivity to gluten. Gluten exposure damages the small intestinal epithelial barrier, resulting in nutrient malabsorption and childhood undernutrition. EE also results in barrier dysfunction but is thought to be caused by an increased vulnerability to infections. EE has been implicated as the predominant cause of undernutrition, oral vaccine failure, and impaired cognitive development in low- and middle-income countries. Both conditions require a tissue biopsy for diagnosis, and a major challenge in interpreting clinical biopsy images to differentiate between these gastrointestinal diseases is the striking histopathologic overlap between them. In the current study, we propose a convolutional neural network (CNN) to classify duodenal biopsy images from subjects with CD, EE, and healthy controls. We evaluated the performance of our proposed model using a large cohort containing 1000 biopsy images. Our evaluations show that the proposed model achieves an area under the ROC curve of 0.99, 1.00, and 0.97 for CD, EE, and healthy controls, respectively. These results demonstrate the discriminative power of the proposed model in duodenal biopsy classification.
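The per-class areas under the ROC curve reported above are typically obtained one class at a time, treating that class as positive and the other two as negative. The sketch below shows one way to compute such a one-vs-rest AUC, using its equivalence to the probability that a randomly chosen positive image scores higher than a randomly chosen negative one. The function name `auc_one_vs_rest` and the toy scores are assumptions for illustration; the abstract does not say how the authors computed their values.

```python
def auc_one_vs_rest(scores, labels, positive):
    """Area under the ROC curve for one class against all others.

    scores: predicted probability of the positive class for each image.
    labels: true class label for each image.
    positive: the label treated as the positive class.
    """
    pos = [s for s, y in zip(scores, labels) if y == positive]
    neg = [s for s, y in zip(scores, labels) if y != positive]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))

# Made-up scores for the "CD" class on four images.
labels = ["CD", "CD", "EE", "normal"]
cd_scores = [0.9, 0.4, 0.5, 0.1]
cd_auc = auc_one_vs_rest(cd_scores, labels, "CD")
```

The pairwise loop is quadratic in the number of images, which is fine for a cohort of about a thousand; a rank-based implementation would be preferable for much larger evaluation sets.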
