Scot Fang

Two Approaches to Building Collaborative, Task-Oriented Dialog Agents through Self-Play

Sep 20, 2021

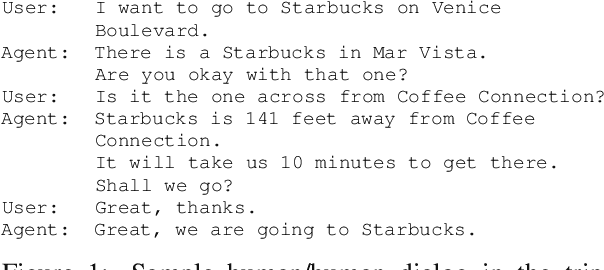

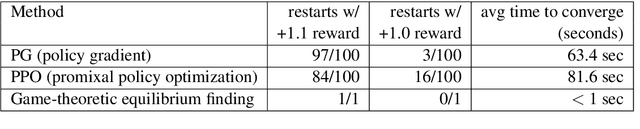

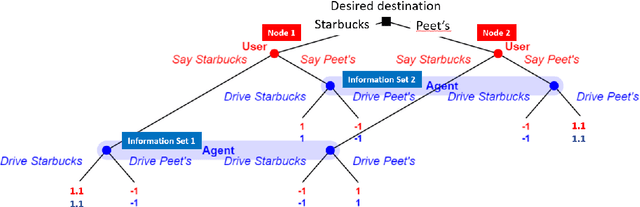

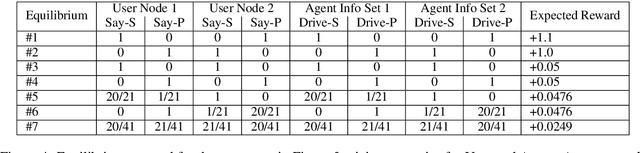

Abstract:Task-oriented dialog systems are often trained on human/human dialogs, such as collected from Wizard-of-Oz interfaces. However, human/human corpora are frequently too small for supervised training to be effective. This paper investigates two approaches to training agent-bots and user-bots through self-play, in which they autonomously explore an API environment, discovering communication strategies that enable them to solve the task. We give empirical results for both reinforcement learning and game-theoretic equilibrium finding.

MeetDot: Videoconferencing with Live Translation Captions

Sep 20, 2021

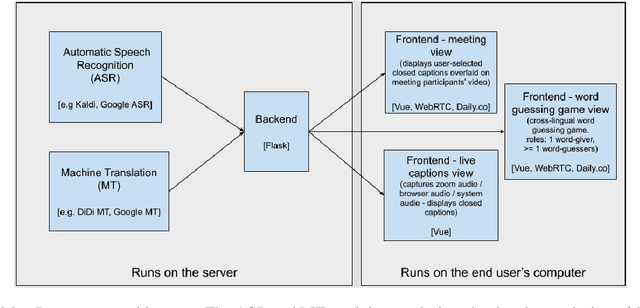

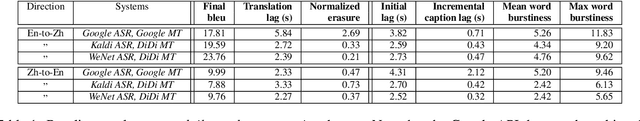

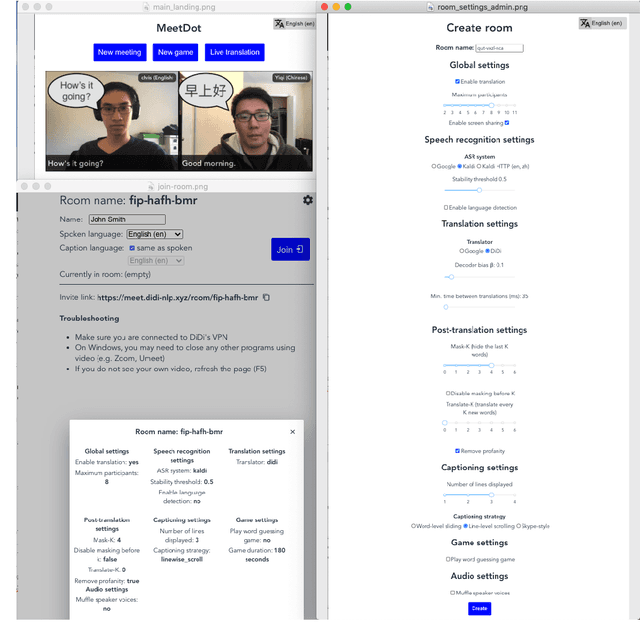

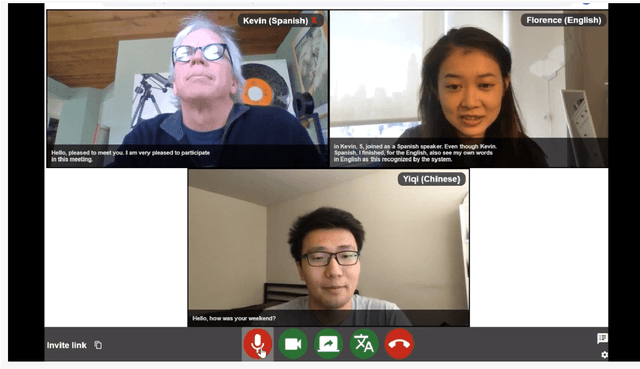

Abstract:We present MeetDot, a videoconferencing system with live translation captions overlaid on screen. The system aims to facilitate conversation between people who speak different languages, thereby reducing communication barriers between multilingual participants. Currently, our system supports speech and captions in 4 languages and combines automatic speech recognition (ASR) and machine translation (MT) in a cascade. We use the re-translation strategy to translate the streamed speech, resulting in caption flicker. Additionally, our system has very strict latency requirements to have acceptable call quality. We implement several features to enhance user experience and reduce their cognitive load, such as smooth scrolling captions and reducing caption flicker. The modular architecture allows us to integrate different ASR and MT services in our backend. Our system provides an integrated evaluation suite to optimize key intrinsic evaluation metrics such as accuracy, latency and erasure. Finally, we present an innovative cross-lingual word-guessing game as an extrinsic evaluation metric to measure end-to-end system performance. We plan to make our system open-source for research purposes.

MEEP: An Open-Source Platform for Human-Human Dialog Collection and End-to-End Agent Training

Oct 09, 2020

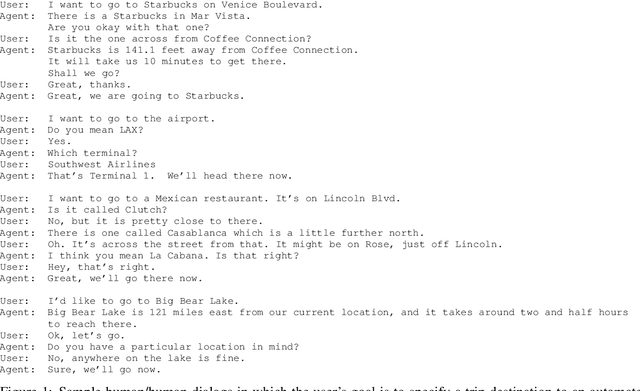

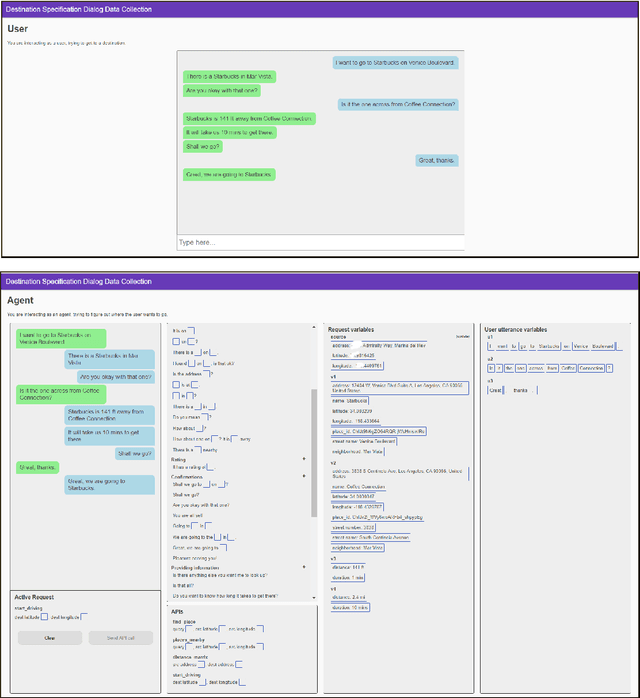

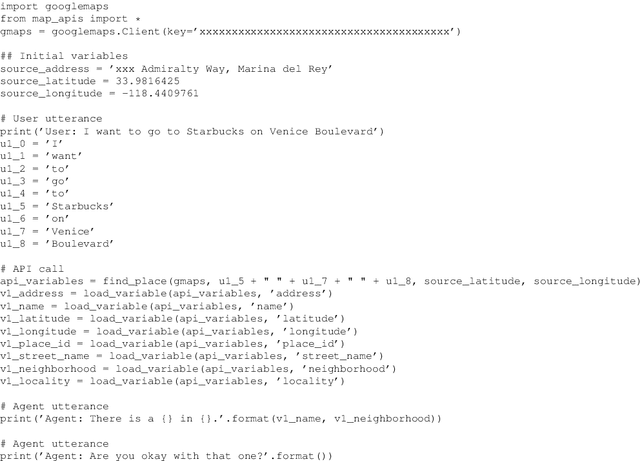

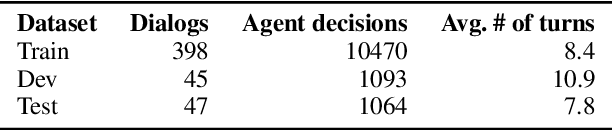

Abstract:We create a new task-oriented dialog platform (MEEP) where agents are given considerable freedom in terms of utterances and API calls, but are constrained to work within a push-button environment. We include facilities for collecting human-human dialog corpora, and for training automatic agents in an end-to-end fashion. We demonstrate MEEP with a dialog assistant that lets users specify trip destinations.

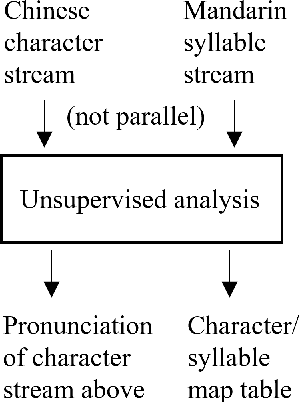

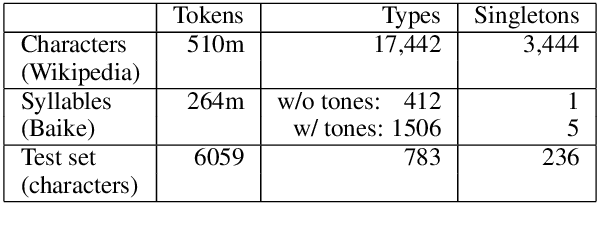

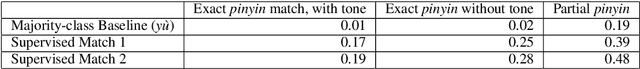

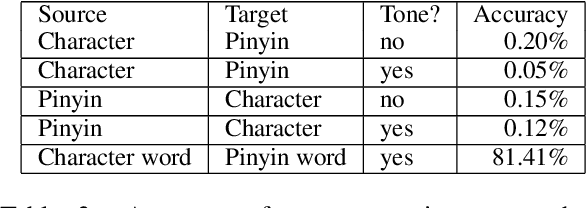

Learning to Pronounce Chinese Without a Pronunciation Dictionary

Oct 09, 2020

Abstract:We demonstrate a program that learns to pronounce Chinese text in Mandarin, without a pronunciation dictionary. From non-parallel streams of Chinese characters and Chinese pinyin syllables, it establishes a many-to-many mapping between characters and pronunciations. Using unsupervised methods, the program effectively deciphers writing into speech. Its token-level character-to-syllable accuracy is 89%, which significantly exceeds the 22% accuracy of prior work.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge