Sang-Woong Lee

Assessing generalisability of deep learning-based polyp detection and segmentation methods through a computer vision challenge

Feb 24, 2022

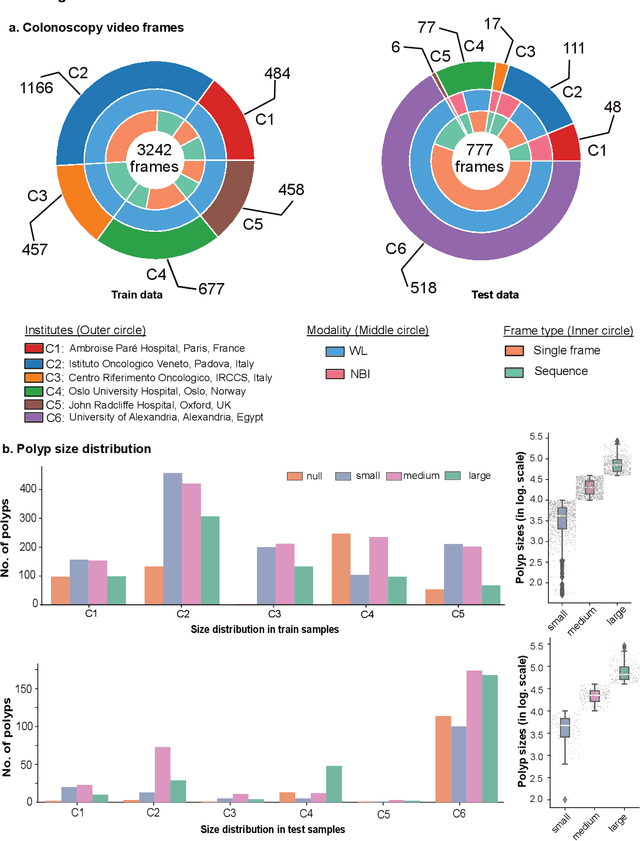

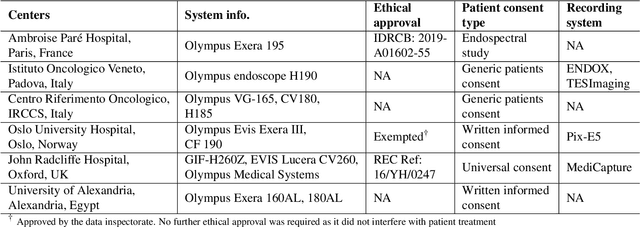

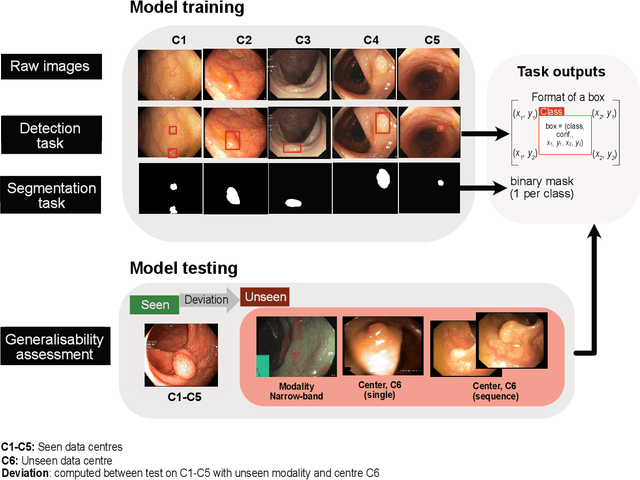

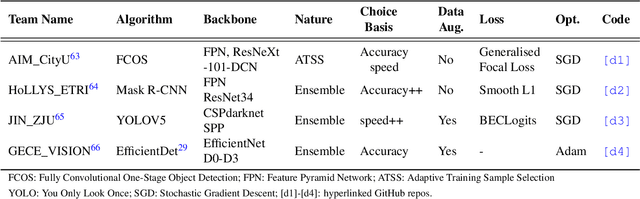

Abstract: Polyps are well-known cancer precursors identified by colonoscopy. However, variability in their size, location, and surface largely affects identification, localisation, and characterisation. Moreover, colonoscopic surveillance and removal of polyps (referred to as polypectomy) are highly operator-dependent procedures. Missed-detection rates are high and removal of colonic polyps is often incomplete, owing to their variable nature, the difficulty of delineating the abnormality, the high recurrence rates, and the anatomical topography of the colon. There have been several developments in realising automated methods for both detection and segmentation of these polyps using machine learning. However, the major drawback of most of these methods is their limited ability to generalise to out-of-sample unseen datasets that come from different centres, modalities and acquisition systems. To test this hypothesis rigorously, we curated a multi-centre and multi-population dataset acquired from multiple colonoscopy systems and challenged teams comprising machine learning experts to develop robust automated detection and segmentation methods as part of our crowd-sourcing Endoscopic computer vision challenge (EndoCV) 2021. In this paper, we analyse the detection results of the four top teams (among seven) and the segmentation results of the five top teams (among 16). Our analyses demonstrate that the top-ranking teams concentrated on accuracy (i.e., accuracy > 80% in terms of overall Dice score on different validation sets) over the real-time performance required for clinical applicability. We further dissect the methods and provide an experiment-based hypothesis that reveals the need for improved generalisability to tackle the diversity present in multi-centre datasets.
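The abstract reports segmentation accuracy as a Dice score without defining it. As a rough illustration only, the standard Dice coefficient between a predicted and a reference binary mask is 2|A ∩ B| / (|A| + |B|). The sketch below assumes masks given as nested lists of 0/1 values; the function name `dice_score` and the convention of returning 1.0 when both masks are empty are assumptions made here, not details taken from the challenge's evaluation code.

```python
def dice_score(pred, target):
    """Dice coefficient 2|A∩B| / (|A| + |B|) for two binary masks.

    Masks are nested lists of 0/1 values with the same shape
    (an assumption of this sketch, not of the paper).
    """
    flat_pred = [p for row in pred for p in row]
    flat_target = [t for row in target for t in row]

    # Pixels marked as polyp in both masks.
    intersection = sum(1 for p, t in zip(flat_pred, flat_target) if p and t)
    # Total polyp pixels across the two masks.
    total = sum(1 for p in flat_pred if p) + sum(1 for t in flat_target if t)

    if total == 0:
        # Both masks empty: treated as perfect agreement (assumed convention).
        return 1.0
    return 2.0 * intersection / total


if __name__ == "__main__":
    predicted = [[1, 1], [0, 0]]
    reference = [[1, 0], [0, 0]]
    print(dice_score(predicted, reference))
```

Under this definition, a Dice score above 0.8 means the overlap between prediction and reference covers more than 80% of the average mask size.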

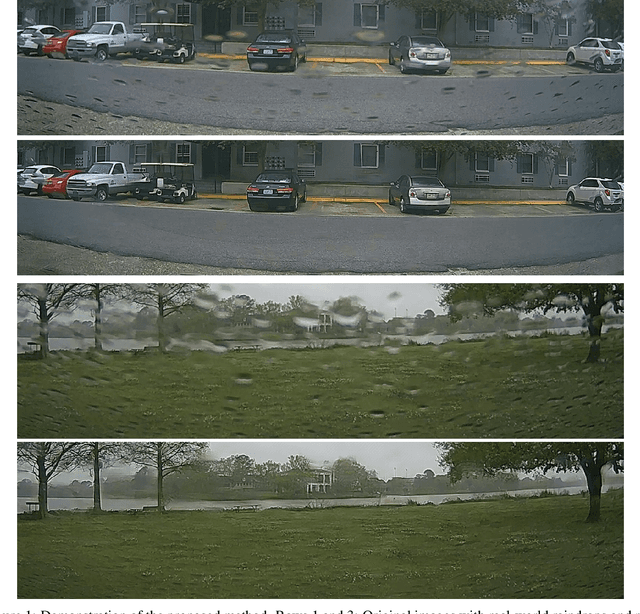

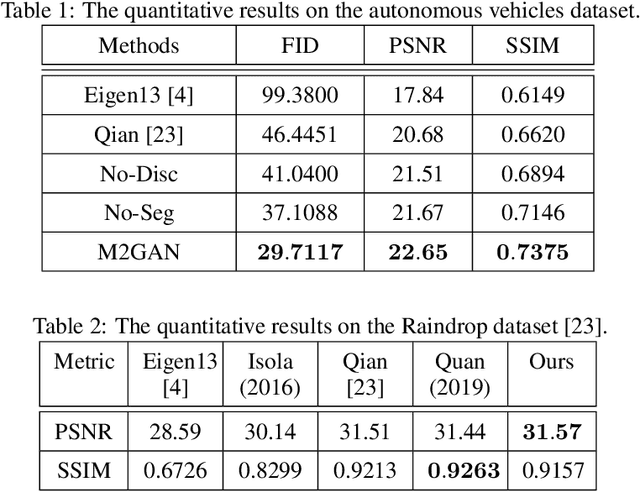

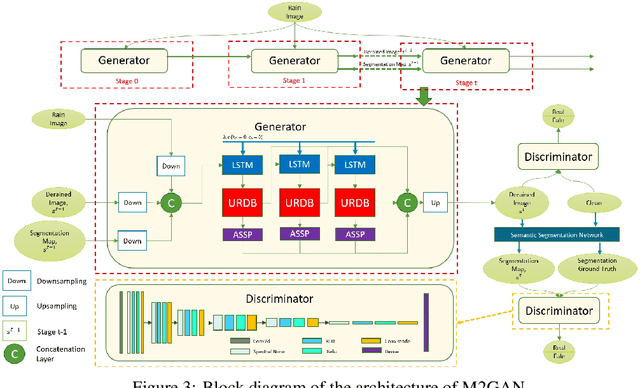

M2GAN: A Multi-Stage Self-Attention Network for Image Rain Removal on Autonomous Vehicles

Oct 12, 2021

Abstract: Image deraining is a new and challenging problem in applications of autonomous vehicles. In bad weather with heavy rainfall, raindrops, mainly hitting the vehicle's windshield, can significantly reduce visibility even though the windshield wipers might be able to remove some of them. Moreover, rain flows spreading over the windshield can cause refraction, which seriously impedes the sightline or undermines the machine learning system installed in the vehicle. In this paper, we propose a new multi-stage multi-task recurrent generative adversarial network (M2GAN) to deal with the challenging problem of raindrops hitting the car's windshield. This method is also applicable to removing raindrops appearing on a glass window or lens. M2GAN is a multi-stage multi-task generative adversarial network that can utilize prior high-level information, such as semantic segmentation, to boost deraining performance. To demonstrate M2GAN, we introduce the first real-world dataset for rain removal on autonomous vehicles. The experimental results show that our proposed method is superior to other state-of-the-art raindrop-removal approaches with respect to quantitative metrics and visual quality. M2GAN is considered the first method to deal with the challenging problem of real-world rain in unconstrained environments such as autonomous vehicles.

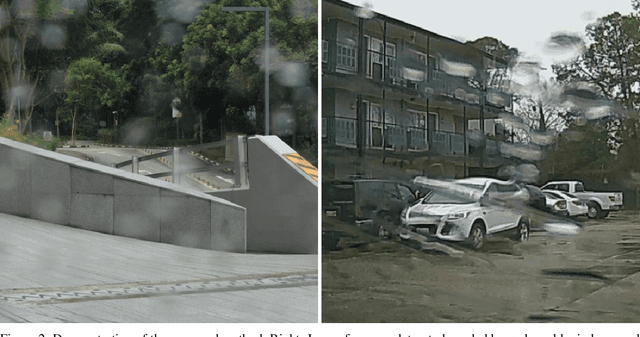

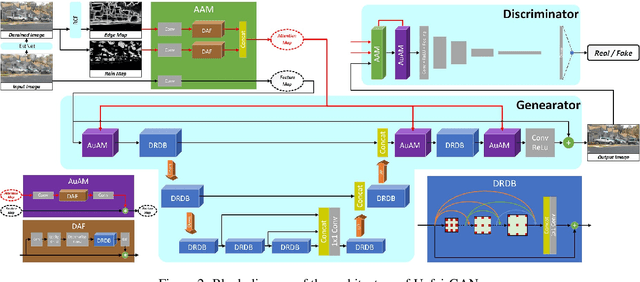

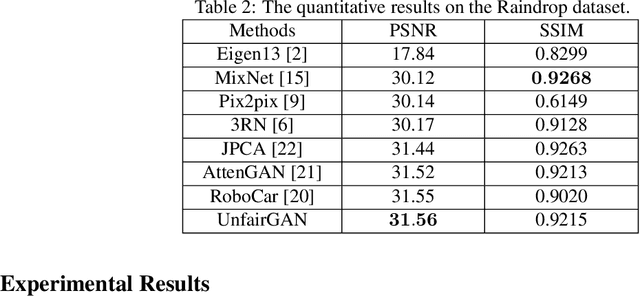

UnfairGAN: An Enhanced Generative Adversarial Network for Raindrop Removal from A Single Image

Oct 11, 2021

Abstract: Image deraining is a new and challenging problem in real-world applications, such as autonomous vehicles. In bad weather with heavy rainfall, raindrops, mainly hitting glass surfaces or windshields, can significantly reduce visibility. Moreover, raindrops spreading over the glass can cause refraction, which seriously impedes the sightline or undermines machine learning systems. In this paper, we propose an enhanced generative adversarial network to deal with the challenging problem of raindrops. UnfairGAN is an enhanced generative adversarial network that can utilize prior high-level information, such as edges and rain estimation, to boost deraining performance. To demonstrate UnfairGAN, we introduce a large dataset for training deep learning models for rain removal. The experimental results show that our proposed method is superior to other state-of-the-art raindrop-removal approaches with respect to quantitative metrics and visual quality.
