Sandor M. Veres

A stochastically verifiable autonomous control architecture with reasoning

Nov 10, 2016

Abstract: A new agent architecture called the Limited Instruction Set Agent (LISA) is introduced for autonomous control. The new architecture is based on previous implementations of AgentSpeak and is structurally simpler than its predecessors, with the aim of facilitating design-time and run-time verification methods. The process of abstracting the LISA system to two different types of discrete probabilistic models, discrete-time Markov chains (DTMC) and Markov decision processes (MDP), is investigated and illustrated. The LISA system provides a tool for complete modelling of the agent and the environment for probabilistic verification. The agent program can be automatically compiled into a DTMC or an MDP model for verification with PRISM. The automatically generated PRISM model can be used for both design-time and run-time verification. Run-time verification is investigated and illustrated in the LISA system as an internal modelling mechanism for predicting future outcomes.
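To make the DTMC verification idea in the abstract above concrete, here is a minimal sketch of the kind of reachability query a probabilistic model checker answers (in PRISM, roughly a property of the form `P=? [ F "done" ]`). The four-state chain, its transition probabilities, and the function name `reach_probability` are illustrative assumptions, not taken from the LISA paper, and plain value iteration stands in for whatever engine the tool actually uses.

```python
def reach_probability(P, goal, iters=1000):
    """Approximate, for every state of a DTMC with transition matrix P,
    the probability of eventually reaching a state in `goal`
    (simple value iteration; illustrative only)."""
    n = len(P)
    x = [1.0 if s in goal else 0.0 for s in range(n)]
    for _ in range(iters):
        x = [1.0 if s in goal else sum(P[s][t] * x[t] for t in range(n))
             for s in range(n)]
    return x


# Hypothetical abstraction of an agent (all numbers are made up):
# 0 = sensing, 1 = acting, 2 = done (absorbing), 3 = failed (absorbing)
P = [
    [0.0, 0.8, 0.0, 0.2],
    [0.1, 0.0, 0.9, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

probs = reach_probability(P, goal={2})
print(round(probs[0], 4))  # 0.7826, i.e. 0.72 / 0.92
```

The printed value can be checked by hand: with x0 = 0.8·x1 and x1 = 0.9 + 0.1·x0, solving gives x0 = 0.72 / 0.92.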

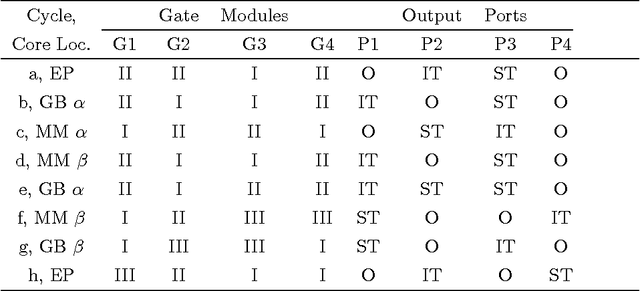

Testing, Verification and Improvements of Timeliness in ROS processes

Nov 10, 2016

Abstract: This paper addresses the problem of improving the response times of robots implemented in the Robot Operating System (ROS) using formal verification of computational-time feasibility. In order to verify the real-time behaviour of a robot under uncertain signal processing times, methods for the formal verification of timeliness properties are proposed for data flows in a ROS-based control system using Probabilistic Timed Programs (PTPs). To calculate the probability of success under given time limits, and to demonstrate the strength of our approach, a case study verifies the operational times of a robotic agent using the PRISM model checker and points to possible enhancements to the operation of the robotic agent.

* 13 pages, 4 figures

Verification of Logical Consistency in Robotic Reasoning

Nov 10, 2016

Abstract: Most autonomous robotic agents use logic inference to keep themselves to safe and permitted behaviour. Given a set of rules, it is important that the robot is able to establish the consistency between its rules, its perception-based beliefs, its planned actions and their consequences. This paper investigates how a robotic agent can use model checking to examine the consistency of its rules, beliefs and actions. A rule set is modelled by a Boolean evolution system with synchronous semantics, which can be translated into a labelled transition system (LTS). It is proven that stability and consistency can be formulated as computation tree logic (CTL) and linear temporal logic (LTL) properties. Two new algorithms are presented to perform real-time consistency and stability checks respectively. Their implementation provides a computational tool that can form the basis of efficient consistency checks on board robots.
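The notions of stability and consistency in the abstract above can be illustrated with a small explicit-state sketch. It assumes a simplified reading of a Boolean evolution system: every rule whose guard holds in the current state fires at the same time (synchronous semantics), the system is "inconsistent" if two firing rules assign different values to the same variable, "stable" if it reaches a fixed point, and "unstable" if it revisits an earlier state without settling. The rule encoding and the names `step` and `check` are hypothetical; this is neither the paper's CTL/LTL formulation nor its two algorithms.

```python
def step(state, rules):
    """Apply all enabled rules simultaneously; raise on conflicting assignments."""
    updates = {}
    for guard, assigns in rules:
        if guard(state):
            for var, val in assigns.items():
                if var in updates and updates[var] != val:
                    raise ValueError(f"conflicting assignment to {var}")
                updates[var] = val
    new_state = dict(state)
    new_state.update(updates)
    return new_state


def check(state, rules):
    """Return 'stable', 'unstable' or 'inconsistent' for the run from `state`."""
    seen = []
    while True:
        try:
            nxt = step(state, rules)
        except ValueError:
            return "inconsistent"
        if nxt == state:      # fixed point: no rule changes anything
            return "stable"
        if nxt in seen:       # an earlier state recurs: the run cycles
            return "unstable"
        seen.append(state)
        state = nxt


# Hypothetical rule sets, each rule being (guard, assignments).
safe_rules = [
    (lambda s: s["obstacle"], {"stop": True}),
    (lambda s: s["stop"], {"moving": False}),
]
conflict_rules = [
    (lambda s: s["obstacle"], {"moving": False}),
    (lambda s: s["goal_visible"], {"moving": True}),
]
toggle_rules = [
    (lambda s: s["a"], {"a": False}),
    (lambda s: not s["a"], {"a": True}),
]

print(check({"obstacle": True, "stop": False, "moving": True}, safe_rules))
print(check({"obstacle": True, "goal_visible": True, "moving": True}, conflict_rules))
print(check({"a": True}, toggle_rules))
```

Enumerating states this way only scales to tiny rule sets; it is meant to show what the properties mean, not how to check them efficiently.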

Dynamical Behavior Investigation and Analysis of Novel Mechanism for Simulated Spherical Robot named "RollRoller"

Oct 19, 2016

Abstract: This paper presents a simulation study of a fluid-actuated, multi-driven closed system in the form of a spherical mobile robot called "RollRoller". The robot's mechanism consists of two essential parts: tubes that guide a core, and mechanical control parts that coordinate its movements. The robot obtains its motive force by displacing a spherical movable mass, known as the core, along curved paths inside the pipes. The study first explains the mechanical and structural features of the robot that create hydraulic-based actuation, using force and momentum analysis. Next, we categorize difficult and integrated 2D motions in order to avoid unstable equilibrium points, using the derived nonlinear dynamics. We propose an algorithmic position control in the forward direction that yields a hybrid model as a solution to the motion planning problem for the spherical robot. By deriving the nonlinear dynamics of the spherical robot and implementing the designed motion planning, we show how RollRoller can be efficient in high-speed movements in comparison with other pendulum-driven models. We then validate the position control results obtained from the nonlinear dynamics using an Adams/View simulation that uses the imported solid model of RollRoller. Lastly, we examine the circular maneuver of the robot in the same simulator.

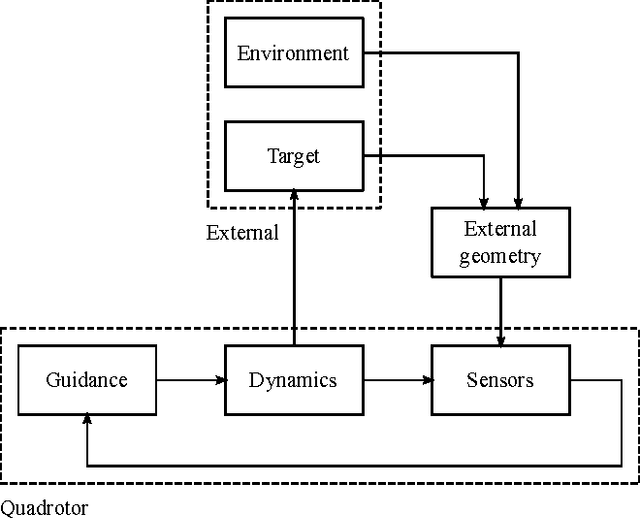

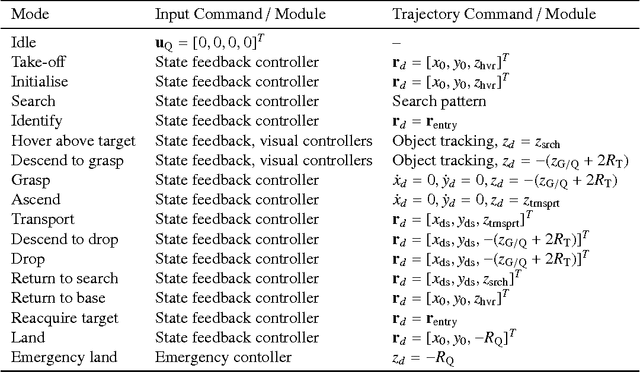

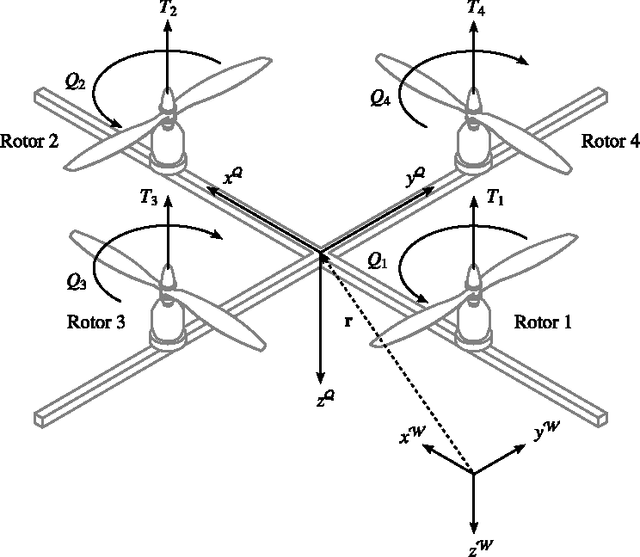

A Continuous-Time Model of an Autonomous Aerial Vehicle to Inform and Validate Formal Verification Methods

Sep 01, 2016

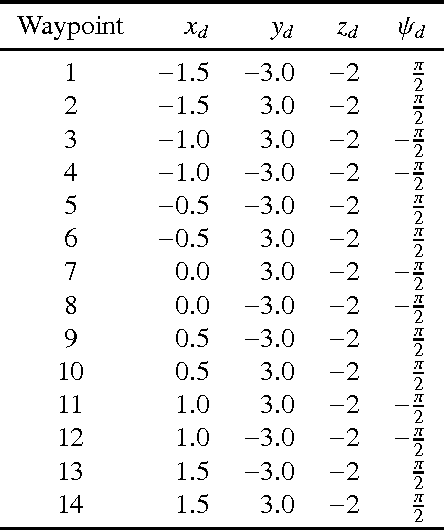

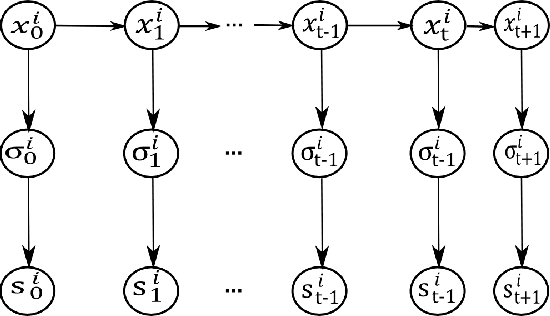

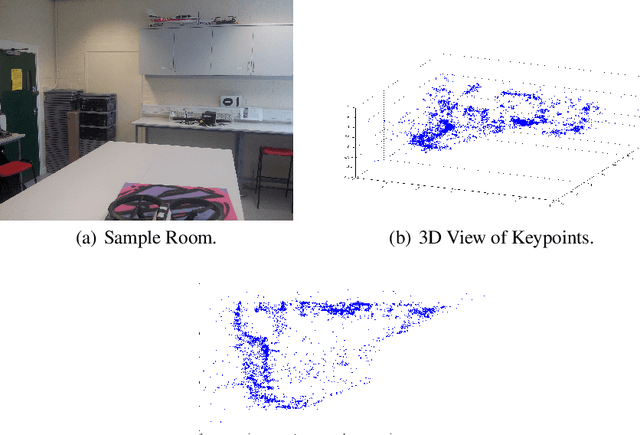

Abstract: If autonomous vehicles are to be widely accepted, we need to ensure their safe operation. For this reason, verification and validation (V&V) approaches must be developed that are suitable for this domain. Model checking is a formal technique which allows us to exhaustively explore the paths of an abstract model of a system. Using a probabilistic model checker such as PRISM, we may determine properties such as the expected time for a mission, or the probability that a specific mission failure occurs. However, model checking of complex systems is difficult due to the loss of information during abstraction. This is especially so when considering systems such as autonomous vehicles, which are subject to external influences. An alternative solution is the use of Monte Carlo simulation to explore the results of a continuous-time model of the system. The main disadvantage of this approach is that it is not exhaustive, since not all executions of the system are analysed. We are therefore interested in developing a framework for formal verification of autonomous vehicles, using Monte Carlo simulation to inform and validate our symbolic models during the initial stages of development. In this paper, we present a continuous-time model of a quadrotor unmanned aircraft undertaking an autonomous mission. We employ this model in Monte Carlo simulation to obtain specific mission properties which will inform the symbolic models employed in formal verification.

Collision Avoidance of Two Autonomous Quadcopters

Mar 17, 2016

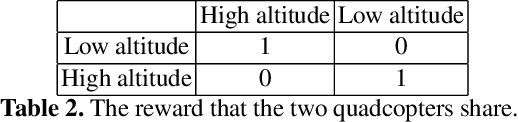

Abstract: Traffic collision avoidance systems (TCAS) are used to avoid mid-air collisions between aircraft. We present a game-theoretic approach to a TCAS designed for autonomous unmanned aerial vehicles (UAVs). A variant of fictitious play, the canonical example of game-theoretic learning, is used as a coordination mechanism between the UAVs, which must choose between alternative altitudes at which to fly in order to avoid collision. We present implementation results for the proposed coordination mechanism on two quadcopters flying in opposite directions.
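As a rough illustration of the coordination mechanism described in the abstract above, the sketch below runs textbook fictitious play on a two-altitude anti-coordination game: each UAV counts the altitudes it has seen the other choose and picks the altitude the other has used least often. The payoff structure (colliding if and only if both pick the same altitude), the prior counts, the tie-breaking rule, and the function names are all assumptions made for this example; the paper uses its own variant, which is not reproduced here.

```python
def best_response(opponent_counts):
    """Pick the altitude the opponent has chosen least often so far.
    Ties go to the lowest index, an arbitrary choice for this sketch."""
    return min(range(len(opponent_counts)), key=lambda a: opponent_counts[a])


def fictitious_play(belief_a, belief_b, rounds):
    """belief_a[k] counts how often UAV A believes B chose altitude k,
    and belief_b[k] does the same for B's view of A."""
    belief_a, belief_b = list(belief_a), list(belief_b)
    history = []
    for _ in range(rounds):
        a = best_response(belief_a)
        b = best_response(belief_b)
        history.append((a, b))
        belief_a[b] += 1  # A observes B's choice
        belief_b[a] += 1  # B observes A's choice
    return history


# Hypothetical prior counts over altitudes (0 = low, 1 = high).
history = fictitious_play(belief_a=[1, 2], belief_b=[1, 3], rounds=6)
print(history)
# [(0, 0), (0, 0), (1, 0), (1, 0), (1, 0), (1, 0)]
```

With these priors the two UAVs conflict for two rounds and then settle on different altitudes. Note that identical priors combined with identical tie-breaking would leave plain fictitious play choosing the same altitude as each other forever, which is one reason a practical scheme needs some asymmetry or a modified update rule.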

Formal Verification of Autonomous Vehicle Platooning

Feb 04, 2016

Abstract: The coordination of multiple autonomous vehicles into convoys or platoons is expected on our highways in the near future. However, before such platoons can be deployed, the new autonomous behaviors of the vehicles in these platoons must be certified. An appropriate representation for vehicle platooning is as a multi-agent system in which each agent captures the "autonomous decisions" carried out by each vehicle. In order to ensure that these autonomous decision-making agents in vehicle platoons never violate safety requirements, we use formal verification. However, as the formal verification technique used to verify the agent code does not scale to the full system and as the global verification technique does not capture the essential verification of autonomous behavior, we use a combination of the two approaches. This mixed strategy allows us to verify safety requirements not only of a model of the system, but of the actual agent code used to program the autonomous vehicles.

Agent Based Approaches to Engineering Autonomous Space Software

Mar 02, 2010

Abstract: Current approaches to the engineering of space software such as satellite control systems are based around the development of feedback controllers using packages such as MATLAB's Simulink toolbox. These provide powerful tools for engineering real-time systems that adapt to changes in the environment but are limited when the controller itself needs to be adapted. We are investigating ways in which ideas from temporal logics and agent programming can be integrated with the use of such control systems to provide a more powerful layer of autonomous decision making. This paper will discuss our initial approaches to the engineering of such systems.

* 3 pages, 1 figure, Formal Methods in Aerospace
