Sandeep Gogineni

Approximate MLE of High-Dimensional STAP Covariance Matrices with Banded & Spiked Structure -- A Convex Relaxation Approach

May 12, 2025

Abstract:Estimating the clutter-plus-noise covariance matrix in high-dimensional STAP is challenging in the presence of Internal Clutter Motion (ICM) and a high noise floor. The problem becomes more difficult in low-sample regimes, where the Sample Covariance Matrix (SCM) becomes ill-conditioned. To capture the ICM and high noise floor, we model the covariance matrix using a "Banded+Spiked" structure. Since the Maximum Likelihood Estimation (MLE) for this model is non-convex, we propose a convex relaxation which is formulated as a Frobenius norm minimization with non-smooth convex constraints enforcing banded sparsity. This relaxation serves as a provable upper bound for the non-convex likelihood maximization and extends to cases where the covariance matrix dimension exceeds the number of samples. We derive a variational inequality-based bound to assess its quality. We introduce a novel algorithm to jointly estimate the banded clutter covariance and noise power. Additionally, we establish conditions ensuring the estimated covariance matrix remains positive definite and the bandsize is accurately recovered. Numerical results using the high-fidelity RFView radar simulation environment demonstrate that our algorithm achieves a higher Signal-to-Clutter-plus-Noise Ratio (SCNR) than state-of-the-art methods, including TABASCO, Spiked Covariance Stein Shrinkage, and Diagonal Loading, particularly when the covariance matrix dimension exceeds the number of samples.
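The low-sample problem described in this abstract can be illustrated with a small NumPy sketch. It is not the paper's convex-relaxation algorithm: it only builds a toy banded-plus-noise covariance, forms the SCM from fewer snapshots than dimensions, and applies Diagonal Loading, one of the baselines the abstract names. The function names, the triangular taper used for the banded part, and all parameter values are illustrative assumptions.

```python
import numpy as np


def banded_plus_spiked(dim, bandwidth, noise_power):
    """Toy 'banded + spiked' covariance: banded clutter part plus noise_power * I.

    The triangular taper is an illustrative assumption; it keeps the banded
    part positive semidefinite, so the sum is positive definite.
    """
    idx = np.arange(dim)
    lags = np.abs(idx[:, None] - idx[None, :])
    clutter = np.where(lags <= bandwidth, 1.0 - lags / (bandwidth + 1.0), 0.0)
    return clutter + noise_power * np.eye(dim)


def sample_covariance(true_cov, num_samples, rng):
    """SCM from zero-mean circular complex Gaussian snapshots."""
    dim = true_cov.shape[0]
    chol = np.linalg.cholesky(true_cov)
    white = (rng.standard_normal((dim, num_samples))
             + 1j * rng.standard_normal((dim, num_samples))) / np.sqrt(2.0)
    snapshots = chol @ white
    return snapshots @ snapshots.conj().T / num_samples


def diagonal_loading(scm, loading):
    """Baseline regularizer: add loading * I to the SCM."""
    return scm + loading * np.eye(scm.shape[0])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cov = banded_plus_spiked(dim=16, bandwidth=3, noise_power=0.5)
    scm = sample_covariance(cov, num_samples=8, rng=rng)  # fewer samples than dim
    loaded = diagonal_loading(scm, loading=0.1)
    print("SCM rank:", np.linalg.matrix_rank(scm, tol=1e-8))
    print("min eigenvalue after loading:", np.linalg.eigvalsh(loaded).min())
```

With 8 snapshots in 16 dimensions the SCM has rank at most 8 and cannot be inverted; loading restores invertibility but ignores the banded structure that the paper's estimator is designed to exploit.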

A Digital Engineering Approach to Testing Modern AI and Complex Systems

Nov 26, 2024

Abstract:Modern AI (i.e., Deep Learning and its variants) is here to stay. However, its enigmatic black box nature presents a fundamental challenge to the traditional methods of test and validation (T&E). Or does it? In this paper we introduce a Digital Engineering (DE) approach to T&E (DE-T&E), combined with generative AI, that can achieve requisite mil spec statistical validation as well as uncover potential deleterious Black Swan events that might otherwise not be uncovered until it is too late. An illustration of these concepts is presented for an advanced modern radar example employing deep learning AI.

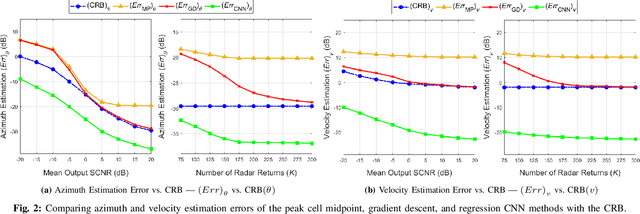

Data-Driven Target Localization: Benchmarking Gradient Descent Using the Cramér-Rao Bound

Jan 20, 2024

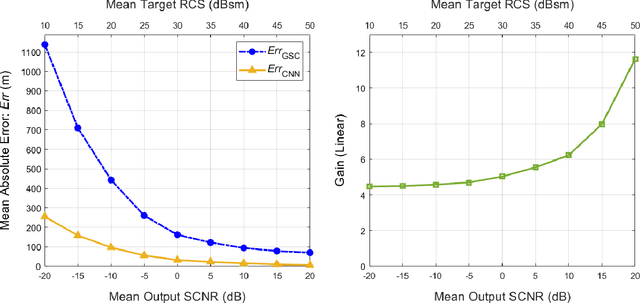

Abstract:In modern radar systems, precise target localization using azimuth and velocity estimation is paramount. Traditional unbiased estimation methods have leveraged gradient descent algorithms to reach the theoretical limits of the Cramér-Rao Bound (CRB) for the error of the parameter estimates. In this study, we present a data-driven neural network approach that outperforms these traditional techniques, demonstrating improved accuracies in target azimuth and velocity estimation. Using a representative simulated scenario, we show that our proposed neural network model consistently achieves improved parameter estimates due to its inherently biased nature, yielding a diminished mean squared error (MSE). Our findings underscore the potential of employing deep learning methods in radar systems, paving the way for more accurate localization in cluttered and dynamic environments.
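Why a biased estimator can reach a lower MSE than the CRB allows for unbiased ones follows from the standard bias-variance decomposition. The scalar Gaussian example below is a textbook illustration, not the paper's model; the symbols $a$, $\bar{x}$, $\sigma^2$, and $N$ are assumptions introduced here.

$$
\mathrm{MSE}(\hat{\theta}) = \mathrm{Var}(\hat{\theta}) + \mathrm{bias}(\hat{\theta})^2, \qquad \mathrm{Var}(\hat{\theta}) \ge \mathrm{CRB}(\theta) \ \text{ only when } \hat{\theta} \text{ is unbiased}.
$$

For $x_1, \dots, x_N \sim \mathcal{N}(\theta, \sigma^2)$, the sample mean $\bar{x}$ is unbiased and attains $\mathrm{CRB} = \sigma^2 / N$. The shrunken estimator $a\bar{x}$ with $0 < a < 1$ is biased and has

$$
\mathrm{MSE}(a\bar{x}) = a^2 \frac{\sigma^2}{N} + (1 - a)^2 \theta^2, \qquad a^\star = \frac{\theta^2}{\theta^2 + \sigma^2 / N} \;\Rightarrow\; \mathrm{MSE}(a^\star \bar{x}) = \frac{\sigma^2}{N} \cdot \frac{\theta^2}{\theta^2 + \sigma^2 / N} < \frac{\sigma^2}{N}.
$$

The optimal $a^\star$ depends on the unknown $\theta$, so this is an oracle bound rather than a practical estimator; it only shows that accepting bias can lower the MSE below the unbiased CRB.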

Subspace Perturbation Analysis for Data-Driven Radar Target Localization

Mar 21, 2023

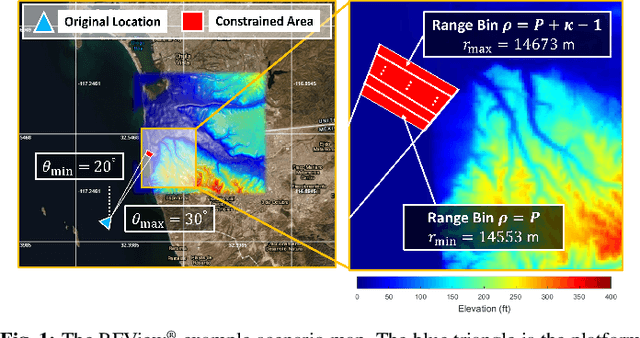

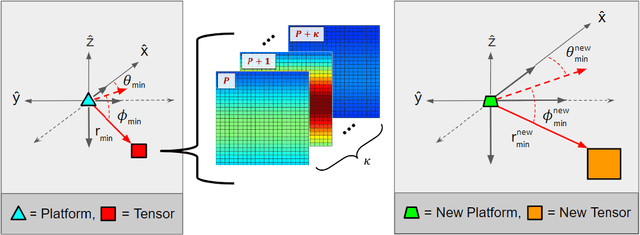

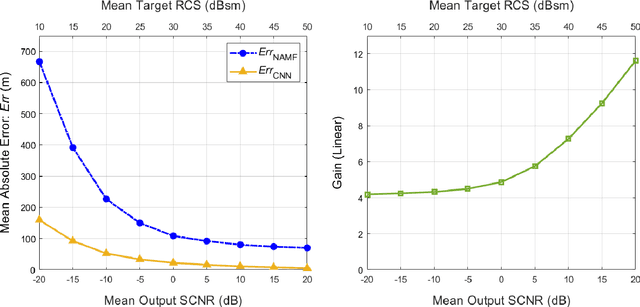

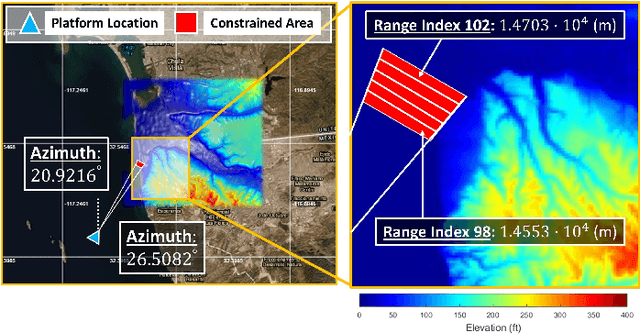

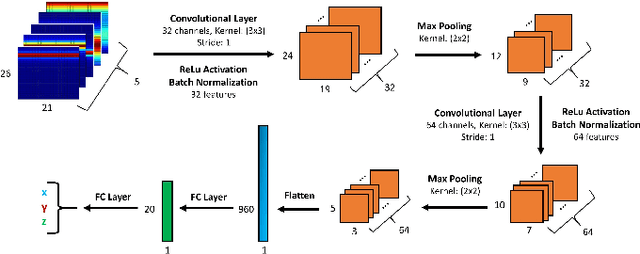

Abstract:Recent works exploring data-driven approaches to classical problems in adaptive radar have demonstrated promising results pertaining to the task of radar target localization. Via the use of space-time adaptive processing (STAP) techniques and convolutional neural networks, these data-driven approaches to target localization have helped benchmark the performance of neural networks for matched scenarios. However, the thorough bridging of these topics across mismatched scenarios still remains an open problem. As such, in this work, we augment our data-driven approach to radar target localization by performing a subspace perturbation analysis, which allows us to benchmark the localization accuracy of our proposed deep learning framework across mismatched scenarios. To evaluate this framework, we generate comprehensive datasets by randomly placing targets of variable strengths in mismatched constrained areas via RFView, a high-fidelity, site-specific modeling and simulation tool. For the radar returns from these constrained areas, we generate heatmap tensors in range, azimuth, and elevation using the normalized adaptive matched filter (NAMF) test statistic. We estimate target locations from these heatmap tensors using a convolutional neural network, and demonstrate that the predictive performance of our framework in the presence of mismatches can be predetermined.
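The NAMF test statistic used to build these heatmaps has a standard closed form, $|s^H R^{-1} x|^2 / \big((s^H R^{-1} s)(x^H R^{-1} x)\big)$, sketched below in NumPy. This is the textbook definition, not code from the paper; the helper `steering_vector`, its uniform-linear-array form, and the toy covariance are illustrative assumptions.

```python
import numpy as np


def steering_vector(num_elements, normalized_freq):
    """Hypothetical unit-norm steering vector for a uniform linear array."""
    n = np.arange(num_elements)
    return np.exp(2j * np.pi * normalized_freq * n) / np.sqrt(num_elements)


def namf_statistic(x, s, cov):
    """Normalized adaptive matched filter statistic for snapshot x.

    x   : received snapshot, shape (dim,)
    s   : steering vector under test, shape (dim,)
    cov : Hermitian positive definite clutter-plus-noise covariance estimate
    """
    rinv_x = np.linalg.solve(cov, x)
    rinv_s = np.linalg.solve(cov, s)
    numerator = np.abs(np.vdot(s, rinv_x)) ** 2           # |s^H R^-1 x|^2
    denominator = (np.real(np.vdot(s, rinv_s))            # s^H R^-1 s
                   * np.real(np.vdot(x, rinv_x)))         # x^H R^-1 x
    return float(numerator / denominator)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dim = 8
    cov = np.eye(dim) + 0.5 * np.ones((dim, dim))  # toy covariance, an assumption
    s = steering_vector(dim, 0.2)
    noise = rng.standard_normal(dim) + 1j * rng.standard_normal(dim)
    print("target + noise:", namf_statistic(5.0 * s + noise, s, cov))
    print("noise only    :", namf_statistic(noise, s, cov))
```

By the Cauchy-Schwarz inequality the statistic lies in [0, 1] and is invariant to scaling of `x`, which is what makes it convenient as a per-cell heatmap value across range, azimuth, and elevation.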

Radar Clutter Covariance Estimation: A Nonlinear Spectral Shrinkage Approach

Feb 04, 2023

Abstract:In this paper, we exploit the spiked covariance structure of the clutter plus noise covariance matrix for radar signal processing. Using state-of-the-art techniques from high-dimensional statistics, we propose a nonlinear shrinkage-based rotation invariant spiked covariance matrix estimator. We state the convergence of the estimated spiked eigenvalues. We use a dataset generated from the high-fidelity, site-specific physics-based radar simulation software RFView to compare the proposed algorithm against the existing Rank Constrained Maximum Likelihood (RCML)-Expected Likelihood (EL) covariance estimation algorithm. We demonstrate that the computation time for the estimation by the proposed algorithm is less than that of the RCML-EL algorithm, with identical Signal-to-Clutter-plus-Noise Ratio (SCNR) performance. We show that the proposed algorithm and the RCML-EL-based algorithm share the same optimization problem in high dimensions. We use the Low-Rank Adaptive Normalized Matched Filter (LR-ANMF) detector to compute the detection probabilities for different false alarm probabilities over a range of target SNR. We present preliminary results which demonstrate the robustness of the detector against contaminating clutter discretes using the Challenge Dataset from RFView. Finally, we empirically show that the minimum variance distortionless response (MVDR) beamformer error variance for the proposed algorithm is identical to the error variance resulting from the true covariance matrix.

Toward Data-Driven Radar STAP

Sep 22, 2022

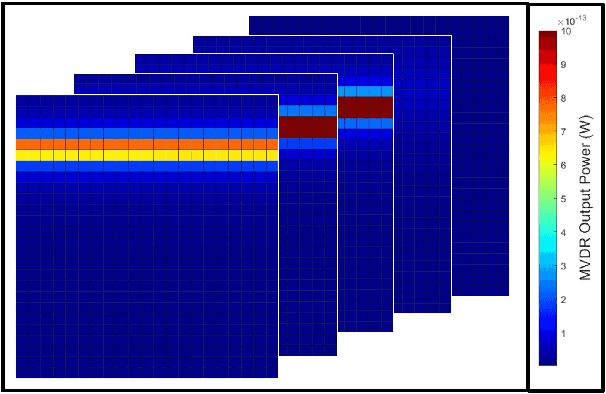

Abstract:Catalyzed by the recent emergence of site-specific, high-fidelity radio frequency (RF) modeling and simulation tools purposed for radar, data-driven formulations of classical methods in radar have rapidly grown in popularity over the past decade. Despite this surge, limited focus has been directed toward the theoretical foundations of these classical methods. In this regard, as part of our ongoing data-driven approach to radar space-time adaptive processing (STAP), we analyze the asymptotic performance guarantees of select subspace separation methods in the context of radar target localization, and augment this analysis through a proposed deep learning framework for target location estimation. In our approach, we generate comprehensive datasets by randomly placing targets of variable strengths in predetermined constrained areas using RFView, a site-specific RF modeling and simulation tool developed by ISL Inc. For each radar return signal from these constrained areas, we generate heatmap tensors in range, azimuth, and elevation of the normalized adaptive matched filter (NAMF) test statistic, and of the output power of a generalized sidelobe canceller (GSC). Using our deep learning framework, we estimate target locations from these heatmap tensors to demonstrate the feasibility of and significant improvements provided by our data-driven approach in matched and mismatched settings.

High Fidelity RF Clutter Modeling and Simulation

Feb 10, 2022

Abstract:In this paper, we present a tutorial overview of state-of-the-art radio frequency (RF) clutter modeling and simulation (M&S) techniques. Traditional statistical approximation based methods will be reviewed followed by more accurate physics-based stochastic transfer function clutter models that facilitate site-specific simulations anywhere on earth. The various factors that go into the computation of these transfer functions will be presented, followed by several examples across multiple RF applications. Finally, we introduce a radar challenge dataset generated using these tools that can enable testing and benchmarking of all cognitive radar algorithms and techniques.

Toward Data-Driven STAP Radar

Jan 26, 2022

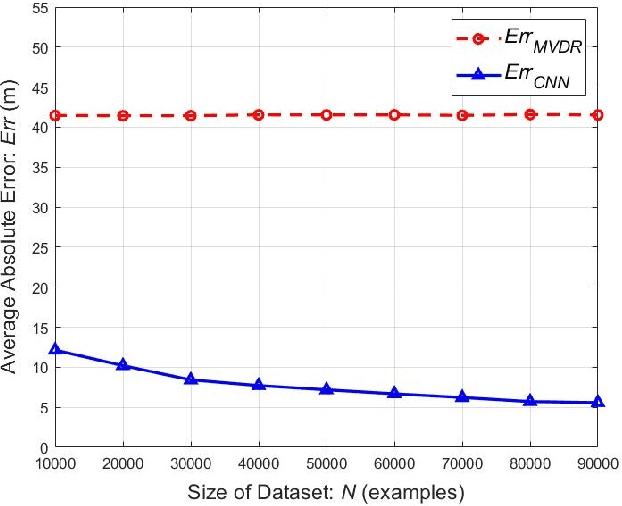

Abstract:Using an amalgamation of techniques from classical radar, computer vision, and deep learning, we characterize our ongoing data-driven approach to space-time adaptive processing (STAP) radar. We generate a rich example dataset of received radar signals by randomly placing targets of variable strengths in a predetermined region using RFView, a site-specific radio frequency modeling and simulation tool developed by ISL Inc. For each data sample within this region, we generate heatmap tensors in range, azimuth, and elevation of the output power of a minimum variance distortionless response (MVDR) beamformer, which can be replaced with a desired test statistic. These heatmap tensors can be thought of as stacked images, and in an airborne scenario, the moving radar creates a sequence of these time-indexed image stacks, resembling a video. Our goal is to use these images and videos to detect targets and estimate their locations, a procedure reminiscent of computer vision algorithms for object detection, namely the Faster Region-Based Convolutional Neural Network (Faster R-CNN). The Faster R-CNN consists of a proposal generating network for determining regions of interest (ROI), a regression network for positioning anchor boxes around targets, and an object classification algorithm; it is developed and optimized for natural images. Our ongoing research will develop analogous tools for heatmap images of radar data. In this regard, we will generate a large, representative adaptive radar signal processing database for training and testing, analogous in spirit to the COCO dataset for natural images. As a preliminary example, we present a regression network in this paper for estimating target locations to demonstrate the feasibility of and significant improvements provided by our data-driven approach.
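The MVDR output power that fills each heatmap cell has a well-known closed form: the weights are $w = R^{-1}s / (s^H R^{-1} s)$ and the output power is $1 / (s^H R^{-1} s)$. The sketch below scans that power over one axis only, as a minimal stand-in for the range, azimuth, and elevation tensors in the abstract. The helper names, the uniform-linear-array steering vector, and the toy covariance are assumptions, not taken from the paper.

```python
import numpy as np


def steering_vector(num_elements, normalized_freq):
    """Hypothetical unit-norm steering vector for a uniform linear array."""
    n = np.arange(num_elements)
    return np.exp(2j * np.pi * normalized_freq * n) / np.sqrt(num_elements)


def mvdr_weights(cov, s):
    """MVDR weights w = R^-1 s / (s^H R^-1 s), which satisfy w^H s = 1."""
    rinv_s = np.linalg.solve(cov, s)
    return rinv_s / np.real(np.vdot(s, rinv_s))


def mvdr_power(cov, s):
    """MVDR output power w^H R w = 1 / (s^H R^-1 s)."""
    rinv_s = np.linalg.solve(cov, s)
    return 1.0 / np.real(np.vdot(s, rinv_s))


def mvdr_power_scan(cov, freqs):
    """One-dimensional 'heatmap': MVDR output power at each scanned frequency."""
    dim = cov.shape[0]
    return np.array([mvdr_power(cov, steering_vector(dim, f)) for f in freqs])


if __name__ == "__main__":
    dim = 8
    s0 = steering_vector(dim, 0.1)
    # Toy covariance: unit-power white noise plus one source at frequency 0.1.
    cov = np.eye(dim) + 10.0 * np.outer(s0, s0.conj())
    freqs = np.linspace(-0.5, 0.5, 21)
    power = mvdr_power_scan(cov, freqs)
    print("peak at normalized frequency:", freqs[np.argmax(power)])
```

Stacking such scans over several axes gives the image-like tensors the abstract describes; swapping `mvdr_power` for another test statistic changes the heatmap content without changing the pipeline.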

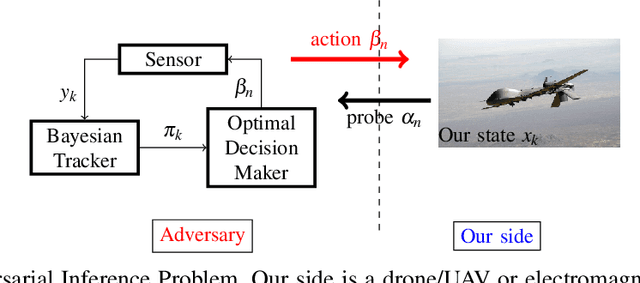

Adversarial Radar Inference: Inverse Tracking, Identifying Cognition and Designing Smart Interference

Aug 01, 2020

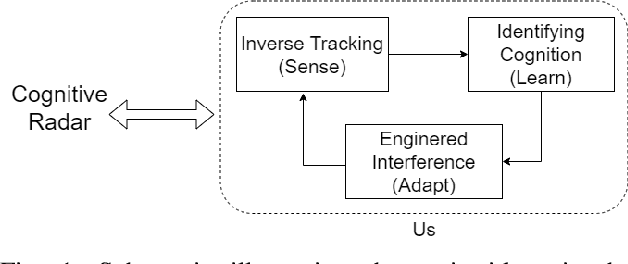

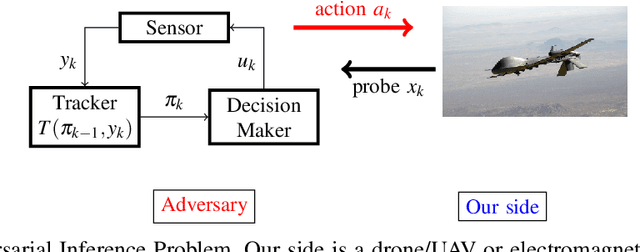

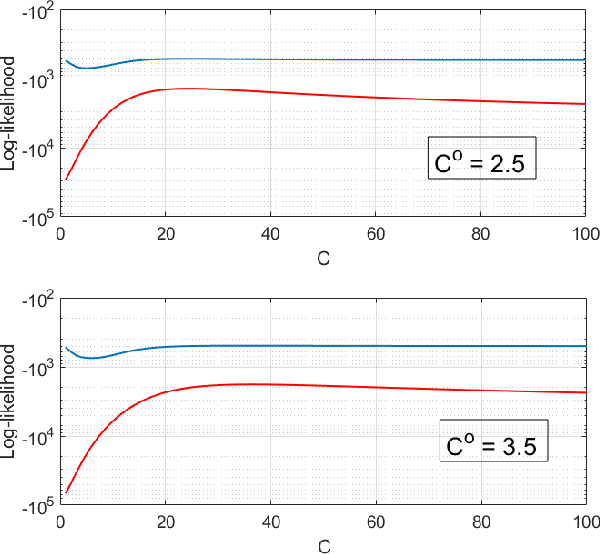

Abstract:This paper considers three inter-related adversarial inference problems involving cognitive radars. We first discuss inverse tracking of the radar to estimate the adversary's estimate of us based on the radar's actions and calibrate the radar's sensing accuracy. Second, using revealed preference from microeconomics, we formulate a non-parametric test to identify if the cognitive radar is a constrained utility maximizer with signal processing constraints. We consider two radar functionalities, namely, beam allocation and waveform design, with respect to which the cognitive radar is assumed to maximize its utility and construct a set-valued estimator for the radar's utility function. Finally, we discuss how to engineer interference at the physical layer level to confuse the radar which forces it to change its transmit waveform. The levels of abstraction range from smart interference design based on Wiener filters (at the pulse/waveform level), inverse Kalman filters at the tracking level and revealed preferences for identifying utility maximization at the systems level.
