Shyam Venkatasubramanian

Stabilizing Transformer Training Through Consensus

Jan 30, 2026

Abstract:Standard attention-based transformers are known to exhibit instability under learning rate overspecification during training, particularly at high learning rates. While various methods have been proposed to improve resilience to such overspecification by modifying the optimization procedure, fundamental architectural innovations to this end remain underexplored. In this work, we illustrate that the consensus mechanism, a drop-in replacement for attention, stabilizes transformer training across a wider effective range of learning rates. We formulate consensus as a graphical model and provide extensive empirical analysis demonstrating improved stability across learning rate sweeps on text, DNA, and protein modalities. We further propose a hybrid consensus-attention framework that preserves performance while improving stability. We provide theoretical analysis characterizing the properties of consensus.

Learn2Mix: Training Neural Networks Using Adaptive Data Integration

Dec 21, 2024

Abstract:Accelerating model convergence in resource-constrained environments is essential for fast and efficient neural network training. This work presents learn2mix, a new training strategy that adaptively adjusts class proportions within batches, focusing on classes with higher error rates. Unlike classical training methods that use static class proportions, learn2mix continually adapts class proportions during training, leading to faster convergence. Empirical evaluations on benchmark datasets show that neural networks trained with learn2mix converge faster than those trained with classical approaches, achieving improved results for classification, regression, and reconstruction tasks under limited training resources and with imbalanced classes. Our empirical findings are supported by theoretical analysis.
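The adaptive batch composition described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the update rule (moving the class proportions a fixed fraction toward the normalized per-class errors), the function names, and the `mixing_rate` parameter are assumptions chosen only to show how class proportions could shift toward classes with higher error.

```python
def update_class_proportions(proportions, class_errors, mixing_rate=0.1):
    """Move batch class proportions toward the normalized per-class errors.

    Assumed update rule for illustration; mixing_rate is a hypothetical parameter.
    """
    total_error = sum(class_errors)
    if total_error == 0:
        return list(proportions)
    targets = [err / total_error for err in class_errors]
    # Convex combination, so the proportions keep summing to one.
    return [p + mixing_rate * (t - p) for p, t in zip(proportions, targets)]


def samples_per_class(proportions, batch_size):
    """Convert proportions into integer per-class counts that sum to batch_size."""
    counts = [int(p * batch_size) for p in proportions]
    # Hand the leftover slots to the classes with the largest fractional parts.
    by_remainder = sorted(
        range(len(proportions)),
        key=lambda k: proportions[k] * batch_size - counts[k],
        reverse=True,
    )
    for k in by_remainder[: batch_size - sum(counts)]:
        counts[k] += 1
    return counts


# Three classes that start balanced; class 0 has the highest error.
proportions = [1 / 3, 1 / 3, 1 / 3]
class_errors = [0.9, 0.3, 0.3]
for _ in range(5):
    proportions = update_class_proportions(proportions, class_errors)
counts = samples_per_class(proportions, batch_size=64)
```

In a real training loop the per-class errors would be re-measured after each update rather than held fixed as they are here.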

Steinmetz Neural Networks for Complex-Valued Data

Sep 16, 2024

Abstract:In this work, we introduce a new approach to processing complex-valued data using DNNs consisting of parallel real-valued subnetworks with coupled outputs. Our proposed class of architectures, referred to as Steinmetz Neural Networks, leverages multi-view learning to construct more interpretable representations within the latent space. Subsequently, we present the Analytic Neural Network, which implements a consistency penalty that encourages analytic signal representations in the Steinmetz neural network's latent space. This penalty enforces a deterministic and orthogonal relationship between the real and imaginary components. Utilizing an information-theoretic construction, we demonstrate that the upper bound on the generalization error posited by the analytic neural network is lower than that of the general class of Steinmetz neural networks. Our numerical experiments demonstrate the improved performance and robustness to additive noise afforded by our proposed networks on benchmark datasets and synthetic examples.

RASPNet: A Benchmark Dataset for Radar Adaptive Signal Processing Applications

Jun 14, 2024

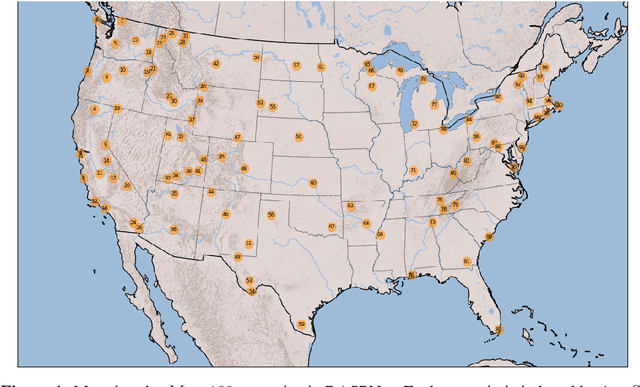

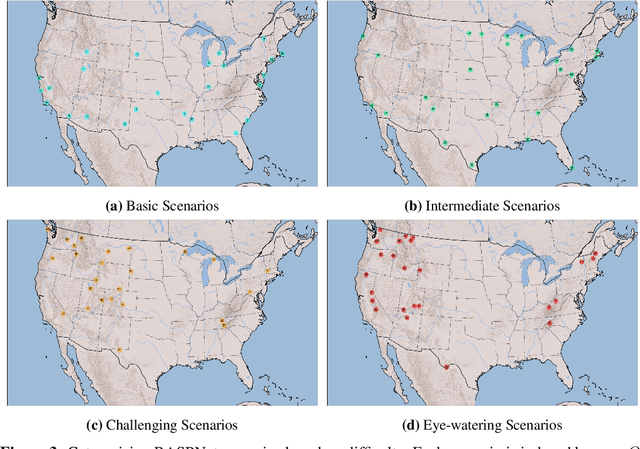

Abstract:This work presents a large-scale dataset for radar adaptive signal processing (RASP) applications, aimed at supporting the development of data-driven models within the radar community. The dataset, called RASPNet, consists of 100 realistic scenarios compiled over a variety of topographies and land types from across the contiguous United States, designed to reflect a diverse array of real-world environments. Within each scenario, RASPNet consists of 10,000 clutter realizations from an airborne radar setting, which can be utilized for radar algorithm development and evaluation. RASPNet intends to fill a prominent gap in the availability of a large-scale, realistic dataset that standardizes the evaluation of adaptive radar processing techniques. We describe its construction, organization, and several potential applications, which include a transfer learning example to demonstrate how RASPNet can be leveraged for realistic adaptive radar processing scenarios.

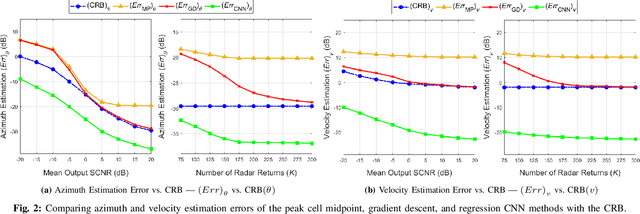

Data-Driven Target Localization: Benchmarking Gradient Descent Using the Cramér-Rao Bound

Jan 20, 2024

Abstract:In modern radar systems, precise target localization using azimuth and velocity estimation is paramount. Traditional unbiased estimation methods have leveraged gradient descent algorithms to reach the theoretical limits of the Cramér-Rao Bound (CRB) for the error of the parameter estimates. In this study, we present a data-driven neural network approach that outperforms these traditional techniques, demonstrating improved accuracies in target azimuth and velocity estimation. Using a representative simulated scenario, we show that our proposed neural network model consistently achieves improved parameter estimates due to its inherently biased nature, yielding a diminished mean squared error (MSE). Our findings underscore the potential of employing deep learning methods in radar systems, paving the way for more accurate localization in cluttered and dynamic environments.

Random Linear Projections Loss for Hyperplane-Based Optimization in Regression Neural Networks

Nov 21, 2023

Abstract:Despite their popularity across a wide range of domains, regression neural networks are prone to overfitting complex datasets. In this work, we propose a loss function termed Random Linear Projections (RLP) loss, which is empirically shown to mitigate overfitting. With RLP loss, the distance between sets of hyperplanes connecting fixed-size subsets of the neural network's feature-prediction pairs and feature-label pairs is minimized. The intuition behind this loss derives from the notion that if two functions share the same hyperplanes connecting all subsets of feature-label pairs, then these functions must necessarily be equivalent. Our empirical studies, conducted across benchmark datasets and representative synthetic examples, demonstrate the improvements of the proposed RLP loss over mean squared error (MSE). Specifically, neural networks trained with the RLP loss achieve better performance while requiring fewer data samples and are more robust to additive noise. We provide theoretical analysis supporting our empirical findings.
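The hyperplane comparison in this abstract can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's exact loss: here each random subset is fitted with a least-squares hyperplane, and the "distance" is taken to be the squared difference between the hyperplane coefficients obtained from the labels and from the predictions. The names `fit_hyperplane` and `rlp_style_loss` are hypothetical.

```python
import numpy as np


def fit_hyperplane(features, targets):
    """Least-squares hyperplane (weights followed by bias) through the given points."""
    design = np.hstack([features, np.ones((features.shape[0], 1))])
    coeffs, *_ = np.linalg.lstsq(design, targets, rcond=None)
    return coeffs


def rlp_style_loss(features, labels, predictions, subset_size, num_subsets, rng):
    """Mean squared gap between label and prediction hyperplanes over random subsets."""
    total = 0.0
    for _ in range(num_subsets):
        idx = rng.choice(features.shape[0], size=subset_size, replace=False)
        w_label = fit_hyperplane(features[idx], labels[idx])
        w_pred = fit_hyperplane(features[idx], predictions[idx])
        total += float(np.sum((w_label - w_pred) ** 2))
    return total / num_subsets


rng = np.random.default_rng(0)
features = rng.normal(size=(50, 3))
labels = features @ np.array([1.0, -2.0, 0.5]) + 0.3

# Predictions that match the labels share every hyperplane with them.
loss_exact = rlp_style_loss(features, labels, labels.copy(), 8, 20, rng)
# Predictions that are off by one unit of slope along the first feature.
loss_off = rlp_style_loss(features, labels, labels + features[:, 0], 8, 20, rng)
```

Because both the labels and the shifted predictions are exactly linear in the features here, the second loss is close to 1, the squared slope error; with a neural network's predictions the gap would vary from subset to subset.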

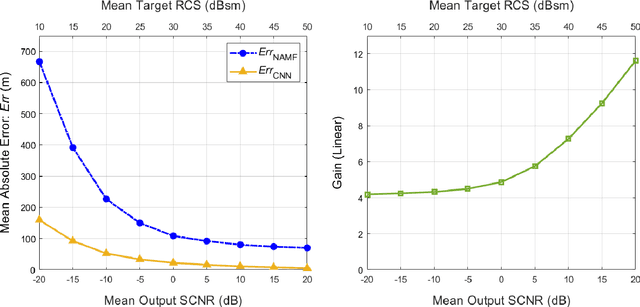

Subspace Perturbation Analysis for Data-Driven Radar Target Localization

Mar 21, 2023

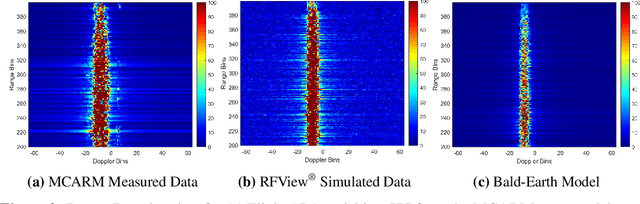

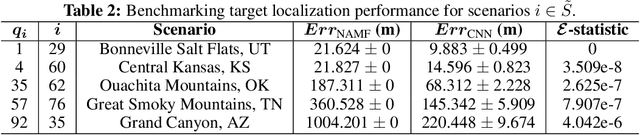

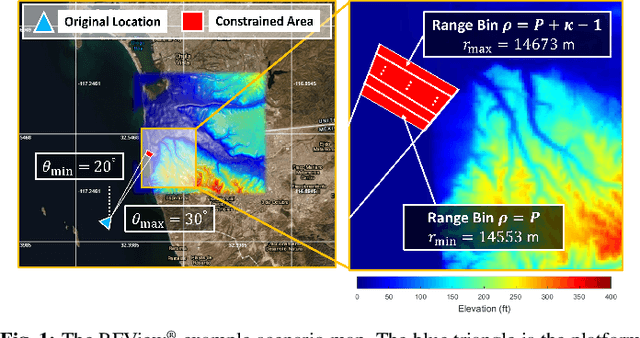

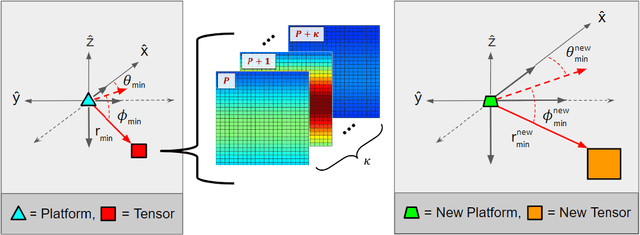

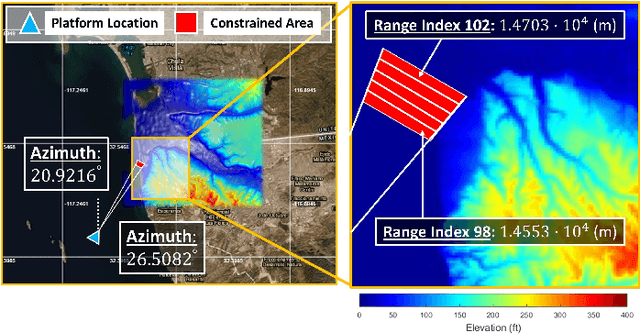

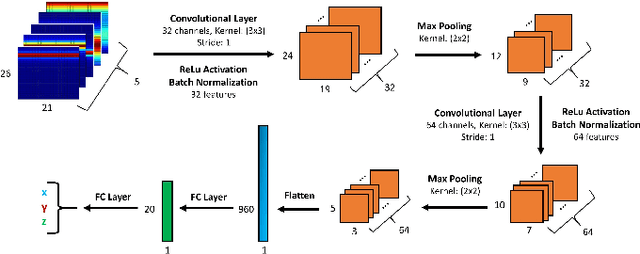

Abstract:Recent works exploring data-driven approaches to classical problems in adaptive radar have demonstrated promising results pertaining to the task of radar target localization. Via the use of space-time adaptive processing (STAP) techniques and convolutional neural networks, these data-driven approaches to target localization have helped benchmark the performance of neural networks for matched scenarios. However, the thorough bridging of these topics across mismatched scenarios still remains an open problem. As such, in this work, we augment our data-driven approach to radar target localization by performing a subspace perturbation analysis, which allows us to benchmark the localization accuracy of our proposed deep learning framework across mismatched scenarios. To evaluate this framework, we generate comprehensive datasets by randomly placing targets of variable strengths in mismatched constrained areas via RFView, a high-fidelity, site-specific modeling and simulation tool. For the radar returns from these constrained areas, we generate heatmap tensors in range, azimuth, and elevation using the normalized adaptive matched filter (NAMF) test statistic. We estimate target locations from these heatmap tensors using a convolutional neural network, and demonstrate that the predictive performance of our framework in the presence of mismatches can be predetermined.
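As a rough illustration of the NAMF test statistic used to build these heatmaps, the sketch below evaluates the standard form $|s^H R^{-1} x|^2 / \big((s^H R^{-1} s)(x^H R^{-1} x)\big)$ over an azimuth grid. Everything beyond that formula is an assumption made for the example: the half-wavelength uniform linear array, the synthetic covariance, the noise-free target, and the one-dimensional grid (the abstract's heatmaps span range, azimuth, and elevation and are generated from RFView data).

```python
import numpy as np


def ula_steering(num_elements, azimuth_deg):
    """Steering vector of a half-wavelength uniform linear array (illustrative)."""
    phase = np.pi * np.arange(num_elements) * np.sin(np.deg2rad(azimuth_deg))
    return np.exp(1j * phase)


def namf_statistic(steering, snapshot, clutter_cov):
    """NAMF statistic |s^H R^-1 x|^2 / ((s^H R^-1 s)(x^H R^-1 x)), a value in [0, 1]."""
    r_inv_s = np.linalg.solve(clutter_cov, steering)
    r_inv_x = np.linalg.solve(clutter_cov, snapshot)
    numerator = np.abs(np.vdot(steering, r_inv_x)) ** 2  # vdot conjugates its first argument
    denominator = np.vdot(steering, r_inv_s).real * np.vdot(snapshot, r_inv_x).real
    return float(numerator / denominator)


rng = np.random.default_rng(0)
num_elements = 8
# Synthetic Hermitian positive-definite clutter-plus-noise covariance.
basis = rng.normal(size=(num_elements, 32)) + 1j * rng.normal(size=(num_elements, 32))
clutter_cov = basis @ basis.conj().T / 32 + np.eye(num_elements)

snapshot = 3.0 * ula_steering(num_elements, 20.0)  # noise-free target at 20 degrees
azimuth_grid = np.arange(-60, 61, 5)
heatmap = np.array(
    [namf_statistic(ula_steering(num_elements, az), snapshot, clutter_cov) for az in azimuth_grid]
)
estimated_azimuth = azimuth_grid[int(np.argmax(heatmap))]
```

The statistic is invariant to the scale of the snapshot, which is why the factor of 3.0 does not change where the heatmap peaks.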

Toward Data-Driven Radar STAP

Sep 22, 2022

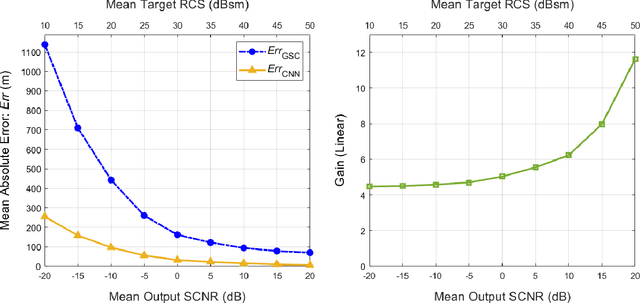

Abstract:Catalyzed by the recent emergence of site-specific, high-fidelity radio frequency (RF) modeling and simulation tools purposed for radar, data-driven formulations of classical methods in radar have rapidly grown in popularity over the past decade. Despite this surge, limited focus has been directed toward the theoretical foundations of these classical methods. In this regard, as part of our ongoing data-driven approach to radar space-time adaptive processing (STAP), we analyze the asymptotic performance guarantees of select subspace separation methods in the context of radar target localization, and augment this analysis through a proposed deep learning framework for target location estimation. In our approach, we generate comprehensive datasets by randomly placing targets of variable strengths in predetermined constrained areas using RFView, a site-specific RF modeling and simulation tool developed by ISL Inc. For each radar return signal from these constrained areas, we generate heatmap tensors in range, azimuth, and elevation of the normalized adaptive matched filter (NAMF) test statistic, and of the output power of a generalized sidelobe canceller (GSC). Using our deep learning framework, we estimate target locations from these heatmap tensors to demonstrate the feasibility of and significant improvements provided by our data-driven approach in matched and mismatched settings.

Toward Data-Driven STAP Radar

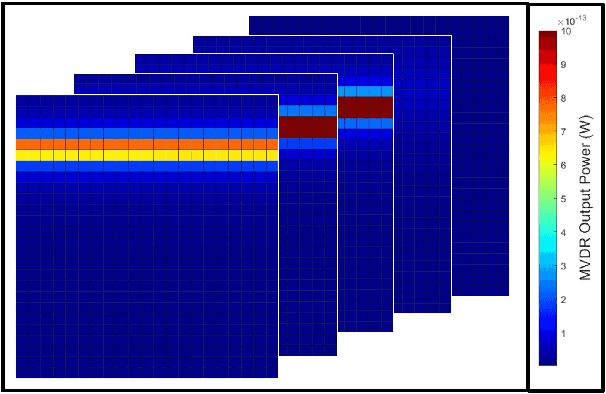

Jan 26, 2022

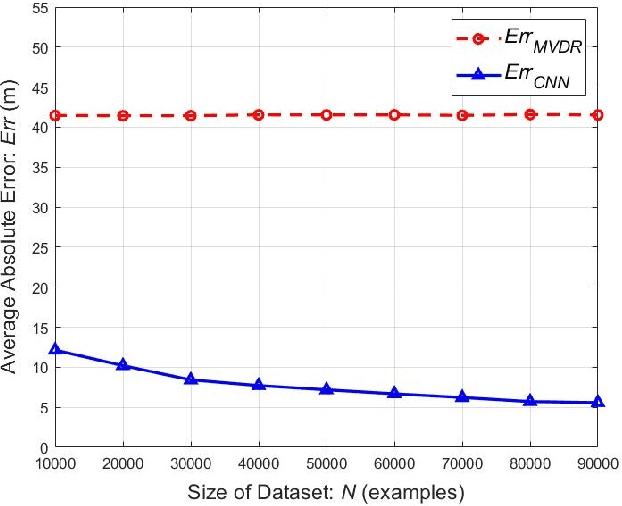

Abstract:Using an amalgamation of techniques from classical radar, computer vision, and deep learning, we characterize our ongoing data-driven approach to space-time adaptive processing (STAP) radar. We generate a rich example dataset of received radar signals by randomly placing targets of variable strengths in a predetermined region using RFView, a site-specific radio frequency modeling and simulation tool developed by ISL Inc. For each data sample within this region, we generate heatmap tensors in range, azimuth, and elevation of the output power of a minimum variance distortionless response (MVDR) beamformer, which can be replaced with a desired test statistic. These heatmap tensors can be thought of as stacked images, and in an airborne scenario, the moving radar creates a sequence of these time-indexed image stacks, resembling a video. Our goal is to use these images and videos to detect targets and estimate their locations, a procedure reminiscent of computer vision algorithms for object detection, namely the Faster Region-Based Convolutional Neural Network (Faster R-CNN). The Faster R-CNN consists of a proposal generating network for determining regions of interest (ROI), a regression network for positioning anchor boxes around targets, and an object classification algorithm; it is developed and optimized for natural images. Our ongoing research will develop analogous tools for heatmap images of radar data. In this regard, we will generate a large, representative adaptive radar signal processing database for training and testing, analogous in spirit to the COCO dataset for natural images. As a preliminary example, we present a regression network in this paper for estimating target locations to demonstrate the feasibility of and significant improvements provided by our data-driven approach.
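The MVDR output power mentioned in this abstract has a standard closed form: with weights $w = R^{-1}s / (s^H R^{-1} s)$, the output power is $w^H R w = 1 / (s^H R^{-1} s)$. The sketch below shows that computation only; the array geometry, the single-interferer covariance, and the look directions are assumptions for illustration and do not reproduce the RFView-based setup.

```python
import numpy as np


def ula_steering(num_elements, azimuth_deg):
    """Steering vector of a half-wavelength uniform linear array (illustrative)."""
    phase = np.pi * np.arange(num_elements) * np.sin(np.deg2rad(azimuth_deg))
    return np.exp(1j * phase)


def mvdr_weights(steering, covariance):
    """MVDR weights w = R^-1 s / (s^H R^-1 s); they pass the look direction with unit gain."""
    r_inv_s = np.linalg.solve(covariance, steering)
    return r_inv_s / np.vdot(steering, r_inv_s)


def mvdr_output_power(steering, covariance):
    """Output power w^H R w of the MVDR beamformer, equal to 1 / (s^H R^-1 s)."""
    weights = mvdr_weights(steering, covariance)
    return float(np.vdot(weights, covariance @ weights).real)


num_elements = 8
interferer = ula_steering(num_elements, 40.0)
# Assumed covariance: one strong source at 40 degrees plus unit-power white noise.
covariance = 10.0 * np.outer(interferer, interferer.conj()) + np.eye(num_elements)

look = ula_steering(num_elements, -20.0)
gain_in_look_direction = np.vdot(mvdr_weights(look, covariance), look)
power_quiet = mvdr_output_power(look, covariance)
power_source = mvdr_output_power(interferer, covariance)
```

Sweeping the look direction over a grid and stacking the resulting powers gives one slice of a heatmap of the kind the abstract describes; the power is large where a source is present and small elsewhere.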
