Toward Data-Driven Radar STAP

Paper and Code

Sep 22, 2022

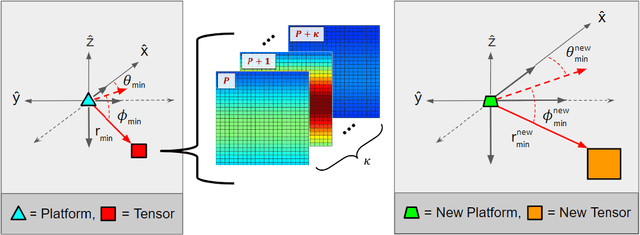

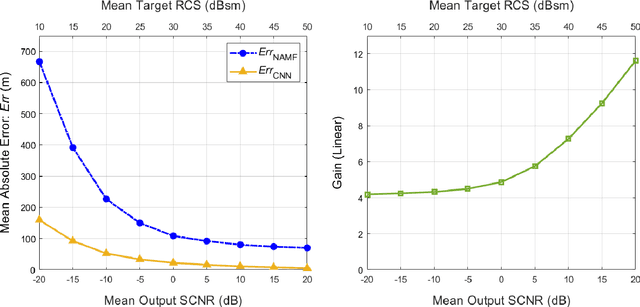

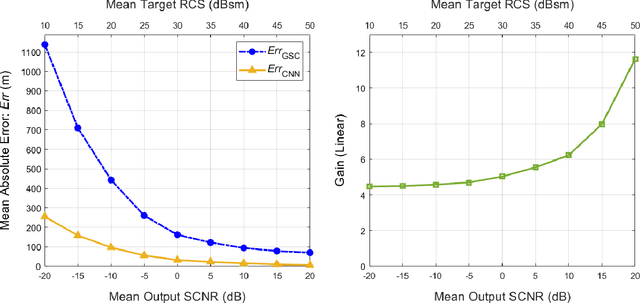

Catalyzed by the recent emergence of site-specific, high-fidelity radio frequency (RF) modeling and simulation tools designed for radar, data-driven formulations of classical radar methods have rapidly grown in popularity over the past decade. Despite this surge, the theoretical foundations of these classical methods have received limited attention. As part of our ongoing data-driven approach to radar space-time adaptive processing (STAP), we therefore analyze the asymptotic performance guarantees of select subspace separation methods in the context of radar target localization, and augment this analysis with a proposed deep learning framework for target location estimation. In our approach, we generate comprehensive datasets by randomly placing targets of variable strengths in predetermined constrained areas using RFView, a site-specific RF modeling and simulation tool developed by ISL Inc. For each radar return signal from these constrained areas, we generate heatmap tensors in range, azimuth, and elevation of the normalized adaptive matched filter (NAMF) test statistic and of the output power of a generalized sidelobe canceller (GSC). Using our deep learning framework, we estimate target locations from these heatmap tensors, demonstrating both the feasibility of our data-driven approach and the significant improvements it provides in matched and mismatched settings.
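To make the heatmap construction concrete, the following is a minimal sketch of how an NAMF heatmap could be computed, assuming the standard textbook form of the statistic, $|s^H R^{-1} x|^2 / \big((s^H R^{-1} s)(x^H R^{-1} x)\big)$. It is not the authors' implementation: the function name `namf_heatmap`, the grid layout of the steering vectors, and the assumption that a Hermitian positive definite clutter-plus-noise covariance `R` is already available are all illustrative choices made here.

```python
import numpy as np

def namf_heatmap(x, steering, R):
    """Evaluate the NAMF test statistic over a grid of candidate locations.

    x        : complex array, shape (N,), one space-time snapshot (hypothetical input)
    steering : complex array, shape (..., N), one steering vector per grid cell,
               e.g. a (range, azimuth, elevation, N) grid (assumed layout)
    R        : complex array, shape (N, N), Hermitian positive definite covariance
               (assumed to be known or estimated beforehand)
    Returns a real array of shape steering.shape[:-1] with values in [0, 1].
    """
    n = x.size
    S = steering.reshape(-1, n)              # (K, N): flatten the grid
    Rinv_x = np.linalg.solve(R, x)           # R^{-1} x, shape (N,)
    Rinv_S = np.linalg.solve(R, S.T)         # R^{-1} s_k for all k, shape (N, K)

    # |s_k^H R^{-1} x|^2 for every grid cell
    num = np.abs(S.conj() @ Rinv_x) ** 2
    # s_k^H R^{-1} s_k (real for Hermitian R) for every grid cell
    s_energy = np.real(np.einsum("kn,nk->k", S.conj(), Rinv_S))
    # x^H R^{-1} x (np.vdot conjugates its first argument)
    x_energy = np.real(np.vdot(x, Rinv_x))

    return (num / (s_energy * x_energy)).reshape(steering.shape[:-1])
```

Because the statistic is a normalized inner product under the $R^{-1}$ metric, it is bounded by 1 and attains 1 only when the snapshot is proportional to the steering vector of a cell. A tensor produced this way is the kind of input a learned estimator could consume in place of a simple peak search.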
