San Jiang

Fast Feature Matching of UAV Images via Matrix Band Reduction-based GPU Data Schedule

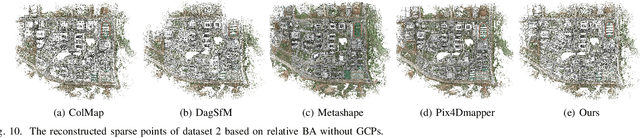

May 28, 2025

Abstract:Feature matching dominates the time cost of structure from motion (SfM). The primary contribution of this study is a GPU data schedule algorithm for efficient feature matching of unmanned aerial vehicle (UAV) images. The core idea is to divide the whole dataset into blocks based on the matrix band reduction (MBR) and achieve efficient feature matching via GPU-accelerated cascade hashing. First, match pairs are selected by using an image retrieval technique, which converts images into global descriptors and searches high-dimension nearest neighbors with graph indexing. Second, compact image blocks are iteratively generated from an MBR-based data schedule strategy, which exploits image connections to avoid redundant data IO (input/output) burden and increases the usage of GPU computing power. Third, guided by the generated image blocks, feature matching is executed sequentially within the framework of GPU-accelerated cascade hashing, and initial candidate matches are refined by combining a local geometric constraint and RANSAC-based global verification. For further performance improvement, these two steps are designed to execute in parallel on the GPU and CPU. Finally, the performance of the proposed solution is evaluated by using large-scale UAV datasets. The results demonstrate that it increases the efficiency of feature matching with speedup ratios ranging from 77.0 to 100.0 compared with KD-Tree based matching methods, and achieves comparable accuracy in relative and absolute bundle adjustment (BA). The proposed algorithm is an efficient solution for feature matching of UAV images.
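To illustrate the band-reduction idea, the following is a minimal sketch that reorders images with the classical reverse Cuthill-McKee heuristic so that matched images sit close together, then cuts the ordering into fixed-size blocks. The abstract does not say which band-reduction algorithm the paper uses, so reverse Cuthill-McKee, the function names, and the toy match pairs are all assumptions made for illustration.

```python
from collections import deque

def bandwidth(pairs, order):
    """Largest distance in the ordering between two matched images."""
    pos = {img: k for k, img in enumerate(order)}
    return max(abs(pos[i] - pos[j]) for i, j in pairs)

def reverse_cuthill_mckee(n, pairs):
    """Order images 0..n-1 so that matched images end up near each other."""
    adj = {i: set() for i in range(n)}
    for i, j in pairs:
        adj[i].add(j)
        adj[j].add(i)
    order, seen = [], set()
    # Start every connected component from a lowest-degree image.
    for start in sorted(range(n), key=lambda v: (len(adj[v]), v)):
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        while queue:
            v = queue.popleft()
            order.append(v)
            # Visit unseen neighbours in order of increasing degree.
            for w in sorted(adj[v] - seen, key=lambda u: (len(adj[u]), u)):
                seen.add(w)
                queue.append(w)
    return order[::-1]

def split_into_blocks(order, block_size):
    """Cut the reordered image list into consecutive blocks."""
    return [order[k:k + block_size] for k in range(0, len(order), block_size)]

# Hypothetical match pairs: a chain of six images with shuffled ids.
pairs = [(0, 3), (3, 1), (1, 5), (5, 2), (2, 4)]
rcm_order = reverse_cuthill_mckee(6, pairs)
blocks = split_into_blocks(rcm_order, 3)
```

In this toy case the bandwidth drops from 4 in the natural order to 1 after reordering, so only one match pair crosses the block boundary and most pairs can be matched with both images already loaded.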

UAVPairs: A Challenging Benchmark for Match Pair Retrieval of Large-scale UAV Images

May 28, 2025

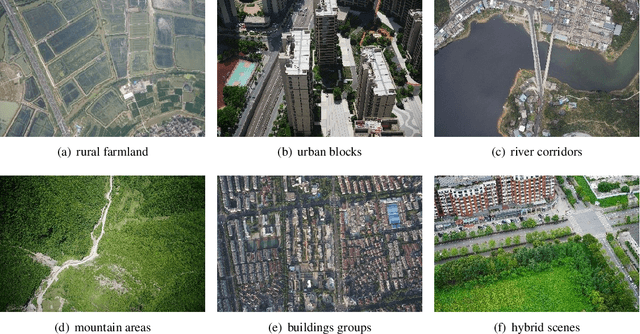

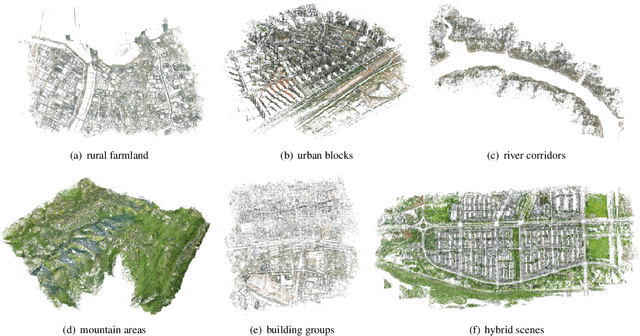

Abstract:The primary contribution of this paper is a challenging benchmark dataset, UAVPairs, and a training pipeline designed for match pair retrieval of large-scale UAV images. First, the UAVPairs dataset, comprising 21,622 high-resolution images across 30 diverse scenes, is constructed; the 3D points and tracks generated by SfM-based 3D reconstruction are employed to define the geometric similarity of image pairs, ensuring genuinely matchable image pairs are used for training. Second, to solve the problem of expensive mining cost for global hard negative mining, a batched nontrivial sample mining strategy is proposed, leveraging the geometric similarity and multi-scene structure of the UAVPairs to generate training samples so as to accelerate training. Third, recognizing the limitation of pair-based losses, the ranked list loss is designed to improve the discrimination of image retrieval models, which optimizes the global similarity structure constructed from the positive set and negative set. Finally, the effectiveness of the UAVPairs dataset and training pipeline is validated through comprehensive experiments on three distinct large-scale UAV datasets. The experimental results demonstrate that models trained with the UAVPairs dataset and the ranked list loss achieve significantly improved retrieval accuracy compared to models trained on existing datasets or with conventional losses. Furthermore, these improvements translate to enhanced view graph connectivity and higher quality of reconstructed 3D models. The models trained by the proposed approach perform more robustly compared with hand-crafted global features, particularly in challenging repetitively textured scenes and weakly textured scenes. For match pair retrieval of large-scale UAV images, the trained image retrieval models offer an effective solution. The dataset will be made publicly available at https://github.com/json87/UAVPairs.
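As a rough illustration of a set-based loss of this kind, the sketch below implements a simplified ranked-list-style loss for one query: positives are pulled inside a radius `alpha - margin` and negatives are pushed beyond `alpha`, with the two hinge terms averaged over the violating samples only. The abstract does not give the paper's exact formulation, so the unweighted form, the Euclidean distance, and the default `alpha` and `margin` values are assumptions.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def ranked_list_loss(query, positives, negatives, alpha=1.2, margin=0.4):
    """Simplified ranked-list-style loss over a positive and a negative set.

    Assumed form: hinge on positives farther than alpha - margin,
    hinge on negatives closer than alpha, each averaged over violators.
    """
    pos_bound = alpha - margin
    pos_viol = [d - pos_bound
                for d in (euclidean(query, p) for p in positives)
                if d > pos_bound]
    neg_viol = [alpha - d
                for d in (euclidean(query, n) for n in negatives)
                if d < alpha]
    loss_pos = sum(pos_viol) / len(pos_viol) if pos_viol else 0.0
    loss_neg = sum(neg_viol) / len(neg_viol) if neg_viol else 0.0
    return loss_pos + loss_neg

# Hypothetical 2D descriptors: one positive and one negative violate their bounds.
loss = ranked_list_loss(
    query=(0.0, 0.0),
    positives=[(0.5, 0.0), (1.0, 0.0)],
    negatives=[(1.0, 0.0), (2.0, 0.0)],
)
```

Unlike a triplet loss, every violating positive and negative of the query contributes at once, which is the sense in which the loss acts on the whole similarity structure rather than on isolated pairs.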

SuperMapNet for Long-Range and High-Accuracy Vectorized HD Map Construction

May 20, 2025

Abstract:Vectorized HD map is essential for autonomous driving. Remarkable progress has been achieved in recent years, but there are still major issues: (1) in the generation of the BEV features, single modality-based methods are of limited perception capability, while direct concatenation-based multi-modal methods fail to capture synergies and disparities between different modalities, resulting in limited ranges with feature holes; (2) in the classification and localization of map elements, only point information is used, without considering element information or the interaction between point information and element information, leading to erroneous shapes and element entanglement with low accuracy. To address the above issues, we introduce SuperMapNet for long-range and high-accuracy vectorized HD map construction. It uses both camera images and LiDAR point clouds as input, and first tightly couples semantic information from camera images and geometric information from LiDAR point clouds by a cross-attention based synergy enhancement module and a flow-based disparity alignment module for long-range BEV feature generation. Then, local features from point queries and global features from element queries are tightly coupled by three-level interactions for high-accuracy classification and localization, where Point2Point interaction learns local geometric information between points of the same element and of each point, Element2Element interaction learns relation constraints between different elements and semantic information of each element, and Point2Element interaction learns complementary element information for its constituent points. Experiments on the nuScenes and Argoverse2 datasets demonstrate superior performance, surpassing SOTAs by over 14.9/8.8 mAP and 18.5/3.1 mAP under hard/easy settings, respectively. The code is made publicly available.

Efficient Match Pair Retrieval for Large-scale UAV Images via Graph Indexed Global Descriptor

Jul 10, 2023

Abstract:SfM (Structure from Motion) has been extensively used for UAV (Unmanned Aerial Vehicle) image orientation. Its efficiency is directly influenced by feature matching. Although image retrieval has been extensively used for match pair selection, high computational costs are incurred due to the large number of local features and the large size of the codebook used. Thus, this paper proposes an efficient match pair retrieval method and implements an integrated workflow for parallel SfM reconstruction. First, an individual codebook is trained online by considering the redundancy of UAV images and local features, which avoids the ambiguity of training codebooks from other datasets. Second, local features of each image are aggregated into a single high-dimension global descriptor through the VLAD (Vector of Locally Aggregated Descriptors) aggregation by using the trained codebook, which remarkably reduces the number of features and the burden of nearest neighbor searching in image indexing. Third, the global descriptors are indexed via the HNSW (Hierarchical Navigable Small World) based graph structure for the nearest neighbor searching. Match pairs are then retrieved by using an adaptive threshold selection strategy and utilized to create a view graph for divide-and-conquer based parallel SfM reconstruction. Finally, the performance of the proposed solution has been verified using three large-scale UAV datasets. The test results demonstrate that the proposed solution accelerates match pair retrieval with a speedup ratio ranging from 36 to 108 and improves the efficiency of SfM reconstruction with competitive accuracy in both relative and absolute orientation.
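The VLAD aggregation step can be sketched in a few lines. The version below is a plain textbook VLAD: each local descriptor is assigned to its nearest codeword, the residuals are summed per codeword, and the concatenated vector is L2-normalized. The function name and the toy 2D data are assumptions, and the paper's online codebook training and HNSW indexing are not reproduced here.

```python
import math

def vlad(descriptors, codebook):
    """Aggregate local descriptors into one global VLAD vector."""
    dim = len(codebook[0])
    residuals = [[0.0] * dim for _ in codebook]
    for d in descriptors:
        # Hard-assign the descriptor to its nearest codeword.
        k = min(range(len(codebook)),
                key=lambda c: sum((x - y) ** 2 for x, y in zip(d, codebook[c])))
        # Accumulate the residual between descriptor and codeword.
        for t in range(dim):
            residuals[k][t] += d[t] - codebook[k][t]
    flat = [x for r in residuals for x in r]
    norm = math.sqrt(sum(x * x for x in flat))
    return [x / norm for x in flat] if norm > 0 else flat

# Hypothetical data: two codewords and three 2D local descriptors.
codebook = [(0.0, 0.0), (10.0, 10.0)]
descriptors = [(1.0, 0.0), (0.0, 1.0), (10.0, 12.0)]
global_descriptor = vlad(descriptors, codebook)
```

The output length is the number of codewords times the descriptor dimension, regardless of how many local features the image has, which is why one nearest-neighbor query per image can replace thousands of per-feature queries.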

3D Reconstruction of Spherical Images based on Incremental Structure from Motion

Jun 24, 2023

Abstract:3D reconstruction plays an increasingly important role in modern photogrammetric systems. Conventional satellite or aerial-based remote sensing (RS) platforms can provide the necessary data sources for the 3D reconstruction of large-scale landforms and cities. Even with low-altitude UAVs (Unmanned Aerial Vehicles), 3D reconstruction in complicated situations, such as urban canyons and indoor scenes, is challenging due to the frequent tracking failures between camera frames and high data collection costs. Recently, spherical images have been extensively exploited due to the capability of recording surrounding environments from one camera exposure. Classical 3D reconstruction pipelines, however, cannot be used for spherical images. Besides, there exist few software packages for 3D reconstruction of spherical images. Based on the imaging geometry of spherical cameras, this study investigates the algorithms for the relative orientation using spherical correspondences, absolute orientation using 3D correspondences between scene and spherical points, and the cost functions for BA (bundle adjustment) optimization. In addition, an incremental SfM (Structure from Motion) workflow has been proposed for spherical images using the above-mentioned algorithms. The proposed solution is finally verified by using three spherical datasets captured by both consumer-grade and professional spherical cameras. The results demonstrate that the proposed SfM workflow can achieve the successful 3D reconstruction of complex scenes and provide useful clues for the implementation in open-source software packages. The source code of the designed SfM workflow will be made publicly available.
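For readers unfamiliar with spherical imaging geometry, the sketch below maps an equirectangular pixel to a unit bearing vector and measures the angle between two bearings, which is one common way to express a residual for spherical cameras. The axis convention (x right, y down, z forward), the equirectangular layout, and the choice of an angular residual are assumptions; the abstract does not specify the paper's own cost functions.

```python
import math

def pixel_to_bearing(u, v, width, height):
    """Map an equirectangular pixel to a unit bearing vector.

    Assumed convention: x right, y down, z forward; the image centre
    looks along +z, longitude grows to the right, latitude grows upward.
    """
    lon = (u / width - 0.5) * 2.0 * math.pi
    lat = (0.5 - v / height) * math.pi
    return (math.cos(lat) * math.sin(lon),
            -math.sin(lat),
            math.cos(lat) * math.cos(lon))

def angular_error(b1, b2):
    """Angle in radians between two unit bearing vectors."""
    dot = sum(x * y for x, y in zip(b1, b2))
    return math.acos(max(-1.0, min(1.0, dot)))

# Hypothetical 4000 x 2000 panorama: the centre pixel and a pixel 90 degrees to its right.
center = pixel_to_bearing(2000, 1000, 4000, 2000)
right = pixel_to_bearing(3000, 1000, 4000, 2000)
angle = angular_error(center, right)
```

Working with bearing vectors rather than pixel coordinates avoids the distortion of the equirectangular projection near the poles, where a pixel offset corresponds to a much smaller angle than at the equator.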

3D reconstruction of spherical images: A review of techniques, applications, and prospects

Feb 09, 2023

Abstract:3D reconstruction plays an increasingly important role in modern photogrammetric systems. Conventional satellite or aerial-based remote sensing (RS) platforms can provide the necessary data sources for the 3D reconstruction of large-scale landforms and cities. Even with low-altitude UAVs (Unmanned Aerial Vehicles), 3D reconstruction in complicated situations, such as urban canyons and indoor scenes, is challenging due to frequent tracking failures between camera frames and high data collection costs. Recently, spherical images have been extensively used due to the capability of recording surrounding environments from one camera exposure. In contrast to perspective images with limited FOV (Field of View), spherical images can cover the whole scene with full horizontal and vertical FOV and facilitate camera tracking and data acquisition in these complex scenes. With the rapid evolution and extensive use of professional and consumer-grade spherical cameras, spherical images show great potential for the 3D modeling of urban and indoor scenes. Classical 3D reconstruction pipelines, however, cannot be directly used for spherical images. Besides, there exist few software packages that are designed for the 3D reconstruction of spherical images. As a result, this research provides a thorough survey of the state-of-the-art for 3D reconstruction of spherical images in terms of data acquisition, feature detection and matching, image orientation, and dense matching as well as presenting promising applications and discussing potential prospects. We anticipate that this study offers insightful clues to direct future research.

Optimized Views Photogrammetry: Precision Analysis and A Large-scale Case Study in Qingdao

Jun 24, 2022

Abstract:UAVs have become one of the most widely used remote sensing platforms and play a critical role in the construction of smart cities. However, due to the complex environment in urban scenes, secure and accurate data acquisition brings great challenges to 3D modeling and scene updating. Optimal trajectory planning of UAVs and accurate data collection of onboard cameras are non-trivial issues in urban modeling. This study presents the principle of optimized views photogrammetry and verifies its precision and potential in large-scale 3D modeling. Different from oblique photogrammetry, optimized views photogrammetry uses rough models to generate and optimize UAV trajectories, which is achieved through the consideration of model point reconstructability and view point redundancy. Based on the principle of optimized views photogrammetry, this study first conducts a precision analysis of 3D models by using UAV images of optimized views photogrammetry and then executes a large-scale case study in the urban region of Qingdao city, China, to verify its engineering potential. By using GCPs for image orientation precision analysis and TLS (terrestrial laser scanning) point clouds for model quality analysis, experimental results show that optimized views photogrammetry could construct stable image connection networks and could achieve comparable image orientation accuracy. Benefiting from the accurate image acquisition strategy, the quality of mesh models significantly improves, especially for urban areas with serious occlusions, in which 3 to 5 times higher accuracy has been achieved. Besides, the case study in Qingdao city verifies that optimized views photogrammetry can be a reliable and powerful solution for large-scale 3D modeling in complex urban scenes.

Parallel Structure from Motion for UAV Images via Weighted Connected Dominating Set

Jun 24, 2022

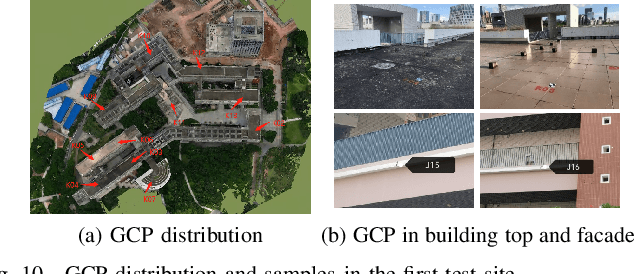

Abstract:Incremental Structure from Motion (ISfM) has been widely used for UAV image orientation. Its efficiency, however, decreases dramatically due to the sequential constraint. Although the divide-and-conquer strategy has been utilized for efficiency improvement, cluster merging becomes difficult or depends on carefully designed overlap structures. This paper proposes an algorithm to extract the global model for cluster merging and designs a parallel SfM solution to achieve efficient and accurate UAV image orientation. First, based on vocabulary tree retrieval, match pairs are selected to construct an undirected weighted match graph, whose edge weights are calculated by considering both the number and distribution of feature matches. Second, an algorithm, termed weighted connected dominating set (WCDS), is designed to achieve the simplification of the match graph and build the global model, which incorporates the edge weight in the graph node selection and enables the successful reconstruction of the global model. Third, the match graph is simultaneously divided into compact and non-overlapping clusters. After the parallel reconstruction, cluster merging is conducted with the aid of common 3D points between the global and cluster models. Finally, by using three UAV datasets that are captured by classical oblique and recent optimized views photogrammetry, the proposed solution is validated through comprehensive analysis and comparison. The experimental results demonstrate that the proposed parallel SfM can achieve 17.4 times efficiency improvement and comparable orientation accuracy. In absolute BA, the geo-referencing accuracy is approximately 2.0 and 3.0 times the GSD (Ground Sampling Distance) value in the horizontal and vertical directions, respectively. For parallel SfM, the proposed solution is a more reliable alternative.
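The notion of a weighted connected dominating set can be made concrete with a small greedy sketch. Starting from the image with the largest total edge weight, it repeatedly adds the neighbor of the selected set that covers the most edge weight toward images not yet covered, so the selected set stays connected and every image ends up selected or adjacent to a selected image. This greedy rule is an assumption chosen for illustration and is not taken from the paper; the graph is assumed to be connected with positive weights.

```python
def greedy_wcds(edges):
    """Greedy weighted connected dominating set of an undirected match graph.

    edges: dict {(u, v): weight}; assumed connected with positive weights.
    Returns the selected nodes in the order they were added.
    """
    adj = {}
    for (u, v), w in edges.items():
        adj.setdefault(u, {})[v] = w
        adj.setdefault(v, {})[u] = w
    # Seed with the node that has the largest total edge weight.
    seed = max(sorted(adj), key=lambda n: sum(adj[n].values()))
    selected = [seed]
    dominated = {seed} | set(adj[seed])
    while len(dominated) < len(adj):
        # Candidates touch the selected set, which keeps the result connected.
        candidates = sorted(dominated - set(selected))
        # Pick the candidate covering the most weight toward uncovered nodes.
        best = max(candidates,
                   key=lambda n: sum(w for m, w in adj[n].items()
                                     if m not in dominated))
        selected.append(best)
        dominated |= set(adj[best])
    return selected

# Hypothetical match graph: a hub image 0 plus a chain 3-4-5, weights are match counts.
edges = {(0, 1): 5, (0, 2): 4, (0, 3): 3, (3, 4): 2, (4, 5): 1}
backbone = greedy_wcds(edges)
```

Here three of the six images form the backbone, and each remaining image shares an edge with it, which is the property that lets a global model built from the backbone overlap with every cluster.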

WHU-Stereo: A Challenging Benchmark for Stereo Matching of High-Resolution Satellite Images

Jun 06, 2022

Abstract:Stereo matching of high-resolution satellite images (HRSI) is still a fundamental but challenging task in the field of photogrammetry and remote sensing. Recently, deep learning (DL) methods, especially convolutional neural networks (CNNs), have demonstrated tremendous potential for stereo matching on public benchmark datasets. However, datasets for stereo matching of satellite images are scarce. To facilitate further research, this paper creates and publishes a challenging dataset, termed WHU-Stereo, for stereo matching DL network training and testing. This dataset is created by using airborne LiDAR point clouds and high-resolution stereo imagery taken from the Chinese GaoFen-7 satellite (GF-7). The WHU-Stereo dataset contains more than 1700 epipolar rectified image pairs, which cover six areas in China and include various kinds of landscapes. We have assessed the accuracy of ground-truth disparity maps, and it is shown that our dataset achieves precision comparable to existing state-of-the-art stereo matching datasets. To verify its feasibility, in experiments, the hand-crafted SGM stereo matching algorithm and recent deep learning networks have been tested on the WHU-Stereo dataset. Experimental results show that deep learning networks can be well trained and achieve higher performance than the hand-crafted SGM algorithm, and the dataset has great potential in remote sensing applications. The WHU-Stereo dataset can serve as a challenging benchmark for stereo matching of high-resolution satellite images, and performance evaluation of deep learning models. Our dataset is available at https://github.com/Sheng029/WHU-Stereo

Hierarchical Motion Consistency Constraint for Efficient Geometrical Verification in UAV Image Matching

Jan 12, 2018

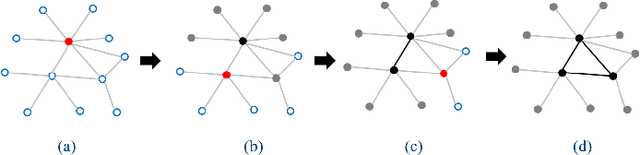

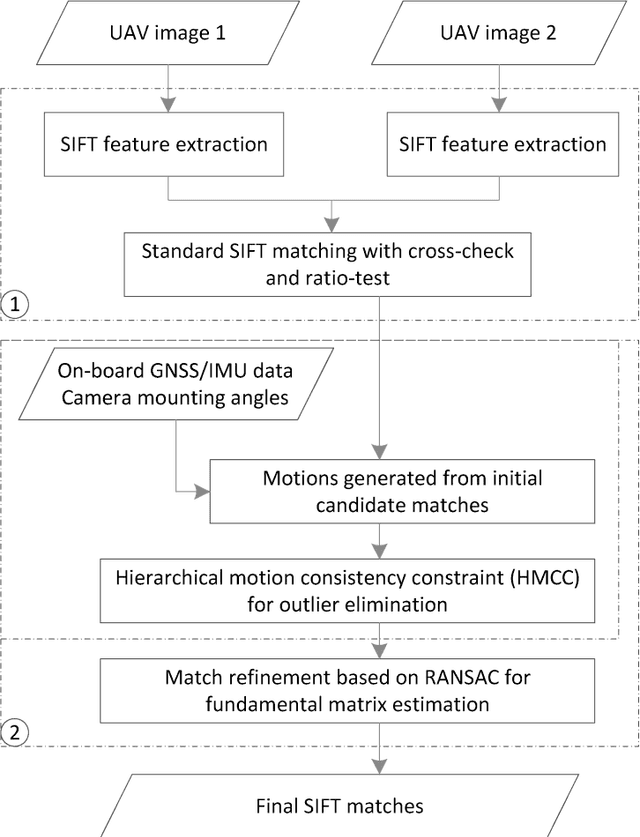

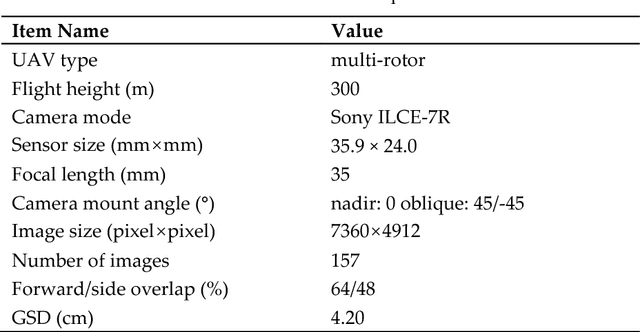

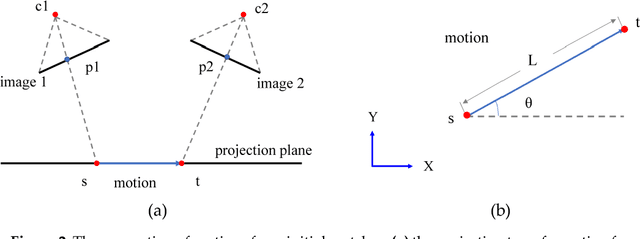

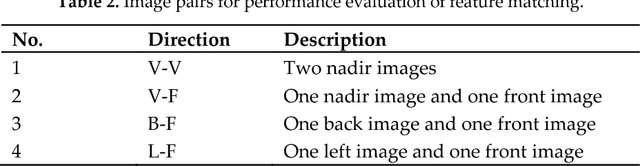

Abstract:This paper proposes a strategy for efficient geometrical verification in unmanned aerial vehicle (UAV) image matching. First, considering the complex transformation model between correspondence sets in the image-space, feature points of initial candidate matches are projected onto an elevation plane in the object-space, with the assistance of UAV flight control data and camera mounting angles. Spatial relationships are simplified as a 2D-translation in which a motion establishes the relation of two correspondence points. Second, a hierarchical motion consistency constraint, termed HMCC, is designed to eliminate outliers from initial candidate matches, which includes three major steps, namely the global direction consistency constraint, the local direction-change consistency constraint and the global length consistency constraint. To cope with scenarios with high outlier ratios, the HMCC is achieved by using a voting scheme. Finally, an efficient geometrical verification strategy is proposed by using the HMCC as a pre-processing step to increase inlier ratios before the subsequent application of the basic RANSAC algorithm. The performance of the proposed strategy is verified through comprehensive comparison and analysis by using real UAV datasets captured with different photogrammetric systems. Experimental results demonstrate that the generated motions have noticeable separation ability, and the HMCC-RANSAC algorithm can efficiently eliminate outliers based on the motion consistency constraint, with a speedup ratio reaching 6 for oblique UAV images. Even though a completeness loss of approximately 7 percent of points is observed from image orientation tests, competitive orientation accuracy is achieved from all used datasets. For geometrical verification of both nadir and oblique UAV images, the proposed method can be a more efficient solution.
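A voting scheme for the first of these steps, the global direction consistency constraint, might look like the sketch below. Each motion (the 2D displacement between two projected correspondence points) votes for a direction bin, and only matches in the winning bin or its two neighbors are kept. The 10-degree bin width, the neighbor tolerance, and the function name are assumptions; the local direction-change and global length constraints are not shown.

```python
import math
from collections import Counter

def direction_vote(motions, bin_deg=10):
    """Return indices of motions whose direction agrees with the dominant one.

    motions: list of (dx, dy) displacements between projected match points.
    A motion is kept if it falls in the most-voted bin or an adjacent bin.
    """
    nbins = 360 // bin_deg
    bins = [int(math.degrees(math.atan2(dy, dx)) % 360 // bin_deg) % nbins
            for dx, dy in motions]
    top = Counter(bins).most_common(1)[0][0]
    keep = {(top - 1) % nbins, top, (top + 1) % nbins}
    return [k for k, b in enumerate(bins) if b in keep]

# Hypothetical motions: three roughly along +x, two clear outliers.
motions = [(1.0, 0.05), (1.0, -0.02), (0.9, 0.0), (-1.0, 0.3), (0.1, 1.0)]
inliers = direction_vote(motions)
```

Because voting costs one pass over the matches and needs no model fitting, it can discard gross outliers cheaply before RANSAC, which then needs fewer iterations on the cleaner set.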
