Sai Shankar Narasimhan

A Challenge to Build Neuro-Symbolic Video Agents

May 20, 2025

Abstract: Modern video understanding systems excel at tasks such as scene classification, object detection, and short video retrieval. However, as video analysis becomes increasingly central to real-world applications, there is a growing need for proactive video agents: systems that not only interpret video streams but also reason about events and take informed actions. A key obstacle in this direction is temporal reasoning: while deep learning models have made remarkable progress in recognizing patterns within individual frames or short clips, they struggle to understand the sequencing and dependencies of events over time, which is critical for action-driven decision-making. Addressing this limitation demands moving beyond conventional deep learning approaches. We posit that tackling this challenge requires a neuro-symbolic perspective, where video queries are decomposed into atomic events, structured into coherent sequences, and validated against temporal constraints. Such an approach can enhance interpretability, enable structured reasoning, and provide stronger guarantees on system behavior, all key properties for advancing trustworthy video agents. To this end, we present a grand challenge to the research community: developing the next generation of intelligent video agents that integrate three core capabilities: (1) autonomous video search and analysis, (2) seamless real-world interaction, and (3) advanced content generation. By addressing these pillars, we can transition from passive perception to intelligent video agents that reason, predict, and act, pushing the boundaries of video understanding.
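As a rough illustration of the decompose, sequence, and validate idea described in this abstract, the following minimal sketch checks whether a list of atomic events occurs in a required order across per-frame detections. The function name `satisfies_sequence`, the event labels, and the set-per-frame representation are all hypothetical choices made for this example; the abstract does not specify a concrete formalism.

```python
from typing import List, Set


def satisfies_sequence(detections: List[Set[str]], sequence: List[str]) -> bool:
    """Return True if the events in `sequence` occur in order.

    `detections[i]` is the set of atomic event labels detected in frame i
    (in practice these would come from a perception model; here they are
    given directly). Each event must occur strictly after the previous one.
    """
    frame = -1  # index of the frame where the previous event was matched
    for event in sequence:
        match = next(
            (i for i in range(frame + 1, len(detections)) if event in detections[i]),
            None,
        )
        if match is None:
            return False  # event never occurs after the previous one
        frame = match
    return True


# Hypothetical per-frame detections for a short clip.
clip = [{"person enters"}, set(), {"person picks up bag"}, {"person exits"}]
query = ["person enters", "person picks up bag", "person exits"]

in_order = satisfies_sequence(clip, query)
reversed_order = satisfies_sequence(clip, list(reversed(query)))
```

The symbolic check is kept separate from perception so that the ordering constraint can be inspected and verified on its own, which is one way to read the interpretability argument above.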

SynDiff-AD: Improving Semantic Segmentation and End-to-End Autonomous Driving with Synthetic Data from Latent Diffusion Models

Nov 25, 2024

Abstract: In recent years, significant progress has been made in collecting large-scale datasets to improve segmentation and autonomous driving models. These large-scale datasets are often dominated by common environmental conditions such as "Clear and Day" weather, leading to decreased performance in under-represented conditions like "Rainy and Night". To address this issue, we introduce SynDiff-AD, a novel data augmentation pipeline that leverages diffusion models (DMs) to generate realistic images for such subgroups. SynDiff-AD uses ControlNet, a DM that guides data generation conditioned on semantic maps, along with a novel prompting scheme that generates subgroup-specific, semantically dense prompts. By augmenting datasets with SynDiff-AD, we improve the performance of segmentation models like Mask2Former and SegFormer by up to 1.2% and 2.3% on the Waymo dataset, and up to 1.4% and 0.7% on the DeepDrive dataset, respectively. Additionally, we demonstrate that our SynDiff-AD pipeline enhances the driving performance of end-to-end autonomous driving models, like AIM-2D and AIM-BEV, by up to 20% across diverse environmental conditions in the CARLA autonomous driving simulator, providing a more robust model.

Context Matters: Leveraging Contextual Features for Time Series Forecasting

Oct 17, 2024

Abstract: Time series forecasts are often influenced by exogenous contextual features in addition to their corresponding history. For example, in financial settings, it is hard to accurately predict a stock price without considering public sentiments and policy decisions in the form of news articles, tweets, etc. Though this is common knowledge, the current state-of-the-art (SOTA) forecasting models fail to incorporate such contextual information, owing to its heterogeneity and multimodal nature. To address this, we introduce ContextFormer, a novel plug-and-play method to surgically integrate multimodal contextual information into existing pre-trained forecasting models. ContextFormer effectively distills forecast-specific information from rich multimodal contexts, including categorical, continuous, time-varying, and even textual information, to significantly enhance the performance of existing base forecasters. ContextFormer outperforms SOTA forecasting models by up to 30% on a range of real-world datasets spanning energy, traffic, environmental, and financial domains.

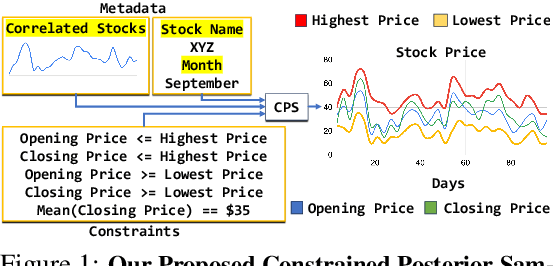

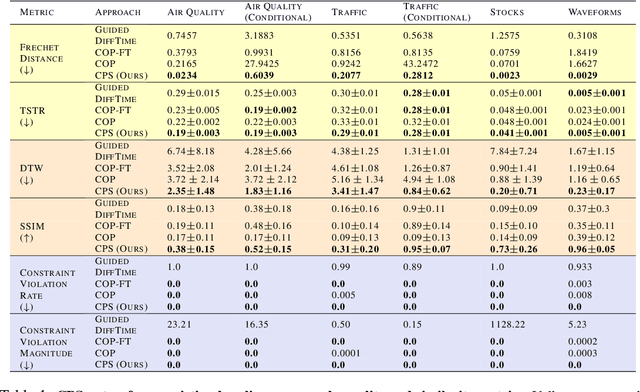

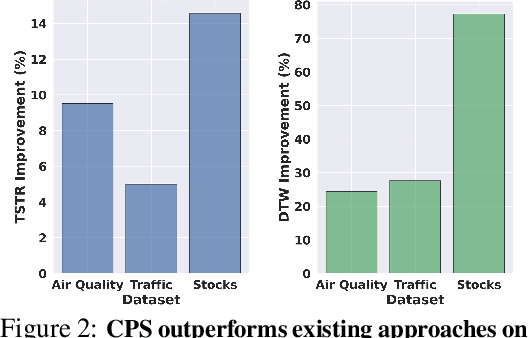

Constrained Posterior Sampling: Time Series Generation with Hard Constraints

Oct 16, 2024

Abstract: Generating realistic time series samples is crucial for stress-testing models and protecting user privacy by using synthetic data. In engineering and safety-critical applications, these samples must meet certain hard constraints that are domain-specific or naturally imposed by physics or nature. Consider, for example, generating electricity demand patterns with constraints on peak demand times. This can be used to stress-test the functioning of power grids during adverse weather conditions. Existing approaches for generating constrained time series are either not scalable or degrade sample quality. To address these challenges, we introduce Constrained Posterior Sampling (CPS), a diffusion-based sampling algorithm that aims to project the posterior mean estimate into the constraint set after each denoising update. Notably, CPS scales to a large number of constraints (~100) without requiring additional training. We provide theoretical justifications highlighting the impact of our projection step on sampling. Empirically, CPS outperforms state-of-the-art methods in sample quality and similarity to real time series by around 10% and 42%, respectively, on real-world stocks, traffic, and air quality datasets.
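The project-after-each-denoising-update idea can be illustrated with a toy loop. This is only a sketch under strong assumptions, not the CPS algorithm itself: `toy_posterior_mean` is a hypothetical stand-in for a trained denoiser, the constraint set is assumed to be a simple box (a cap on every value), and the re-noising schedule is invented for the example.

```python
import random
from typing import List


def project_to_box(x: List[float], lower: float, upper: float) -> List[float]:
    """Euclidean projection onto {x : lower <= x_i <= upper}, i.e. clipping."""
    return [min(max(v, lower), upper) for v in x]


def toy_posterior_mean(x_t: List[float], reference: List[float]) -> List[float]:
    """Hypothetical stand-in for a trained denoiser's clean-sample estimate."""
    return [0.5 * a + 0.5 * b for a, b in zip(x_t, reference)]


def constrained_sample(
    reference: List[float],
    lower: float,
    upper: float,
    num_steps: int = 50,
    seed: int = 0,
) -> List[float]:
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in reference]  # start from pure noise
    for step in reversed(range(num_steps)):
        x0_hat = toy_posterior_mean(x, reference)      # denoising update
        x0_hat = project_to_box(x0_hat, lower, upper)  # projection step
        noise_scale = step / num_steps                 # assumed schedule, 0 at the end
        x = [v + noise_scale * rng.gauss(0.0, 0.1) for v in x0_hat]
    return x


# Toy "demand" pattern whose unconstrained peak (1.6) violates the cap of 1.0.
demand = constrained_sample(reference=[0.2, 0.9, 1.6, 0.7], lower=0.0, upper=1.0)
```

Because the final iteration adds no noise, the returned sample is exactly the last projected estimate and therefore lies inside the box. No retraining of the denoiser is involved, which mirrors the training-free property claimed in the abstract.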

Time Weaver: A Conditional Time Series Generation Model

Mar 05, 2024

Abstract: Imagine generating a city's electricity demand pattern based on weather, the presence of an electric vehicle, and location, which could be used for capacity planning during a winter freeze. Such real-world time series are often enriched with paired heterogeneous contextual metadata (weather, location, etc.). Current approaches to time series generation often ignore this paired metadata, and its heterogeneity poses several practical challenges in adapting existing conditional generation approaches from the image, audio, and video domains to the time series domain. To address this gap, we introduce Time Weaver, a novel diffusion-based model that leverages the heterogeneous metadata in the form of categorical, continuous, and even time-variant variables to significantly improve time series generation. Additionally, we show that naive extensions of standard evaluation metrics from the image to the time series domain are insufficient. These metrics do not penalize conditional generation approaches for their poor specificity in reproducing the metadata-specific features in the generated time series. Thus, we propose a novel evaluation metric that accurately captures the specificity of conditional generation and the realism of the generated time series. We show that Time Weaver outperforms state-of-the-art benchmarks, such as Generative Adversarial Networks (GANs), by up to 27% in downstream classification tasks on real-world energy, medical, air quality, and traffic data sets.

Safe Networked Robotics via Formal Verification

Feb 22, 2023

Abstract: Autonomous robots must utilize rich sensory data to make safe control decisions. Often, compute-constrained robots require assistance from remote computation ("the cloud") if they need to invoke compute-intensive Deep Neural Network perception or control models. Likewise, a robot can be remotely teleoperated by a human during risky scenarios. However, this assistance comes at the cost of a time delay due to network latency, resulting in stale/delayed observations being used in the cloud to compute the control commands for the present robot state. Such communication delays could potentially lead to the violation of essential safety properties, such as collision avoidance. This paper develops methods to ensure the safety of teleoperated robots with stochastic latency. To do so, we use tools from formal verification to construct a shield (i.e., run-time monitor) that provides a list of safe actions for any delayed sensory observation, given the expected and worst-case network latency. Our shield is minimally intrusive and enables networked robots to satisfy key safety constraints, expressed as temporal logic specifications, with high probability. Our approach gracefully improves a teleoperated robot's safety vs. efficiency trade-off as a function of network latency, allowing us to quantify performance gains for WiFi or even future 5G networks. We demonstrate our approach on a real F1/10th autonomous vehicle that navigates in crowded indoor environments and transmits rich LiDAR sensory data over congested WiFi links.
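To make the notion of a shield that returns safe actions for a delayed observation more concrete, here is a deliberately simplified sketch. It swaps the paper's formal-verification construction for a plain worst-case distance check in one dimension; the function `safe_actions`, its parameters, and the numbers are hypothetical and chosen only for illustration.

```python
from typing import List


def safe_actions(
    delayed_distance: float,
    worst_case_delay: float,
    max_speed: float,
    candidate_speeds: List[float],
    dt: float,
    margin: float,
) -> List[float]:
    """Filter speed commands using a conservative bound on the current state.

    The observation is stale, so assume the robot kept approaching the
    obstacle at `max_speed` for the whole `worst_case_delay`. A candidate
    speed is kept only if, after one control step of length `dt`, the
    remaining distance is still at least `margin`.
    """
    worst_distance = delayed_distance - max_speed * worst_case_delay
    return [v for v in candidate_speeds if worst_distance - v * dt >= margin]


# Obstacle last seen 5 m ahead; observation may be up to 1 s old.
allowed = safe_actions(
    delayed_distance=5.0,
    worst_case_delay=1.0,
    max_speed=2.0,
    candidate_speeds=[0.0, 1.0, 2.0, 3.0],
    dt=1.0,
    margin=1.0,
)
```

With a smaller worst-case delay the same call admits more candidate speeds, which is a crude version of the safety vs. efficiency trade-off as a function of latency that the abstract describes.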
