Sadra Sabouri

Exploring the Challenges and Opportunities of AI-assisted Codebase Generation

Aug 11, 2025Abstract:Recent AI code assistants have significantly improved their ability to process more complex contexts and generate entire codebases based on a textual description, compared to the popular snippet-level generation. These codebase AI assistants (CBAs) can also extend or adapt codebases, allowing users to focus on higher-level design and deployment decisions. While prior work has extensively studied the impact of snippet-level code generation, this new class of codebase generation models is relatively unexplored. Despite initial anecdotal reports of excitement about these agents, they remain less frequently adopted compared to snippet-level code assistants. To utilize CBAs better, we need to understand how developers interact with CBAs, and how and why CBAs fall short of developers' needs. In this paper, we explored these gaps through a counterbalanced user study and interview with (n = 16) students and developers working on coding tasks with CBAs. We found that participants varied the information in their prompts, like problem description (48% of prompts), required functionality (98% of prompts), code structure (48% of prompts), and their prompt writing process. Despite various strategies, the overall satisfaction score with generated codebases remained low (mean = 2.8, median = 3, on a scale of one to five). Participants mentioned functionality as the most common factor for dissatisfaction (77% of instances), alongside poor code quality (42% of instances) and communication issues (25% of instances). We delve deeper into participants' dissatisfaction to identify six underlying challenges that participants faced when using CBAs, and extracted five barriers to incorporating CBAs into their workflows. Finally, we surveyed 21 commercial CBAs to compare their capabilities with participant challenges and present design opportunities for more efficient and useful CBAs.

ELI-Why: Evaluating the Pedagogical Utility of Language Model Explanations

Jun 17, 2025

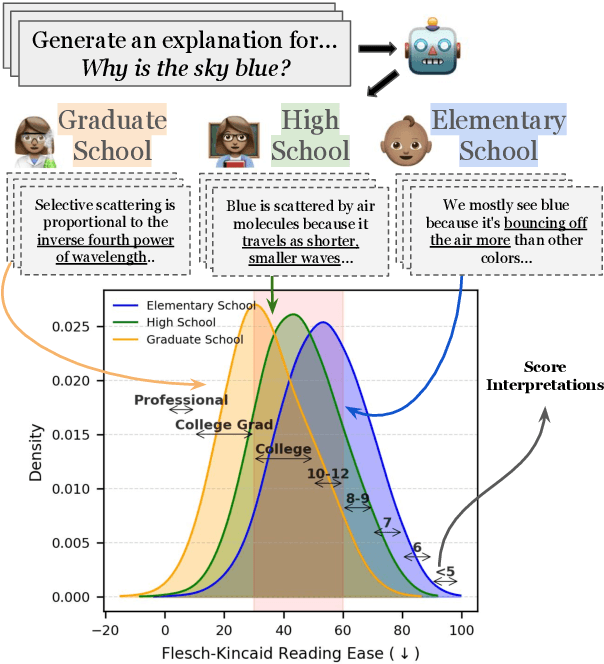

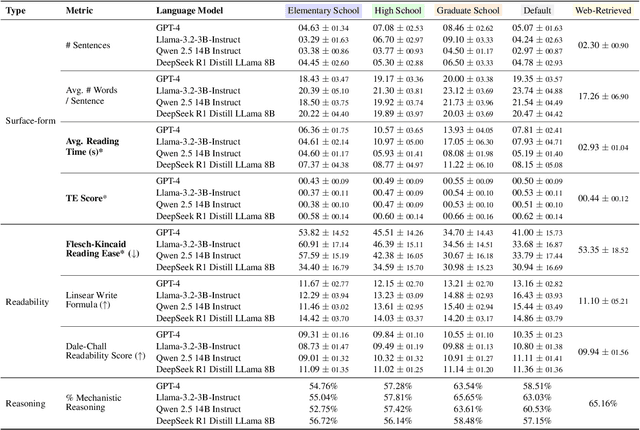

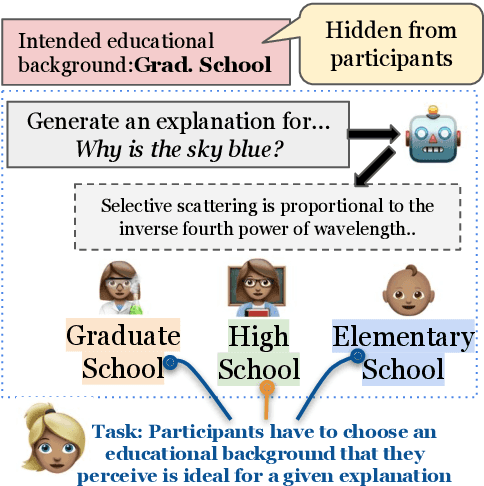

Abstract:Language models today are widely used in education, yet their ability to tailor responses for learners with varied informational needs and knowledge backgrounds remains under-explored. To this end, we introduce ELI-Why, a benchmark of 13.4K "Why" questions to evaluate the pedagogical capabilities of language models. We then conduct two extensive human studies to assess the utility of language model-generated explanatory answers (explanations) on our benchmark, tailored to three distinct educational grades: elementary, high-school and graduate school. In our first study, human raters assume the role of an "educator" to assess model explanations' fit to different educational grades. We find that GPT-4-generated explanations match their intended educational background only 50% of the time, compared to 79% for lay human-curated explanations. In our second study, human raters assume the role of a learner to assess if an explanation fits their own informational needs. Across all educational backgrounds, users deemed GPT-4-generated explanations 20% less suited on average to their informational needs, when compared to explanations curated by lay people. Additionally, automated evaluation metrics reveal that explanations generated across different language model families for different informational needs remain indistinguishable in their grade-level, limiting their pedagogical effectiveness.

PyMilo: A Python Library for ML I/O

Dec 31, 2024Abstract:PyMilo is an open-source Python package that addresses the limitations of existing Machine Learning (ML) model storage formats by providing a transparent, reliable, and safe method for exporting and deploying trained models. Current formats, such as pickle and other binary formats, have significant problems, such as reliability, safety, and transparency issues. In contrast, PyMilo serializes ML models in a transparent non-executable format, enabling straightforward and safe model exchange, while also facilitating the deserialization and deployment of exported models in production environments. This package aims to provide a seamless, end-to-end solution for the exportation and importation of pre-trained ML models, which simplifies the model development and deployment pipeline.

A Semidefinite Relaxation Approach for Fair Graph Clustering

Oct 19, 2024

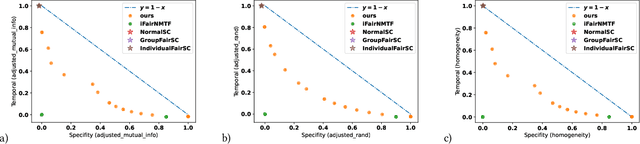

Abstract:Fair graph clustering is crucial for ensuring equitable representation and treatment of diverse communities in network analysis. Traditional methods often ignore disparities among social, economic, and demographic groups, perpetuating biased outcomes and reinforcing inequalities. This study introduces fair graph clustering within the framework of the disparate impact doctrine, treating it as a joint optimization problem integrating clustering quality and fairness constraints. Given the NP-hard nature of this problem, we employ a semidefinite relaxation approach to approximate the underlying optimization problem. For up to medium-sized graphs, we utilize a singular value decomposition-based algorithm, while for larger graphs, we propose a novel algorithm based on the alternative direction method of multipliers. Unlike existing methods, our formulation allows for tuning the trade-off between clustering quality and fairness. Experimental results on graphs generated from the standard stochastic block model demonstrate the superiority of our approach in achieving an optimal accuracy-fairness trade-off compared to state-of-the-art methods.

naab: A ready-to-use plug-and-play corpus for Farsi

Aug 29, 2022

Abstract:Huge corpora of textual data are always known to be a crucial need for training deep models such as transformer-based ones. This issue is emerging more in lower resource languages - like Farsi. We propose naab, the biggest cleaned and ready-to-use open-source textual corpus in Farsi. It contains about 130GB of data, 250 million paragraphs, and 15 billion words. The project name is derived from the Farsi word NAAB K which means pure and high grade. We also provide the raw version of the corpus called naab-raw and an easy-to-use preprocessor that can be employed by those who wanted to make a customized corpus.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge