Ryan Sheatsley

On the Robustness Tradeoff in Fine-Tuning

Mar 19, 2025

Abstract:Fine-tuning has become the standard practice for adapting pre-trained (upstream) models to downstream tasks. However, the impact on model robustness is not well understood. In this work, we characterize the robustness-accuracy trade-off in fine-tuning. We evaluate the robustness and accuracy of fine-tuned models over 6 benchmark datasets and 7 different fine-tuning strategies. We observe a consistent trade-off between adversarial robustness and accuracy. Peripheral updates such as BitFit are more effective for simple tasks--over 75% above the average measured with area under the Pareto frontiers on CIFAR-10 and CIFAR-100. In contrast, fine-tuning information-heavy layers, such as attention layers via Compacter, achieves a better Pareto frontier on more complex tasks--57.5% and 34.6% above the average on Caltech-256 and CUB-200, respectively. Lastly, we observe that robustness of fine-tuning against out-of-distribution data closely tracks accuracy. These insights emphasize the need for robustness-aware fine-tuning to ensure reliable real-world deployments.
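The "area under the Pareto frontier" measure mentioned in this abstract can be illustrated with a minimal sketch. The abstract does not give the exact definition, so the staircase area relative to the origin used here is an assumption, and the function names `pareto_frontier` and `area_under_frontier` are hypothetical. Each point is an (accuracy, robustness) pair for one fine-tuning checkpoint.

```python
from typing import List, Tuple

# One (accuracy, robustness) measurement, both assumed to lie in [0, 1].
Point = Tuple[float, float]


def pareto_frontier(points: List[Point]) -> List[Point]:
    """Return the points that no other point beats on both axes."""
    frontier: List[Point] = []
    best_robustness = float("-inf")
    # Sweep from highest accuracy to lowest. A point stays only if it is
    # strictly more robust than every point with accuracy at least as high.
    for acc, rob in sorted(points, key=lambda p: (-p[0], -p[1])):
        if rob > best_robustness:
            frontier.append((acc, rob))
            best_robustness = rob
    return sorted(frontier)  # ascending accuracy, descending robustness


def area_under_frontier(frontier: List[Point]) -> float:
    """Staircase area dominated by the frontier, measured from (0, 0).

    Assumption: this is one common way to summarize a frontier; the
    paper may use a different reference point or interpolation.
    """
    area = 0.0
    prev_acc = 0.0
    for acc, rob in sorted(frontier):
        # Between prev_acc and acc, the best attainable robustness is rob.
        area += (acc - prev_acc) * rob
        prev_acc = acc
    return area
```

Under this definition, a larger area means a strategy offers better robustness at every accuracy level it can reach, which is how two fine-tuning strategies could be compared on the same dataset.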

Adversarial Agents: Black-Box Evasion Attacks with Reinforcement Learning

Mar 03, 2025

Abstract:Reinforcement learning (RL) offers powerful techniques for solving complex sequential decision-making tasks from experience. In this paper, we demonstrate how RL can be applied to adversarial machine learning (AML) to develop a new class of attacks that learn to generate adversarial examples: inputs designed to fool machine learning models. Unlike traditional AML methods that craft adversarial examples independently, our RL-based approach retains and exploits past attack experience to improve future attacks. We formulate adversarial example generation as a Markov Decision Process and evaluate RL's ability to (a) learn effective and efficient attack strategies and (b) compete with state-of-the-art AML. On CIFAR-10, our agent increases the success rate of adversarial examples by 19.4% and decreases the median number of victim model queries per adversarial example by 53.2% from the start to the end of training. In a head-to-head comparison with a state-of-the-art image attack, SquareAttack, our approach enables an adversary to generate adversarial examples with 13.1% more success after 5000 episodes of training. From a security perspective, this work demonstrates a powerful new attack vector that uses RL to attack ML models efficiently and at scale.

Targeting Alignment: Extracting Safety Classifiers of Aligned LLMs

Jan 27, 2025

Abstract:Alignment in large language models (LLMs) is used to enforce guidelines such as safety. Yet, alignment fails in the face of jailbreak attacks that modify inputs to induce unsafe outputs. In this paper, we present and evaluate a method to assess the robustness of LLM alignment. We observe that alignment embeds a safety classifier in the target model that is responsible for deciding between refusal and compliance. We seek to extract an approximation of this classifier, called a surrogate classifier, from the LLM. We develop an algorithm for identifying candidate classifiers from subsets of the LLM model. We evaluate the degree to which the candidate classifiers approximate the model's embedded classifier in benign (F1 score) and adversarial (using surrogates in a white-box attack) settings. Our evaluation shows that the best candidates achieve accurate agreement (an F1 score above 80%) using as little as 20% of the model architecture. Further, we find attacks mounted on the surrogate models can be transferred with high accuracy. For example, a surrogate using only 50% of the Llama 2 model achieved an attack success rate (ASR) of 70%, a substantial improvement over attacking the LLM directly, where we only observed a 22% ASR. These results show that extracting surrogate classifiers is a viable (and highly effective) means for modeling (and therein addressing) the vulnerability of aligned models to jailbreaking attacks.

Err on the Side of Texture: Texture Bias on Real Data

Dec 13, 2024

Abstract:Bias significantly undermines both the accuracy and trustworthiness of machine learning models. To date, one of the strongest biases observed in image classification models is texture bias, where models overly rely on texture information rather than shape information. Yet, existing approaches for measuring and mitigating texture bias have not been able to capture how textures impact model robustness in real-world settings. In this work, we introduce the Texture Association Value (TAV), a novel metric that quantifies how strongly models rely on the presence of specific textures when classifying objects. Leveraging TAV, we demonstrate that model accuracy and robustness are heavily influenced by texture. Our results show that texture bias explains the existence of natural adversarial examples, where over 90% of these samples contain textures that are misaligned with the learned texture of their true label, resulting in confident mispredictions.

The Space of Adversarial Strategies

Sep 09, 2022

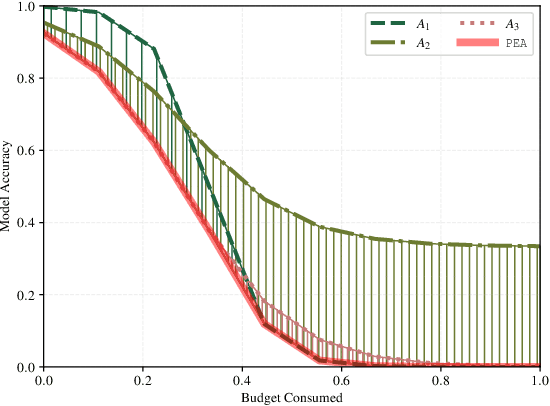

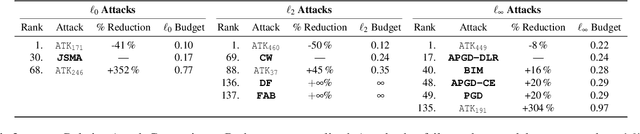

Abstract:Adversarial examples, inputs designed to induce worst-case behavior in machine learning models, have been extensively studied over the past decade. Yet, our understanding of this phenomenon stems from a rather fragmented pool of knowledge; at present, there are a handful of attacks, each with disparate assumptions in threat models and incomparable definitions of optimality. In this paper, we propose a systematic approach to characterize worst-case (i.e., optimal) adversaries. We first introduce an extensible decomposition of attacks in adversarial machine learning by atomizing attack components into surfaces and travelers. With our decomposition, we enumerate over components to create 576 attacks (568 of which were previously unexplored). Next, we propose the Pareto Ensemble Attack (PEA): a theoretical attack that upper-bounds attack performance. With our new attacks, we measure performance relative to the PEA on both robust and non-robust models, seven datasets, and three extended $\ell_p$-based threat models incorporating compute costs, formalizing the Space of Adversarial Strategies. From our evaluation, we find attack performance to be highly contextual: the domain, model robustness, and threat model can have a profound influence on attack efficacy. Our investigation suggests that future studies measuring the security of machine learning should: (1) be contextualized to the domain and threat models, and (2) go beyond the handful of known attacks used today.

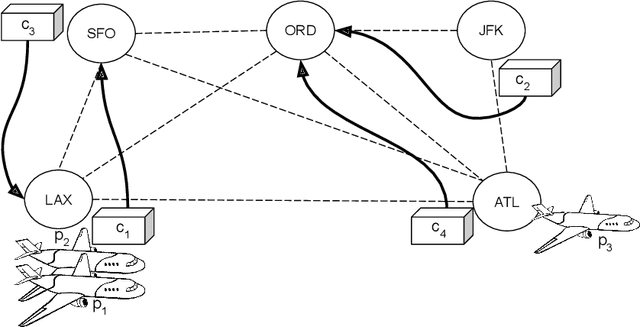

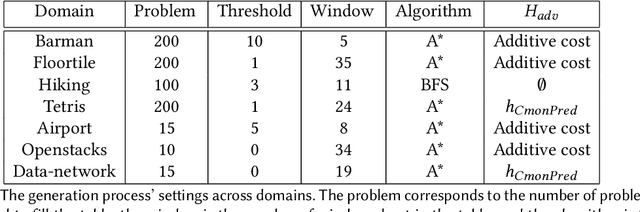

Adversarial Planning

May 01, 2022

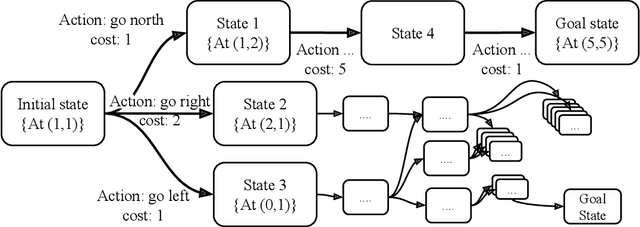

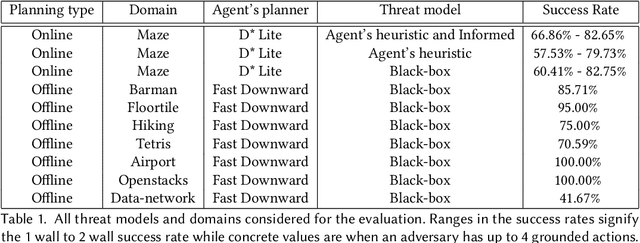

Abstract:Planning algorithms are used in computational systems to direct autonomous behavior. In a canonical application, for example, planning for autonomous vehicles is used to automate the static or continuous planning towards performance, resource management, or functional goals (e.g., arriving at the destination, managing fuel consumption). Existing planning algorithms assume non-adversarial settings; a least-cost plan is developed based on available environmental information (i.e., the input instance). Yet, it is unclear how such algorithms will perform in the face of adversaries attempting to thwart the planner. In this paper, we explore the security of planning algorithms used in cyber- and cyber-physical systems. We present two $\textit{adversarial planning}$ algorithms, one static and one adaptive, that perturb input planning instances to maximize cost (often substantially so). We evaluate the performance of the algorithms against two dominant planning algorithms used in commercial applications (D* Lite and Fast Downward) and show both are vulnerable to extremely limited adversarial action. Here, experiments show that an adversary is able to increase plan costs in 66.9% of instances by removing only a single action from the action space (D* Lite) and render 70% of instances from an international planning competition unsolvable by removing only three actions (Fast Forward). Finally, we show that finding an optimal perturbation in any search-based planning system is NP-hard.
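The idea of perturbing a planning instance by removing one action can be sketched on a toy problem. This is not the paper's static or adaptive algorithm: it assumes a planner that is plain Dijkstra search over a weighted graph, treats each edge as one "action", and brute-forces every single removal. The names `plan_cost` and `worst_single_removal` are hypothetical.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Edge = Tuple[str, str]  # (from_state, to_state), one available action


def plan_cost(edges: Dict[Edge, float], start: str, goal: str) -> float:
    """Least-cost plan from start to goal via Dijkstra; inf if unsolvable."""
    adjacency: Dict[str, List[Tuple[str, float]]] = {}
    for (u, v), cost in edges.items():
        adjacency.setdefault(u, []).append((v, cost))
    dist = {start: 0.0}
    heap = [(0.0, start)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == goal:
            return d
        if d > dist.get(u, math.inf):
            continue  # stale queue entry
        for v, cost in adjacency.get(u, []):
            nd = d + cost
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return math.inf


def worst_single_removal(
    edges: Dict[Edge, float], start: str, goal: str
) -> Tuple[Optional[Edge], float]:
    """Try removing each action once; return the removal that raises the
    plan cost the most (None if no single removal increases the cost)."""
    best_edge: Optional[Edge] = None
    best_cost = plan_cost(edges, start, goal)
    for removed in edges:
        remaining = {e: c for e, c in edges.items() if e != removed}
        cost = plan_cost(remaining, start, goal)
        if cost > best_cost:
            best_edge, best_cost = removed, cost
    return best_edge, best_cost
```

Exhaustive search is only workable here because a single removal is considered; with a budget of several removals the number of candidate sets grows combinatorially, which is consistent with the hardness result stated in the abstract.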

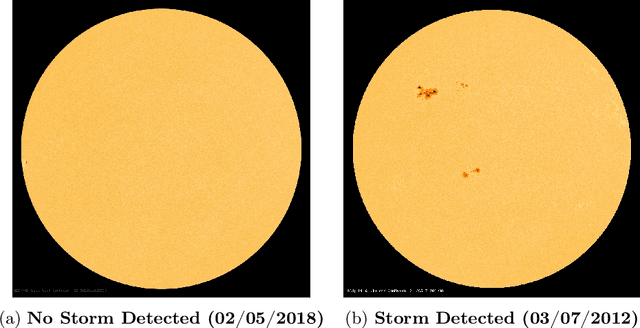

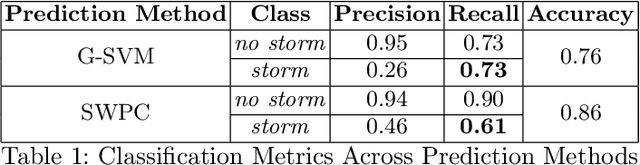

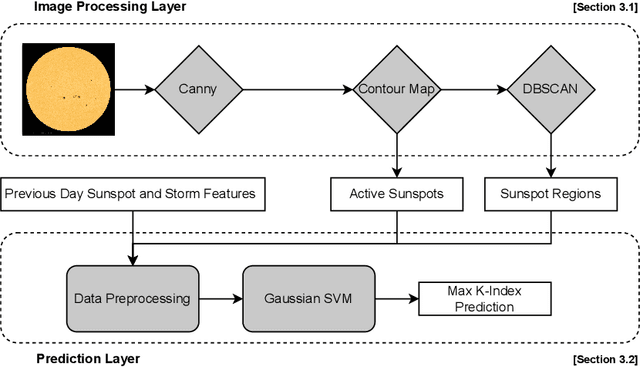

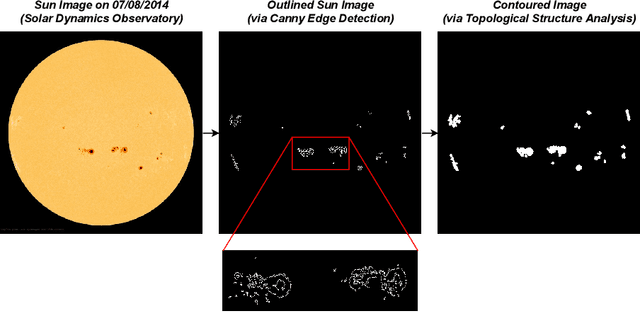

A Machine Learning and Computer Vision Approach to Geomagnetic Storm Forecasting

Apr 04, 2022

Abstract:Geomagnetic storms, disturbances of Earth's magnetosphere caused by masses of charged particles being emitted from the Sun, are an uncontrollable threat to modern technology. Notably, they have the potential to damage satellites and cause instability in power grids on Earth, among other disasters. They result from high solar activity, which is induced by cool areas on the Sun known as sunspots. Forecasting the storms to prevent disasters requires an understanding of how and when they will occur. However, current prediction methods at the National Oceanic and Atmospheric Administration (NOAA) are limited in that they depend on expensive solar wind spacecraft and a global-scale magnetometer sensor network. In this paper, we introduce a novel machine learning and computer vision approach to accurately forecast geomagnetic storms without the need for such costly physical measurements. Our approach extracts features from images of the Sun to establish correlations between sunspots and geomagnetic storm classification and is competitive with NOAA's predictions. Indeed, our prediction achieves a 76% storm classification accuracy. This paper serves as an existence proof that machine learning and computer vision techniques provide an effective means for augmenting and improving existing geomagnetic storm forecasting methods.

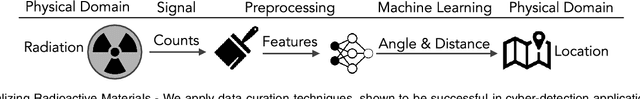

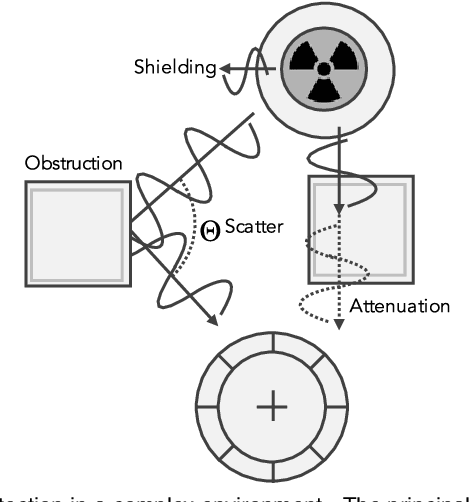

Improving Radioactive Material Localization by Leveraging Cyber-Security Model Optimizations

Feb 21, 2022

Abstract:One of the principal uses of physical-space sensors in public safety applications is the detection of unsafe conditions (e.g., release of poisonous gases, weapons in airports, tainted food). However, current detection methods in these applications are often costly, slow to use, and can be inaccurate in complex, changing, or new environments. In this paper, we explore how machine learning methods used successfully in cyber domains, such as malware detection, can be leveraged to substantially enhance physical space detection. We focus on one important exemplar application--the detection and localization of radioactive materials. We show that the ML-based approaches can significantly exceed traditional table-based approaches in predicting angular direction. Moreover, the developed models can be expanded to include approximations of the distance to radioactive material (a critical dimension that reference tables used in practice do not capture). With four and eight detector arrays, we collect counts of gamma-rays as features for a suite of machine learning models to localize radioactive material. We explore seven unique scenarios via simulation frameworks frequently used for radiation detection and with physical experiments using radioactive material in laboratory environments. We observe that our approach can outperform the standard table-based method, reducing the angular error by 37% and reliably predicting distance within 2.4%. In this way, we show that advances in cyber-detection provide substantial opportunities for enhancing detection in public safety applications and beyond.

HoneyModels: Machine Learning Honeypots

Feb 21, 2022

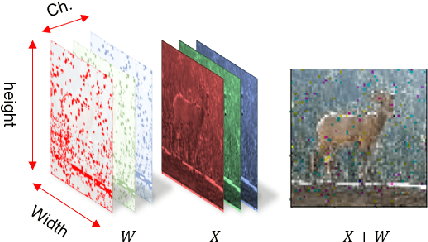

Abstract:Machine Learning is becoming a pivotal aspect of many systems today, offering newfound performance on classification and prediction tasks, but this rapid integration also comes with new unforeseen vulnerabilities. To harden these systems, the ever-growing field of Adversarial Machine Learning has proposed new attack and defense mechanisms. However, a great asymmetry exists, as these defensive methods can only provide security to certain models and lack scalability, computational efficiency, and practicality due to overly restrictive constraints. Moreover, newly introduced attacks can easily bypass defensive strategies by making subtle alterations. In this paper, we study an alternate approach inspired by honeypots to detect adversaries. Our approach yields learned models with an embedded watermark. When an adversary initiates an interaction with our model, attacks are encouraged to add this predetermined watermark, stimulating detection of adversarial examples. We show that HoneyModels can reveal 69.5% of adversaries attempting to attack a Neural Network while preserving the original functionality of the model. HoneyModels offer an alternate direction to secure Machine Learning that slightly affects the accuracy while encouraging the creation of watermarked adversarial samples detectable by the HoneyModel but indistinguishable from others for the adversary.

On the Robustness of Domain Constraints

May 18, 2021

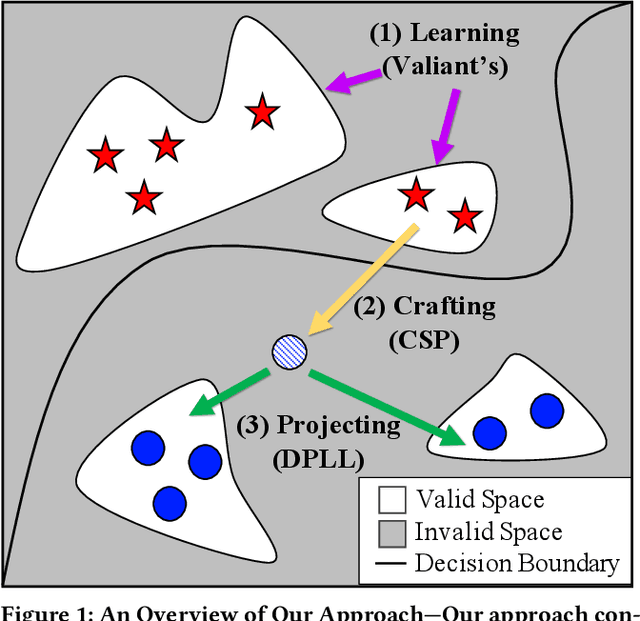

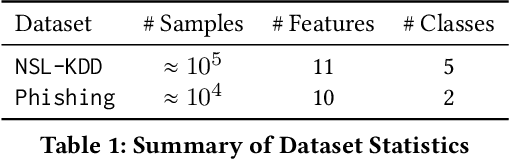

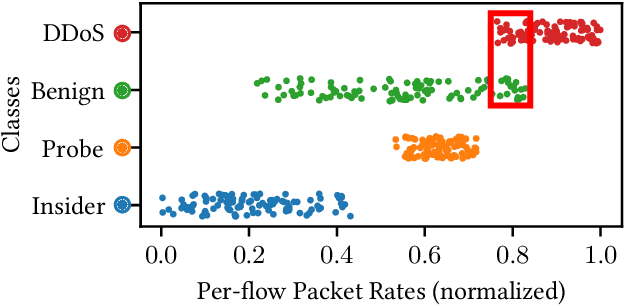

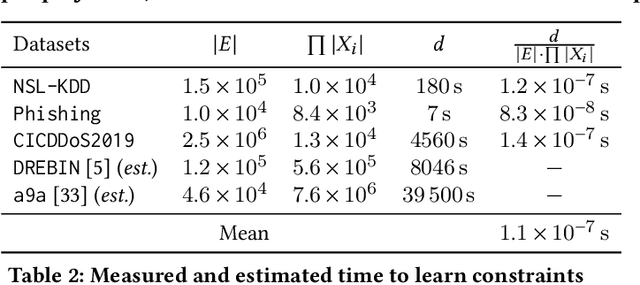

Abstract:Machine learning is vulnerable to adversarial examples: inputs designed to cause models to perform poorly. However, it is unclear if adversarial examples represent realistic inputs in the modeled domains. Diverse domains such as networks and phishing have domain constraints: complex relationships between features that an adversary must satisfy for an attack to be realized (in addition to any adversary-specific goals). In this paper, we explore how domain constraints limit adversarial capabilities and how adversaries can adapt their strategies to create realistic (constraint-compliant) examples. To this end, we develop techniques to learn domain constraints from data, and show how the learned constraints can be integrated into the adversarial crafting process. We evaluate the efficacy of our approach in network intrusion and phishing datasets and find: (1) up to 82% of adversarial examples produced by state-of-the-art crafting algorithms violate domain constraints, and (2) domain constraints are robust to adversarial examples; enforcing constraints yields an increase in model accuracy by up to 34%. We observe not only that adversaries must alter inputs to satisfy domain constraints, but that these constraints make the generation of valid adversarial examples far more challenging.
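A small sketch can make the notion of a constraint-violating adversarial example concrete. The paper learns constraints from data; the two hand-written rules below are purely hypothetical stand-ins for a network-flow domain, as are the feature names (`is_udp`, `tcp_flags`, `bytes`, `packets`) and the helper names `violates` and `violation_rate`.

```python
from typing import Callable, Dict, List

Sample = Dict[str, float]             # one feature vector, keyed by name
Constraint = Callable[[Sample], bool]  # returns True when satisfied

# Hypothetical, hand-written constraints (the paper learns them from data).
CONSTRAINTS: List[Constraint] = [
    # A UDP flow cannot carry TCP flags.
    lambda x: not (x["is_udp"] == 1 and x["tcp_flags"] != 0),
    # Every packet carries at least one byte.
    lambda x: x["bytes"] >= x["packets"],
]


def violates(sample: Sample, constraints: List[Constraint]) -> bool:
    """True if the sample breaks at least one constraint."""
    return not all(check(sample) for check in constraints)


def violation_rate(samples: List[Sample], constraints: List[Constraint]) -> float:
    """Fraction of samples that are not realizable in the domain."""
    if not samples:
        return 0.0
    return sum(violates(s, constraints) for s in samples) / len(samples)
```

Applied to the output of a crafting algorithm, a rate like this is the kind of quantity behind the "up to 82%" figure: an adversarial example that flips the model's prediction but fails a check could not occur as a real input.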
