Ruiyan Wang

PoseAnything: Universal Pose-guided Video Generation with Part-aware Temporal Coherence

Dec 15, 2025

Abstract: Pose-guided video generation refers to controlling the motion of subjects in generated video through a sequence of poses. It enables precise control over subject motion and has important applications in animation. However, current pose-guided video generation methods are limited to accepting only human poses as input, thus generalizing poorly to poses of other subjects. To address this issue, we propose PoseAnything, the first universal pose-guided video generation framework capable of handling both human and non-human characters, supporting arbitrary skeletal inputs. To enhance consistency preservation during motion, we introduce the Part-aware Temporal Coherence Module, which divides the subject into different parts, establishes part correspondences, and computes cross-attention between corresponding parts across frames to achieve fine-grained part-level consistency. Additionally, we propose Subject and Camera Motion Decoupled CFG, a novel guidance strategy that, for the first time, enables independent camera movement control in pose-guided video generation by separately injecting subject and camera motion control information into the positive and negative anchors of CFG. Furthermore, we present XPose, a high-quality public dataset containing 50,000 non-human pose-video pairs, along with an automated pipeline for annotation and filtering. Extensive experiments demonstrate that PoseAnything significantly outperforms state-of-the-art methods in both effectiveness and generalization.

PA-HOI: A Physics-Aware Human and Object Interaction Dataset

Aug 08, 2025

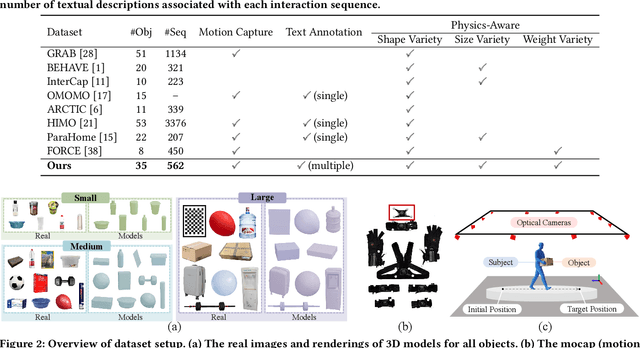

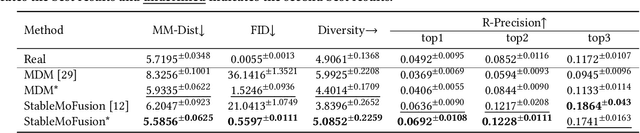

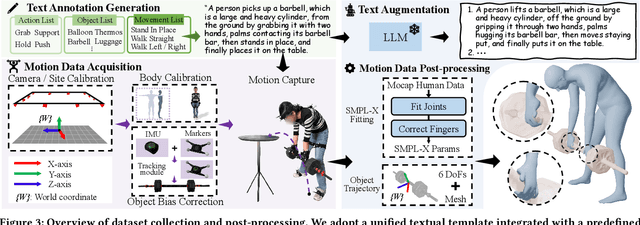

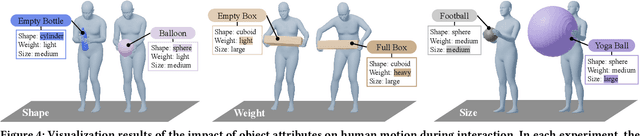

Abstract: The Human-Object Interaction (HOI) task explores the dynamic interactions between humans and objects in physical environments, providing essential biomechanical and cognitive-behavioral foundations for fields such as robotics, virtual reality, and human-computer interaction. However, existing HOI datasets focus on details of affordance, often neglecting the influence of physical properties of objects on human long-term motion. To bridge this gap, we introduce the PA-HOI Motion Capture dataset, which highlights the impact of objects' physical attributes on human motion dynamics, including human posture, moving velocity, and other motion characteristics. The dataset comprises 562 motion sequences of human-object interactions, with each sequence performed by subjects of different genders interacting with 35 3D objects that vary in size, shape, and weight. This dataset stands out by significantly extending the scope of existing ones for understanding how the physical attributes of different objects influence human posture, speed, motion scale, and interaction strategies. We further demonstrate the applicability of the PA-HOI dataset by integrating it with existing motion generation methods, validating its capacity to transfer realistic physical awareness.

Balancing the Causal Effects in Class-Incremental Learning

Feb 15, 2024

Abstract: Class-Incremental Learning (CIL) is a practical and challenging problem for achieving general artificial intelligence. Recently, Pre-Trained Models (PTMs) have led to breakthroughs in both visual and natural language processing tasks. Despite recent studies showing PTMs' potential ability to learn sequentially, a plethora of work indicates the necessity of alleviating the catastrophic forgetting of PTMs. Through a pilot study and a causal analysis of CIL, we reveal that the crux lies in the imbalanced causal effects between new and old data. Specifically, the new data encourage models to adapt to new classes while hindering the adaptation of old classes. Similarly, the old data encourage models to adapt to old classes while hindering the adaptation of new classes. In other words, the adaptation process between new and old classes conflicts from the causal perspective. To alleviate this problem, we propose Balancing the Causal Effects (BaCE) in CIL. Concretely, BaCE proposes two objectives for building causal paths from both new and old data to the prediction of new and old classes, respectively. In this way, the model is encouraged to adapt to all classes with causal effects from both new and old data and thus alleviates the causal imbalance problem. We conduct extensive experiments on continual image classification, continual text classification, and continual named entity recognition. Empirical results show that BaCE outperforms a series of CIL methods on different tasks and settings.
