Ruisheng Wang

BuildingWorld: A Structured 3D Building Dataset for Urban Foundation Models

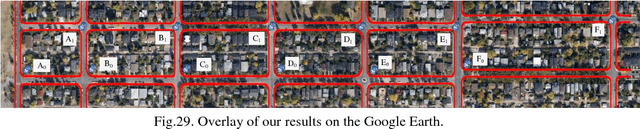

Nov 09, 2025

Abstract: As digital twins become central to the transformation of modern cities, accurate and structured 3D building models emerge as a key enabler of high-fidelity, updatable urban representations. These models underpin diverse applications including energy modeling, urban planning, autonomous navigation, and real-time reasoning. Despite recent advances in 3D urban modeling, most learning-based models are trained on building datasets with limited architectural diversity, which significantly undermines their generalizability across heterogeneous urban environments. To address this limitation, we present BuildingWorld, a comprehensive and structured 3D building dataset designed to bridge the gap in stylistic diversity. It encompasses buildings from geographically and architecturally diverse regions -- including North America, Europe, Asia, Africa, and Oceania -- offering a globally representative dataset for urban-scale foundation modeling and analysis. Specifically, BuildingWorld provides about five million LOD2 building models collected from diverse sources, accompanied by real and simulated airborne LiDAR point clouds. This enables comprehensive research on 3D building reconstruction, detection and segmentation. Cyber City, a virtual city model, is introduced to enable the generation of unlimited training data with customized and structurally diverse point cloud distributions. Furthermore, we provide standardized evaluation metrics tailored for building reconstruction, aiming to facilitate the training, evaluation, and comparison of large-scale vision models and foundation models in structured 3D urban environments.

APC2Mesh: Bridging the gap from occluded building façades to full 3D models

Apr 03, 2024

Abstract: The benefits of having digital twins of urban buildings are numerous. However, a major difficulty in creating them from airborne LiDAR point clouds is accurately reconstructing significant occlusions amid point density variations and noise. To bridge the noise/sparsity/occlusion gap and generate high-fidelity 3D building models, we propose APC2Mesh, which integrates point completion into a 3D reconstruction pipeline, enabling the learning of dense, geometrically accurate representations of buildings. Specifically, we use complete points generated from occluded ones as input to a linearized skip attention-based deformation network for 3D mesh reconstruction. In our experiments, conducted on three different scenes, we demonstrate that: (1) APC2Mesh delivers comparatively superior results, indicating its efficacy in handling the challenges of occluded airborne building points of diverse styles and complexities. (2) The combination of point completion with typical deep learning-based 3D point cloud reconstruction methods offers a direct and effective solution for reconstructing significantly occluded airborne building points. As such, this neural integration holds promise for advancing the creation of digital twins for urban buildings with greater accuracy and fidelity.

PBWR: Parametric Building Wireframe Reconstruction from Aerial LiDAR Point Clouds

Nov 18, 2023

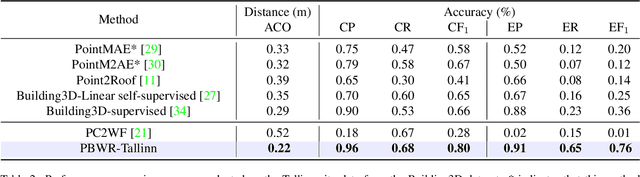

Abstract: In this paper, we present an end-to-end 3D building wireframe reconstruction method to regress edges directly from aerial LiDAR point clouds. Our method, named Parametric Building Wireframe Reconstruction (PBWR), takes aerial LiDAR point clouds and initial edge entities as input, and makes full use of the self-attention mechanism of transformers to regress edge parameters without any intermediate steps such as corner prediction. We propose an edge non-maximum suppression (E-NMS) module based on edge similarity to remove redundant edges. Additionally, a dedicated edge loss function is used to guide PBWR in regressing edge parameters, because an edge distance loss alone is not suitable. In our experiments, we demonstrate state-of-the-art results on the Building3D dataset, achieving an improvement of approximately 36% in edge accuracy on the entry-level dataset and an improvement of around 42% on the Tallinn dataset.
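The abstract above mentions edge non-maximum suppression based on edge similarity but does not define the similarity measure. The following is a minimal sketch of the general idea only, not PBWR's actual module: it assumes each edge is a pair of 3D endpoints with a confidence score, and it uses a hypothetical similarity, the mean endpoint distance under the better of the two endpoint pairings. The names `endpoint_distance`, `edge_nms`, and `dist_thresh` are illustrative.

```python
import math


def endpoint_distance(e1, e2):
    """Mean endpoint distance between two edges, each given as (p, q).

    Assumption: edges are undirected, so both endpoint pairings are tried
    and the smaller total is used.
    """
    (a, b), (c, d) = e1, e2
    same = math.dist(a, c) + math.dist(b, d)
    flipped = math.dist(a, d) + math.dist(b, c)
    return min(same, flipped) / 2.0


def edge_nms(edges, scores, dist_thresh):
    """Greedy NMS: visit edges by descending score and keep an edge only if
    it is farther than dist_thresh from every edge already kept.

    Returns the indices of the kept edges, highest score first.
    """
    order = sorted(range(len(edges)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        if all(endpoint_distance(edges[i], edges[j]) > dist_thresh for j in kept):
            kept.append(i)
    return kept


# Hypothetical predictions: edge 1 is a near-duplicate of edge 0 (reversed).
edges = [
    ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)),
    ((1.02, 0.0, 0.0), (0.0, 0.01, 0.0)),
    ((0.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
]
scores = [0.9, 0.8, 0.7]
kept = edge_nms(edges, scores, dist_thresh=0.1)
print(kept)
```

With these made-up inputs, the lower-scored near-duplicate is suppressed and the two distinct edges survive. The threshold value is arbitrary and would need tuning to the point cloud's scale.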

A Multimodal Learning Framework for Comprehensive 3D Mineral Prospectivity Modeling with Jointly Learned Structure-Fluid Relationships

Sep 06, 2023

Abstract: This study presents a novel multimodal fusion model for three-dimensional mineral prospectivity mapping (3D MPM), effectively integrating structural and fluid information through a deep network architecture. Leveraging Convolutional Neural Networks (CNN) and Multilayer Perceptrons (MLP), the model employs canonical correlation analysis (CCA) to align and fuse multimodal features. Rigorous evaluation on the Jiaojia gold deposit dataset demonstrates the model's superior performance in distinguishing ore-bearing instances and predicting mineral prospectivity, outperforming other models in result analyses. Ablation studies further reveal the benefits of joint feature utilization and CCA incorporation. This research not only advances mineral prospectivity modeling but also highlights the pivotal role of data integration and feature alignment for enhanced exploration decision-making.

Building3D: An Urban-Scale Dataset and Benchmarks for Learning Roof Structures from Point Clouds

Jul 21, 2023

Abstract: Urban modeling from LiDAR point clouds is an important topic in computer vision, computer graphics, photogrammetry and remote sensing. 3D city models have found a wide range of applications in smart cities, autonomous navigation, urban planning, mapping, etc. However, existing datasets for 3D modeling mainly focus on common objects such as furniture or cars. The lack of building datasets has become a major obstacle to applying deep learning technology to specific domains such as urban modeling. In this paper, we present an urban-scale dataset consisting of more than 160 thousand buildings along with corresponding point clouds, mesh and wireframe models, covering 16 cities in Estonia over about 998 km². We extensively evaluate the performance of state-of-the-art algorithms, including handcrafted and deep feature based methods. Experimental results indicate that Building3D poses challenges of high intra-class variance, data imbalance and large-scale noise. Building3D is the first and largest urban-scale building modeling benchmark, allowing a comparison of supervised and self-supervised learning methods. We believe that Building3D will facilitate future research on urban modeling, aerial path planning, mesh simplification, semantic/part segmentation, etc.

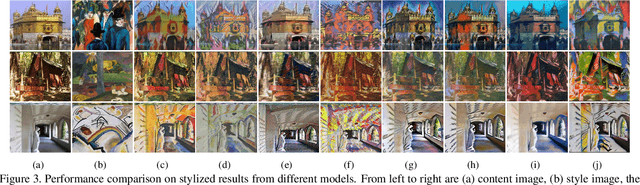

DRB-GAN: A Dynamic ResBlock Generative Adversarial Network for Artistic Style Transfer

Aug 19, 2021

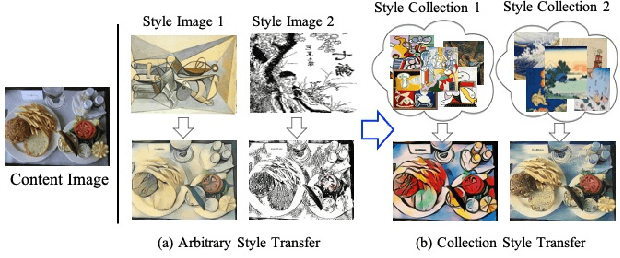

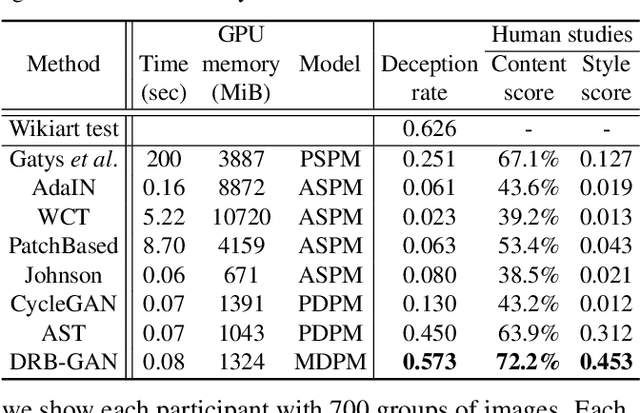

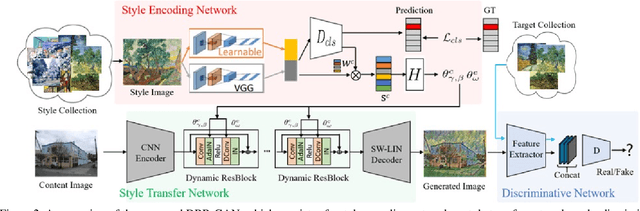

Abstract: The paper proposes a Dynamic ResBlock Generative Adversarial Network (DRB-GAN) for artistic style transfer. The style code is modeled as the shared parameters for Dynamic ResBlocks connecting both the style encoding network and the style transfer network. In the style encoding network, a style class-aware attention mechanism is used to attend to the style feature representation for generating the style codes. In the style transfer network, multiple Dynamic ResBlocks are designed to integrate the style code and the extracted CNN semantic feature and then feed them into the spatial window Layer-Instance Normalization (SW-LIN) decoder, which enables high-quality synthetic images with artistic style transfer. Moreover, the style collection conditional discriminator is designed to equip our DRB-GAN model with abilities for both arbitrary style transfer and collection style transfer during the training stage. For both arbitrary style transfer and collection style transfer, extensive experiments demonstrate that our proposed DRB-GAN outperforms state-of-the-art methods in terms of visual quality and efficiency. Our source code is available at https://github.com/xuwenju123/DRB-GAN.

An optimal hierarchical clustering approach to segmentation of mobile LiDAR point clouds

Nov 09, 2017

Abstract: This paper proposes a hierarchical clustering approach for the segmentation of mobile LiDAR point clouds. We perform the hierarchical clustering on unorganized point clouds based on a proximity matrix. The dissimilarity measure in the proximity matrix is calculated from the Euclidean distances between clusters and the difference of normal vectors at given points. The main contribution of this paper is that we optimize the combination of clusters in the hierarchical clustering. The combination is obtained by matching a bipartite graph, and optimized by solving the minimum-cost perfect matching. Results show that the proposed optimal hierarchical clustering (OHC) segments multiple individual objects automatically and outperforms the state-of-the-art LiDAR point cloud segmentation approaches.
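To make the matching step in the abstract above concrete, here is a small sketch under stated assumptions. The abstract does not give the exact dissimilarity formula, how the bipartite graph is built from the clusters, or which solver is used. This example therefore assumes a hypothetical dissimilarity (centroid distance plus a weighted angle between unit normals, with an illustrative weight `w_normal`) and finds the minimum-cost perfect matching by brute-force enumeration, which is only practical for a handful of clusters.

```python
import itertools
import math


def dissimilarity(c1, c2, w_normal=1.0):
    """Hypothetical dissimilarity between two clusters.

    Each cluster is (centroid, unit_normal). The measure adds the Euclidean
    centroid distance to the weighted angle between the normals.
    """
    (p1, n1), (p2, n2) = c1, c2
    dot = sum(a * b for a, b in zip(n1, n2))
    # abs() treats opposite-facing normals as parallel; min() guards acos.
    angle = math.acos(min(1.0, abs(dot)))
    return math.dist(p1, p2) + w_normal * angle


def min_cost_perfect_matching(cost):
    """Brute-force minimum-cost perfect matching on a square cost matrix.

    Returns (assignment, total), where assignment[i] is the column matched
    to row i. Runs in O(n!) time, so it is for illustration only.
    """
    n = len(cost)
    best_perm, best_total = None, math.inf
    for perm in itertools.permutations(range(n)):
        total = sum(cost[i][perm[i]] for i in range(n))
        if total < best_total:
            best_perm, best_total = perm, total
    return list(best_perm), best_total


# Two hypothetical sets of clusters forming the two sides of the graph.
left = [((0.0, 0.0, 0.0), (0.0, 0.0, 1.0)), ((5.0, 0.0, 0.0), (1.0, 0.0, 0.0))]
right = [((5.1, 0.0, 0.0), (1.0, 0.0, 0.0)), ((0.1, 0.0, 0.0), (0.0, 0.0, 1.0))]
cost = [[dissimilarity(a, b) for b in right] for a in left]
assignment, total = min_cost_perfect_matching(cost)
print(assignment, total)
```

For realistic cluster counts, a polynomial-time assignment solver such as the Hungarian algorithm would replace the enumeration; the cost matrix construction stays the same.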

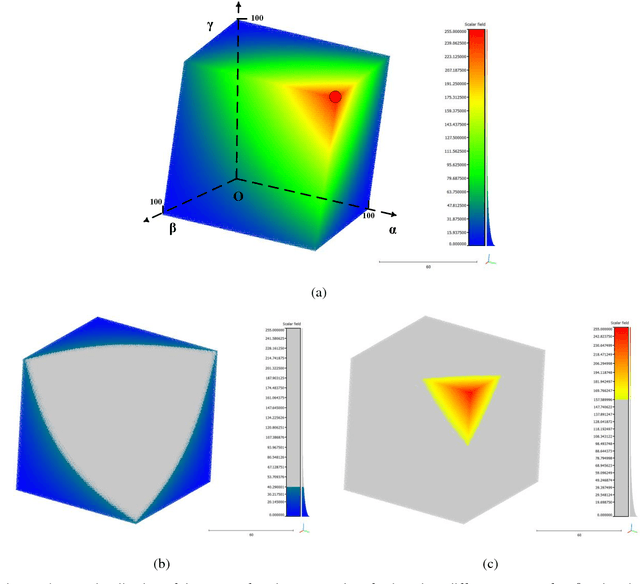

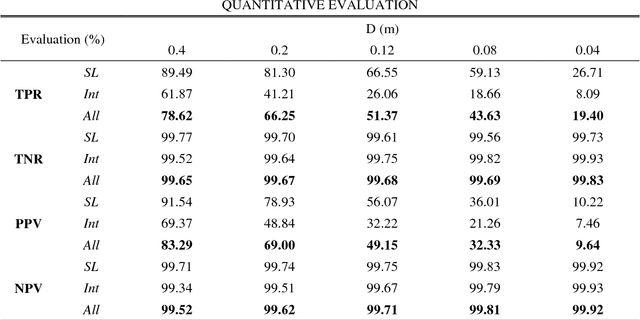

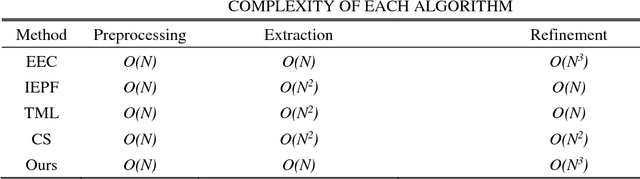

Road Curb Extraction from Mobile LiDAR Point Clouds

Oct 27, 2016

Abstract: Automatic extraction of road curbs from uneven, unorganized, noisy and massive 3D point clouds is a challenging task. Existing methods often project 3D point clouds onto 2D planes to extract curbs. However, the projection causes a loss of 3D information, which degrades detection performance. This paper presents a robust, accurate and efficient method to extract road curbs from 3D mobile LiDAR point clouds. Our method consists of two steps: 1) extracting the candidate points of curbs based on the proposed novel energy function and 2) refining the candidate points using the proposed least cost path model. We evaluated our method on mobile LiDAR point clouds of a large-scale residential area (16.7 GB, 300 million points) and an urban area (1.07 GB, 20 million points). Results indicate that the proposed method is superior to the state-of-the-art methods in terms of robustness, accuracy and efficiency. The proposed curb extraction method achieved a completeness of 78.62% and a correctness of 83.29%. These experiments demonstrate that the proposed method is a promising solution for extracting road curbs from mobile LiDAR point clouds.
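The abstract above reports completeness and correctness without defining them. The sketch below assumes the definitions commonly used for extraction tasks in remote sensing: completeness is the share of reference curb that was extracted, TP / (TP + FN), and correctness is the share of extracted curb that matches the reference, TP / (TP + FP). Whether the paper counts points or curb length is not stated; the counts here are made up for illustration.

```python
def completeness(tp, fn):
    """Fraction of the reference data that was extracted: TP / (TP + FN)."""
    return tp / (tp + fn)


def correctness(tp, fp):
    """Fraction of the extracted data that is correct: TP / (TP + FP)."""
    return tp / (tp + fp)


# Hypothetical counts, not taken from the paper.
tp, fp, fn = 80, 10, 20
print(f"completeness = {completeness(tp, fn):.2%}")
print(f"correctness  = {correctness(tp, fp):.2%}")
```

Under these definitions, completeness corresponds to recall and correctness to precision.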

A Cognitive Model for Humanoid Robot Navigation and Mapping using Alderbaran NAO

Jul 21, 2014

Abstract: The aim of this work is to build a cognitive model for a humanoid robot; in particular, we are interested in navigation and mapping on the humanoid robot. The agent used is the Alderbaran NAO robot. The framework is applied to the integration of AI, computer vision, and signal processing problems. Our model can be divided into two parts, cognitive mapping and perception. Cognitive mapping is assumed to have three parts, whose proposed representations are a network of ASRs, an MFIS, and a hierarchy of Place Representations. Perception, on the other hand, is the traditional computer vision problem of image sensing, feature extraction, and tracking of objects of interest. The goals of our project can be summarized as follows. First, the robot should know where it is. Second, we would like to test the theory that this is how humans map their environment. The humanoid robot's visual search is inspired by human vision, integrating the visual mechanism with computer vision techniques.
