Rongqing Li

ITPNet: Towards Instantaneous Trajectory Prediction for Autonomous Driving

Dec 10, 2024

Abstract: Trajectory prediction of agents is crucial for the safety of autonomous vehicles, yet previous approaches usually rely on a sufficiently long observed trajectory to predict the future trajectory of the agents. However, in real-world scenarios, it is not always realistic to collect adequate observed locations for moving agents, leading to the collapse of most prediction models. For instance, when a moving car that was hidden by an obstruction suddenly appears very close to an autonomous vehicle, the autonomous vehicle must quickly and accurately predict the future trajectories of the car from only a few observed trajectory locations. In light of this, we focus on investigating the task of instantaneous trajectory prediction, i.e., only two observed locations are available during inference. To this end, we propose a general and plug-and-play instantaneous trajectory prediction approach, called ITPNet. Specifically, we propose a backward forecasting mechanism to reversely predict the latent feature representations of unobserved historical trajectories of the agent based on its two observed locations and then leverage them as complementary information for future trajectory prediction. Meanwhile, due to the inevitable existence of noise and redundancy in the predicted latent feature representations, we further devise a Noise Redundancy Reduction Former, which aims to filter out noise and redundancy from unobserved trajectories and integrate the filtered features and observed features into a compact query for future trajectory predictions. In essence, ITPNet is naturally compatible with existing trajectory prediction models, enabling them to gracefully handle the case of instantaneous trajectory prediction. Extensive experiments on the Argoverse and nuScenes datasets demonstrate that ITPNet outperforms the baselines and is effective with different trajectory prediction models.

DREAM: Domain-agnostic Reverse Engineering Attributes of Black-box Model

Dec 08, 2024

Abstract: Deep learning models are usually black boxes when deployed on machine learning platforms. Prior works have shown that the attributes (e.g., the number of convolutional layers) of a target black-box model can be exposed through a sequence of queries. There is a crucial limitation: these works assume the training dataset of the target model is known beforehand and leverage this dataset for the model attribute attack. However, it is difficult to access the training dataset of the target black-box model in reality. Therefore, whether the attributes of a target black-box model can still be revealed in this case is doubtful. In this paper, we investigate a new problem of black-box reverse engineering, without requiring the availability of the target model's training dataset. We put forward a general and principled framework, DREAM, by casting this problem as out-of-distribution (OOD) generalization. In this way, we can learn a domain-agnostic meta-model to infer the attributes of the target black-box model with unknown training data. This makes our method one that can be gracefully applied to an arbitrary domain for model attribute reverse engineering, with strong generalization ability. Extensive experimental results demonstrate the superiority of our proposed method over the baselines.

* arXiv admin note: substantial text overlap with arXiv:2307.10997

DREAM: Domain-free Reverse Engineering Attributes of Black-box Model

Jul 20, 2023

Abstract: Deep learning models are usually black boxes when deployed on machine learning platforms. Prior works have shown that the attributes (e.g., the number of convolutional layers) of a target black-box neural network can be exposed through a sequence of queries. There is a crucial limitation: these works assume the dataset used for training the target model to be known beforehand and leverage this dataset for the model attribute attack. However, it is difficult to access the training dataset of the target black-box model in reality. Therefore, whether the attributes of a target black-box model can still be revealed in this case is doubtful. In this paper, we investigate a new problem of Domain-agnostic Reverse Engineering the Attributes of a black-box target Model, called DREAM, without requiring the availability of the target model's training dataset, and put forward a general and principled framework by casting this problem as an out-of-distribution (OOD) generalization problem. In this way, we can learn a domain-agnostic model to inversely infer the attributes of a target black-box model with unknown training data. This makes our method one that can be gracefully applied to an arbitrary domain for model attribute reverse engineering, with strong generalization ability. Extensive experimental studies are conducted, and the results validate the superiority of our proposed method over the baselines.

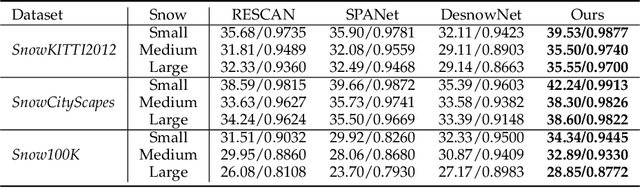

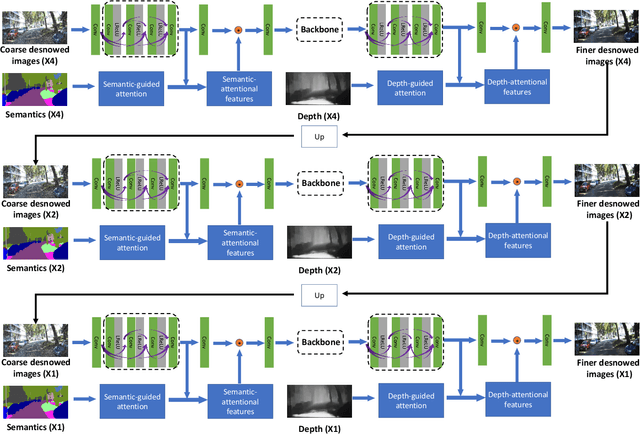

Deep Dense Multi-scale Network for Snow Removal Using Semantic and Geometric Priors

Mar 21, 2021

Abstract: Images captured on snowy days suffer from noticeable degradation of scene visibility, which degrades the performance of current vision-based intelligent systems. Removing snow from images is thus an important topic in computer vision. In this paper, we propose a Deep Dense Multi-Scale Network (DDMSNet) for snow removal by exploiting semantic and geometric priors. As images captured outdoors often share similar scenes and their visibility varies with depth from the camera, such semantic and geometric information provides a strong prior for snowy image restoration. We incorporate the semantic and geometric maps as input and learn a semantic-aware and geometry-aware representation to remove snow. In particular, we first create a coarse network to remove snow from the input images. Then, the coarsely desnowed images are fed into another network to obtain the semantic and geometric labels. Finally, we design a DDMSNet to learn a semantic-aware and geometry-aware representation via a self-attention mechanism to produce the final clean images. Experiments on public synthetic and real-world snowy images verify the superiority of the proposed method, which offers better results both quantitatively and qualitatively.
