Ricardo B. Grando

Improving Generalization in Aerial and Terrestrial Mobile Robots Control Through Delayed Policy Learning

Jun 04, 2024

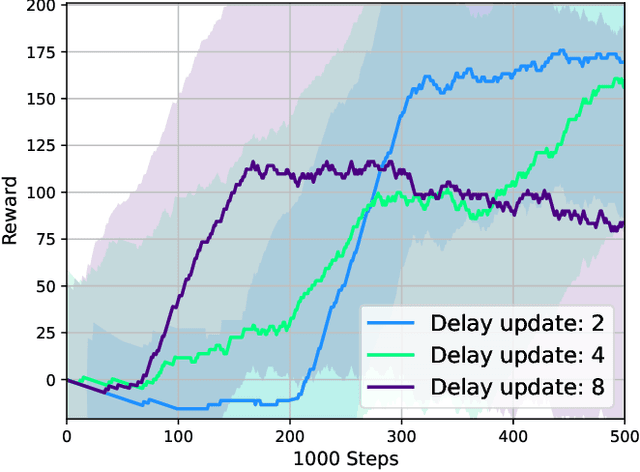

Abstract:Deep Reinforcement Learning (DRL) has emerged as a promising approach to enhancing motion control and decision-making through a wide range of robotic applications. While prior research has demonstrated the efficacy of DRL algorithms in facilitating autonomous mapless navigation for aerial and terrestrial mobile robots, these methods often grapple with poor generalization when faced with unknown tasks and environments. This paper explores the impact of the Delayed Policy Updates (DPU) technique on fostering generalization to new situations, and bolstering the overall performance of agents. Our analysis of DPU in aerial and terrestrial mobile robots reveals that this technique significantly curtails the lack of generalization and accelerates the learning process for agents, enhancing their efficiency across diverse tasks and unknown scenarios.

From Seedling to Harvest: The GrowingSoy Dataset for Weed Detection in Soy Crops via Instance Segmentation

Jun 01, 2024Abstract:Deep learning, particularly Convolutional Neural Networks (CNNs), has gained significant attention for its effectiveness in computer vision, especially in agricultural tasks. Recent advancements in instance segmentation have improved image classification accuracy. In this work, we introduce a comprehensive dataset for training neural networks to detect weeds and soy plants through instance segmentation. Our dataset covers various stages of soy growth, offering a chronological perspective on weed invasion's impact, with 1,000 meticulously annotated images. We also provide 6 state of the art models, trained in this dataset, that can understand and detect soy and weed in every stage of the plantation process. By using this dataset for weed and soy segmentation, we achieved a segmentation average precision of 79.1% and an average recall of 69.2% across all plant classes, with the YOLOv8X model. Moreover, the YOLOv8M model attained 78.7% mean average precision (mAp-50) in caruru weed segmentation, 69.7% in grassy weed segmentation, and 90.1% in soy plant segmentation.

Advancing Behavior Generation in Mobile Robotics through High-Fidelity Procedural Simulations

May 27, 2024

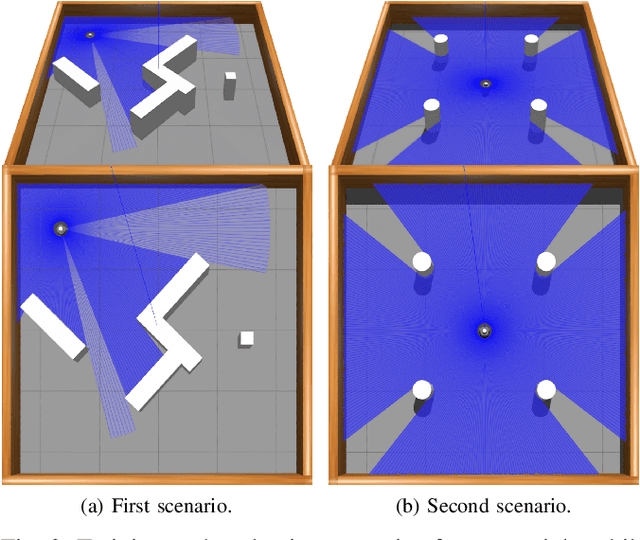

Abstract:This paper introduces YamaS, a simulator integrating Unity3D Engine with Robotic Operating System for robot navigation research and aims to facilitate the development of both Deep Reinforcement Learning (Deep-RL) and Natural Language Processing (NLP). It supports single and multi-agent configurations with features like procedural environment generation, RGB vision, and dynamic obstacle navigation. Unique to YamaS is its ability to construct single and multi-agent environments, as well as generating agent's behaviour through textual descriptions. The simulator's fidelity is underscored by comparisons with the real-world Yamabiko Beego robot, demonstrating high accuracy in sensor simulations and spatial reasoning. Moreover, YamaS integrates Virtual Reality (VR) to augment Human-Robot Interaction (HRI) studies, providing an immersive platform for developers and researchers. This fusion establishes YamaS as a versatile and valuable tool for the development and testing of autonomous systems, contributing to the fields of robot simulation and AI-driven training methodologies.

DoCRL: Double Critic Deep Reinforcement Learning for Mapless Navigation of a Hybrid Aerial Underwater Vehicle with Medium Transition

Aug 18, 2023

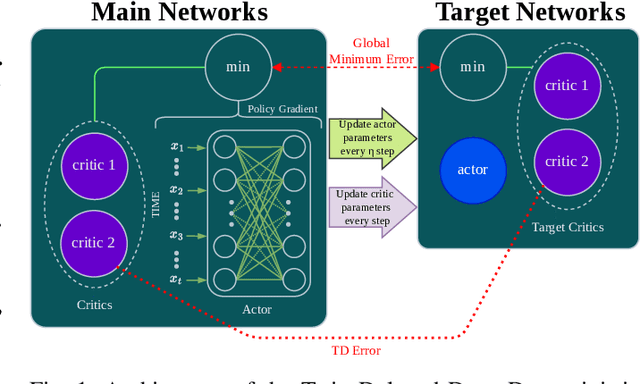

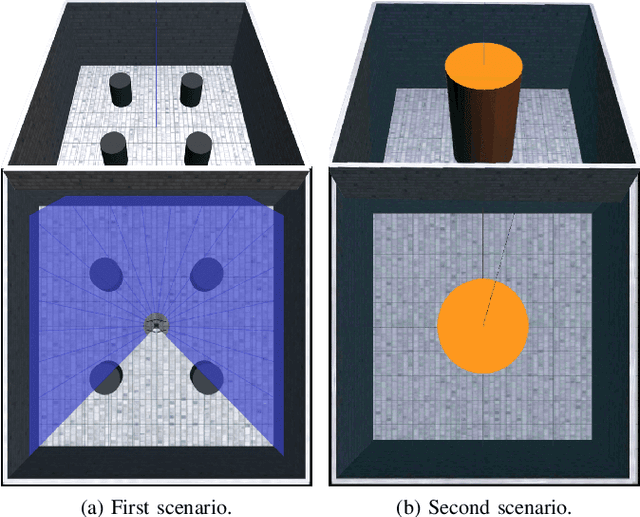

Abstract:Deep Reinforcement Learning (Deep-RL) techniques for motion control have been continuously used to deal with decision-making problems for a wide variety of robots. Previous works showed that Deep-RL can be applied to perform mapless navigation, including the medium transition of Hybrid Unmanned Aerial Underwater Vehicles (HUAUVs). These are robots that can operate in both air and water media, with future potential for rescue tasks in robotics. This paper presents new approaches based on the state-of-the-art Double Critic Actor-Critic algorithms to address the navigation and medium transition problems for a HUAUV. We show that double-critic Deep-RL with Recurrent Neural Networks using range data and relative localization solely improves the navigation performance of HUAUVs. Our DoCRL approaches achieved better navigation and transitioning capability, outperforming previous approaches.

Active Perception Applied To Unmanned Aerial Vehicles Through Deep Reinforcement Learning

Sep 13, 2022

Abstract:Unmanned Aerial Vehicles (UAV) have been standing out due to the wide range of applications in which they can be used autonomously. However, they need intelligent systems capable of providing a greater understanding of what they perceive to perform several tasks. They become more challenging in complex environments since there is a need to perceive the environment and act under environmental uncertainties to make a decision. In this context, a system that uses active perception can improve performance by seeking the best next view through the recognition of targets while displacement occurs. This work aims to contribute to the active perception of UAVs by tackling the problem of tracking and recognizing water surface structures to perform a dynamic landing. We show that our system with classical image processing techniques and a simple Deep Reinforcement Learning (Deep-RL) agent is capable of perceiving the environment and dealing with uncertainties without making the use of complex Convolutional Neural Networks (CNN) or Contrastive Learning (CL).

Mapless Navigation of a Hybrid Aerial Underwater Vehicle with Deep Reinforcement Learning Through Environmental Generalization

Sep 13, 2022

Abstract:Previous works showed that Deep-RL can be applied to perform mapless navigation, including the medium transition of Hybrid Unmanned Aerial Underwater Vehicles (HUAUVs). This paper presents new approaches based on the state-of-the-art actor-critic algorithms to address the navigation and medium transition problems for a HUAUV. We show that a double critic Deep-RL with Recurrent Neural Networks improves the navigation performance of HUAUVs using solely range data and relative localization. Our Deep-RL approaches achieved better navigation and transitioning capabilities with a solid generalization of learning through distinct simulated scenarios, outperforming previous approaches.

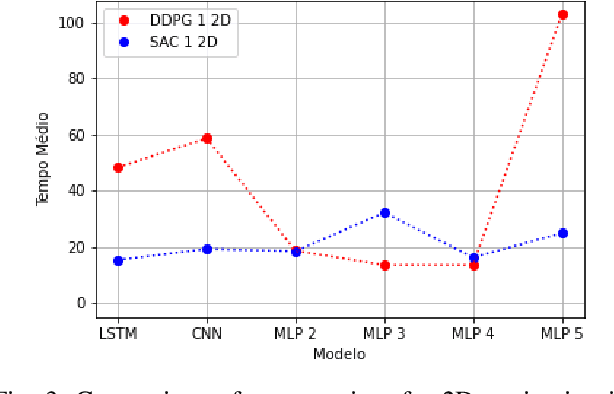

Deterministic and Stochastic Analysis of Deep Reinforcement Learning for Low Dimensional Sensing-based Navigation of Mobile Robots

Sep 13, 2022

Abstract:Deterministic and Stochastic techniques in Deep Reinforcement Learning (Deep-RL) have become a promising solution to improve motion control and the decision-making tasks for a wide variety of robots. Previous works showed that these Deep-RL algorithms can be applied to perform mapless navigation of mobile robots in general. However, they tend to use simple sensing strategies since it has been shown that they perform poorly with a high dimensional state spaces, such as the ones yielded from image-based sensing. This paper presents a comparative analysis of two Deep-RL techniques - Deep Deterministic Policy Gradients (DDPG) and Soft Actor-Critic (SAC) - when performing tasks of mapless navigation for mobile robots. We aim to contribute by showing how the neural network architecture influences the learning itself, presenting quantitative results based on the time and distance of navigation of aerial mobile robots for each approach. Overall, our analysis of six distinct architectures highlights that the stochastic approach (SAC) better suits with deeper architectures, while the opposite happens with the deterministic approach (DDPG).

Virtual Reality Platform to Develop and Test Applications on Human-Robot Social Interaction

Aug 13, 2022

Abstract:Robotics simulation has been an integral part of research and development in the robotics area. The simulation eliminates the possibility of harm to sensors, motors, and the physical structure of a real robot by enabling robotics application testing to be carried out quickly and affordably without being subjected to mechanical or electronic errors. Simulation through virtual reality (VR) offers a more immersive experience by providing better visual cues of environments, making it an appealing alternative for interacting with simulated robots. This immersion is crucial, particularly when discussing sociable robots, a subarea of the human-robot interaction (HRI) field. The widespread use of robots in daily life depends on HRI. In the future, robots will be able to interact effectively with people to perform a variety of tasks in human civilization. It is crucial to develop simple and understandable interfaces for robots as they begin to proliferate in the personal workspace. Due to this, in this study, we implement a VR robotic framework with ready-to-use tools and packages to enhance research and application development in social HRI. Since the entire VR interface is an open-source project, the tests can be conducted in an immersive environment without needing a physical robot.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge