Rasoul Etesami

FedGTST: Boosting Global Transferability of Federated Models via Statistics Tuning

Oct 16, 2024

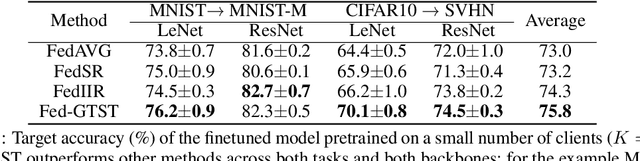

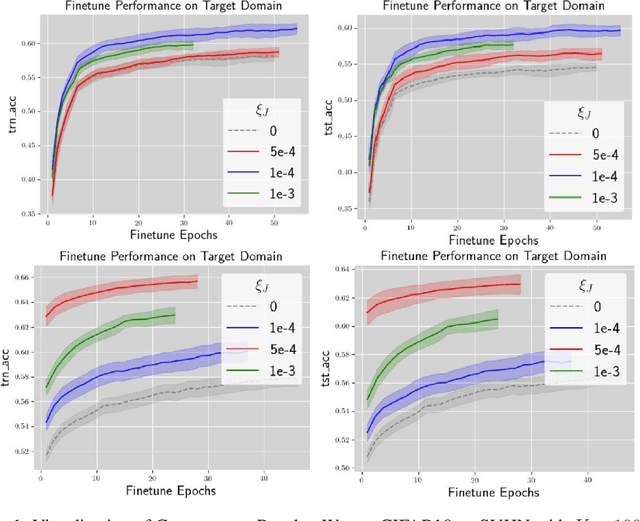

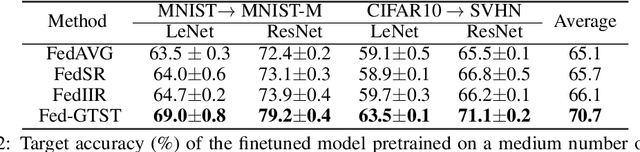

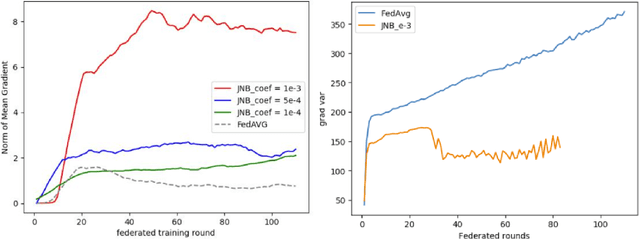

Abstract: The performance of Transfer Learning (TL) heavily relies on effective pretraining, which demands large datasets and substantial computational resources. As a result, executing TL is often challenging for individual model developers. Federated Learning (FL) addresses these issues by facilitating collaborations among clients, expanding the dataset indirectly, distributing computational costs, and preserving privacy. However, key challenges remain unresolved. First, existing FL methods tend to optimize transferability only within local domains, neglecting the global learning domain. Second, most approaches rely on indirect transferability metrics, which do not accurately reflect the final target loss or true degree of transferability. To address these gaps, we propose two enhancements to FL. First, we introduce a client-server exchange protocol that leverages cross-client Jacobian (gradient) norms to boost transferability. Second, we increase the average Jacobian norm across clients at the server, using this as a local regularizer to reduce cross-client Jacobian variance. Our transferable federated algorithm, termed FedGTST (Federated Global Transferability via Statistics Tuning), demonstrates that increasing the average Jacobian and reducing its variance allows for tighter control of the target loss. This leads to an upper bound on the target loss in terms of the source loss and source-target domain discrepancy. Extensive experiments on dataset pairs such as MNIST to MNIST-M and CIFAR10 to SVHN show that FedGTST outperforms relevant baselines, including FedSR. On CIFAR10 to SVHN, FedGTST improves accuracy by 9.8% over FedSR and 7.6% over FedIIR when LeNet is used as the backbone.
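To make the two statistics named in the abstract concrete, the following is a minimal sketch of how a server might summarize per-client gradient norms by their mean and variance. It is not the FedGTST algorithm itself: the function names `l2_norm` and `jacobian_norm_stats`, the flat-list gradient representation, and the example values are all assumptions made for illustration.

```python
import math

def l2_norm(vec):
    """Euclidean norm of a flat gradient vector."""
    return math.sqrt(sum(x * x for x in vec))

def jacobian_norm_stats(client_grads):
    """Return (mean, variance) of the per-client gradient L2 norms.

    Hypothetical helper: the abstract only states that the average
    cross-client Jacobian norm and its variance matter; how they are
    exchanged and used in training is not specified here.
    """
    norms = [l2_norm(g) for g in client_grads]
    mean = sum(norms) / len(norms)
    var = sum((n - mean) ** 2 for n in norms) / len(norms)
    return mean, var

# Illustrative gradients from three hypothetical clients.
grads = [[3.0, 4.0], [6.0, 8.0], [0.0, 5.0]]
mean_norm, var_norm = jacobian_norm_stats(grads)
```

In this toy setting, a larger `mean_norm` and a smaller `var_norm` correspond to the direction the abstract describes as favorable for controlling the target loss.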

F-FOMAML: GNN-Enhanced Meta-Learning for Peak Period Demand Forecasting with Proxy Data

Jun 23, 2024

Abstract: Demand prediction is a crucial task for e-commerce and physical retail businesses, especially during high-stakes sales events. However, the limited availability of historical data from these peak periods poses a significant challenge for traditional forecasting methods. In this paper, we propose a novel approach that leverages strategically chosen proxy data reflective of potential sales patterns from similar entities during non-peak periods, enriched by features learned from a graph neural network (GNN)-based forecasting model, to predict demand during peak events. We formulate demand prediction as a meta-learning problem and develop the Feature-based First-Order Model-Agnostic Meta-Learning (F-FOMAML) algorithm, which leverages proxy data from non-peak periods and GNN-generated relational metadata to learn feature-specific layer parameters, thereby adapting to demand forecasts for peak events. Theoretically, we show that by considering domain similarities through task-specific metadata, our model achieves improved generalization, where the excess risk decreases as the number of training tasks increases. Empirical evaluations on large-scale industrial datasets demonstrate the superiority of our approach. Compared to existing state-of-the-art models, our method reduces the Mean Absolute Error by 26.24% on an internal vending machine dataset and by 1.04% on the publicly accessible JD.com dataset.
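For readers unfamiliar with the first-order MAML update that F-FOMAML builds on, here is a minimal sketch of generic FOMAML on a toy scalar regression problem. It does not include the feature-based layers, proxy-data selection, or GNN metadata described in the abstract; the model (`y = w * x`), the helper names `grad` and `fomaml_step`, the learning rates, and the task data are assumptions chosen for illustration.

```python
def grad(w, data):
    """Gradient of the mean squared error of y = w * x over (x, y) pairs."""
    return sum(2 * x * (w * x - y) for x, y in data) / len(data)

def fomaml_step(w, tasks, inner_lr, outer_lr):
    """One generic first-order MAML meta-update on a scalar parameter w.

    Each task is a (support, query) pair of datasets. The first-order
    approximation evaluates the query gradient at the adapted parameter
    and ignores how the adapted parameter depends on w.
    """
    meta_grad = 0.0
    for support, query in tasks:
        w_adapted = w - inner_lr * grad(w, support)  # inner adaptation step
        meta_grad += grad(w_adapted, query)          # first-order outer gradient
    return w - outer_lr * meta_grad / len(tasks)

# Two hypothetical tasks with true slopes 2 and 4.
tasks = [
    ([(1.0, 2.0)], [(1.0, 2.0)]),
    ([(1.0, 4.0)], [(1.0, 4.0)]),
]
w_new = fomaml_step(0.0, tasks, inner_lr=0.1, outer_lr=0.1)
```

Repeating `fomaml_step` moves `w` toward an initialization from which a single inner step adapts well to each task, which is the general idea the abstract applies to peak-period demand forecasting.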

Striking a Balance: An Optimal Mechanism Design for Heterogenous Differentially Private Data Acquisition for Logistic Regression

Sep 19, 2023

Abstract: We investigate the problem of performing logistic regression on data collected from privacy-sensitive sellers. Since the data is private, sellers must be incentivized through payments to provide their data. Thus, the goal is to design a mechanism that optimizes a weighted combination of test loss, seller privacy, and payment, i.e., strikes a balance between multiple objectives of interest. We solve the problem by combining ideas from game theory, statistical learning theory, and differential privacy. The buyer's objective function can be highly non-convex. However, we show that, under certain conditions on the problem parameters, the problem can be convexified by using a change of variables. We also provide asymptotic results characterizing the buyer's test error and payments when the number of sellers becomes large. Finally, we demonstrate our ideas by applying them to a real healthcare data set.

Local Environment Poisoning Attacks on Federated Reinforcement Learning

Mar 18, 2023

Abstract: Federated learning (FL) has become a popular tool for solving traditional Reinforcement Learning (RL) tasks. The multi-agent structure addresses the data-hungry nature of traditional RL, while the federated mechanism protects the data privacy of individual agents. However, the federated mechanism also exposes the system to poisoning by malicious agents that can mislead the trained policy. Despite the advantages brought by FL, the vulnerability of Federated Reinforcement Learning (FRL) has not been well studied. In this work, we propose the first general framework to characterize FRL poisoning as an optimization problem constrained by a limited budget, and we design a poisoning protocol that can be applied to policy-based FRL and extended to FRL with actor-critic as the local RL algorithm by training a pair of private and public critics. We also discuss a conventional defense strategy inherited from FL to mitigate this risk. We verify the effectiveness of our poisoning protocol through extensive experiments targeting mainstream RL algorithms across various OpenAI Gym environments covering a wide range of difficulty levels. Our results show that our proposed defense protocol is successful in most cases but is not robust under complicated environments. Our work provides new insights into the vulnerability of FL in RL training and poses additional challenges for designing robust FRL algorithms.
