Randi Cabezas

UmeTrack: Unified multi-view end-to-end hand tracking for VR

Oct 31, 2022

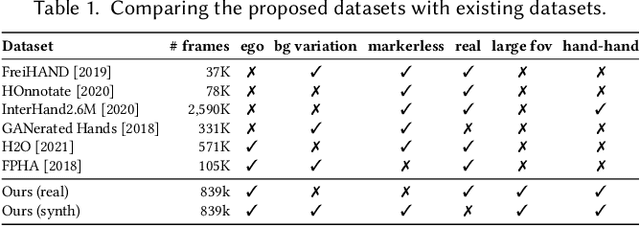

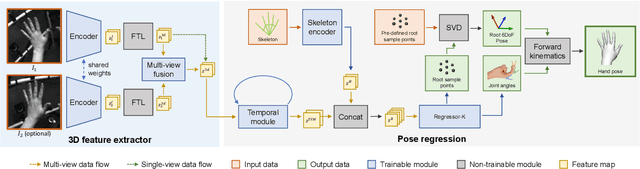

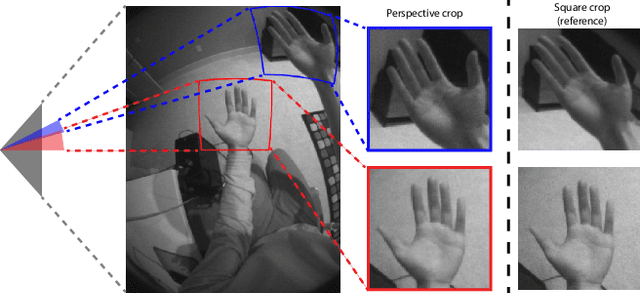

Abstract: Real-time tracking of 3D hand pose in world space is a challenging problem and plays an important role in VR interaction. Existing work in this space is limited to either producing root-relative (versus world-space) 3D pose or relying on multiple stages, such as generating heatmaps and kinematic optimization, to obtain 3D pose. Moreover, the typical VR scenario, which involves multi-view tracking from wide field-of-view (FoV) cameras, is seldom addressed by these methods. In this paper, we present a unified end-to-end differentiable framework for multi-view, multi-frame hand tracking that directly predicts 3D hand pose in world space. We demonstrate the benefits of end-to-end differentiability by extending our framework with downstream tasks such as jitter reduction and pinch prediction. To demonstrate the efficacy of our model, we further present a new large-scale egocentric hand pose dataset that consists of both real and synthetic data. Experiments show that our system trained on this dataset handles various challenging interactive motions, and has been successfully applied to real-time VR applications.

Direction-Aware Semi-Dense SLAM

Sep 18, 2017

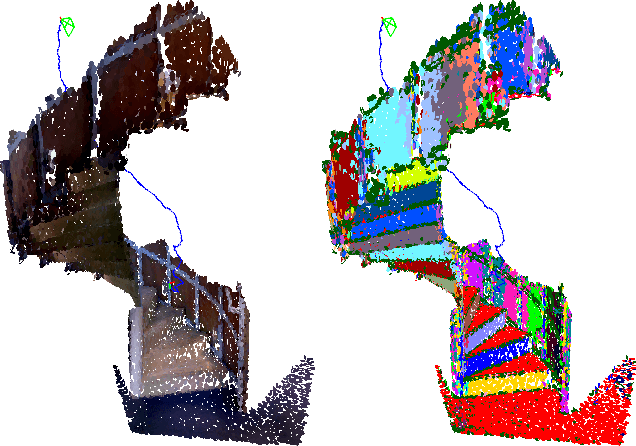

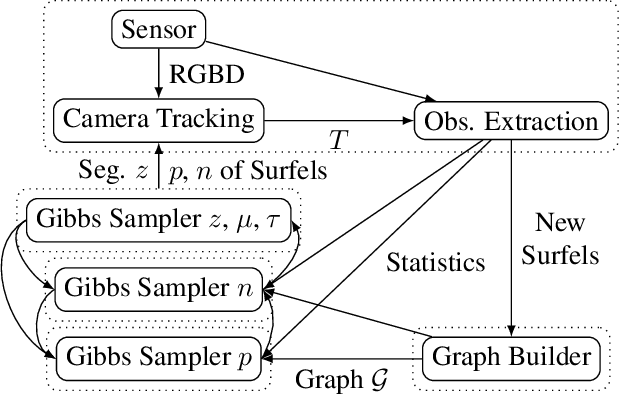

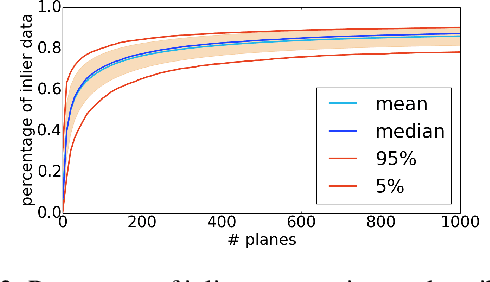

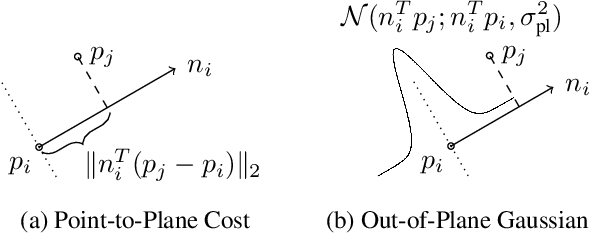

Abstract: To aid simultaneous localization and mapping (SLAM), future perception systems will incorporate forms of scene understanding. In a step towards fully integrated probabilistic geometric scene understanding, localization, and mapping, we propose the first direction-aware semi-dense SLAM system. It jointly infers the directional Stata Center World (SCW) segmentation and a surfel-based semi-dense map while performing real-time camera tracking. The joint SCW map model connects a scene-wide Bayesian nonparametric Dirichlet Process von Mises-Fisher mixture model (DP-vMF) prior on surfel orientations with the local surfel locations via a conditional random field (CRF). Camera tracking leverages the SCW segmentation to improve efficiency via guided observation selection. Results demonstrate improved SLAM accuracy and tracking efficiency at state-of-the-art performance.
