Rajvinder Singh

Unsupervised Anomaly Detection in Medical Images with a Memory-augmented Multi-level Cross-attentional Masked Autoencoder

Mar 22, 2022

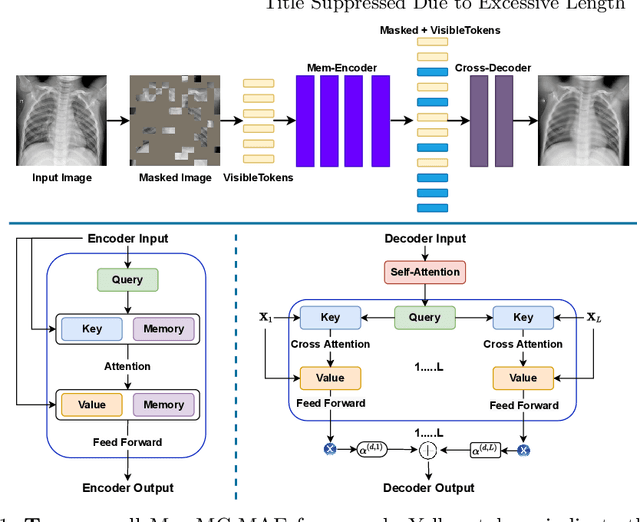

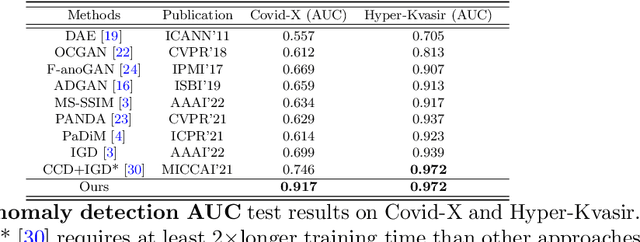

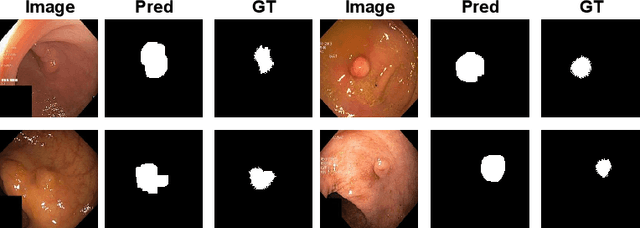

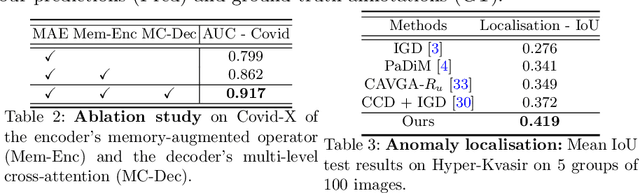

Abstract: Unsupervised anomaly detection (UAD) aims to find anomalous images by optimising a detector using a training set that contains only normal images. UAD approaches can be based on reconstruction methods, self-supervised approaches, and ImageNet pre-trained models. Reconstruction methods, which detect anomalies from image reconstruction errors, are advantageous because they rely neither on the design of problem-specific pretext tasks needed by self-supervised approaches, nor on the unreliable translation of models pre-trained on non-medical datasets. However, reconstruction methods may fail because they can have low reconstruction errors even for anomalous images. In this paper, we introduce a new reconstruction-based UAD approach that addresses this low-reconstruction error issue for anomalous images. Our UAD approach, the memory-augmented multi-level cross-attentional masked autoencoder (MemMC-MAE), is a transformer-based approach, consisting of a novel memory-augmented self-attention operator for the encoder and a new multi-level cross-attention operator for the decoder. MemMC-MAE masks large parts of the input image during its reconstruction, reducing the risk that it will produce low reconstruction errors because anomalies are likely to be masked and cannot be reconstructed. When the anomaly is not masked, the normal patterns stored in the encoder's memory, combined with the decoder's multi-level cross-attention, will constrain the accurate reconstruction of the anomaly. We show that our method achieves state-of-the-art (SOTA) anomaly detection and localisation on colonoscopy and COVID-19 chest X-ray datasets.

In Defense of Kalman Filtering for Polyp Tracking from Colonoscopy Videos

Jan 27, 2022

Abstract: Real-time and robust automatic detection of polyps from colonoscopy videos is an essential task to help improve the performance of doctors during this exam. The current focus of the field is on the development of accurate but inefficient detectors that will not enable a real-time application. We advocate that the field should instead focus on the development of simple and efficient detectors that can be combined with effective trackers to allow the implementation of real-time polyp detectors. In this paper, we propose a Kalman filtering tracker that can work together with powerful but efficient detectors, enabling the implementation of real-time polyp detectors. In particular, we show that the combination of our Kalman filtering with the detector PP-YOLO shows state-of-the-art (SOTA) detection accuracy and real-time processing. More specifically, our approach has SOTA results on the CVC-ClinicDB dataset, with a recall of 0.740, precision of 0.869, $F_1$ score of 0.799, and an average precision (AP) of 0.837, and it can run in real time (i.e., 30 frames per second). We also evaluate our method on a subset of the Hyper-Kvasir dataset annotated by our clinical collaborators, resulting in SOTA results, with a recall of 0.956, precision of 0.875, $F_1$ score of 0.914, and AP of 0.952, and it can run in real time.
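As a quick consistency check on the figures quoted above, the $F_1$ score is the harmonic mean of precision and recall. The short sketch below recomputes it from the reported precision and recall values; the function and variable names are illustrative and do not come from the paper.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall values quoted in the abstract above.
cvc_f1 = f1_score(precision=0.869, recall=0.740)      # CVC-ClinicDB
kvasir_f1 = f1_score(precision=0.875, recall=0.956)   # Hyper-Kvasir subset

print(f"{cvc_f1:.3f}")     # 0.799
print(f"{kvasir_f1:.3f}")  # 0.914
```

Both recomputed values agree with the $F_1$ scores stated in the abstract to three decimal places.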

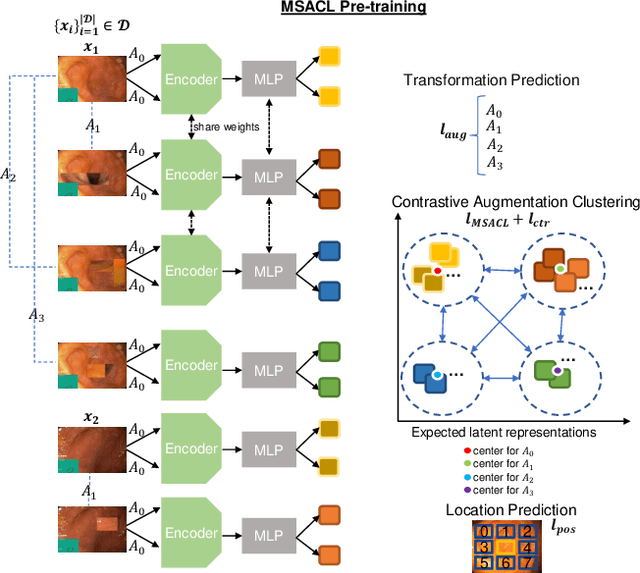

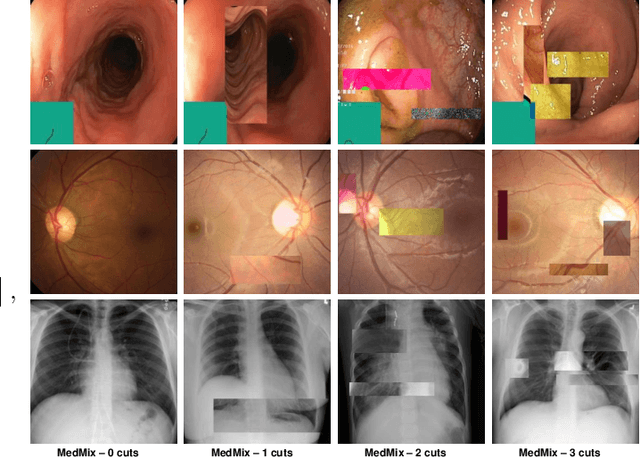

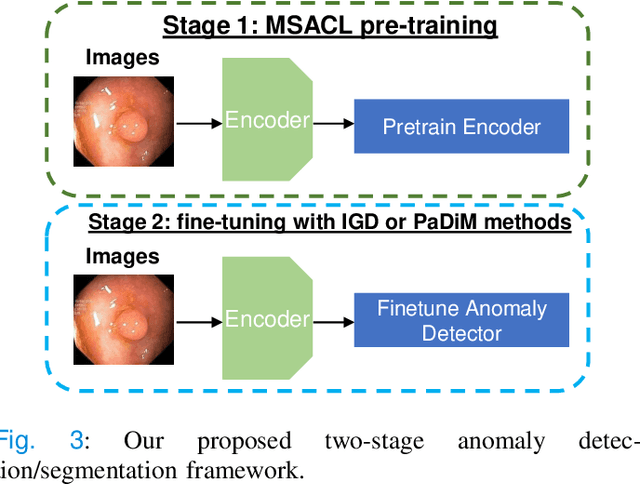

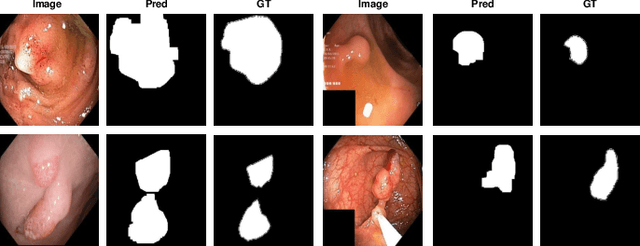

Multi-centred Strong Augmentation via Contrastive Learning for Unsupervised Lesion Detection and Segmentation

Sep 03, 2021

Abstract: The scarcity of high-quality medical image annotations hinders the implementation of accurate clinical applications for detecting and segmenting abnormal lesions. To mitigate this issue, the scientific community is working on the development of unsupervised anomaly detection (UAD) systems that learn from a training set containing only normal (i.e., healthy) images, where abnormal samples (i.e., unhealthy) are detected and segmented based on how much they deviate from the learned distribution of normal samples. One significant challenge faced by UAD methods is how to learn effective low-dimensional image representations that are sensitive enough to detect and segment abnormal lesions of varying size, appearance and shape. To address this challenge, we propose a novel self-supervised UAD pre-training algorithm, named Multi-centred Strong Augmentation via Contrastive Learning (MSACL). MSACL learns representations by separating several types of strong and weak augmentations of normal image samples, where the weak augmentations represent normal images and strong augmentations denote synthetic abnormal images. To produce such strong augmentations, we introduce MedMix, a novel data augmentation strategy that creates new training images with realistic-looking lesions (i.e., anomalies) in normal images. The pre-trained representations from MSACL are generic and can be used to improve the efficacy of different types of off-the-shelf state-of-the-art (SOTA) UAD models. Comprehensive experimental results show that the use of MSACL largely improves these SOTA UAD models on four medical imaging datasets from diverse organs, namely colonoscopy, fundus screening and COVID-19 chest X-ray datasets.

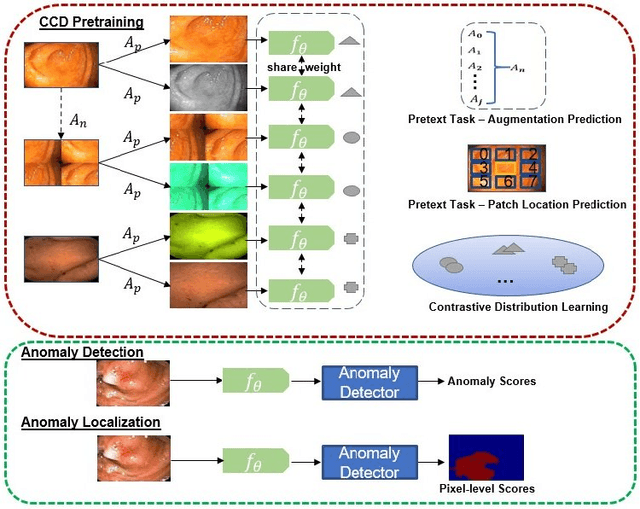

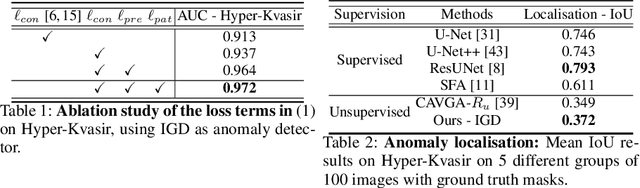

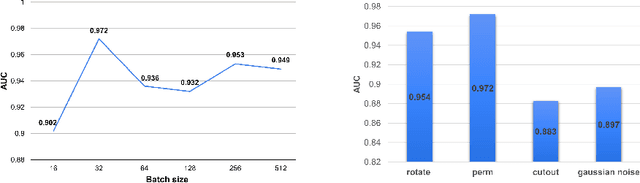

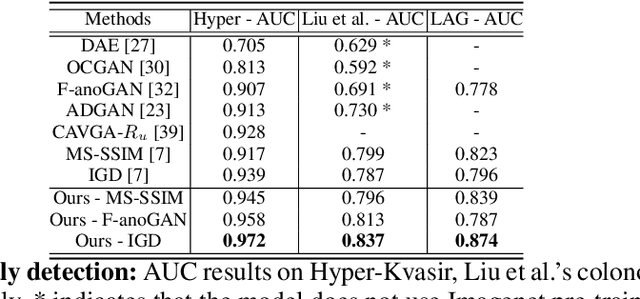

Constrained Contrastive Distribution Learning for Unsupervised Anomaly Detection and Localisation in Medical Images

Mar 05, 2021

Abstract: Unsupervised anomaly detection (UAD) learns one-class classifiers exclusively with normal (i.e., healthy) images to detect any abnormal (i.e., unhealthy) samples that do not conform to the expected normal patterns. UAD has two main advantages over its fully supervised counterpart. Firstly, it is able to directly leverage large datasets available from health screening programs that contain mostly normal image samples, avoiding the costly manual labelling of abnormal samples and the subsequent issues involved in training with extremely class-imbalanced data. Secondly, UAD approaches can potentially detect and localise any type of lesion that deviates from the normal patterns. One significant challenge faced by UAD methods is how to learn effective low-dimensional image representations to detect and localise subtle abnormalities, generally consisting of small lesions. To address this challenge, we propose a novel self-supervised representation learning method, called Constrained Contrastive Distribution learning for anomaly detection (CCD), which learns fine-grained feature representations by simultaneously predicting the distribution of augmented data and image contexts using contrastive learning with pretext constraints. The learned representations can be leveraged to train more anomaly-sensitive detection models. Extensive experimental results show that our method outperforms current state-of-the-art UAD approaches on three different colonoscopy and fundus screening datasets. Our code is available at https://github.com/tianyu0207/CCD.

Weakly-supervised Video Anomaly Detection with Contrastive Learning of Long and Short-range Temporal Features

Jan 25, 2021

Abstract: In this paper, we address the problem of weakly-supervised video anomaly detection, in which, given video-level labels for training, we aim to identify the snippets containing abnormal events in test videos. Although current methods based on multiple instance learning (MIL) show effective detection performance, they ignore important video temporal dependencies. Also, the number of abnormal snippets can vary per anomaly video, which complicates the training process of MIL-based methods because they tend to focus on the most abnormal snippet -- this can cause them to mistakenly select a normal snippet instead of an abnormal snippet, and also to fail to select all abnormal snippets available. We propose a novel method, named Multi-scale Temporal Network trained with top-K Contrastive Multiple Instance Learning (MTN-KMIL), to address the issues above. The main contributions of MTN-KMIL are: 1) a novel synthesis of a pyramid of dilated convolutions and a self-attention mechanism, with the former capturing the multi-scale short-range temporal dependencies between snippets and the latter capturing long-range temporal dependencies; and 2) a novel contrastive MIL learning method that enforces large margins between the top-K normal and abnormal video snippets at the feature representation level and anomaly score level, resulting in accurate anomaly discrimination. Extensive experiments show that our method outperforms several state-of-the-art methods by a large margin on three benchmark data sets (ShanghaiTech, UCF-Crime and XD-Violence). The code is available at https://github.com/tianyu0207/MTN-KMIL
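To make the top-K idea concrete, the sketch below averages the K highest snippet scores of a video instead of relying on the single highest one, and applies a hinge-style margin between an abnormal and a normal video. This is only an illustration under stated assumptions: the abstract does not give the loss formula, the paper also enforces margins at the feature level, and the names `top_k_mean` and `top_k_margin_loss`, the hinge form, and the default `k` and `margin` values are all hypothetical.

```python
def top_k_mean(scores, k):
    """Average of the k largest snippet anomaly scores of one video."""
    if k < 1 or not scores:
        raise ValueError("need k >= 1 and at least one score")
    top = sorted(scores, reverse=True)[:k]
    return sum(top) / len(top)


def top_k_margin_loss(abnormal_scores, normal_scores, k=3, margin=1.0):
    """Hypothetical hinge loss: push the top-k mean of an abnormal video
    above the top-k mean of a normal video by at least `margin`."""
    gap = top_k_mean(abnormal_scores, k) - top_k_mean(normal_scores, k)
    return max(0.0, margin - gap)


# Toy per-snippet scores (made up for illustration only).
abnormal_video = [0.1, 0.9, 0.8, 0.2, 0.7]
normal_video = [0.1, 0.2, 0.1, 0.3, 0.2]

loss = top_k_margin_loss(abnormal_video, normal_video, k=3, margin=1.0)
print(round(loss, 4))
```

Using several top-scoring snippets rather than one reflects the motivation given in the abstract: a video may contain more than one abnormal snippet, and the single most abnormal-looking snippet may in fact be normal.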

Detecting, Localising and Classifying Polyps from Colonoscopy Videos using Deep Learning

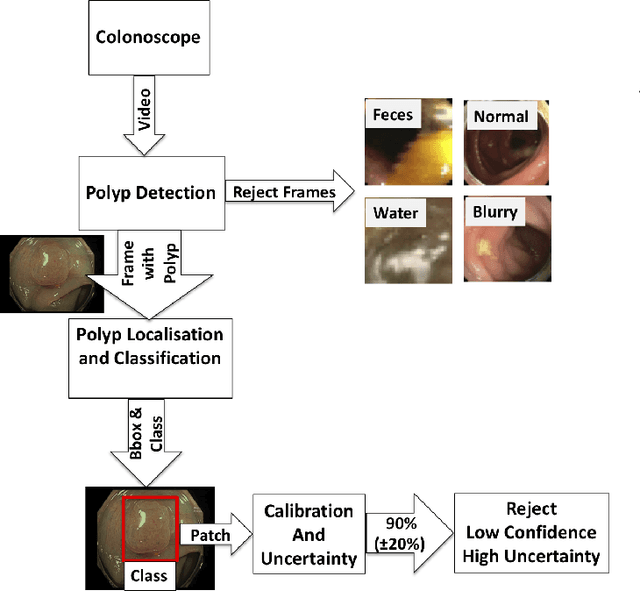

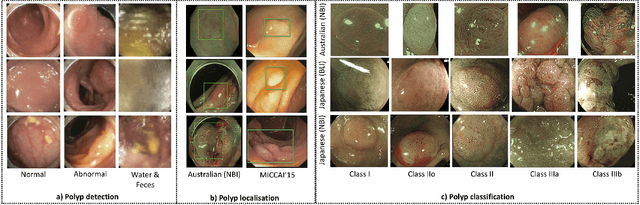

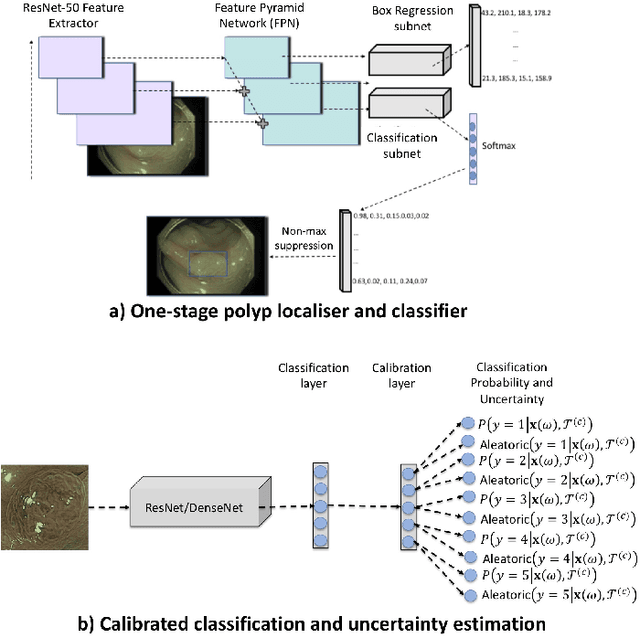

Jan 09, 2021

Abstract: In this paper, we propose and analyse a system that can automatically detect, localise and classify polyps from colonoscopy videos. The detection of frames with polyps is formulated as a few-shot anomaly classification problem, where the training set is highly imbalanced with the large majority of frames consisting of normal images and a small minority comprising frames with polyps. Colonoscopy videos may contain blurry images and frames displaying feces and water jet sprays to clean the colon -- such frames can mistakenly be detected as anomalies, so we have implemented a classifier to reject these two types of frames before polyp detection takes place. Next, given a frame containing a polyp, our method localises it (with a bounding box around the polyp) and classifies it into five different classes. Furthermore, we study a method to improve the reliability and interpretability of the classification result using uncertainty estimation and classification calibration. Classification uncertainty and calibration not only help improve classification accuracy by rejecting low-confidence and high-uncertainty results, but can also be used by doctors to decide on the classification of a polyp. All the proposed detection, localisation and classification methods are tested using large data sets and compared with relevant baseline approaches.

Few-Shot Anomaly Detection for Polyp Frames from Colonoscopy

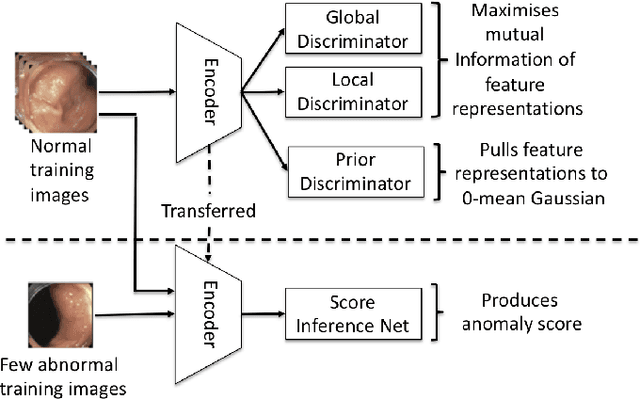

Jun 26, 2020

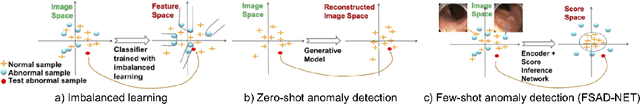

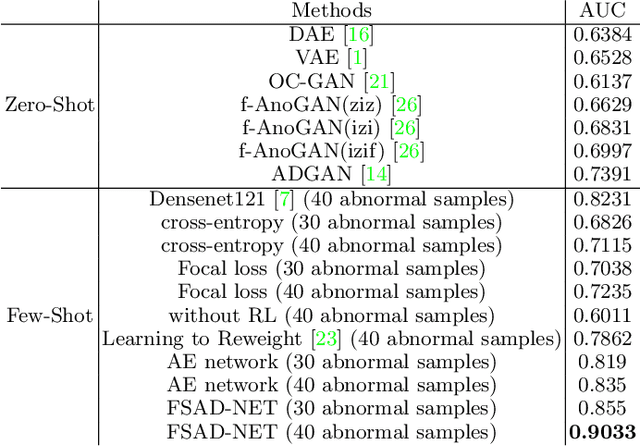

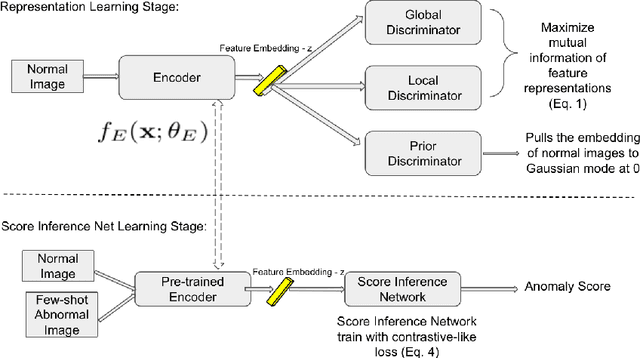

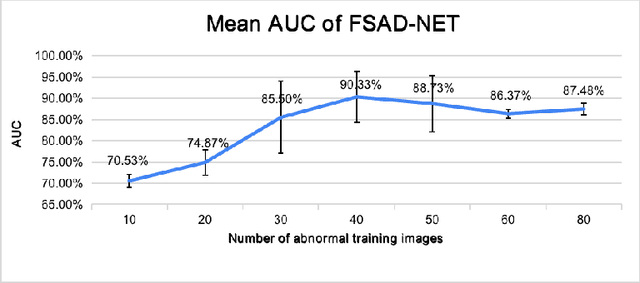

Abstract: Anomaly detection methods generally target the learning of a normal image distribution (i.e., inliers showing healthy cases) and during testing, samples relatively far from the learned distribution are classified as anomalies (i.e., outliers showing disease cases). These approaches tend to be sensitive to outliers that lie relatively close to inliers (e.g., a colonoscopy image with a small polyp). In this paper, we address the inappropriate sensitivity to outliers by also learning from inliers. We propose a new few-shot anomaly detection method based on an encoder trained to maximise the mutual information between feature embeddings and normal images, followed by a few-shot score inference network, trained with a large set of inliers and a substantially smaller set of outliers. We evaluate our proposed method on the clinical problem of detecting frames containing polyps from colonoscopy video sequences, where the training set has 13350 normal images (i.e., without polyps) and fewer than 100 abnormal images (i.e., with polyps). The results of our proposed model on this data set reveal a state-of-the-art detection result, while the performance for different numbers of anomaly samples is relatively stable after approximately 40 abnormal training images.

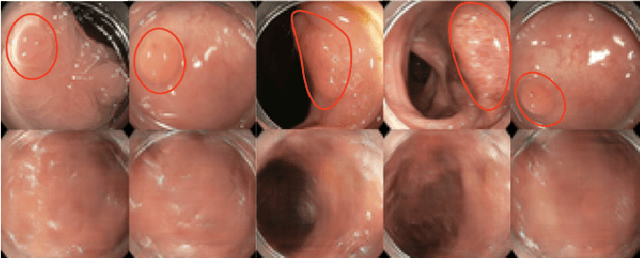

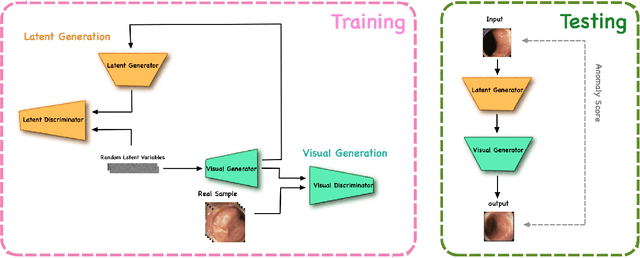

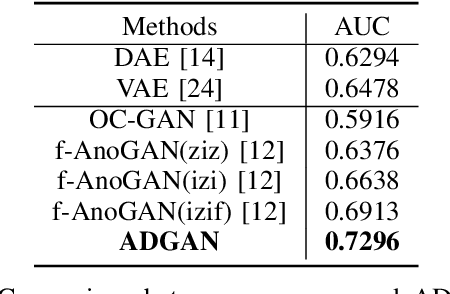

Photoshopping Colonoscopy Video Frames

Oct 23, 2019

Abstract: The automatic detection of frames containing polyps from a colonoscopy video sequence is an important first step for a fully automated colonoscopy analysis tool. Typically, such a detection system is built using a large annotated data set of frames with and without polyps, which is expensive to obtain. In this paper, we introduce a new system that detects frames containing polyps as anomalies from a distribution of frames from exams that do not contain any polyps. The system is trained using a one-class training set consisting of colonoscopy frames without polyps -- such a training set is considerably less expensive to obtain, compared to the 2-class data set mentioned above. During inference, the system is only able to reconstruct frames without polyps, and when it tries to reconstruct a frame with a polyp, it automatically removes (i.e., photoshops) it from the frame -- the difference between the input and reconstructed frames is used to detect frames with polyps. We name our proposed model anomaly detection generative adversarial network (ADGAN), comprising a dual GAN with two generators and two discriminators. We show that our proposed approach achieves the state-of-the-art result on this data set, compared with recently proposed anomaly detection systems.
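The detection step described above, comparing an input frame with its reconstruction, can be sketched as follows. The abstract states only that the difference between the two frames is used; the mean absolute pixel difference, the function names, and the threshold value below are assumptions made for illustration, and the toy "reconstruction" is hand-written rather than produced by a generator.

```python
def anomaly_score(frame, reconstruction):
    """Mean absolute pixel difference between two equally sized
    grayscale frames given as lists of rows (assumed score definition)."""
    total, count = 0.0, 0
    for row_in, row_rec in zip(frame, reconstruction):
        for pixel_in, pixel_rec in zip(row_in, row_rec):
            total += abs(pixel_in - pixel_rec)
            count += 1
    if count == 0:
        raise ValueError("frames must contain at least one pixel")
    return total / count


def is_polyp_frame(frame, reconstruction, threshold=0.1):
    """Flag a frame when its score exceeds a hypothetical threshold."""
    return anomaly_score(frame, reconstruction) > threshold


# Toy 2x2 frame with one bright "polyp" pixel that the reconstruction removes.
frame = [[0.2, 0.2], [0.2, 0.9]]
reconstruction = [[0.2, 0.2], [0.2, 0.2]]

score = anomaly_score(frame, reconstruction)
flagged = is_polyp_frame(frame, reconstruction)
print(round(score, 3), flagged)
```

In practice the threshold would be chosen on held-out data; a frame that is reconstructed almost perfectly yields a score near zero and is treated as normal.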
