Rajiv Kumar

Development and Validation of Fully Automatic Deep Learning-Based Algorithms for Immunohistochemistry Reporting of Invasive Breast Ductal Carcinoma

Jun 16, 2024

Abstract: Immunohistochemistry (IHC) analysis is a well-accepted and widely used method for molecular subtyping, a procedure that guides prognosis and targeted therapy of breast carcinoma, the most common type of tumor affecting women. Four molecular biomarkers, namely progesterone receptor (PR), estrogen receptor (ER), antigen Ki67, and human epidermal growth factor receptor 2 (HER2), must be assessed under the IHC procedure to determine prognosis and to predict response to therapy. However, IHC scoring is based on subjective microscopic examination of tumor morphology and suffers from poor reproducibility, high subjectivity, and frequent incorrect scoring in low-score cases. In this paper, we present a deep learning-based, semi-supervised, fully automatic decision support system (DSS) for IHC scoring of invasive ductal carcinoma. Our system automatically detects the tumor region, removes artifacts, and scores according to the Allred standard. The system was developed using 3 million pathologist-annotated image patches from 300 slides, fifty thousand in-house cell annotations, and forty thousand pixel markings of HER2 membrane. We conducted multicentric trials at four centers with three different types of digital scanners, measuring percentage agreement with doctors, and achieved agreements of 95, 92, 88, and 82 percent for the Ki67, HER2, ER, and PR stain categories, respectively. In addition to overall accuracy, we found that in 5 percent of cases pathologists changed their score in favor of the algorithm's score after reviewing the detailed algorithmic analysis. Our approach could improve the accuracy of IHC scoring and subsequent therapy decisions, particularly where specialist expertise is unavailable. Our system is highly modular, and the proposed algorithm modules can be used to develop DSSs for other cancer types.
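For readers unfamiliar with the Allred standard mentioned in the abstract, the following is a minimal sketch of the conventional Allred scoring rule (a proportion score from 0 to 5 plus an intensity score from 0 to 3). It is not the authors' implementation: the function names are hypothetical, the inputs are assumed to come from some upstream cell-detection step, and the handling of values exactly on a bin boundary is an assumption, since conventions vary.

```python
def allred_proportion_score(positive_fraction: float) -> int:
    """Map the fraction of positively stained tumor cells to a 0-5 score."""
    if not 0.0 <= positive_fraction <= 1.0:
        raise ValueError("positive_fraction must lie in [0, 1]")
    if positive_fraction == 0.0:
        return 0          # no positive cells
    if positive_fraction < 0.01:
        return 1          # fewer than 1%
    if positive_fraction <= 0.10:
        return 2          # 1% to 10%
    if positive_fraction <= 1.0 / 3.0:
        return 3          # above 10% up to one third
    if positive_fraction <= 2.0 / 3.0:
        return 4          # above one third up to two thirds
    return 5              # more than two thirds


def allred_total_score(positive_fraction: float, intensity: int) -> int:
    """Sum the proportion score and the intensity score (0 none .. 3 strong)."""
    if intensity not in (0, 1, 2, 3):
        raise ValueError("intensity must be 0, 1, 2, or 3")
    proportion = allred_proportion_score(positive_fraction)
    if proportion == 0:
        return 0          # assumption: no positive cells means no staining to grade
    return proportion + intensity


# Example: half of the tumor cells stain positive at intermediate intensity.
example_score = allred_total_score(0.5, 2)
```

In practice the Allred score is applied to ER and PR stains; Ki67 and HER2 are usually reported with different rules, which this sketch does not cover.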

Multi-Stain Multi-Level Convolutional Network for Multi-Tissue Breast Cancer Image Segmentation

Jun 09, 2024

Abstract: Digital pathology and microscopy image analysis are widely employed in the segmentation of digitally scanned IHC slides, primarily to identify cancer and pinpoint regions of interest (ROI) indicative of tumor presence. However, current ROI segmentation models are either stain-specific or suffer from stain and scanner variance caused by different staining protocols or modalities across labs. In addition, tissues such as Ductal Carcinoma in Situ (DCIS) and acini are often classified as tumors because of their structural similarities and color compositions. In this paper, we propose a novel convolutional neural network (CNN) based multi-class tissue segmentation model for histopathology whole-slide images of breast tissue, which classifies tumors and segments other tissue regions such as ducts, acini, DCIS, squamous epithelium, blood vessels, and necrosis as separate classes. Our pixel-aligned non-linear merge across spatial resolutions gives the model both local and global fields of view for accurate detection of the various classes. The proposed model can also separate bad regions such as folds, artifacts, blurry regions, and bubbles from tissue regions using multi-level context from different resolutions of the WSI. Multi-phase iterative training with context-aware augmentation and increasing noise was used to efficiently train a generic multi-stain model with partial and noisy annotations from 513 slides. Our training pipeline used 12 million patches generated with context-aware augmentations, which made the model stain and scanner invariant across data sources. To test stain and scanner invariance, the model was evaluated on 23,000 patches from a completely new stain (Hematoxylin and Eosin), a completely new scanner (Motic), and a different lab. The mean IoU was 0.72, which is on par with model performance on the other data sources and scanners.
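The abstract reports a mean IoU (intersection over union) without defining it. The sketch below shows one common way to compute it from flattened per-pixel class labels. It is an illustration only: the function name is hypothetical, and skipping classes that are absent from both prediction and ground truth is an assumption, not necessarily the averaging convention the authors used.

```python
def mean_iou(predicted, target, num_classes):
    """Average per-class IoU over flattened per-pixel class labels."""
    if len(predicted) != len(target):
        raise ValueError("predicted and target must have the same length")
    per_class = []
    for cls in range(num_classes):
        # Pixels labelled cls in both maps, and in at least one map.
        intersection = sum(1 for p, t in zip(predicted, target) if p == cls and t == cls)
        union = sum(1 for p, t in zip(predicted, target) if p == cls or t == cls)
        if union > 0:  # assumption: ignore classes absent from both maps
            per_class.append(intersection / union)
    if not per_class:
        return float("nan")
    return sum(per_class) / len(per_class)


# Toy example with four pixels and two classes.
toy_score = mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)
```

In the toy example, class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12.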

FPCD: An Open Aerial VHR Dataset for Farm Pond Change Detection

Feb 28, 2023

Abstract: Change detection for aerial imagery involves locating and identifying changes associated with the areas of interest between co-registered bi-temporal or multi-temporal images of a geographical location. Farm ponds are man-made structures belonging to the category of minor irrigation structures used to collect surface run-off water for future irrigation purposes. Detecting farm ponds in aerial imagery and tracking their evolution over time helps land surveys analyze agricultural shifts, policy implementation, seasonal effects, and climate changes. In this paper, we introduce a publicly available object detection and instance segmentation (OD/IS) dataset for localizing farm ponds from aerial imagery. We also collected and annotated bi-temporal data over a time span of 14 years across 17 villages, resulting in a binary change detection dataset called the Farm Pond Change Detection Dataset (FPCD). We have benchmarked and analyzed the performance of various object detection and instance segmentation methods on our OD/IS dataset and of change detection methods on the FPCD dataset. The datasets are publicly accessible at https://huggingface.co/datasets/ctundia/FPCD

DEff-GAN: Diverse Attribute Transfer for Few-Shot Image Synthesis

Feb 28, 2023

Abstract: The need for large amounts of data makes training many GANs difficult. Data-efficient GANs must fit a generator's continuous target distribution to a limited discrete set of data samples, which is a difficult task. Single-image methods have focused on modeling the internal distribution of a single image and generating samples from it. While single-image methods can synthesize diverse image samples, they do not model multiple images or capture the relationships that may exist between two images. Given only a handful of images, we are interested in generating samples and exploiting the commonalities in the input images. In this work, we extend the single-image GAN method to model multiple images for sample synthesis. We modify the discriminator with an auxiliary classifier branch, which helps to generate a wide variety of samples and to classify the input labels. Our Data-Efficient GAN (DEff-GAN) generates excellent results when similarities and correspondences can be drawn between the input images or classes.

Deriving Surface Resistivity from Polarimetric SAR Data Using Dual-Input UNet

Jul 05, 2022

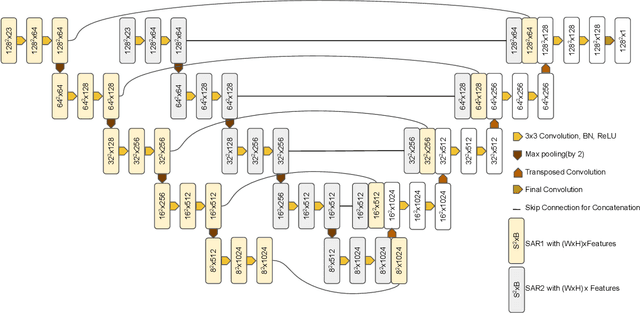

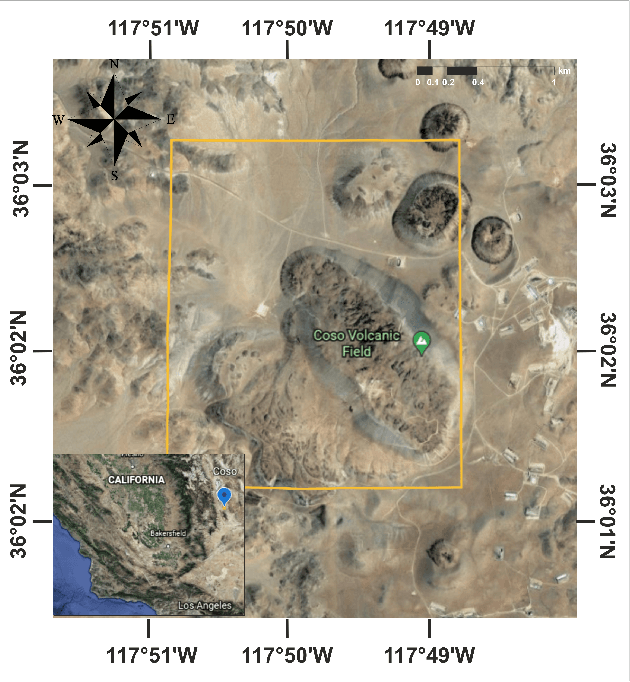

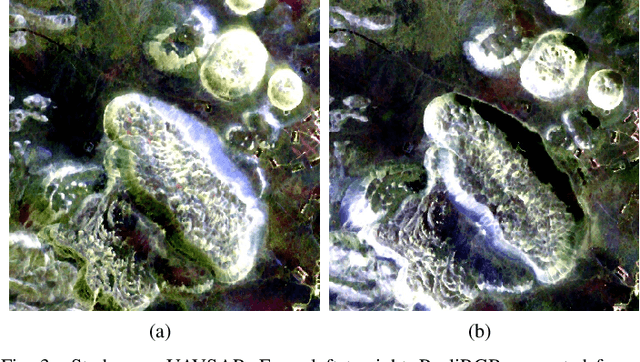

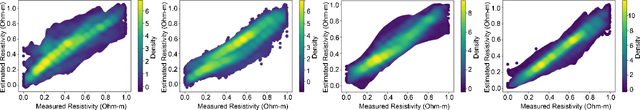

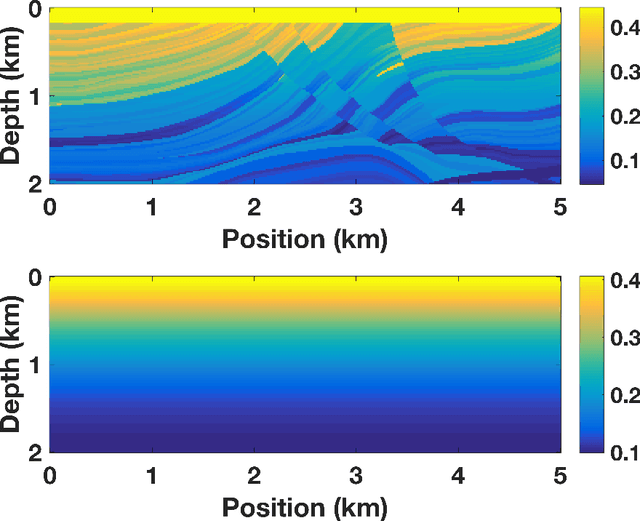

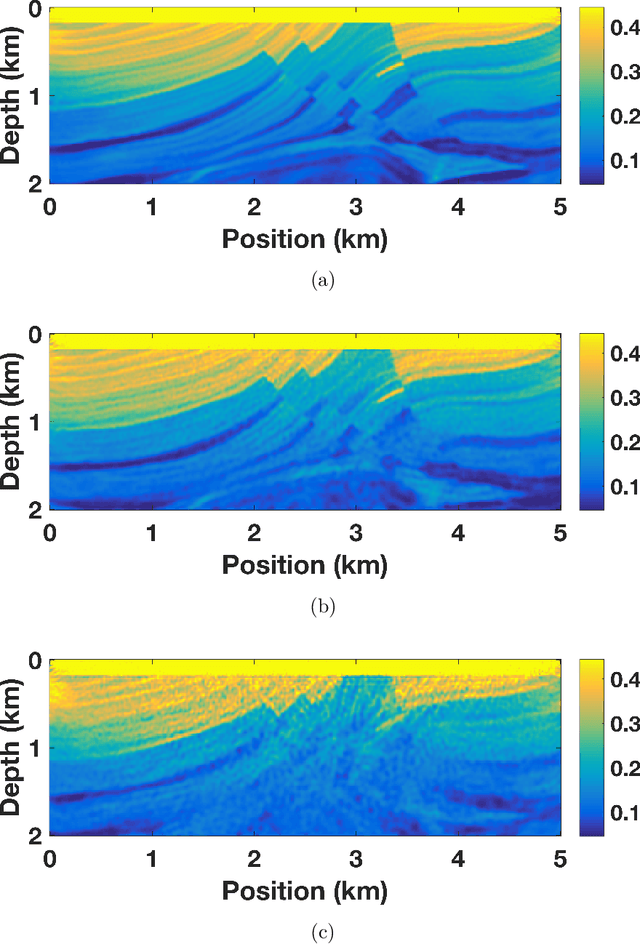

Abstract: Traditional survey methods for finding surface resistivity are time-consuming and labor-intensive. Very few studies have focused on finding resistivity/conductivity using remote sensing data and deep learning techniques. In this line of work, we assessed the correlation between surface resistivity and Synthetic Aperture Radar (SAR) data by applying various deep learning methods, and tested our hypothesis in the Coso Geothermal Area, USA. To detect resistivity, L-band full polarimetric SAR data acquired by UAVSAR were used, with MT (magnetotellurics) inverted resistivity data of the area as the ground truth. We conducted experiments to compare various deep learning architectures and suggest the use of the Dual Input UNet (DI-UNet) architecture. DI-UNet predicts resistivity from full polarimetric SAR data, promising a quick survey complement to the traditional method. Our proposed approach achieved improved results for mapping MT resistivity from SAR data.

The MIS Check-Dam Dataset for Object Detection and Instance Segmentation Tasks

Nov 30, 2021

Abstract: Deep learning has led to many recent advances in object detection and instance segmentation, among other computer vision tasks. These advances have led to wide application of deep learning-based methods and related methodologies in object detection tasks for satellite imagery. In this paper, we introduce MIS Check-Dam, a new dataset of check-dams from satellite imagery for building an automated system for the detection and mapping of check-dams, focusing on the importance of irrigation structures used for agriculture. We review some of the most recent object detection and instance segmentation methods and assess their performance on our new dataset. We evaluate several single-stage, two-stage, and attention-based methods under various network configurations and backbone architectures. The dataset and the pre-trained models are available at https://www.cse.iitb.ac.in/gramdrishti/.

Learning Unsupervised Cross-domain Image-to-Image Translation Using a Shared Discriminator

Feb 09, 2021

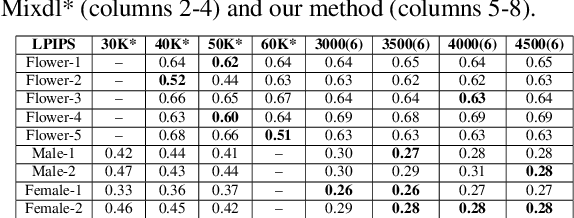

Abstract: Unsupervised image-to-image translation transforms images from a source domain into images in a target domain without using source-target image pairs. Promising results have been obtained for this problem in an adversarial setting using two independent GANs and attention mechanisms. We propose a new method that uses a single shared discriminator between the two GANs, which improves the overall efficacy. We assess the qualitative and quantitative results on image transfiguration, a cross-domain translation task, in a setting where the target domain shares similar semantics with the source domain. Our results indicate that even without adding attention mechanisms, our method performs on par with attention-based methods and generates images of comparable quality.

Basis Pursuit Denoise with Nonsmooth Constraints

Nov 28, 2018

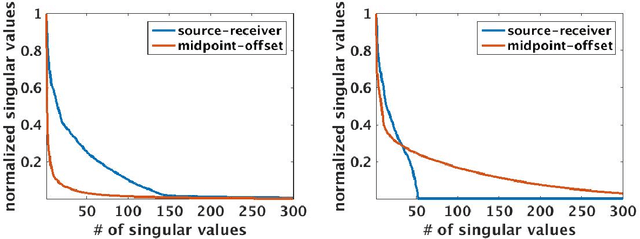

Abstract: Level-set optimization formulations with data-driven constraints minimize a regularization functional subject to matching observations to a given error level. These formulations are widely used, particularly for matrix completion and sparsity promotion in data interpolation and denoising. The misfit level is typically measured in the L2 norm or other smooth metrics. In this paper, we present a new flexible algorithmic framework that targets nonsmooth level-set constraints, including L1, Linf, and even L0 norms. These constraints give greater flexibility for modeling deviations in observation and denoising, and have a significant impact on the solution. Measuring error in the L1 and L0 norms makes the result more robust to large outliers, while matching many observations exactly. We demonstrate the approach for basis pursuit denoise (BPDN) problems as well as for extensions of BPDN to matrix factorization, with applications to interpolation and denoising of 5D seismic data. The new methods are particularly promising for seismic applications, where the amplitude in the data varies significantly, and measurement noise in low-amplitude regions can wreak havoc for standard Gaussian error models.
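As a reading aid, the formulation described in this abstract can be written out as follows. The first problem is the standard BPDN statement; the second is a sketch of the generalization the abstract describes, with the misfit measured by a possibly nonsmooth function. The symbols $A$, $b$, $x$, $\sigma$, $\phi$, and $\rho$ are notational assumptions and are not taken from the listing.

```latex
% Standard basis pursuit denoise: sparsity-promoting objective, smooth L2 misfit constraint
\min_{x} \; \|x\|_1 \quad \text{subject to} \quad \|Ax - b\|_2 \le \sigma

% Generalization sketched in the abstract: regularizer \phi, nonsmooth misfit \rho
\min_{x} \; \phi(x) \quad \text{subject to} \quad \rho(Ax - b) \le \sigma,
\qquad \rho \in \{\, \|\cdot\|_1,\; \|\cdot\|_\infty,\; \|\cdot\|_0 \,\}
```

Here $\sigma$ plays the role of the "given error level" and $\phi$ the "regularization functional" named in the abstract.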

Simultaneous shot inversion for nonuniform geometries using fast data interpolation

Apr 23, 2018

Abstract: Stochastic optimization is key to efficient inversion in PDE-constrained optimization. Using 'simultaneous shots', or random superposition of source terms, works very well in simple acquisition geometries where all sources see all receivers, but this rarely occurs in practice. We develop an approach that interpolates data to an ideal acquisition geometry while solving the inverse problem using simultaneous shots. The approach is formulated as a joint inverse problem, combining ideas from low-rank interpolation with full-waveform inversion. Results using synthetic experiments illustrate the flexibility and efficiency of the approach.
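The idea of "simultaneous shots" can be illustrated with a small sketch. It assumes a linear forward operator, represented here by a toy matrix standing in for a PDE solve, and random sign weights; both are assumptions for illustration, as are all function names. By linearity, simulating the superposed source once gives the same data as superposing the individually simulated shots, which is what makes the technique cheap when every source sees every receiver.

```python
import random


def matvec(matrix, vector):
    """Apply a dense matrix (list of rows) to a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]


def superpose(columns, weights):
    """Weighted sum of equally sized vectors: sum_i weights[i] * columns[i]."""
    length = len(columns[0])
    return [sum(w * col[i] for w, col in zip(weights, columns)) for i in range(length)]


def random_signs(count, rng):
    """Random +1/-1 weights (an assumed choice of random superposition)."""
    return [rng.choice((-1.0, 1.0)) for _ in range(count)]


rng = random.Random(0)
forward = [[2.0, 0.0, 1.0], [0.0, 3.0, -1.0]]   # toy linear forward operator
sources = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

# Sequential shots: one simulation per source.
shots = [matvec(forward, q) for q in sources]

# Simultaneous shot: one simulation of the randomly superposed source.
weights = random_signs(len(sources), rng)
super_source = superpose(sources, weights)
simulated = matvec(forward, super_source)

# Superposing the recorded shots with the same weights gives the same data.
super_shot = superpose(shots, weights)
```

When some source-receiver pairs are missing, the recorded shots cannot be superposed this way, which is the gap the abstract's interpolation step is meant to close.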

Beating level-set methods for 3D seismic data interpolation: a primal-dual alternating approach

Jul 09, 2016

Abstract: Acquisition cost is a crucial bottleneck for seismic workflows, and low-rank formulations for data interpolation allow practitioners to 'fill in' data volumes from critically subsampled data acquired in the field. The tremendous size of the data volumes required for seismic processing remains a major challenge for these techniques. We propose a new approach to solve residual-constrained formulations for interpolation. We represent the data volume using matrix factors, and build a block-coordinate algorithm with constrained convex subproblems that are solved with a primal-dual splitting scheme. The new approach is competitive with state-of-the-art level-set algorithms that interchange the roles of objectives and constraints. We use the new algorithm to successfully interpolate a large-scale 5D seismic data volume, generated from the geologically complex synthetic 3D Compass velocity model, where 80% of the data has been removed.
