Prasad Sudhakar

Deep Attention-guided Adaptive Subsampling

Oct 14, 2025

Abstract:Although deep neural networks have provided impressive gains in performance, these improvements often come at the cost of increased computational complexity and expense. In many cases, such as 3D volume or video classification tasks, not all slices or frames are necessary due to inherent redundancies. To address this issue, we propose a novel learnable subsampling framework that can be integrated into any neural network architecture. Subsampling, being a non-differentiable operation, poses significant challenges for direct adaptation into deep learning models. While some works have proposed solutions using the Gumbel-max trick to overcome the problem of non-differentiability, they fall short in a crucial aspect: they are only task-adaptive and not input-adaptive. Once the sampling mechanism is learned, it remains static and does not adjust to different inputs, making it unsuitable for real-world applications. To this end, we propose an attention-guided sampling module that adapts to inputs even during inference. This dynamic adaptation results in performance gains and reduces complexity in deep neural network models. We demonstrate the effectiveness of our method on 3D medical imaging datasets from MedMNIST3D as well as two ultrasound video datasets for classification tasks, one of them being a challenging in-house dataset collected under real-world clinical conditions.
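
The Gumbel-max trick referred to in the abstract can be illustrated with a small sampling sketch. This is not the authors' module: the function name `gumbel_topk` and the per-frame scores are hypothetical, and only the sampling step is shown. Training through such a sampler generally needs a relaxation or a straight-through estimator, which is omitted here. In an input-adaptive setting the scores would be computed from each input (for example by an attention module) rather than fixed.

```python
import math
import random

def gumbel_topk(logits, k, rng):
    """Sample k distinct indices without replacement via Gumbel top-k.

    Adding i.i.d. Gumbel(0, 1) noise to the logits and keeping the k largest
    perturbed values draws k indices without replacement, with probabilities
    governed by softmax(logits). With k = 1 this is the Gumbel-max trick.
    """
    perturbed = []
    for index, logit in enumerate(logits):
        # Clamp away from 0.0 so that log(u) is always defined.
        u = max(rng.random(), 1e-12)
        gumbel_noise = -math.log(-math.log(u))
        perturbed.append((logit + gumbel_noise, index))
    top = sorted(perturbed, reverse=True)[:k]
    return sorted(index for _, index in top)

# Hypothetical importance scores for 8 frames of one input (assumption).
frame_scores = [0.1, 2.0, 0.3, 1.5, -1.0, 0.8, 0.0, 2.2]
selected = gumbel_topk(frame_scores, k=3, rng=random.Random(0))
print(selected)  # three distinct frame indices, in ascending order
```

Because the scores are an argument rather than a learned constant, the same sampler gives a different selection distribution for every input, which is the distinction the abstract draws between task-adaptive and input-adaptive sampling.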

Label Sharing Incremental Learning Framework for Independent Multi-Label Segmentation Tasks

Nov 17, 2024

Abstract:In a setting where segmentation models have to be built for multiple datasets, each with its own corresponding label set, a straightforward way is to learn one model for every dataset and its labels. Alternatively, multi-task architectures with shared encoders and multiple segmentation heads, or shared weights with compound labels, can also be used. This work proposes a novel label sharing framework where a shared common label space is constructed and each of the individual label sets is systematically mapped to the common labels. This transforms multiple datasets with disparate label sets into a single large dataset with shared labels, and therefore all the segmentation tasks can be addressed by learning a single model. This eliminates the need for task-specific adaptations in network architectures and also results in parameter- and data-efficient models. Furthermore, the label sharing framework is naturally amenable to incremental learning, where segmentations for new datasets can be easily learnt. We experimentally validate our method on various medical image segmentation datasets, each involving multi-label segmentation. Furthermore, we demonstrate the efficacy of the proposed method in terms of performance and incremental learning ability vis-a-vis alternative methods.
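
The mapping of individual label sets onto a common label space can be pictured with a minimal sketch. The dataset names, anatomical labels, and the particular grouping below are hypothetical; the abstract does not say how the shared labels are assigned, so the assignment here is arbitrary and only illustrates the remapping step.

```python
# Hypothetical per-dataset label sets, each mapped into one shared label space.
# Which structures share an ID is an arbitrary choice made for illustration.
SHARED_LABEL_MAP = {
    "abdomen_ct": {"background": 0, "liver": 1, "spleen": 2},
    "knee_mri": {"background": 0, "femur": 1, "tibia": 2},
}

def to_shared_labels(dataset, mask):
    """Rewrite a 2-D mask of dataset-specific label names as shared label IDs."""
    mapping = SHARED_LABEL_MAP[dataset]
    return [[mapping[name] for name in row] for row in mask]

# Two tiny masks from different datasets become samples of one pooled dataset.
abdomen_mask = [["background", "liver"], ["spleen", "liver"]]
knee_mask = [["background", "femur"], ["tibia", "tibia"]]

pooled = [
    to_shared_labels("abdomen_ct", abdomen_mask),
    to_shared_labels("knee_mri", knee_mask),
]
print(pooled)  # [[[0, 1], [2, 1]], [[0, 1], [2, 2]]]
```

Under this scheme, adding a new dataset only requires a new entry in the mapping, which is one way to read the abstract's remark about incremental learning.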

Task-driven Prompt Evolution for Foundation Models

Oct 26, 2023

Abstract:Promptable foundation models, particularly Segment Anything Model (SAM), have emerged as a promising alternative to the traditional task-specific supervised learning for image segmentation. However, many evaluation studies have found their performance on medical imaging modalities to be underwhelming compared to conventional deep learning methods. In the world of large pre-trained language and vision-language models, learning prompts from downstream tasks has achieved considerable success in improving performance. In this work, we propose a plug-and-play Prompt Optimization Technique for foundation models like SAM (SAMPOT) that utilizes the downstream segmentation task to optimize the human-provided prompt to obtain improved performance. We demonstrate the utility of SAMPOT on lung segmentation in chest X-ray images and obtain an improvement on a significant number of cases ($\sim75\%$) over human-provided initial prompts. We hope this work will lead to further investigations in the nascent field of automatic visual prompt-tuning.

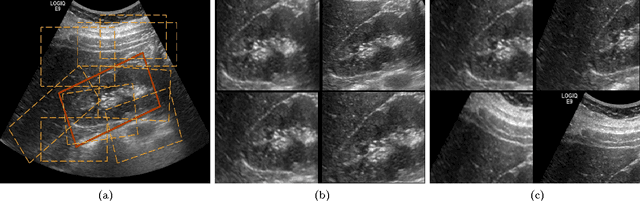

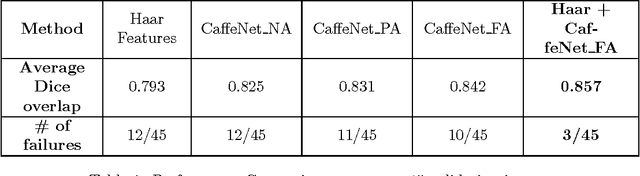

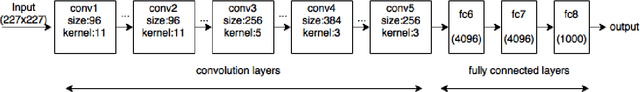

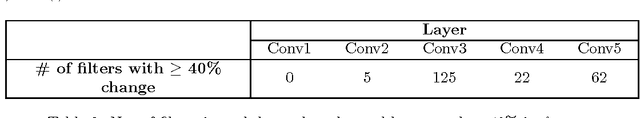

Understanding the Mechanisms of Deep Transfer Learning for Medical Images

Apr 20, 2017

Abstract:The ability to automatically learn task-specific feature representations has led to the huge success of deep learning methods. When training data is scarce, such as in medical imaging problems, transfer learning has been very effective. In this paper, we systematically investigate the process of transferring a Convolutional Neural Network, trained on ImageNet images to perform image classification, to the kidney detection problem in ultrasound images. We study how the detection performance depends on the extent of transfer. We show that a transferred and tuned CNN can outperform a state-of-the-art feature-engineered pipeline and that a hybridization of these two techniques achieves 20% higher performance. We also investigate the evolution of intermediate response images from our network. Finally, we compare these responses to state-of-the-art image processing filters in order to gain greater insight into how transfer learning is able to effectively manage widely varying imaging regimes.

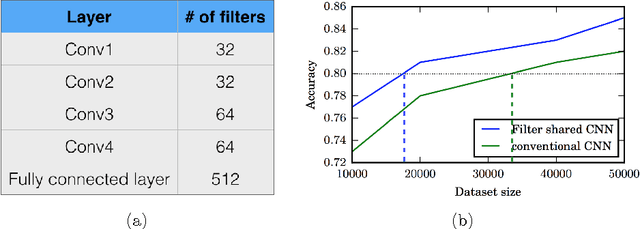

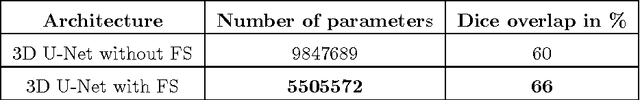

Filter sharing: Efficient learning of parameters for volumetric convolutions

Dec 08, 2016

Abstract:Typical convolutional neural networks (CNNs) have several millions of parameters and require a large amount of annotated data to train them. In medical applications where training data is hard to come by, these sophisticated machine learning models are difficult to train. In this paper, we propose a method to reduce the inherent complexity of CNNs during training by exploiting the significant redundancy that is observed in the learnt CNN filters. Our method relies on finding a small set of filters and mixing coefficients to derive every filter in each convolutional layer at the time of training itself, thereby reducing the number of parameters to be trained. We consider the problem of 3D lung nodule segmentation in CT images and demonstrate the effectiveness of our method in achieving good results with only a few training examples.
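
The idea of deriving every filter from a small set of filters and mixing coefficients can be sketched as a linear combination, together with the resulting parameter count. This is an illustrative reading of the abstract, not the paper's implementation: the function names, the flattened filter layout, and the layer sizes (64 filters, 8 shared filters, 3x3x3 kernels, one input channel) are assumptions.

```python
def mix_filters(basis, coeffs):
    """Derive each filter as a linear combination of shared basis filters.

    basis:  list of flattened basis filters (each a list of weights)
    coeffs: one row of mixing coefficients per derived filter
    """
    n_weights = len(basis[0])
    return [
        [sum(c * b[w] for c, b in zip(row, basis)) for w in range(n_weights)]
        for row in coeffs
    ]

def parameter_counts(n_filters, n_basis, weights_per_filter):
    """Trainable parameters with independent filters vs. shared filters."""
    independent = n_filters * weights_per_filter
    shared = n_basis * weights_per_filter + n_filters * n_basis
    return independent, shared

# Two toy basis filters (flattened to 4 weights) mixed into three filters.
basis = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
coeffs = [[1.0, 0.0], [0.5, 0.5], [2.0, -1.0]]
filters = mix_filters(basis, coeffs)
print(filters)

# Assumed layer: 64 filters of size 3x3x3 built from 8 shared filters.
print(parameter_counts(64, 8, 27))  # (1728, 728)
```

In this toy layer only the basis weights and the mixing coefficients would be trained, so the count drops from 1728 to 728 parameters; the saving grows as the number of shared filters shrinks relative to the number of derived filters.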

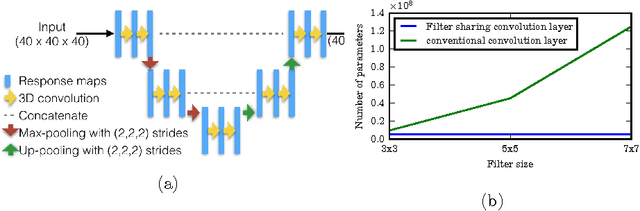

Compressive Imaging and Characterization of Sparse Light Deflection Maps

Jul 14, 2015

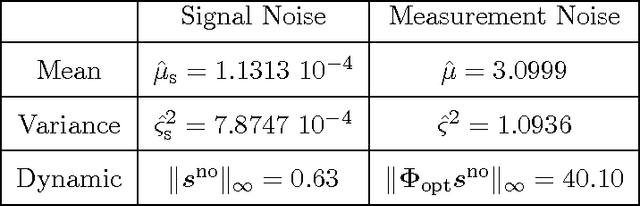

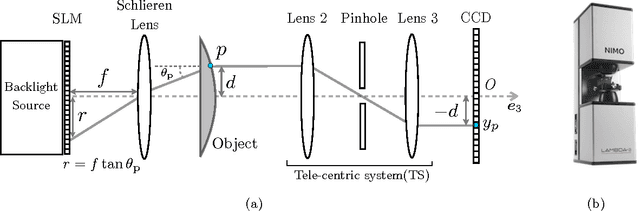

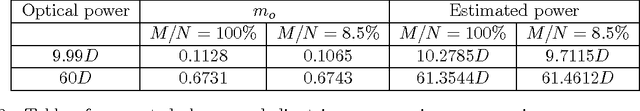

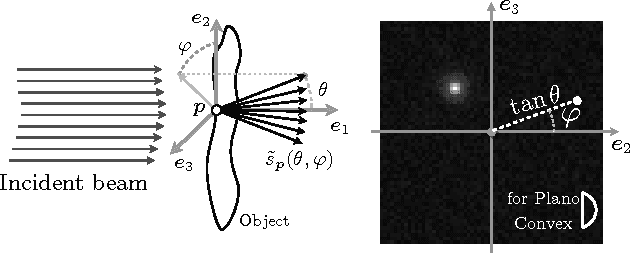

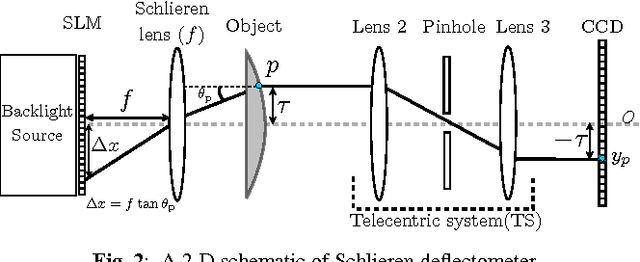

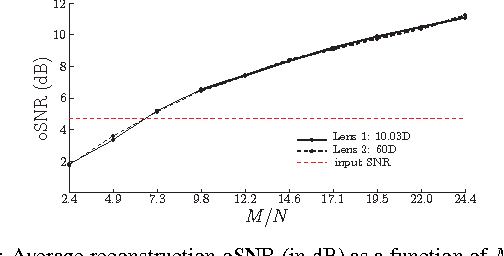

Abstract:Light rays incident on a transparent object of uniform refractive index undergo deflections, which uniquely characterize the surface geometry of the object. Associated with each point on the surface is a deflection map (or spectrum) which describes the pattern of deflections in various directions. This article presents a novel method to efficiently acquire and reconstruct sparse deflection spectra induced by smooth object surfaces. To this end, we leverage the framework of Compressed Sensing (CS) in a particular implementation of a schlieren deflectometer, i.e., an optical system providing linear measurements of deflection spectra with programmable spatial light modulation patterns. We design those modulation patterns on the principle of spread spectrum CS for reducing the number of observations. The ability of our device to simultaneously observe the deflection spectra on a dense discretization of the object surface is related to a Multiple Measurement Vector (MMV) model. This scheme allows us to estimate both the noise power and the instrumental point spread function. We formulate the spectrum reconstruction task as solving a linear inverse problem regularized by an analysis sparsity prior using a translation-invariant wavelet frame. Our results demonstrate the capability and advantages of using a CS-based approach for deflectometric imaging on both simulated data and experimental deflectometric data. Finally, the paper presents an extension of our method showing how we can extract the main deflection direction at each point of the object surface from a few compressive measurements, without needing any costly reconstruction procedures. This compressive characterization is then confirmed with experimental results on simple plano-convex and multifocal intra-ocular lenses, studying the evolution of the main deflection as a function of the object point location.

Compressive Schlieren Deflectometry

Dec 03, 2012

Abstract:Schlieren deflectometry aims at characterizing the deflections undergone by refracted incident light rays at any surface point of a transparent object. For smooth surfaces, each surface location is actually associated with a sparse deflection map (or spectrum). This paper presents a novel method to compressively acquire and reconstruct such spectra. This is achieved by altering the way deflection information is captured in a common Schlieren Deflectometer, i.e., the deflection spectra are indirectly observed by the principle of spread spectrum compressed sensing. These observations are realized optically using a 2-D Spatial Light Modulator (SLM) adjusted to the corresponding sensing basis and whose modulations encode the light deviation subsequently recorded by a CCD camera. The efficiency of this approach is demonstrated experimentally on the observation of a few test objects. Further, using a simple parametrization of the deflection spectra, we show that relevant key parameters can be directly computed using the measurements, avoiding full reconstruction.
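
One common numerical formulation of spread spectrum compressed sensing is to multiply the signal by a random +/-1 sequence and then keep a few randomly chosen coefficients in a sensing basis. The toy below follows that formulation in 1-D. It is a sketch under assumptions, not the optical system described above: the abstract does not name the sensing basis, so the Fourier basis, the signal length, and all function names are illustrative choices, and no reconstruction step is shown.

```python
import cmath
import random

def dft(signal):
    """Plain O(n^2) discrete Fourier transform, adequate for a toy example."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * f * t / n) for t in range(n))
        for f in range(n)
    ]

def spread_spectrum_measure(x, modulation, kept_frequencies):
    """Modulate x by a +/-1 sequence, then keep selected Fourier coefficients."""
    modulated = [m * v for m, v in zip(modulation, x)]
    spectrum = dft(modulated)
    return [spectrum[f] for f in kept_frequencies]

rng = random.Random(0)
n, m = 16, 6

# Sparse toy "deflection spectrum": a single nonzero entry (assumption).
x = [0.0] * n
x[3] = 1.0

modulation = [rng.choice([-1.0, 1.0]) for _ in range(n)]  # random sign pattern
kept = sorted(rng.sample(range(n), m))                    # random frequency subset

y = spread_spectrum_measure(x, modulation, kept)
print(len(y))  # 6 measurements instead of 16 samples
```

The sign modulation spreads the energy of the signal across all frequencies, so each retained coefficient carries information about it; recovering the spectrum from `y` would then require a sparsity-regularized solver, which is outside the scope of this sketch.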
