Laurent Jacques

Random Features for Grassmannian Kernels

Apr 30, 2025

Abstract: The Grassmannian manifold G(k, n) serves as a fundamental tool in signal processing, computer vision, and machine learning, where problems often involve classifying, clustering, or comparing subspaces. In this work, we propose a sketching-based approach to approximate Grassmannian kernels using random projections. We introduce three variations of kernel approximation, including two that rely on binarised sketches, offering substantial memory gains. We establish theoretical properties of our method in the special case of G(1, n) and extend it to general G(k, n). Experimental validation demonstrates that our sketched kernels closely match the performance of standard Grassmannian kernels while avoiding the need to compute or store the full kernel matrix. Our approach enables scalable Grassmannian-based methods for large-scale applications in machine learning and pattern recognition.
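As an illustration of the sketching idea, the snippet below approximates the projection kernel $k(X, Y) = \|X^\top Y\|_F^2$, one standard Grassmannian kernel, with random rank-one projections of the projectors $XX^\top$ and $YY^\top$. This is a minimal sketch under our own assumptions, not the construction of the paper: the feature map, the Gaussian sketching vectors, and the names `random_subspace` and `rop_features` are hypothetical, and the binarised variants mentioned in the abstract are not shown.

```python
import numpy as np

def random_subspace(n, k, rng):
    """Orthonormal basis (n x k) of a random k-dimensional subspace of R^n."""
    q, _ = np.linalg.qr(rng.standard_normal((n, k)))
    return q

def rop_features(X, A, B):
    """Features z_i = a_i^T (X X^T) b_i, with a_i and b_i the rows of A and B."""
    return np.sum((A @ X) * (B @ X), axis=1)

rng = np.random.default_rng(0)
n, k, m = 10, 2, 50_000          # ambient dimension, subspace dimension, sketch size
X = random_subspace(n, k, rng)
Y = random_subspace(n, k, rng)
A = rng.standard_normal((m, n))  # hypothetical Gaussian sketching vectors
B = rng.standard_normal((m, n))

# Exact projection kernel versus its Monte Carlo estimate from the sketches.
exact = np.linalg.norm(X.T @ Y, "fro") ** 2
approx = np.mean(rop_features(X, A, B) * rop_features(Y, A, B))
print(f"exact={exact:.3f}  sketched={approx:.3f}")
```

Because $\mathbb{E}[b b^\top] = I$ for standard Gaussian vectors, the product of two features has expectation $\mathrm{tr}(XX^\top YY^\top)$, which equals the kernel. Only the $m$ features per subspace need to be stored, never the full kernel matrix.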

MROP: Modulated Rank-One Projections for compressive radio interferometric imaging

Apr 25, 2025

Abstract: The emerging generation of radio-interferometric (RI) arrays is set to form images of the sky with a new regime of sensitivity and resolution. This implies a significant increase in visibility data volumes, scaling as $\mathcal{O}(Q^{2}B)$ for $Q$ antennas and $B$ short-time integration intervals (or batches), calling for efficient data dimensionality reduction techniques. This paper proposes a new approach to data compression during acquisition, coined modulated rank-one projection (MROP). MROP compresses the $Q\times Q$ batchwise covariance matrix into a smaller number $P$ of random rank-one projections and compresses across time by trading $B$ for a smaller number $M$ of random modulations of the ROP measurement vectors. Firstly, we introduce a dual perspective on the MROP acquisition, which can be understood either as random beamforming or as a post-correlation compression. Secondly, we analyse the noise statistics of MROPs and demonstrate that the random projections induce a uniform noise level across measurements independently of the visibility-weighting scheme used. Thirdly, we propose a detailed analysis of the memory and computational cost requirements across the data acquisition and image reconstruction stages, with comparison to state-of-the-art dimensionality reduction approaches. Finally, the MROP model is validated in simulation for monochromatic intensity imaging, with comparison to the classical and baseline-dependent averaging (BDA) models, and using the uSARA optimisation algorithm for image formation. An extensive experimental setup is considered, with ground-truth images containing diffuse and faint emission and spanning a wide variety of dynamic ranges, and for a range of $uv$-coverages corresponding to VLA and MeerKAT observations.
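The dual perspective rests on a simple identity, which the sketch below checks numerically for a single batch: projecting the sample covariance $C$ onto a rank-one matrix, $\alpha^H C \beta$, gives the same number as correlating the two beamformed streams $\alpha^H x_t$ and $\beta^H x_t$. The antenna count, the number of samples, and the complex Gaussian sketching vectors are illustrative assumptions; the temporal modulations and the imaging model of the paper are not reproduced.

```python
import numpy as np

rng = np.random.default_rng(1)
Q, T = 8, 256  # hypothetical numbers of antennas and time samples in one batch

def complex_gaussian(*shape):
    """Standard circular complex Gaussian samples of the given shape."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

x = complex_gaussian(Q, T)   # antenna signals, one column per time sample
alpha = complex_gaussian(Q)  # hypothetical sketching vectors
beta = complex_gaussian(Q)

# Post-correlation view: form the Q x Q sample covariance, then project it.
C = x @ x.conj().T / T
rop_post = alpha.conj() @ C @ beta

# Beamforming view: combine the antennas first, then correlate two scalar streams.
u = alpha.conj() @ x         # alpha^H x_t for every t
v = beta.conj() @ x          # beta^H x_t for every t
rop_beam = np.mean(u * v.conj())

print(np.allclose(rop_post, rop_beam))
```

The beamforming route never forms the $Q \times Q$ matrix, which is where the saving during acquisition would come from in this toy setting.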

Herglotz-NET: Implicit Neural Representation of Spherical Data with Harmonic Positional Encoding

Feb 20, 2025

Abstract: Representing and processing data in spherical domains presents unique challenges, primarily due to the curvature of the domain, which complicates the application of classical Euclidean techniques. Implicit neural representations (INRs) have emerged as a promising alternative for high-fidelity data representation; however, to effectively handle spherical domains, these methods must be adapted to the inherent geometry of the sphere to maintain both accuracy and stability. In this context, we propose Herglotz-NET (HNET), a novel INR architecture that employs a harmonic positional encoding based on complex Herglotz mappings. This encoding yields a well-posed representation on the sphere with interpretable and robust spectral properties. Moreover, we present a unified expressivity analysis showing that any spherical-based INR satisfying a mild condition exhibits a predictable spectral expansion that scales with network depth. Our results establish HNET as a scalable and flexible framework for accurate modeling of spherical data.

Towards Generative Ray Path Sampling for Faster Point-to-Point Ray Tracing

Oct 31, 2024

Abstract: Radio propagation modeling is essential in telecommunication research, as radio channels result from complex interactions with environmental objects. Recently, Machine Learning has been attracting attention as a potential alternative to computationally demanding tools, like Ray Tracing, which can model these interactions in detail. However, existing Machine Learning approaches often attempt to directly learn specific channel characteristics, such as the coverage map, making them highly specific to the frequency and material properties and unable to fully capture the underlying propagation mechanisms. Hence, Ray Tracing, particularly the Point-to-Point variant, remains popular to accurately identify all possible paths between transmitter and receiver nodes. Still, path identification is computationally intensive because the number of paths to be tested grows exponentially while only a small fraction is valid. In this paper, we propose a Machine Learning-aided Ray Tracing approach to efficiently sample potential ray paths, significantly reducing the computational load while maintaining high accuracy. Our model dynamically learns to prioritize potentially valid paths among all possible paths and scales linearly with scene complexity. Unlike recent alternatives, our approach is invariant to translation, scaling, or rotation of the geometry, and avoids dependency on specific environment characteristics.

Comparing Differentiable and Dynamic Ray Tracing: Introducing the Multipath Lifetime Map

Oct 21, 2024

Abstract: With the increasing presence of dynamic scenarios, such as Vehicle-to-Vehicle communications, radio propagation modeling tools must adapt to the rapidly changing nature of the radio channel. Recently, both Differentiable and Dynamic Ray Tracing frameworks have emerged to address these challenges. However, there is often confusion about how these approaches differ and which one should be used in specific contexts. In this paper, we provide an overview of these two techniques and a comparative analysis against two state-of-the-art tools: 3DSCAT from UniBo and Sionna from NVIDIA. To provide a more precise characterization of the scope of these methods, we introduce a novel simulation-based metric, the Multipath Lifetime Map, which enables the evaluation of spatial and temporal coherence in radio channels based only on the geometrical description of the environment. Finally, our metrics are evaluated on a classic urban street canyon scenario, yielding similar results to those obtained from measurement campaigns.

UNSURE: Unknown Noise level Stein's Unbiased Risk Estimator

Sep 03, 2024

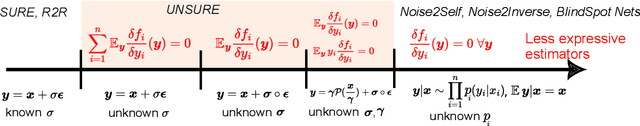

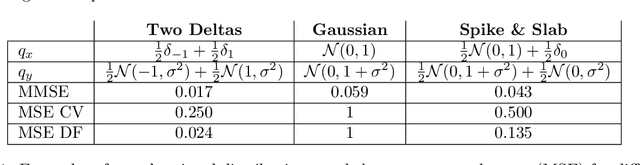

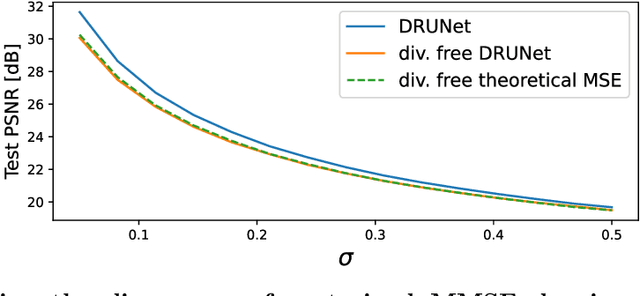

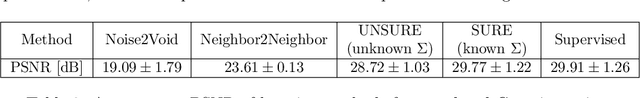

Abstract: Recently, many self-supervised learning methods for image reconstruction have been proposed that can learn from noisy data alone, bypassing the need for ground-truth references. Most existing methods cluster around two classes: i) Noise2Self and similar cross-validation methods that require very mild knowledge about the noise distribution, and ii) Stein's Unbiased Risk Estimator (SURE) and similar approaches that assume full knowledge of the distribution. The first class of methods is often suboptimal compared to supervised learning, and the second class is often impractical, as the noise level is generally unknown in real-world applications. In this paper, we provide a theoretical framework that characterizes this expressivity-robustness trade-off and propose a new approach based on SURE that, unlike standard SURE, does not require knowledge of the noise level. Through a series of experiments, we show that the proposed estimator outperforms other existing self-supervised methods on various imaging inverse problems.
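For reference, the classical SURE that the abstract contrasts with can be written, for i.i.d. Gaussian noise of known standard deviation $\sigma$ and a denoiser $f$ acting on $y \in \mathbb{R}^n$, as $\frac{1}{n}\|f(y)-y\|^2 - \sigma^2 + \frac{2\sigma^2}{n}\,\mathrm{div} f(y)$. The sketch below evaluates it for a toy linear denoiser $f(y) = a\,y$, whose divergence is $a n$, and compares it with the true mean squared error. It illustrates standard SURE only (note the explicit dependence on `sigma`), not the UNSURE estimator proposed in the paper; the signal, the value of `a`, and the function name are assumptions.

```python
import numpy as np

def sure_linear(y, a, sigma):
    """Classical SURE for f(y) = a * y under i.i.d. Gaussian noise N(0, sigma^2)."""
    n = y.size
    residual = np.sum((a * y - y) ** 2) / n
    divergence = a * n  # divergence of the map y -> a * y
    return residual - sigma**2 + 2 * sigma**2 * divergence / n

rng = np.random.default_rng(2)
n, sigma, a = 100_000, 0.5, 0.8
x = rng.standard_normal(n)              # toy ground truth (never seen by SURE)
y = x + sigma * rng.standard_normal(n)  # noisy observation

mse = np.mean((a * y - x) ** 2)         # requires the ground truth
sure = sure_linear(y, a, sigma)         # requires only y and sigma
print(f"true MSE={mse:.4f}  SURE={sure:.4f}")
```

The two numbers agree up to sampling fluctuations, but only because `sigma` was supplied; this is the requirement the abstract describes as impractical when the noise level is unknown.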

Flattened one-bit stochastic gradient descent: compressed distributed optimization with controlled variance

May 17, 2024

Abstract: We propose a novel algorithm for distributed stochastic gradient descent (SGD) with compressed gradient communication in the parameter-server framework. Our gradient compression technique, named flattened one-bit stochastic gradient descent (FO-SGD), relies on two simple algorithmic ideas: (i) a one-bit quantization procedure leveraging the technique of dithering, and (ii) a randomized fast Walsh-Hadamard transform to flatten the stochastic gradient before quantization. As a result, the approximation of the true gradient in this scheme is biased, but it prevents commonly encountered algorithmic problems, such as exploding variance in the one-bit compression regime, deterioration of performance in the case of sparse gradients, and restrictive assumptions on the distribution of the stochastic gradients. In fact, we show SGD-like convergence guarantees under mild conditions. The compression technique can be used in both directions of worker-server communication, therefore admitting distributed optimization with full communication compression.
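The two ingredients can be sketched as follows: a randomized Walsh-Hadamard transform spreads the energy of a gradient across all coordinates, and a dithered one-bit quantizer then keeps a single sign per coordinate. This is a minimal illustration under our own assumptions, not the FO-SGD algorithm itself: the uniform dither on $[-\lambda, \lambda]$, the choice of $\lambda$ as the largest flattened magnitude, and all function names are hypothetical, and the vector length is assumed to be a power of two.

```python
import numpy as np

def fwht(x):
    """Orthonormal fast Walsh-Hadamard transform (length must be a power of two)."""
    x = np.asarray(x, dtype=float).copy()
    n = x.size
    h = 1
    while h < n:
        x = x.reshape(-1, 2, h)  # pair up blocks of length h
        x = np.stack((x[:, 0] + x[:, 1], x[:, 0] - x[:, 1]), axis=1)
        h *= 2
    return x.reshape(n) / np.sqrt(n)

def flatten(g, signs):
    """Randomized transform: random sign flips followed by the Hadamard transform."""
    return fwht(signs * g)

def unflatten(z, signs):
    """Inverse of flatten (the orthonormal Hadamard transform is its own inverse)."""
    return signs * fwht(z)

def one_bit_dithered(z, lam, rng):
    """One bit per coordinate: lam * sign(z + tau), with tau uniform on [-lam, lam]."""
    tau = rng.uniform(-lam, lam, size=z.shape)
    return np.where(z + tau >= 0, lam, -lam)

rng = np.random.default_rng(3)
n = 1024
g = np.zeros(n)
g[:4] = [5.0, -3.0, 2.0, 1.0]            # sparse toy "gradient"
signs = rng.choice([-1.0, 1.0], size=n)  # shared random sign pattern

z = flatten(g, signs)
lam = np.max(np.abs(z))                  # hypothetical choice of the dither range
avg = np.mean(
    [unflatten(one_bit_dithered(z, lam, rng), signs) for _ in range(2000)], axis=0
)
print("peak magnitude before / after flattening:", np.max(np.abs(g)), lam)
print("max error of the averaged reconstruction:", np.max(np.abs(avg - g)))
```

In this toy setting the quantizer satisfies $\mathbb{E}[q_j] = z_j$ whenever $|z_j| \le \lambda$, and flattening shrinks the peak magnitude, so a much smaller $\lambda$ (and hence a smaller quantization variance) suffices for the sparse input. The abstract states that the full scheme yields a biased gradient approximation, so the sketch should not be read as reproducing its analysis.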

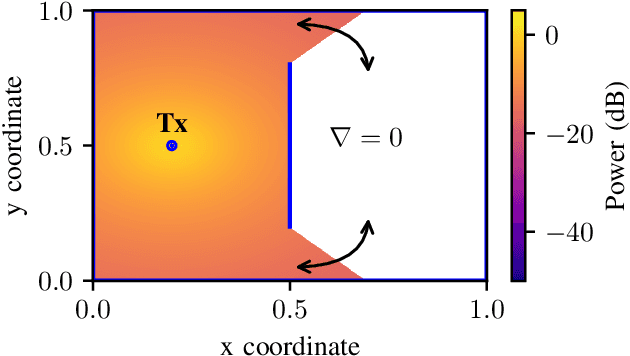

Fully Differentiable Ray Tracing via Discontinuity Smoothing for Radio Network Optimization

Jan 22, 2024

Abstract: Recently, Differentiable Ray Tracing has been successfully applied in the field of wireless communications for learning radio materials or optimizing the transmitter orientation. However, in the context of gradient-based optimization, obstruction of the rays by objects can cause sudden variations in the related objective functions or create entire regions where the gradient is zero. As these issues can dramatically impact convergence, this paper presents a novel Ray Tracing framework that is fully differentiable with respect to any scene parameter and also provides a loss function that is continuous everywhere, thanks to specific local smoothing techniques. Previously non-continuous functions are replaced by a smoothing function that can be exchanged with any function having similar properties. This function is also configurable via a parameter that determines how smooth the approximation should be. The present method is applied to a basic one-transmitter-multi-receiver scenario, and shows that it can successfully find the optimal solution. As a complementary resource, a 2D Python library, DiffeRT2d, is provided in Open Access, with examples and comprehensive documentation.
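To see why smoothing helps, consider a visibility indicator that jumps from 0 to 1 when a ray clears an obstacle: its derivative is zero almost everywhere, so gradient descent receives no signal. The sketch below replaces the hard step with a sigmoid whose sharpness is set by a parameter `alpha`. The sigmoid is only one possible choice and is assumed here for illustration; the function names are hypothetical and this is not the DiffeRT2d API.

```python
import numpy as np

def hard_step(x):
    """Discontinuous indicator: 1 where x > 0, else 0 (zero gradient almost everywhere)."""
    return np.where(x > 0, 1.0, 0.0)

def smooth_step(x, alpha):
    """Sigmoid approximation of the step; larger alpha gives a sharper transition."""
    return 1.0 / (1.0 + np.exp(-alpha * x))

def smooth_step_grad(x, alpha):
    """Analytic derivative of smooth_step with respect to x."""
    s = smooth_step(x, alpha)
    return alpha * s * (1.0 - s)

x = np.array([-1.0, -0.1, 0.0, 0.1, 1.0])
print("hard step:", hard_step(x))
for alpha in (1.0, 10.0, 100.0):
    values = np.round(smooth_step(x, alpha), 3)
    grads = np.round(smooth_step_grad(x, alpha), 3)
    print(f"alpha={alpha}: values={values} grads={grads}")
```

Small values of `alpha` give a gradient that is informative far from the discontinuity at the cost of a looser approximation, while large values recover the hard step and its vanishing gradient.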

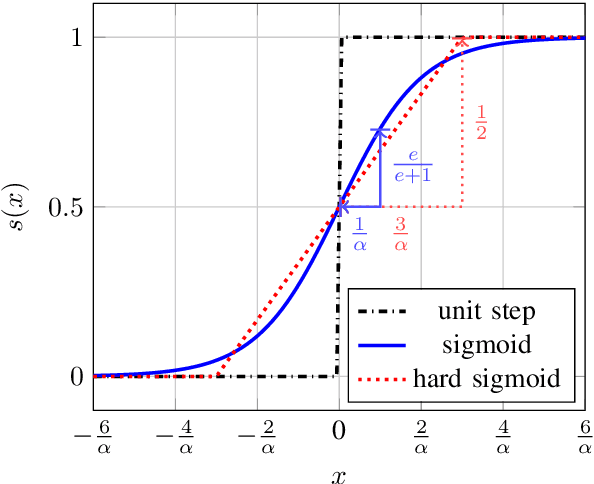

Spintronics for image recognition: performance benchmarking via ultrafast data-driven simulations

Aug 10, 2023

Abstract: We present a demonstration of image classification using a hardware-based echo-state network (ESN) that relies on spintronic nanostructures known as vortex-based spin-torque oscillators (STVOs). Our network is realized using a single STVO multiplexed in time. To circumvent the challenges associated with repeated experimental manipulation of such a nanostructured system, we employ an ultrafast data-driven simulation framework called the data-driven Thiele equation approach (DD-TEA) to simulate the STVO dynamics. We use this approach to efficiently develop, optimize and test an STVO-based ESN for image classification using the MNIST dataset. We showcase the versatility of our solution by successfully applying it to solve classification challenges with the EMNIST-letters and Fashion MNIST datasets. Through our simulations, we determine that within a large ESN the results obtained using the STVO dynamics as an activation function are comparable to the ones obtained with other conventional nonlinear activation functions like the ReLU and the sigmoid. While achieving state-of-the-art accuracy levels on the MNIST dataset, our model's performance on EMNIST-letters and Fashion MNIST is lower due to the relative simplicity of the system architecture and the increased complexity of the tasks. We expect that the DD-TEA framework will enable the exploration of more specialized neural architectures, ultimately leading to improved classification accuracy. This approach also holds promise for investigating and developing dedicated learning rules to further enhance classification performance.

Grid Hopping: Accelerating Direct Estimation Algorithms for Multistatic FMCW Radar

Jul 31, 2023

Abstract: This paper presents a novel signal processing technique, coined grid hopping, as well as an active multistatic Frequency-Modulated Continuous Wave (FMCW) radar system designed to evaluate its performance. The design of grid hopping is motivated by two existing estimation algorithms. The first one is the indirect algorithm estimating ranges and speeds separately for each received signal, before combining them to obtain location and velocity estimates. The second one is the direct method jointly processing the received signals to directly estimate target location and velocity. While the direct method is known to provide better performance, it is seldom used because of its high computation time. Our grid hopping approach, which relies on interpolation strategies, offers a reduced computation time while its performance stays on par with the direct method. We validate the efficiency of this technique on actual FMCW radar measurements and compare it with other methods.
