Hariharan Ravishankar

SonoSAMTrack -- Segment and Track Anything on Ultrasound Images

Nov 07, 2023

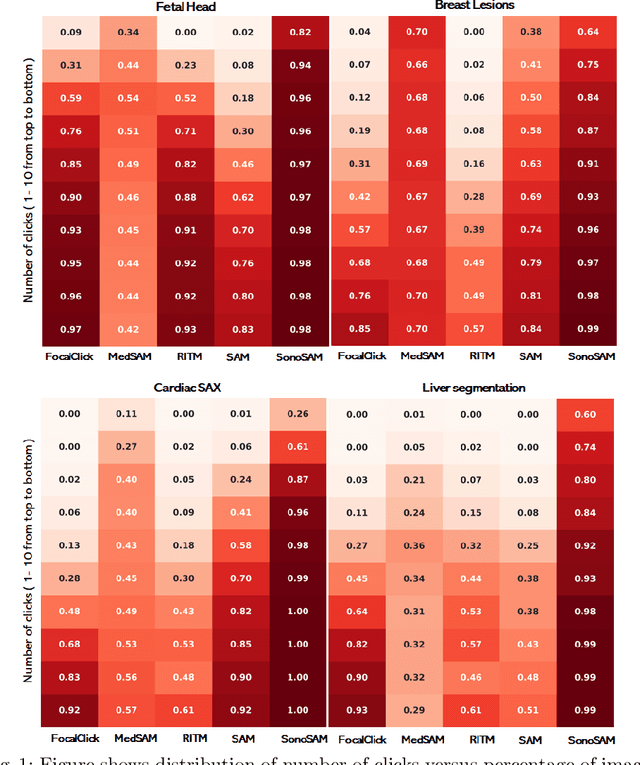

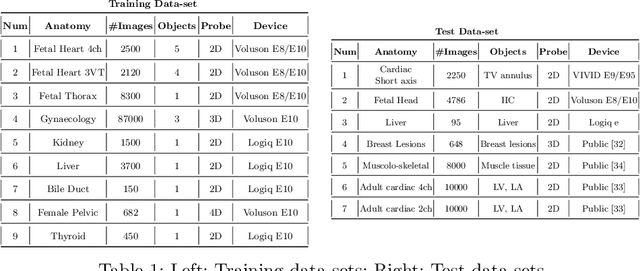

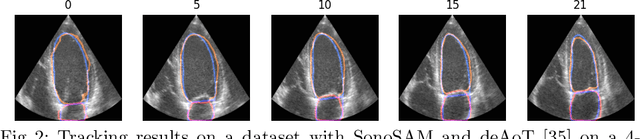

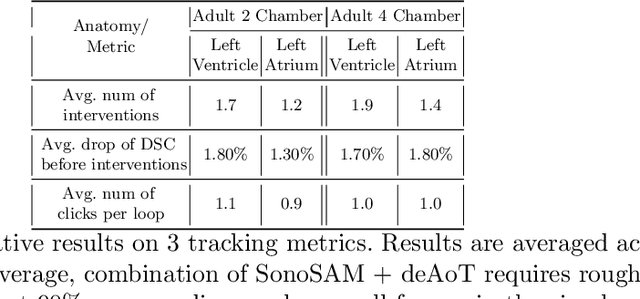

Abstract: In this paper, we present SonoSAM, a promptable foundational model for segmenting objects of interest on ultrasound images, followed by a state-of-the-art tracking model to perform segmentations on 2D+t and 3D ultrasound datasets. Fine-tuned exclusively on a rich, diverse set of objects from $\approx200$k ultrasound image-mask pairs, SonoSAM demonstrates state-of-the-art performance on $8$ unseen ultrasound datasets, outperforming competing methods by a significant margin on all metrics of interest. SonoSAM achieves an average Dice similarity score of $>90\%$ on almost all test datasets within 2-6 clicks on average, making it a valuable tool for annotating ultrasound images. We also extend SonoSAM to 3D (2D+t) applications and demonstrate superior performance, making it a valuable tool for generating dense annotations from ultrasound cine-loops. Further, to increase the practical utility of SonoSAM, we propose a two-step process of fine-tuning followed by knowledge distillation to a smaller-footprint model without compromising performance. We present detailed qualitative and quantitative comparisons of SonoSAM with state-of-the-art methods, showcasing the efficacy of SonoSAM as one of the first reliable, generic foundational models for ultrasound.
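The Dice similarity score quoted in the abstract is the standard overlap measure $2|A \cap B| / (|A| + |B|)$ between a predicted mask $A$ and a reference mask $B$. The snippet below is a minimal NumPy sketch of that definition only; the function name `dice_score` and the convention of returning 1.0 when both masks are empty are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def dice_score(pred, target):
    """Dice similarity between two binary masks (hypothetical helper)."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    intersection = np.logical_and(pred, target).sum()
    total = pred.sum() + target.sum()
    if total == 0:
        # Assumed convention: two empty masks count as a perfect match.
        return 1.0
    return 2.0 * intersection / total

# Toy 2x3 masks: 3 predicted pixels, 3 reference pixels, 2 in common.
pred = [[1, 1, 0], [0, 1, 0]]
target = [[1, 0, 0], [0, 1, 1]]
score = dice_score(pred, target)  # 2 * 2 / (3 + 3)
```

A score above 0.9, as reported in the abstract, means the predicted and reference masks overlap almost completely.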

Towards Continuous Domain adaptation for Healthcare

Dec 04, 2018

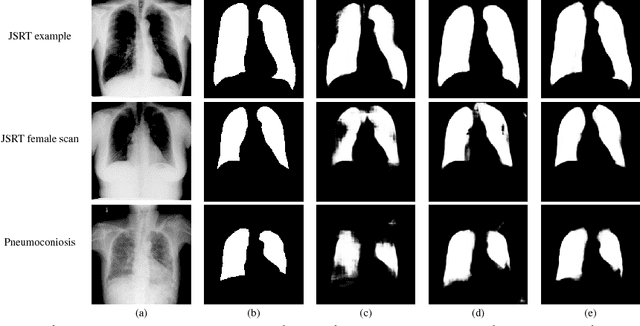

Abstract: Deep learning algorithms have demonstrated tremendous success on challenging medical imaging problems. However, post-deployment, these algorithms are susceptible to data distribution variations owing to *limited data issues* and *diversity* in medical images. In this paper, we propose *ContextNets*, a generic memory-augmented neural network framework for semantic segmentation that achieves continuous domain adaptation without the need for retraining. Unlike existing methods, which require access to entire source and target domain images, our algorithm can adapt to a target domain with a few similar images. We condition the inference on any new input with features computed on its support set of images (and masks, if available) through contextual embeddings to achieve site-specific adaptation. We demonstrate state-of-the-art domain adaptation performance on the X-ray lung segmentation problem from three independent cohorts that differ in disease type, gender, contrast and intensity variations.

Understanding the Mechanisms of Deep Transfer Learning for Medical Images

Apr 20, 2017

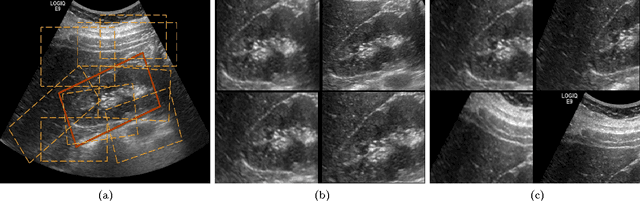

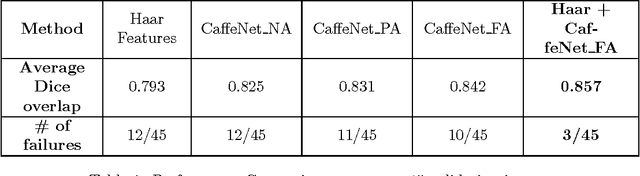

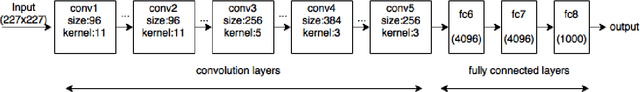

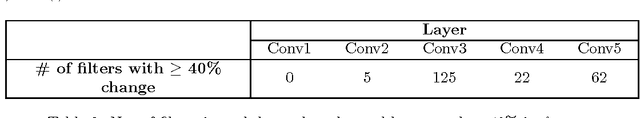

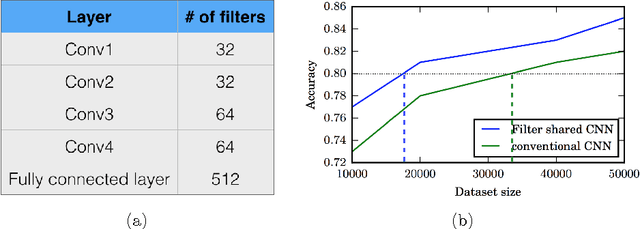

Abstract: The ability to automatically learn task-specific feature representations has led to the huge success of deep learning methods. When large training datasets are scarce, as in medical imaging problems, transfer learning has been very effective. In this paper, we systematically investigate the process of transferring a Convolutional Neural Network, trained on ImageNet images to perform image classification, to the kidney detection problem in ultrasound images. We study how the detection performance depends on the extent of transfer. We show that a transferred and tuned CNN can outperform a state-of-the-art feature-engineered pipeline, and that a hybridization of these two techniques achieves 20% higher performance. We also investigate the evolution of intermediate response images from our network. Finally, we compare these responses to state-of-the-art image processing filters in order to gain greater insight into how transfer learning is able to effectively manage widely varying imaging regimes.

Filter sharing: Efficient learning of parameters for volumetric convolutions

Dec 08, 2016

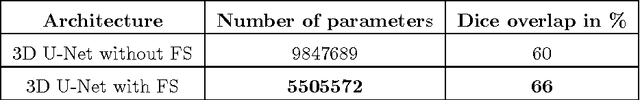

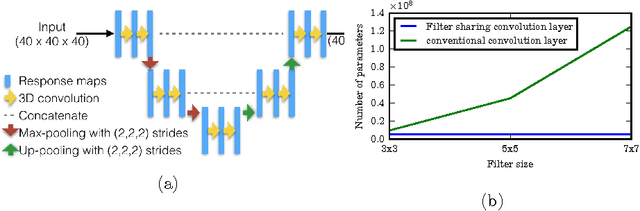

Abstract: Typical convolutional neural networks (CNNs) have several million parameters and require a large amount of annotated data to train. In medical applications, where training data is hard to come by, these sophisticated machine learning models are difficult to train. In this paper, we propose a method to reduce the inherent complexity of CNNs during training by exploiting the significant redundancy observed in the learnt CNN filters. Our method relies on finding a small set of filters and mixing coefficients from which every filter in each convolutional layer is derived at training time itself, thereby reducing the number of parameters to be trained. We consider the problem of 3D lung nodule segmentation in CT images and demonstrate the effectiveness of our method in achieving good results with only a few training examples.
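One way to read the idea of deriving every filter from a small set of filters and mixing coefficients is as a linear combination of shared basis filters. The NumPy sketch below illustrates that reading and the resulting parameter count for a single 3D convolutional layer. The layer sizes, the variable names (`basis`, `mix`), and the purely linear mixing are assumptions made for illustration; the abstract does not specify the exact formulation used in the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical layer sizes, chosen only for illustration.
num_filters = 64   # filters the layer exposes
num_basis = 8      # small shared set of basis filters
in_channels = 16
k = 3              # 3x3x3 volumetric kernels

# Trainable quantities under the assumed scheme: basis filters and mixing weights.
basis = rng.standard_normal((num_basis, in_channels, k, k, k))
mix = rng.standard_normal((num_filters, num_basis))

# Each full filter is a weighted sum of the basis filters.
filters = np.einsum("fb,bcdhw->fcdhw", mix, basis)

# Parameters to train: full layer versus shared basis plus coefficients.
full_params = num_filters * in_channels * k**3   # 64 * 16 * 27
shared_params = basis.size + mix.size            # 8 * 16 * 27 + 64 * 8
```

With these assumed sizes the layer still produces 64 filters, but only the basis and the mixing matrix are trained, which is roughly a sevenfold reduction in parameters.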
