Pietro Baroni

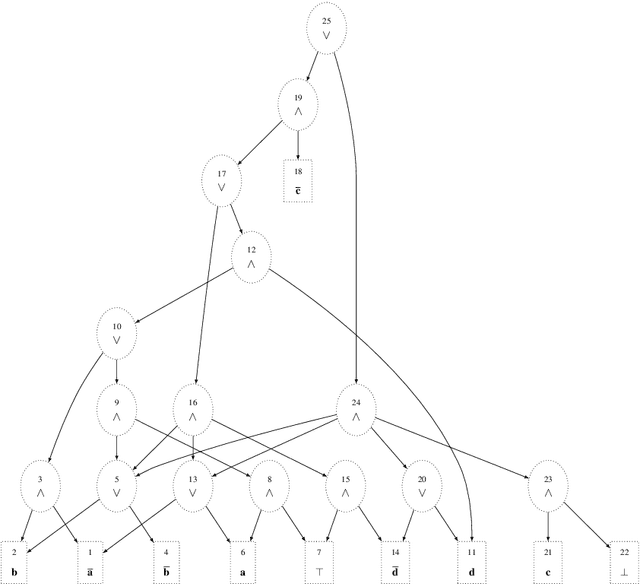

Research Note on Uncertain Probabilities and Abstract Argumentation

Aug 23, 2022

Abstract: The sixth assessment of the Intergovernmental Panel on Climate Change (IPCC) states that "cumulative net CO2 emissions over the last decade (2010-2019) are about the same size as the remaining carbon budget likely to limit warming to 1.5°C (medium confidence)." Such reports directly feed the public discourse, but nuances such as the degree of belief and of confidence are often lost. In this paper, we propose a formal account for allowing such degrees of belief and the associated confidence to be used to label arguments in abstract argumentation settings. Unlike other proposals in probabilistic argumentation, we focus on the task of probabilistic inference over a chosen query, building upon Sato's distribution semantics, which has already been shown to encompass a variety of cases including the semantics of Bayesian networks. Borrowing from the vast literature on such semantics, we examine how such tasks can be dealt with in practice when considering uncertain probabilities, and discuss the connections with existing proposals for probabilistic argumentation.

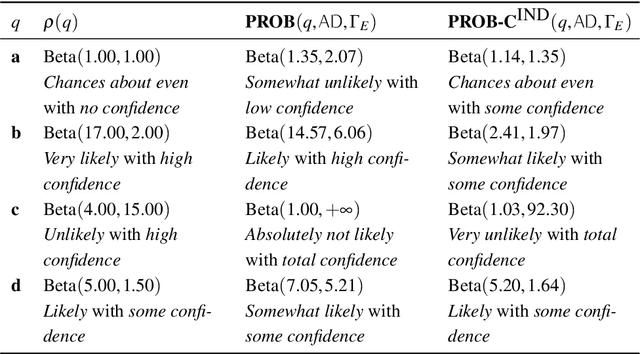

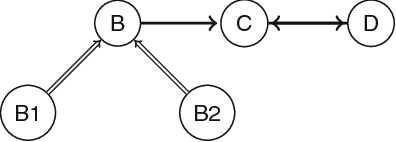

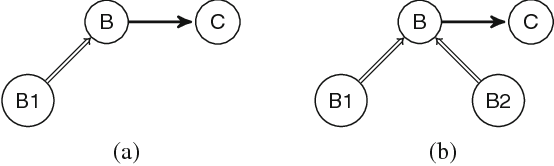

Explaining Causal Models with Argumentation: the Case of Bi-variate Reinforcement

May 23, 2022

Abstract: Causal models are playing an increasingly important role in machine learning, particularly in the realm of explainable AI. We introduce a conceptualisation for generating argumentation frameworks (AFs) from causal models for the purpose of forging explanations for the models' outputs. The conceptualisation is based on reinterpreting desirable properties of semantics of AFs as explanation moulds, which are means for characterising the relations in the causal model argumentatively. We demonstrate our methodology by reinterpreting the property of bi-variate reinforcement as an explanation mould to forge bipolar AFs as explanations for the outputs of causal models. We perform a theoretical evaluation of these argumentative explanations, examining whether they satisfy a range of desirable explanatory and argumentative properties.

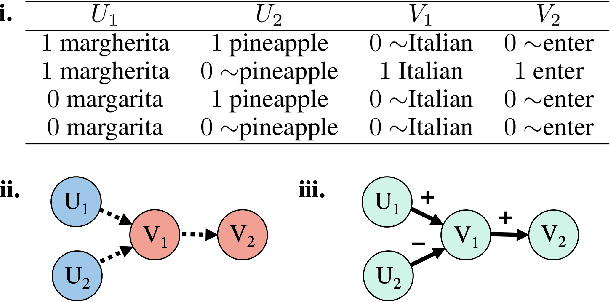

Argumentative XAI: A Survey

May 24, 2021

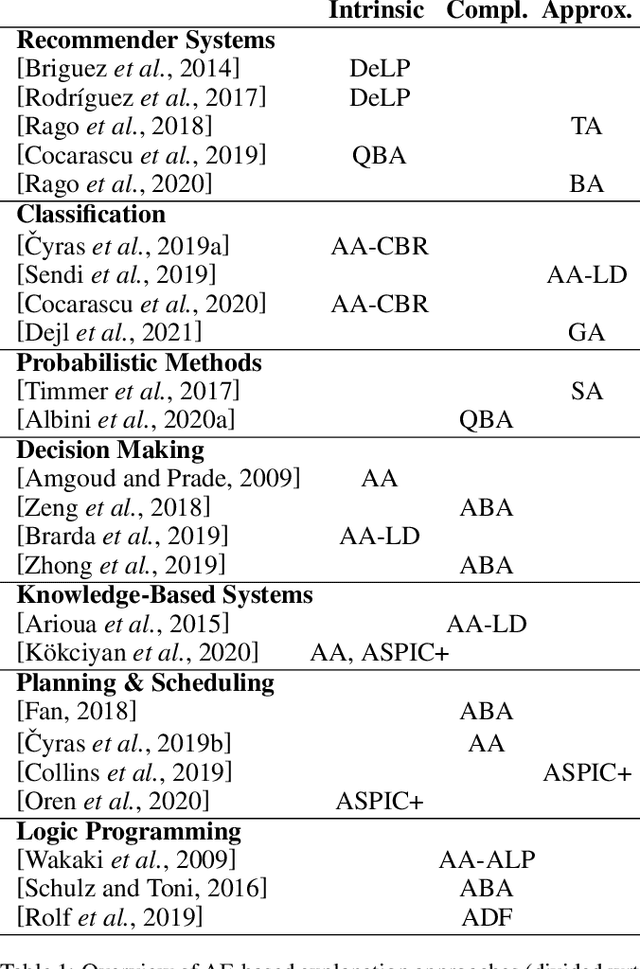

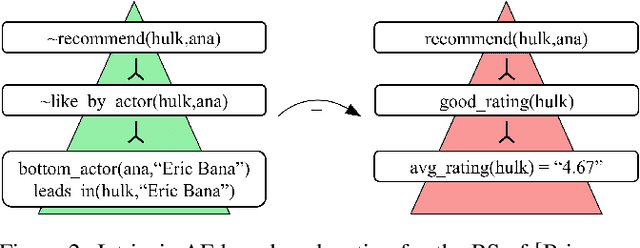

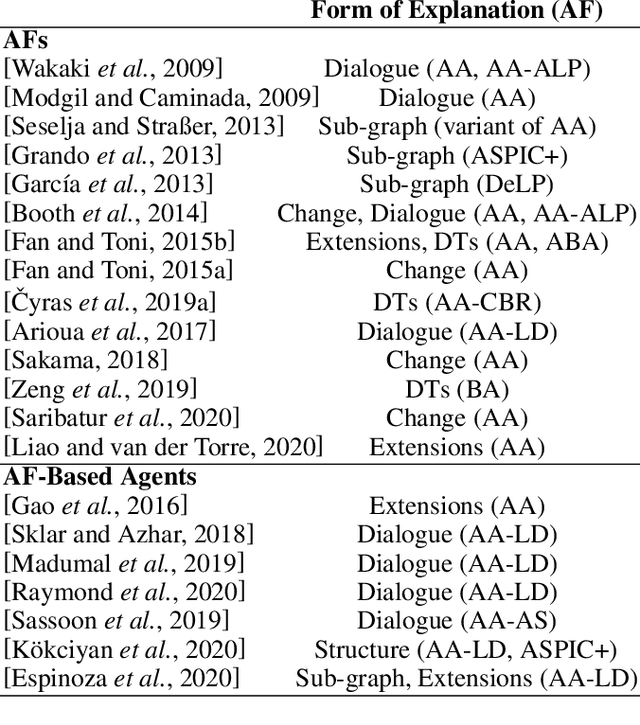

Abstract: Explainable AI (XAI) has been investigated for decades and, together with AI itself, has witnessed unprecedented growth in recent years. Among various approaches to XAI, argumentative models have been advocated in both the AI and social science literature, as their dialectical nature appears to match some basic desirable features of the explanation activity. In this survey we overview XAI approaches built using methods from the field of computational argumentation, leveraging its wide array of reasoning abstractions and explanation delivery methods. We overview the literature focusing on different types of explanation (intrinsic and post-hoc), different models with which argumentation-based explanations are deployed, different forms of delivery, and different argumentation frameworks they use. We also lay out a roadmap for future work.

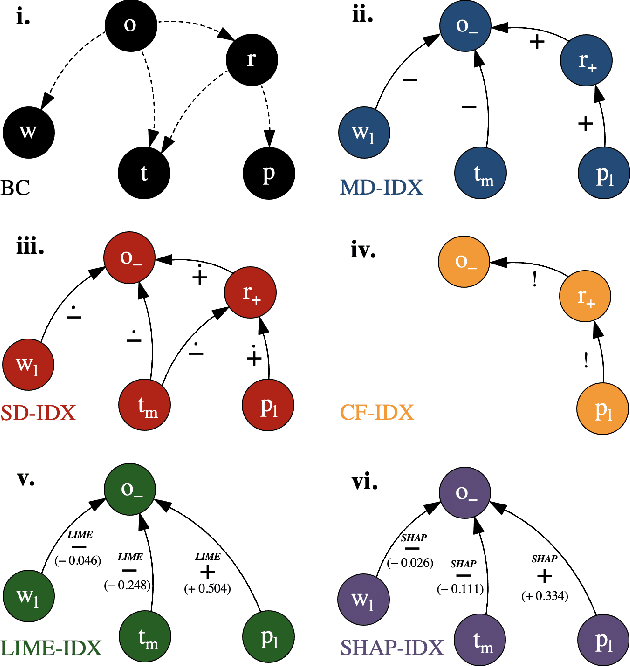

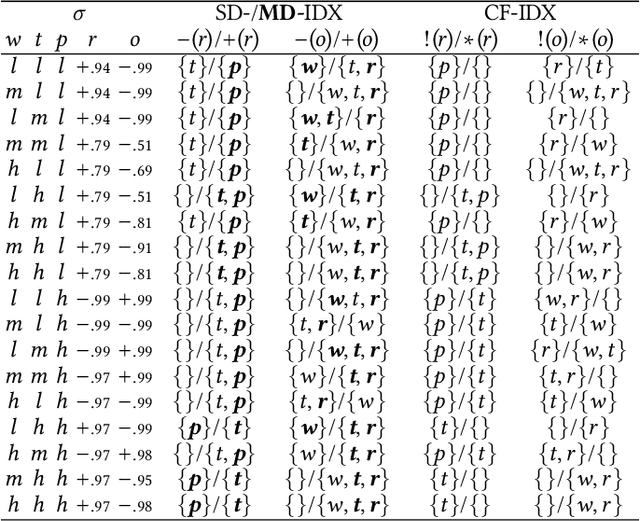

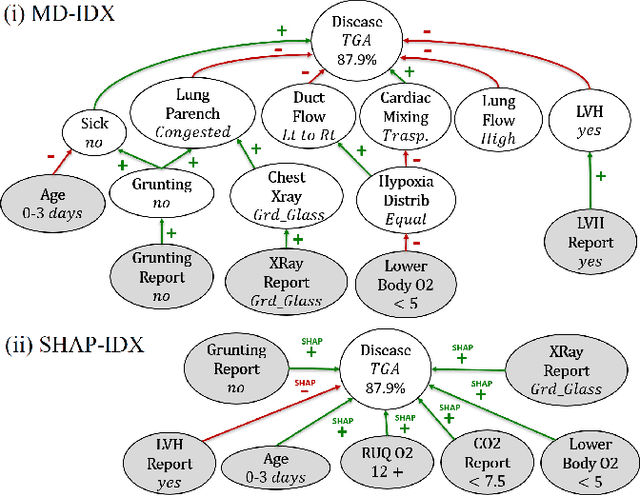

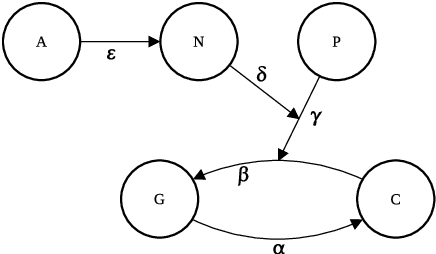

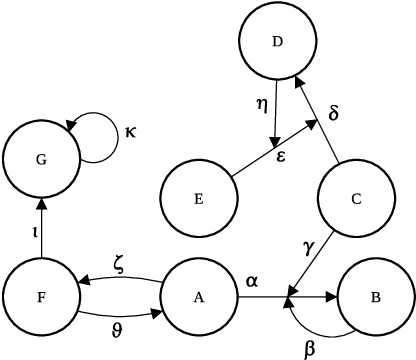

Influence-Driven Explanations for Bayesian Network Classifiers

Dec 10, 2020

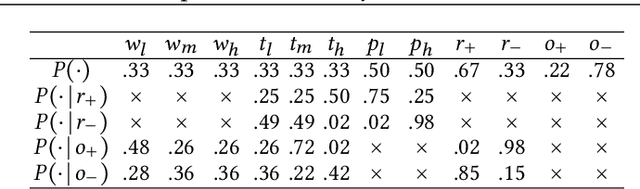

Abstract: One of the most pressing issues in AI in recent years has been the need to address the lack of explainability of many of its models. We focus on explanations for discrete Bayesian network classifiers (BCs), targeting greater transparency of their inner workings by including intermediate variables in explanations, rather than just the input and output variables as is standard practice. The proposed influence-driven explanations (IDXs) for BCs are systematically generated using the causal relationships between variables within the BC, called influences, which are then categorised by logical requirements, called relation properties, according to their behaviour. These relation properties both provide guarantees beyond heuristic explanation methods and allow the information underpinning an explanation to be tailored to a particular context's and user's requirements, e.g., IDXs may be dialectical or counterfactual. We demonstrate IDXs' capability to explain various forms of BCs, e.g., naive or multi-label, binary or categorical, and also integrate recent approaches to explanations for BCs from the literature. We evaluate IDXs with theoretical and empirical analyses, demonstrating their considerable advantages when compared with existing explanation methods.

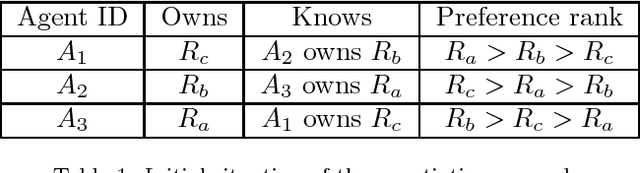

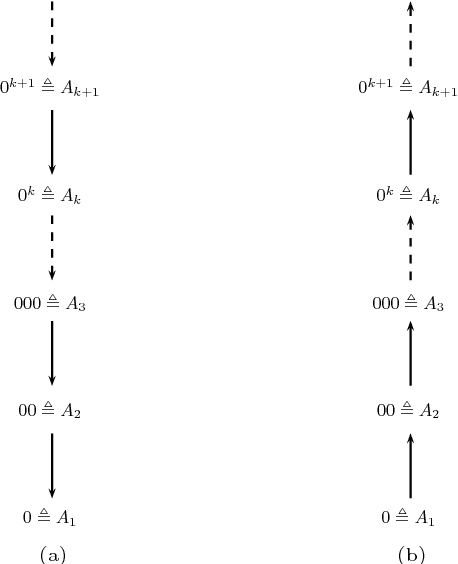

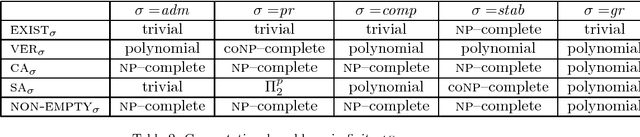

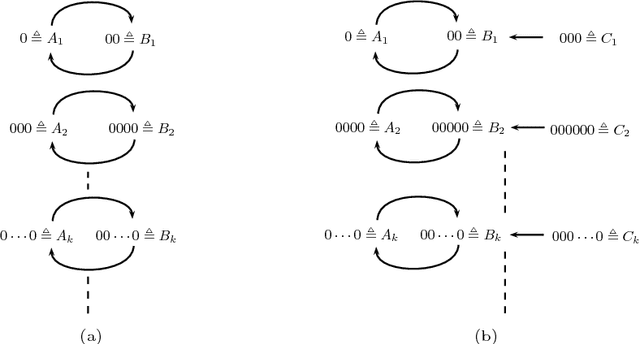

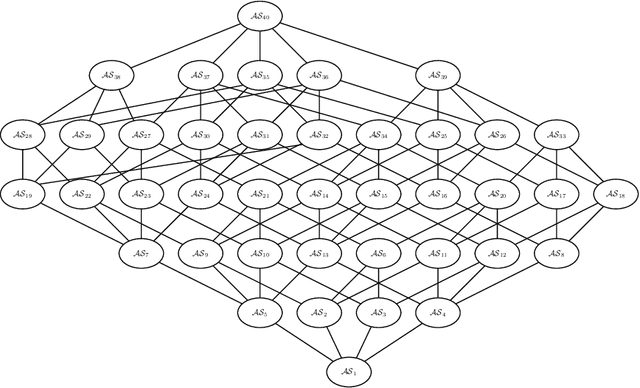

Automata for Infinite Argumentation Structures

Oct 11, 2018

Abstract: The theory of abstract argumentation frameworks (AFs) has, in the main, focused on finite structures, though there are many significant contexts where argumentation can be regarded as a process involving infinite objects. To address this limitation, in this paper we propose a novel approach for describing infinite AFs using tools from formal language theory. In particular, the possibly infinite set of arguments is specified through the language recognized by a deterministic finite automaton, while a suitable formalism, called attack expression, is introduced to describe the relation of attack between arguments. The proposed approach is shown to satisfy some desirable properties which cannot be achieved through other "naive" uses of formal languages. In particular, the approach is shown to be expressive enough to capture (besides any arbitrary finite structure) a large variety of infinite AFs, including two major examples from previous literature and two sample cases from the domains of multi-agent negotiation and ambient intelligence. On the computational side, we show that several decision and construction problems which are known to be polynomial time solvable in finite AFs are decidable in the context of the proposed formalism, and we provide the relevant algorithms. Moreover, we obtain additional results concerning the case of finitary AFs.

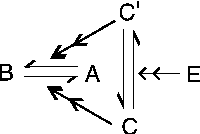

AFRA: Argumentation framework with recursive attacks

Oct 11, 2018

Abstract: The issue of representing attacks on attacks in argumentation is receiving increasing attention as a useful conceptual modelling tool in several contexts. In this paper we present AFRA, a formalism encompassing unlimited recursive attacks within argumentation frameworks. AFRA satisfies the basic requirements of definition simplicity and rigorous compatibility with Dung's theory of argumentation. This paper provides a complete development of the AFRA formalism complemented by illustrative examples and a detailed comparison with other recursive attack formalizations.

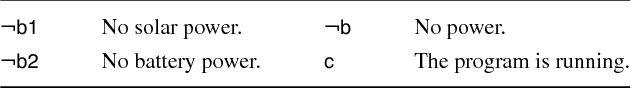

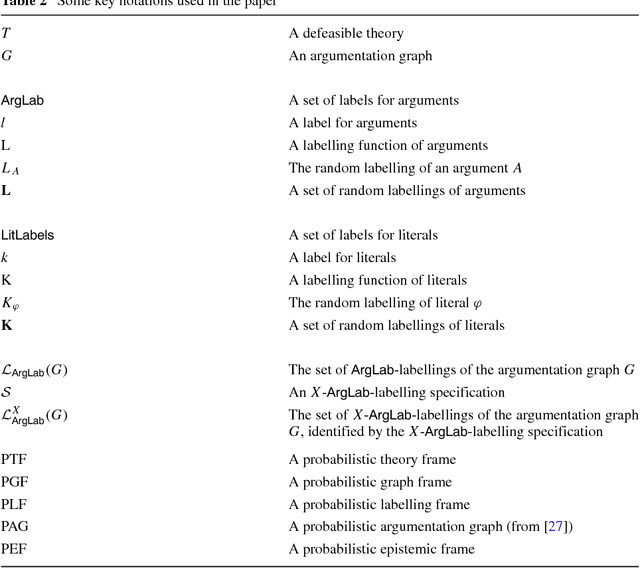

A Labelling Framework for Probabilistic Argumentation

Mar 09, 2018

Abstract: The combination of argumentation and probability paves the way to new accounts of qualitative and quantitative uncertainty, thereby offering new theoretical and applicative opportunities. Due to a variety of interests, probabilistic argumentation is approached in the literature with different frameworks, pertaining to structured and abstract argumentation, and with respect to diverse types of uncertainty, in particular the uncertainty on the credibility of the premises, the uncertainty about which arguments to consider, and the uncertainty on the acceptance status of arguments or statements. Towards a general framework for probabilistic argumentation, we investigate a labelling-oriented framework encompassing a basic setting for rule-based argumentation and its (semi-)abstract account, along with diverse types of uncertainty. Our framework provides a systematic treatment of various kinds of uncertainty and of their relationships and allows us to back or question assertions from the literature.
