Explaining Causal Models with Argumentation: the Case of Bi-variate Reinforcement

May 23, 2022

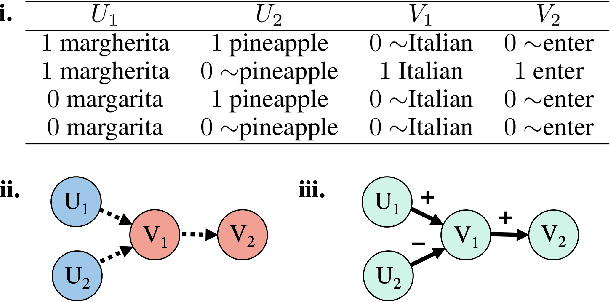

Causal models are playing an increasingly important role in machine learning, particularly in the realm of explainable AI. We introduce a conceptualisation for generating argumentation frameworks (AFs) from causal models for the purpose of forging explanations for the models' outputs. The conceptualisation is based on reinterpreting desirable properties of semantics of AFs as explanation moulds, which are means for characterising the relations in the causal model argumentatively. We demonstrate our methodology by reinterpreting the property of bi-variate reinforcement as an explanation mould to forge bipolar AFs as explanations for the outputs of causal models. We perform a theoretical evaluation of these argumentative explanations, examining whether they satisfy a range of desirable explanatory and argumentative properties.
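To make the idea of an explanation mould more concrete, the following is a minimal sketch of how a reinforcement-style check might label influences in a toy causal model as supports or attacks. It is an illustrative simplification, not the paper's formal definition: the binary variables, the function `bipolar_relations`, the toy model, and the labels `"support"`, `"attack"`, and `"neither"` are all assumptions made for this example. A variable is labelled a supporter if raising it (with all other variables held at their current values) raises the output, and an attacker if raising it lowers the output.

```python
from typing import Callable, Dict

# Hypothetical representation: an assignment maps variable names to 0 or 1.
Assignment = Dict[str, int]


def bipolar_relations(
    model: Callable[[Assignment], int], assignment: Assignment
) -> Dict[str, str]:
    """Label each input variable by how it influences the model's output.

    Assumed simplification: for each variable, compare the output with the
    variable set to 0 and to 1 while every other variable keeps its current
    value in `assignment`.
    """
    relations: Dict[str, str] = {}
    for var in assignment:
        low = model({**assignment, var: 0})   # output with var switched off
        high = model({**assignment, var: 1})  # output with var switched on
        if high > low:
            relations[var] = "support"   # raising var raises the output
        elif high < low:
            relations[var] = "attack"    # raising var lowers the output
        else:
            relations[var] = "neither"   # no effect in this context
    return relations


def toy_model(v: Assignment) -> int:
    """Toy structural equation (an assumption): output = (a OR b) AND NOT c."""
    return int((v["a"] or v["b"]) and not v["c"])


context = {"a": 1, "b": 0, "c": 0}
relations = bipolar_relations(toy_model, context)
print(relations)
```

In this context, `a` is labelled a supporter of the output, `c` an attacker, and `b` neither, because `a` already makes the disjunction true. The labels depend on the current assignment, which is why the resulting bipolar framework is read as an explanation of one particular output rather than of the model as a whole.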
