Philipp Mayer

Integrated Systems Laboratory, ETH Zürich, Switzerland

RF Power Transmission for Self-sustaining Miniaturized IoT Devices

Jul 31, 2024

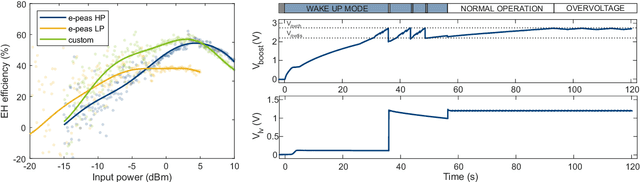

Abstract: Radio Frequency (RF) wireless power transfer is a promising technology that has the potential to continuously power small Internet of Things (IoT) devices, enabling even battery-less systems and reducing their maintenance requirements. However, to achieve this ambitious goal, carefully designed RF energy harvesting (EH) systems are needed to minimize conversion losses and maximize the conversion efficiency of the limited available power. For intelligent IoT sensors and devices, which often have non-constant power requirements, an additional power management stage with energy storage is needed to temporarily provide a higher power output than the power being harvested. This paper proposes an RF wireless power energy conversion system for miniaturized IoT devices composed of an impedance matching network, a rectifier, and power management with energy storage. The proposed sub-system has been experimentally validated and achieved an overall power conversion efficiency (PCE) of over 30 % for an input power of -10 dBm and a peak efficiency of 57 % at 3 dBm.
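To put the reported efficiencies in absolute terms, the sketch below converts the two input power levels from dBm to milliwatts and multiplies by the stated PCE. The helper names are illustrative, and the 30 % figure is used as a lower bound because the abstract states "over 30 %".

```python
def dbm_to_mw(p_dbm: float) -> float:
    """Convert a power level in dBm to milliwatts (0 dBm = 1 mW)."""
    return 10 ** (p_dbm / 10)


def harvested_uw(p_in_dbm: float, pce: float) -> float:
    """Usable output power in microwatts for a given input level and PCE."""
    return dbm_to_mw(p_in_dbm) * pce * 1000.0


# Operating points taken from the abstract.
low = harvested_uw(-10, 0.30)   # 0.1 mW input at (at least) 30 % PCE
peak = harvested_uw(3, 0.57)    # about 2 mW input at 57 % PCE

print(f"-10 dBm: about {low:.0f} uW, 3 dBm: about {peak:.0f} uW")
```

At -10 dBm only about 30 µW remain after conversion, which illustrates why an energy storage stage is needed for loads with higher peak demand.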

Ultra-Efficient On-Device Object Detection on AI-Integrated Smart Glasses with TinyissimoYOLO

Nov 03, 2023

Abstract: Smart glasses are rapidly gaining advanced functionality thanks to cutting-edge computing technologies, accelerated hardware architectures, and tiny AI algorithms. Integrating AI into smart glasses featuring a small form factor and limited battery capacity is still challenging when targeting full-day usage for a satisfactory user experience. This paper illustrates the design and implementation of tiny machine-learning algorithms exploiting novel low-power processors to enable prolonged continuous operation in smart glasses. We explore the energy and latency efficiency of smart glasses in the case of real-time object detection. To this end, we designed a smart glasses prototype as a research platform featuring two microcontrollers, including a novel milliwatt-power RISC-V parallel processor with a hardware accelerator for visual AI, and a Bluetooth low-power module for communication. The smart glasses integrate power cycling mechanisms, including image and audio sensing interfaces. Furthermore, we developed a family of novel tiny deep-learning models based on YOLO with sub-million parameters customized for microcontroller-based inference, dubbed TinyissimoYOLO v1.3, v5, and v8, aiming at benchmarking object detection with smart glasses for energy and latency. Evaluations on the prototype of the smart glasses demonstrate TinyissimoYOLO's 17 ms inference latency and 1.59 mJ energy consumption per inference while ensuring acceptable detection accuracy. Further evaluation reveals an end-to-end latency from image capture to the algorithm's prediction of 56 ms, or equivalently 18 fps, with a total power consumption of 62.9 mW, equivalent to 9.3 hours of continuous run time on a 154 mAh battery. These results outperform MCUNet (TinyNAS+TinyEngine), which runs a simpler task (image classification) at just 7.3 fps.
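The frame rate and run time quoted in the abstract follow from simple arithmetic, sketched below. The battery voltage is not given in the abstract; the 3.8 V nominal value used here is an assumption, chosen because it reproduces the stated 9.3 hours.

```python
def fps_from_latency(latency_ms: float) -> float:
    """Frames per second when each frame takes latency_ms end to end."""
    return 1000.0 / latency_ms


def runtime_hours(capacity_mah: float, voltage_v: float, power_mw: float) -> float:
    """Run time in hours: stored energy (mWh) divided by average power (mW)."""
    return capacity_mah * voltage_v / power_mw


ASSUMED_NOMINAL_VOLTAGE_V = 3.8  # assumption, not stated in the abstract

fps = fps_from_latency(56)                                       # 56 ms end to end
hours = runtime_hours(154, ASSUMED_NOMINAL_VOLTAGE_V, 62.9)      # 154 mAh, 62.9 mW

print(f"{fps:.1f} fps, {hours:.1f} h")
```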

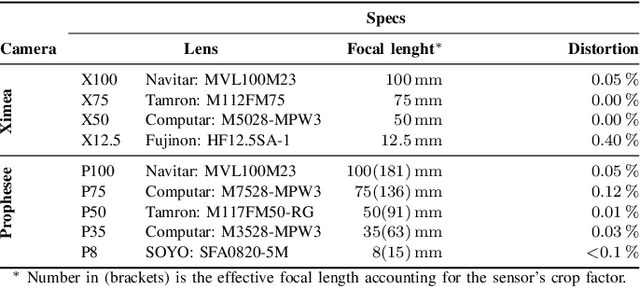

Quantitative Evaluation of a Multi-Modal Camera Setup for Fusing Event Data with RGB Images

Nov 03, 2023

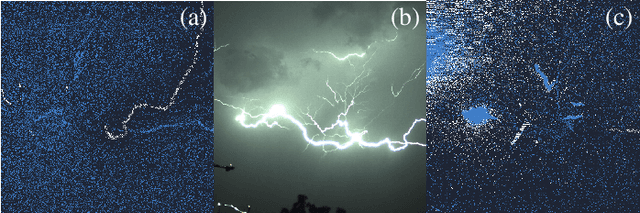

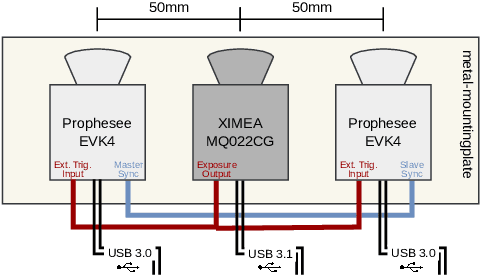

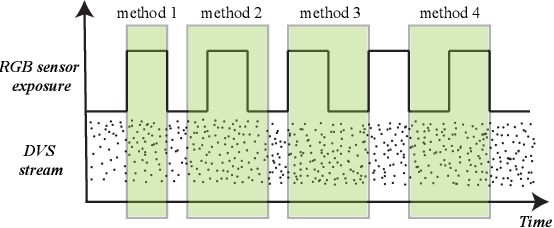

Abstract: Event-based cameras, also called silicon retinas, could revolutionize computer vision by detecting and reporting significant changes in intensity as asynchronous events, offering extended dynamic range, low latency, and low power consumption, and enabling a wide range of applications from autonomous driving to long-term surveillance. As this is an emerging technology, there is a notable scarcity of publicly available datasets for event-based systems that also feature frame-based cameras, which are needed to exploit the benefits of both technologies. This work quantitatively evaluates a multi-modal camera setup for fusing high-resolution DVS data with RGB image data by static camera alignment. The proposed setup, which is intended for semi-automatic DVS data labeling, combines two recently released Prophesee EVK4 DVS cameras and one global shutter XIMEA MQ022CG-CM RGB camera. After alignment, state-of-the-art object detection or segmentation networks label the image data, and the resulting bounding boxes or labeled pixels are mapped directly to the aligned events. To facilitate this process, various time-based synchronization methods for DVS data are analyzed, and calibration accuracy, camera alignment, and lens impact are evaluated. Experimental results demonstrate the benefits of the proposed system: the best synchronization method yields an image calibration error of less than 0.90 px and a pixel cross-correlation deviation of 1.6 px, while a lens with 8 mm focal length enables detection of objects of size 30 cm at a distance of 350 m against a homogeneous background.
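As a rough plausibility check of the long-range detection figure, the sketch below uses the thin-lens (pinhole) approximation to estimate how large a 30 cm object at 350 m appears on the sensor behind an 8 mm lens. The pixel pitch is not stated in the abstract; the 4.86 µm value is an assumption for illustration and should be replaced with the sensor's datasheet value.

```python
def projected_size_m(focal_length_m: float, object_size_m: float, distance_m: float) -> float:
    """Image-plane size of an object under the pinhole model (distance >> focal length)."""
    return focal_length_m * object_size_m / distance_m


ASSUMED_PIXEL_PITCH_M = 4.86e-6  # assumption, not taken from the abstract

size_m = projected_size_m(8e-3, 0.30, 350.0)   # values from the abstract
size_um = size_m * 1e6
size_px = size_m / ASSUMED_PIXEL_PITCH_M

print(f"{size_um:.2f} um on the sensor, about {size_px:.1f} px")
```

Under these assumptions the object covers only one to two pixels, which is consistent with the abstract's qualification that detection was against a homogeneous background.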

Non-invasive urinary bladder volume estimation with artefact-suppressed bio-impedance measurements

Mar 24, 2023

Abstract: Urine output is a vital parameter to gauge kidney health. Current monitoring methods include manually written records, invasive urinary catheterization, or ultrasound measurements performed by highly skilled personnel. Catheterization bears high risks of infection, while intermittent ultrasound measurements and manual recording are time consuming and might miss early signs of kidney malfunction. Bioimpedance (BI) measurements may serve as a non-invasive alternative for measuring urine volume in vivo. However, limited robustness has prevented their clinical translation. Here, a deep learning-based algorithm is presented that processes the local BI of the lower abdomen and suppresses artefacts to measure the bladder volume quantitatively, non-invasively, and without the continuous need for additional personnel. A tetrapolar BI wearable system called ANUVIS was used to collect continuous bladder volume data from three healthy subjects to demonstrate feasibility of operation, while clinical gold standards of urodynamic (n=6) and uroflowmetry tests (n=8) provided the ground truth. An optimized location for electrode placement and a model for the change in BI with changing bladder volume are deduced. The average error for full bladder volume estimation and for residual volume estimation was -29 +/- 87.6 ml, and is thus comparable to commercial portable ultrasound devices (Bland-Altman analysis showed a bias of -5.2 ml with limits of agreement (LoA) between 119.7 ml and -130.1 ml), while providing the additional benefit of hands-free, non-invasive, and continuous bladder volume estimation. The combination of the wearable BI sensor node and the presented algorithm provides an attractive alternative to the current standard of care, with potential benefits in providing insights into kidney function.
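The Bland-Altman figures above can be read as a bias plus symmetric limits of agreement: 119.7 ml and -130.1 ml are centered on -5.2 ml with a half-width of 124.9 ml. A minimal sketch of the standard computation (bias ± 1.96 standard deviations of the paired differences) follows. The sample volumes are made up for illustration and are not data from the study.

```python
from statistics import mean, stdev


def bland_altman(estimates, references):
    """Return (bias, lower LoA, upper LoA) for paired measurements."""
    diffs = [e - r for e, r in zip(estimates, references)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)  # sample standard deviation
    return bias, bias - half_width, bias + half_width


# Hypothetical bladder volumes in ml (illustrative only).
estimated = [210.0, 305.0, 150.0, 420.0]
reference = [200.0, 320.0, 160.0, 400.0]

bias, loa_low, loa_high = bland_altman(estimated, reference)
print(f"bias {bias:.2f} ml, LoA [{loa_low:.1f}, {loa_high:.1f}] ml")
```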

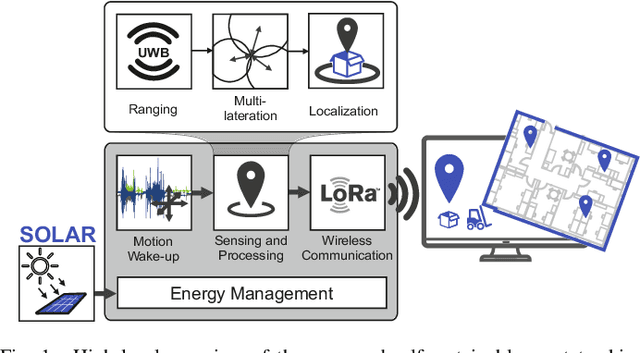

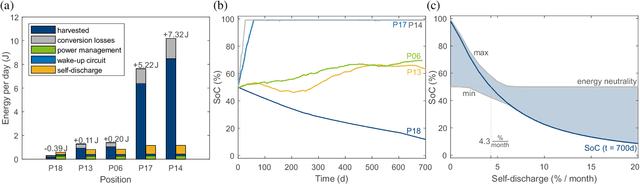

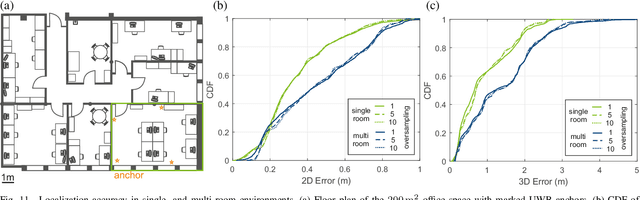

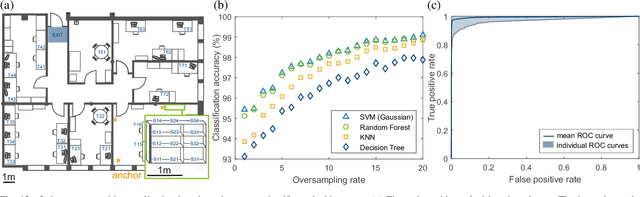

Self-sustaining Ultra-wideband Positioning System for Event-driven Indoor Localization

Dec 09, 2022

Abstract: Smart and unobtrusive mobile sensor nodes that accurately track their own position have the potential to augment data collection with location-based functions. To attain this vision of unobtrusiveness, the sensor nodes must have a compact form factor and operate over long periods without battery recharging or replacement. This paper presents a self-sustaining and accurate ultra-wideband-based indoor location system with conservative infrastructure overhead. An event-driven sensing approach allows for balancing the limited energy harvested in indoor conditions with the power consumption of ultra-wideband transceivers. The presented tag-centralized concept, which combines heterogeneous system design with embedded processing, minimizes idle consumption without sacrificing functionality. Despite modest infrastructure requirements, high localization accuracy is achieved with error-correcting double-sided two-way ranging and embedded optimal multilateration. Experimental results demonstrate the benefits of the proposed system: the node achieves a quiescent current of $47~nA$ and operates at $1.2~\mu A$ while performing energy harvesting and motion detection. The energy consumption for position updates, with an accuracy of $40~cm$ (2D) in realistic non-line-of-sight conditions, is $10.84~mJ$. In an asset tracking case study within a $200~m^2$ multi-room office space, the achieved accuracy level allows for identifying 36 different desk and storage locations with an accuracy of over $95~{\%}$. The system's long-term self-sustainability has been analyzed over $700~days$ in multiple indoor lighting situations.
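The abstract does not spell out its ranging equations, so the following is only a sketch of the commonly used asymmetric double-sided two-way ranging (DS-TWR) formula, which estimates time of flight from two round-trip and two reply intervals and tolerates unequal reply delays. The function and variable names are illustrative, and the timing values are simulated rather than measured.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def ds_twr_tof(t_round1: float, t_reply1: float, t_round2: float, t_reply2: float) -> float:
    """Time of flight (s) from asymmetric DS-TWR interval measurements."""
    numerator = t_round1 * t_round2 - t_reply1 * t_reply2
    denominator = t_round1 + t_round2 + t_reply1 + t_reply2
    return numerator / denominator


# Simulate an ideal exchange over 10 m with deliberately unequal reply delays.
true_tof = 10.0 / SPEED_OF_LIGHT_M_S
reply_a, reply_b = 300e-6, 400e-6      # reply delays at each device, seconds
round_a = 2 * true_tof + reply_b       # initiator waits for the responder's reply
round_b = 2 * true_tof + reply_a       # responder waits for the final message

tof = ds_twr_tof(round_a, reply_b, round_b, reply_a)
distance_m = tof * SPEED_OF_LIGHT_M_S
print(f"estimated distance: {distance_m:.3f} m")
```

Distances obtained this way to several anchors at known positions are the inputs to a multilateration step, which solves for the tag position.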

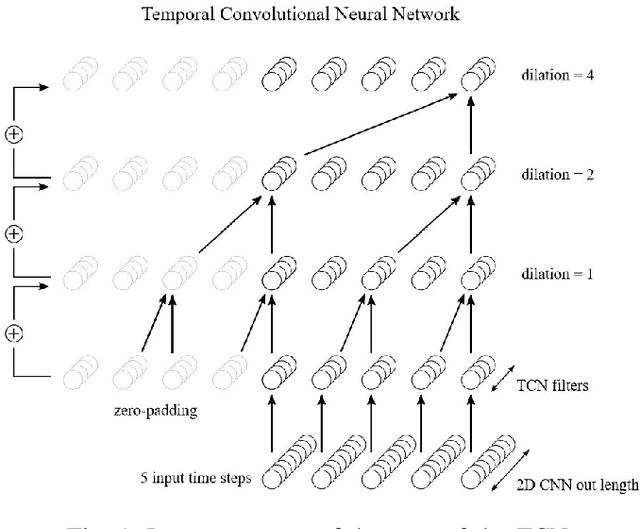

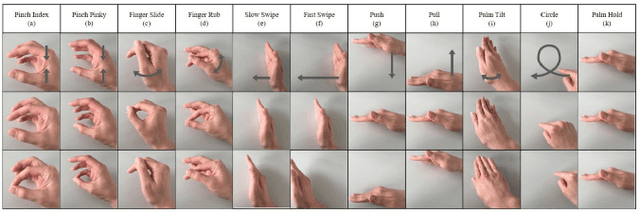

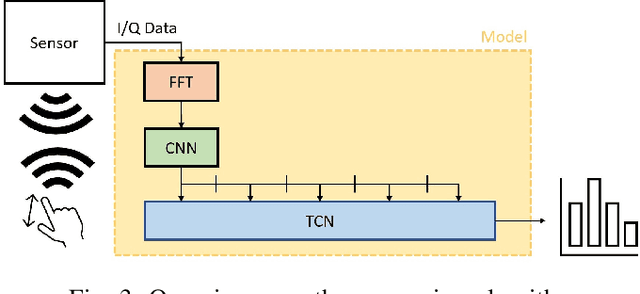

TinyRadarNN: Combining Spatial and Temporal Convolutional Neural Networks for Embedded Gesture Recognition with Short Range Radars

Jun 25, 2020

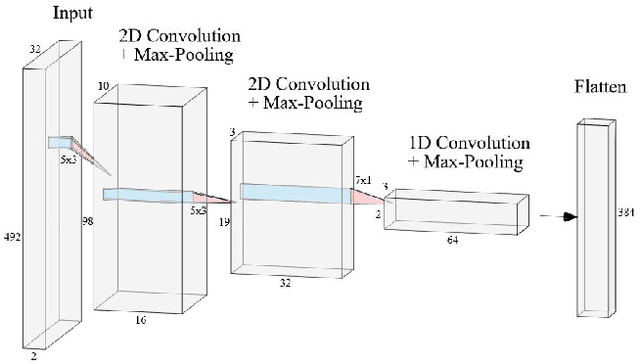

Abstract: This work proposes a low-power, high-accuracy embedded hand-gesture recognition algorithm targeting battery-operated wearable devices using low-power short-range RADAR sensors. A 2D Convolutional Neural Network (CNN) using range frequency Doppler features is combined with a Temporal Convolutional Neural Network (TCN) for time sequence prediction. The final algorithm has a model size of only 46 thousand parameters, yielding a memory footprint of only 92 KB. Two datasets containing 11 challenging hand gestures performed by 26 different people have been recorded, containing a total of 20,210 gesture instances. On the 11-gesture dataset, accuracies of 86.6% (26 users) and 92.4% (single user) have been achieved, which are comparable to the state of the art, which achieves 87% (10 users) and 94% (single user), while using a TCN-based network that is 7500x smaller than the state of the art. Furthermore, the gesture recognition classifier has been implemented on a Parallel Ultra-Low Power processor, demonstrating that real-time prediction is feasible with only 21 mW of power consumption for the full TCN sequence prediction network.
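The two model-size figures are consistent with two bytes per parameter. The sketch below shows that arithmetic; the 16-bit parameter width is an inference from the reported numbers, not something the abstract states.

```python
def footprint_kb(num_parameters: int, bytes_per_parameter: int) -> float:
    """Parameter memory in kilobytes (1 KB taken as 1000 bytes)."""
    return num_parameters * bytes_per_parameter / 1000.0


ASSUMED_BYTES_PER_PARAMETER = 2  # assumption: 16-bit weights

size_kb = footprint_kb(46_000, ASSUMED_BYTES_PER_PARAMETER)
print(f"{size_kb:.0f} KB")
```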
