Peyman Sheikholharam Mashhadi

Volvo Discovery Challenge at ECML-PKDD 2024

Sep 17, 2024

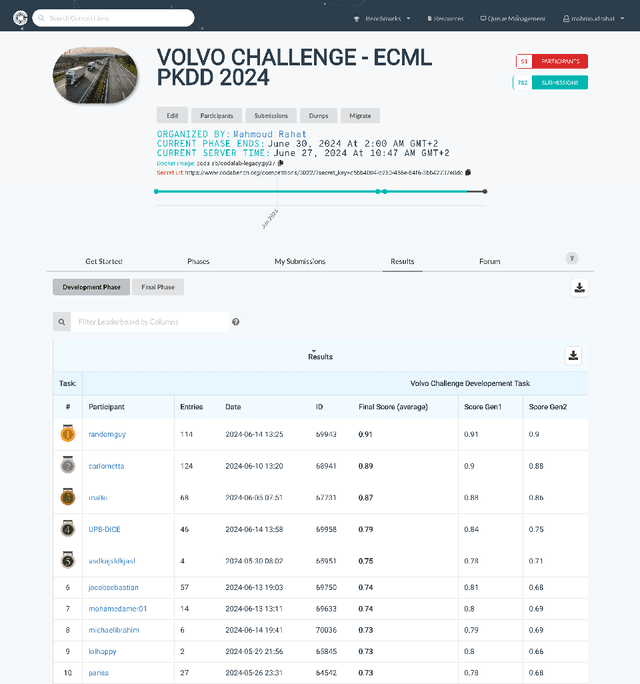

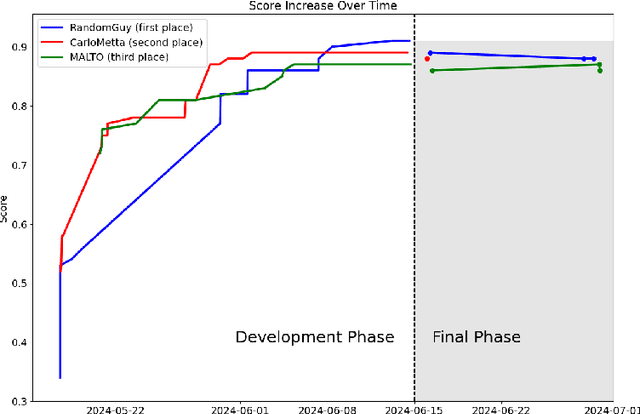

Abstract:This paper presents an overview of the Volvo Discovery Challenge, held during the ECML-PKDD 2024 conference. The challenge's goal was to predict the failure risk of an anonymized component in Volvo trucks using a newly published dataset. The test data included observations from two generations (gen1 and gen2) of the component, while the training data was provided only for gen1. The challenge attracted 52 data scientists from around the world who submitted a total of 791 entries. We provide a brief description of the problem definition, challenge setup, and statistics about the submissions. In the section on winning methodologies, the first, second, and third-place winners of the competition briefly describe their proposed methods and provide GitHub links to their implemented code. The shared code can be interesting as an advanced methodology for researchers in the predictive maintenance domain. The competition was hosted on the Codabench platform.

Expected Grad-CAM: Towards gradient faithfulness

Jun 03, 2024Abstract:Although input-gradients techniques have evolved to mitigate and tackle the challenges associated with gradients, modern gradient-weighted CAM approaches still rely on vanilla gradients, which are inherently susceptible to the saturation phenomena. Despite recent enhancements have incorporated counterfactual gradient strategies as a mitigating measure, these local explanation techniques still exhibit a lack of sensitivity to their baseline parameter. Our work proposes a gradient-weighted CAM augmentation that tackles both the saturation and sensitivity problem by reshaping the gradient computation, incorporating two well-established and provably approaches: Expected Gradients and kernel smoothing. By revisiting the original formulation as the smoothed expectation of the perturbed integrated gradients, one can concurrently construct more faithful, localized and robust explanations which minimize infidelity. Through fine modulation of the perturbation distribution it is possible to regulate the complexity characteristic of the explanation, selectively discriminating stable features. Our technique, Expected Grad-CAM, differently from recent works, exclusively optimizes the gradient computation, purposefully designed as an enhanced substitute of the foundational Grad-CAM algorithm and any method built therefrom. Quantitative and qualitative evaluations have been conducted to assess the effectiveness of our method.

Fast Genetic Algorithm for feature selection -- A qualitative approximation approach

Apr 05, 2024Abstract:Evolutionary Algorithms (EAs) are often challenging to apply in real-world settings since evolutionary computations involve a large number of evaluations of a typically expensive fitness function. For example, an evaluation could involve training a new machine learning model. An approximation (also known as meta-model or a surrogate) of the true function can be used in such applications to alleviate the computation cost. In this paper, we propose a two-stage surrogate-assisted evolutionary approach to address the computational issues arising from using Genetic Algorithm (GA) for feature selection in a wrapper setting for large datasets. We define 'Approximation Usefulness' to capture the necessary conditions to ensure correctness of the EA computations when an approximation is used. Based on this definition, we propose a procedure to construct a lightweight qualitative meta-model by the active selection of data instances. We then use a meta-model to carry out the feature selection task. We apply this procedure to the GA-based algorithm CHC (Cross generational elitist selection, Heterogeneous recombination and Cataclysmic mutation) to create a Qualitative approXimations variant, CHCQX. We show that CHCQX converges faster to feature subset solutions of significantly higher accuracy (as compared to CHC), particularly for large datasets with over 100K instances. We also demonstrate the applicability of the thinking behind our approach more broadly to Swarm Intelligence (SI), another branch of the Evolutionary Computation (EC) paradigm with results of PSOQX, a qualitative approximation adaptation of the Particle Swarm Optimization (PSO) method. A GitHub repository with the complete implementation is available.

Rolling the dice for better deep learning performance: A study of randomness techniques in deep neural networks

Apr 05, 2024Abstract:This paper investigates how various randomization techniques impact Deep Neural Networks (DNNs). Randomization, like weight noise and dropout, aids in reducing overfitting and enhancing generalization, but their interactions are poorly understood. The study categorizes randomness techniques into four types and proposes new methods: adding noise to the loss function and random masking of gradient updates. Using Particle Swarm Optimizer (PSO) for hyperparameter optimization, it explores optimal configurations across MNIST, FASHION-MNIST, CIFAR10, and CIFAR100 datasets. Over 30,000 configurations are evaluated, revealing data augmentation and weight initialization randomness as main performance contributors. Correlation analysis shows different optimizers prefer distinct randomization types. The complete implementation and dataset are available on GitHub.

Learning Causal Mechanisms through Orthogonal Neural Networks

Jun 05, 2023Abstract:A fundamental feature of human intelligence is the ability to infer high-level abstractions from low-level sensory data. An essential component of such inference is the ability to discover modularized generative mechanisms. Despite many efforts to use statistical learning and pattern recognition for finding disentangled factors, arguably human intelligence remains unmatched in this area. In this paper, we investigate a problem of learning, in a fully unsupervised manner, the inverse of a set of independent mechanisms from distorted data points. We postulate, and justify this claim with experimental results, that an important weakness of existing machine learning solutions lies in the insufficiency of cross-module diversification. Addressing this crucial discrepancy between human and machine intelligence is an important challenge for pattern recognition systems. To this end, our work proposes an unsupervised method that discovers and disentangles a set of independent mechanisms from unlabeled data, and learns how to invert them. A number of experts compete against each other for individual data points in an adversarial setting: one that best inverses the (unknown) generative mechanism is the winner. We demonstrate that introducing an orthogonalization layer into the expert architectures enforces additional diversity in the outputs, leading to significantly better separability. Moreover, we propose a procedure for relocating data points between experts to further prevent any one from claiming multiple mechanisms. We experimentally illustrate that these techniques allow discovery and modularization of much less pronounced transformations, in addition to considerably faster convergence.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge