Peter Jung

Implicit Hypothesis Testing and Divergence Preservation in Neural Network Representations

Jan 28, 2026

Abstract:We study the supervised training dynamics of neural classifiers through the lens of binary hypothesis testing. We model classification as a set of binary tests between class-conditional distributions of representations and empirically show that, along training trajectories, well-generalizing networks increasingly align with Neyman-Pearson optimal decision rules via monotonic improvements in KL divergence that relate to error rate exponents. We finally discuss how this yields an explanation and possible training or regularization strategies for different classes of neural networks.

A Deep Unfolding-Based Scalarization Approach for Power Control in D2D Networks

Oct 21, 2024

Abstract:Optimizing network utility in device-to-device networks is typically formulated as a non-convex optimization problem. This paper addresses the scenario where the optimization variables are from a bounded but continuous set, allowing each device to perform power control. The power at each link is optimized to maximize a desired network utility. Specifically, we consider the weighted-sum-rate. The state-of-the-art benchmark for this problem is fractional programming with quadratic transform, known as FPLinQ. We propose a scalarization approach to transform the weighted-sum-rate, developing an iterative algorithm that depends on step sizes, a reference, and a direction vector. By employing the deep unfolding approach, we optimize these parameters by presenting the iterative algorithm as a finite sequence of steps, enabling it to be trained as a deep neural network. Numerical experiments demonstrate that the unfolded algorithm performs comparably to the benchmark in most cases while exhibiting lower complexity. Furthermore, the unfolded algorithm shows strong generalizability in terms of varying the number of users, the signal-to-noise ratio, and arbitrary weights. The weighted-sum-rate maximizer can be integrated into a low-complexity fairness scheduler, updating priority weights via virtual queues and Lyapunov drift-plus-penalty. This is demonstrated through experiments using proportional and max-min fairness.

Performance of Slotted ALOHA in User-Centric Cell-Free Massive MIMO

May 28, 2024

Abstract:To efficiently utilize the scarce wireless resource, random access schemes have attracted renewed interest, primarily for supporting the sporadic traffic of the large number of devices encountered in the Internet of Things (IoT). In this paper, we investigate the performance of slotted ALOHA -- a simple and practical random access scheme -- in connection with the grant-free random access protocol applied to user-centric cell-free massive MIMO. More specifically, we provide an expression for the sum-throughput under the assumption that the centralized detector in the uplink has capture capability. Further, a comparative study of user-centric cell-free massive MIMO with other types of networks is provided, which allows us to identify its potential and possible limitations. Our numerical simulations show that user-centric cell-free massive MIMO offers a good trade-off between performance and fronthaul load, especially in the low-activation-probability regime.
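
As a point of reference for the sum-throughput discussed above, the following minimal sketch evaluates the textbook slotted ALOHA throughput on a plain collision channel. It assumes no capture capability and a single receiver, so it is not the expression derived in the paper; the function names `slotted_aloha_throughput` and `best_activation_probability` are hypothetical and chosen only for illustration.

```python
def slotted_aloha_throughput(num_users: int, p: float) -> float:
    """Expected number of successful packets per slot.

    Assumption (not from the abstract): classical collision channel without
    capture, i.e. a slot succeeds only if exactly one user transmits.
    Each of the num_users devices is active independently with probability p.
    """
    if num_users < 1 or not 0.0 <= p <= 1.0:
        raise ValueError("need num_users >= 1 and 0 <= p <= 1")
    # P(exactly one of num_users transmits) = n * p * (1 - p)^(n - 1)
    return num_users * p * (1.0 - p) ** (num_users - 1)


def best_activation_probability(num_users: int) -> float:
    """Activation probability maximizing the no-capture throughput (p = 1/n)."""
    return 1.0 / num_users


if __name__ == "__main__":
    n = 100
    for p in (0.001, best_activation_probability(n), 0.05):
        print(f"p={p:.3f}  throughput={slotted_aloha_throughput(n, p):.4f}")
```

With capture, a slot can still succeed when several users transmit, so a capture-aware expression can exceed this no-capture baseline.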

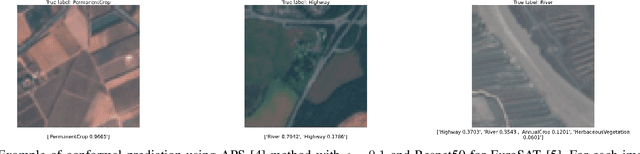

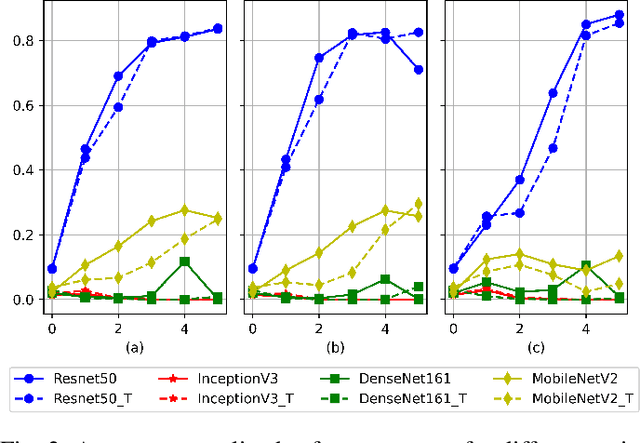

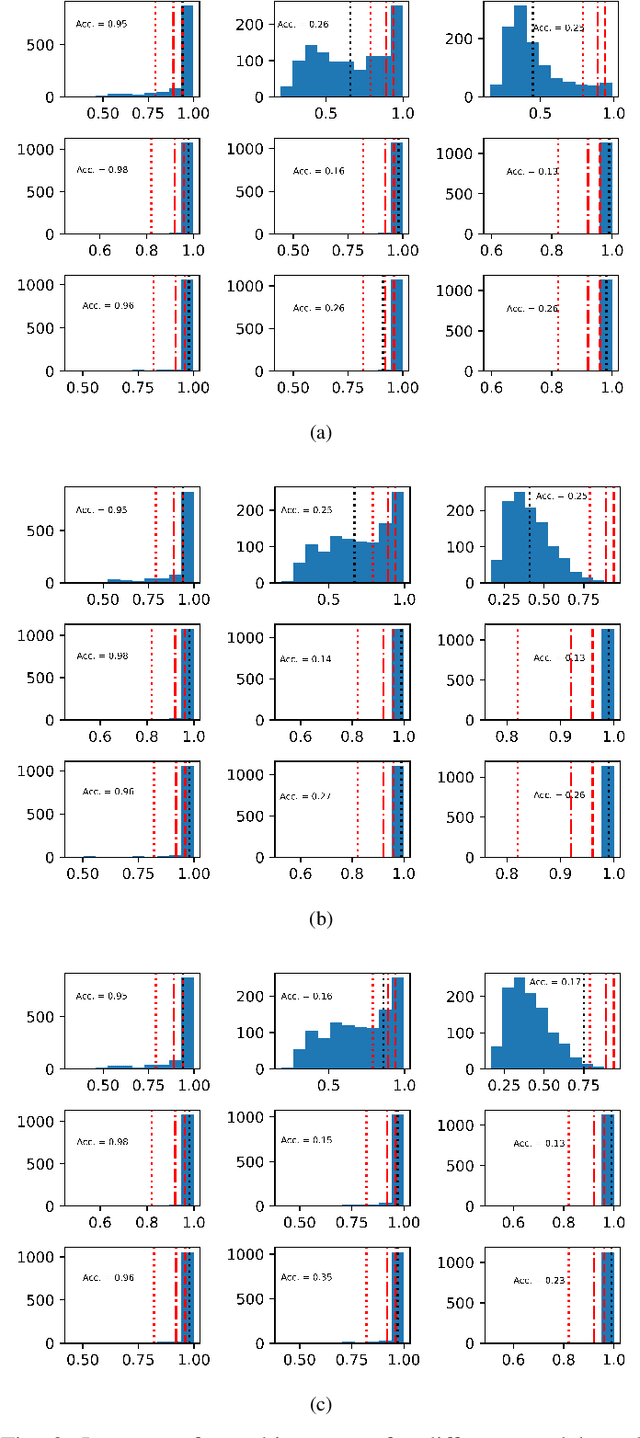

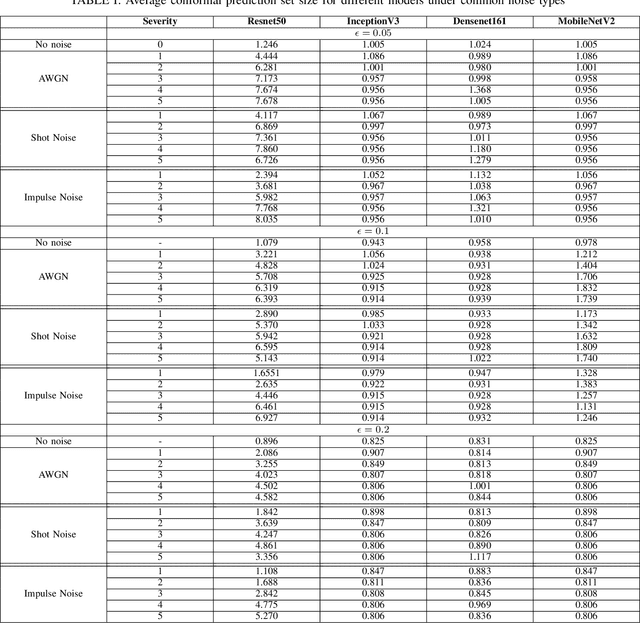

Onboard Out-of-Calibration Detection of Deep Learning Models using Conformal Prediction

May 04, 2024

Abstract:The black-box nature of deep learning models complicates their usage in critical applications such as remote sensing. Conformal prediction is a method to ensure trust in such scenarios. Subject to data exchangeability, conformal prediction provides finite-sample coverage guarantees in the form of a prediction set that is guaranteed to contain the true class up to a user-defined error rate. In this letter, we show that conformal prediction algorithms are related to the uncertainty of the deep learning model and that this relation can be used to detect if the deep learning model is out-of-calibration. Popular classification models like ResNet50, DenseNet161, InceptionV3, and MobileNetV2 are applied to remote sensing datasets such as EuroSAT to demonstrate how the model outputs become untrustworthy under noisy scenarios. Furthermore, an out-of-calibration detection procedure relating the model uncertainty and the average size of the conformal prediction set is presented.
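
The prediction-set construction mentioned above can be illustrated with a minimal sketch of split conformal prediction. It assumes the common nonconformity score "one minus the softmax probability of the true class", which the abstract does not specify; the helper names (`conformal_threshold`, `prediction_set`, `average_set_size`) are hypothetical, and the paper's actual detection procedure may differ.

```python
import math


def conformal_threshold(cal_probs, cal_labels, alpha):
    """Calibrate a score threshold on held-out data.

    Assumed score (not from the abstract): 1 - softmax probability of the
    true class. Returns the ceil((n + 1) * (1 - alpha))-th smallest score.
    """
    scores = sorted(1.0 - probs[label] for probs, label in zip(cal_probs, cal_labels))
    n = len(scores)
    rank = math.ceil((n + 1) * (1.0 - alpha))
    if rank > n:
        # Too few calibration points for this alpha: include every class.
        return float("inf")
    return scores[rank - 1]


def prediction_set(probs, threshold):
    """All classes whose score 1 - p does not exceed the threshold."""
    return [k for k, p in enumerate(probs) if 1.0 - p <= threshold]


def average_set_size(batch_probs, threshold):
    """Mean prediction-set size over a batch (the statistic tracked above)."""
    sizes = [len(prediction_set(p, threshold)) for p in batch_probs]
    return sum(sizes) / len(sizes)


if __name__ == "__main__":
    cal_probs = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4]]
    cal_labels = [0, 0, 1, 0]
    thr = conformal_threshold(cal_probs, cal_labels, alpha=0.2)
    print("threshold:", thr)
    print("set for confident input:", prediction_set([0.95, 0.05], thr))
    print("average size:", average_set_size([[0.95, 0.05], [0.7, 0.3]], thr))
```

Comparing the average set size on incoming data against its value on clean calibration data is one simple way to monitor for a shift; the threshold at which to raise an alarm is left open here.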

Fundamentals of Delay-Doppler Communications: Practical Implementation and Extensions to OTFS

Mar 21, 2024

Abstract:The recently proposed orthogonal time frequency space (OTFS) modulation, which is a typical Delay-Doppler (DD) communication scheme, has attracted significant attention thanks to its appealing performance over doubly-selective channels. In this paper, we present the fundamentals of general DD communications from the viewpoint of the Zak transform. We start our study by constructing DD domain basis functions aligning with the time-frequency (TF)-consistency condition, which are globally quasi-periodic and locally twisted-shifted. We unveil that these features translate to unique signal structures in both time and frequency, which are beneficial for communication purposes. Then, we focus on the practical implementations of DD Nyquist communications, where we show that rectangular windows achieve perfect DD orthogonality, while truncated periodic signals can obtain sufficient DD orthogonality. In particular, a smoothed rectangular window with excess bandwidth can result in slightly worse orthogonality but better pulse localization in the DD domain. Furthermore, we present a practical pulse shaping framework for general DD communications and derive the corresponding input-output relation under various shaping pulses. Our numerical results agree with our derivations and also demonstrate advantages of DD communications over conventional orthogonal frequency-division multiplexing (OFDM).

HyperLISTA-ABT: An Ultra-light Unfolded Network for Accurate Multi-component Differential Tomographic SAR Inversion

Sep 28, 2023

Abstract:Deep neural networks based on unrolled iterative algorithms have achieved remarkable success in sparse reconstruction applications, such as synthetic aperture radar (SAR) tomographic inversion (TomoSAR). However, the currently available deep learning-based TomoSAR algorithms are limited to three-dimensional (3D) reconstruction. The extension of deep learning-based algorithms to four-dimensional (4D) imaging, i.e., differential TomoSAR (D-TomoSAR) applications, is impeded mainly by the high-dimensional weight matrices required by the network designed for D-TomoSAR inversion, which typically contain millions of freely trainable parameters. Learning such a huge number of weights requires an enormous number of training samples, resulting in a large memory burden and excessive time consumption. To tackle this issue, we propose an efficient and accurate algorithm called HyperLISTA-ABT. The weights in HyperLISTA-ABT are determined analytically according to a minimum coherence criterion, trimming the model down to an ultra-light one with only three hyperparameters. Additionally, HyperLISTA-ABT improves on global thresholding by utilizing an adaptive blockwise thresholding scheme, which applies block-coordinate techniques and conducts thresholding in local blocks, so that weak expressions and local features can be retained in the shrinkage step layer by layer. Simulations demonstrated the effectiveness of our approach, showing that HyperLISTA-ABT achieves superior computational efficiency with no significant performance degradation compared to state-of-the-art methods. Real data experiments showed that a high-quality 4D point cloud could be reconstructed over a large area by the proposed HyperLISTA-ABT with affordable computational resources and in a short time.

Multi-static Parameter Estimation in the Near/Far Field Beam Space for Integrated Sensing and Communication Applications

Sep 26, 2023

Abstract:This work proposes a maximum likelihood (ML)-based parameter estimation framework for a millimeter wave (mmWave) integrated sensing and communication (ISAC) system in a multi-static configuration using energy-efficient hybrid digital-analog arrays. Due to the typically large arrays deployed in the higher frequency bands to mitigate isotropic path loss, such arrays may operate in the near-field regime. The proposed parameter estimation in this work consists of a two-stage estimation process, where the first stage is based on far-field assumptions, and is used to obtain a first estimate of the target parameters. In cases where the target is determined to be in the near-field of the arrays, a second estimation based on near-field assumptions is carried out to obtain more accurate estimates. In particular, we select beamfocusing array weights designed to achieve a constant gain over an extended spatial region and re-estimate the target parameters at the receivers. We evaluate the effectiveness of the proposed framework in numerous scenarios through numerical simulations and demonstrate the impact of the custom-designed flat-gain beamfocusing codewords in increasing the communication performance of the system.

Extended Target Parameter Estimation and Tracking with an HDA Setup for ISAC Applications

Aug 16, 2023

Abstract:We investigate radar parameter estimation and beam tracking with a hybrid digital-analog (HDA) architecture in a multi-block measurement framework using an extended target model. In the considered setup, the backscattered data signal is utilized to predict the user position in the next time slots. Specifically, a simplified maximum likelihood framework is adopted for parameter estimation, based on which a simple tracking scheme is also developed. Furthermore, the proposed framework supports adaptive transmitter beamwidth selection, whose effects on the communication performance are also studied. Finally, we verify the effectiveness of the proposed framework via numerical simulations over complex motion patterns that emulate a realistic integrated sensing and communication (ISAC) scenario.

Integrated Sensing and Communication with MOCZ Waveform

Jul 04, 2023

Abstract:In this work, we propose a waveform based on Modulation on Conjugate-reciprocal Zeros (MOCZ), originally proposed for short-packet communications in [1], as a new Integrated Sensing and Communication (ISAC) waveform. Having previously established the key advantages of MOCZ for noncoherent and sporadic communication, here we leverage the optimal auto-correlation property of Binary MOCZ (BMOCZ) for sensing applications. Due to this property, which eliminates the need for separate communication and radar-centric waveforms, we propose a new frame structure for ISAC, where pilot sequences and preambles become obsolete and are completely removed from the frame. As a result, the data rate can be significantly improved. Aimed at (hardware-) cost-effective radar-sensing applications, we consider a Hybrid Digital-Analog (HDA) beamforming architecture for data transmission and radar sensing. We demonstrate via extensive simulations that a communication data rate significantly higher than that of existing standards can be achieved, while simultaneously achieving sensing performance comparable to state-of-the-art sensing systems.

Basis Pursuit Denoising via Recurrent Neural Network Applied to Super-resolving SAR Tomography

May 23, 2023

Abstract:Finding sparse solutions of underdetermined linear systems commonly requires solving an L1-regularized least-squares minimization problem, also known as basis pursuit denoising (BPDN). Such problems are computationally expensive since they cannot be solved analytically. An emerging technique known as deep unrolling provides a good combination of the descriptive ability of neural networks, explainability, and computational efficiency for BPDN. Many unrolled neural networks for BPDN, e.g. the learned iterative shrinkage thresholding algorithm and its variants, employ shrinkage functions to prune elements with small magnitude. Through experiments on synthetic aperture radar tomography (TomoSAR), we discover that the shrinkage step leads to unavoidable information loss in the dynamics of networks and degrades the performance of the model. We propose a recurrent neural network (RNN) with novel sparse minimal gated units (SMGUs) to solve the information loss issue. The proposed RNN architecture with SMGUs benefits from incorporating historical information into optimization, and thus effectively preserves full information in the final output. Taking TomoSAR inversion as an example, extensive simulations demonstrated that the proposed RNN outperforms the state-of-the-art deep learning-based algorithm in terms of super-resolution power as well as generalization ability. It achieved a 10% to 20% higher double scatterers detection rate and is less sensitive to phase and amplitude ratio differences between scatterers. Tests on real TerraSAR-X spotlight images also show a high-quality 3-D reconstruction of the test site.
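
To make the shrinkage step concrete, here is a minimal sketch of the plain (non-learned) iterative shrinkage thresholding algorithm for BPDN, minimizing 0.5 * ||Ax - y||^2 + lam * ||x||_1 over real vectors. This is the classical baseline that unrolled networks build on, not the proposed RNN with SMGUs; the function names, the fixed step size, and the toy data are assumptions made for illustration.

```python
def soft_threshold(value: float, tau: float) -> float:
    """Shrinkage function: pull value toward zero by tau, zeroing small entries."""
    if value > tau:
        return value - tau
    if value < -tau:
        return value + tau
    return 0.0


def ista(A, y, lam, step, num_iters=200):
    """Plain ISTA for min_x 0.5 * ||A x - y||^2 + lam * ||x||_1 (real-valued).

    A is a list of rows, y a list of measurements. The step size is assumed
    to satisfy step <= 1 / L, where L is the largest eigenvalue of A^T A.
    """
    m, n = len(A), len(A[0])
    x = [0.0] * n
    for _ in range(num_iters):
        # Residual A x - y and gradient A^T (A x - y) of the data-fit term.
        residual = [sum(A[i][j] * x[j] for j in range(n)) - y[i] for i in range(m)]
        grad = [sum(A[i][j] * residual[i] for i in range(m)) for j in range(n)]
        # Gradient step followed by shrinkage.
        x = [soft_threshold(x[j] - step * grad[j], step * lam) for j in range(n)]
    return x


if __name__ == "__main__":
    # Toy example with an identity sensing matrix: one strong, one weak entry.
    A = [[1.0, 0.0], [0.0, 1.0]]
    y = [3.0, 0.2]
    print(ista(A, y, lam=0.5, step=1.0))
```

In this toy case the weak entry (0.2) falls below the threshold and is set to zero in every iteration, which is a small-scale picture of how shrinkage can discard weak components.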
