Peter Brusilovsky

Skill-based Explanations for Serendipitous Course Recommendation

Aug 27, 2025

Abstract:Academic choice is crucial in U.S. undergraduate education, allowing students significant freedom in course selection. However, navigating the complex academic environment is challenging due to limited information, guidance, and an overwhelming number of choices, compounded by time restrictions and the high demand for popular courses. Although career counselors exist, their numbers are insufficient, and course recommendation systems, though personalized, often lack insight into student perceptions and explanations to assess course relevance. In this paper, a deep learning-based concept extraction model is developed to efficiently extract relevant concepts from course descriptions to improve the recommendation process. Using this model, the study examines the effects of skill-based explanations within a serendipitous recommendation framework, tested through the AskOski system at the University of California, Berkeley. The findings indicate that these explanations not only increase user interest, particularly in courses with high unexpectedness, but also bolster decision-making confidence. This underscores the importance of integrating skill-related data and explanations into educational recommendation systems.

Use Random Selection for Now: Investigation of Few-Shot Selection Strategies in LLM-based Text Augmentation for Classification

Oct 14, 2024

Abstract:Generative large language models (LLMs) are increasingly used for data augmentation tasks, where text samples are paraphrased (or generated anew) and then used for classifier fine-tuning. Existing work on augmentation leverages few-shot scenarios, where samples are given to LLMs as part of prompts, leading to better augmentations. Yet the samples are mostly selected randomly, and a comprehensive overview of the effects of other (more "informed") sample selection strategies is lacking. In this work, we compare sample selection strategies from the few-shot learning literature and investigate their effects in LLM-based textual augmentation. We evaluate this on in-distribution and out-of-distribution classifier performance. Results indicate that while some "informed" selection strategies increase the performance of models, especially for out-of-distribution data, this happens only seldom and with marginal performance increases. Unless further advances are made, a default of random sample selection remains a good option for augmentation practitioners.
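The random few-shot selection that the abstract recommends as a default can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `build_augmentation_prompt`, the prompt wording, and the example sentences are all assumptions made here for demonstration.

```python
import random


def build_augmentation_prompt(seed_text, pool, k=3, rng=None):
    """Pick k few-shot examples uniformly at random and format a paraphrase prompt.

    The prompt template is hypothetical; only the random selection step
    reflects the strategy discussed in the abstract.
    """
    rng = rng or random.Random()
    # Never show the seed itself as one of its own few-shot examples.
    candidates = [text for text in pool if text != seed_text]
    shots = rng.sample(candidates, min(k, len(candidates)))
    lines = ["Paraphrase the final sentence. Examples from the same class:"]
    lines += [f"- {shot}" for shot in shots]
    lines.append(f"Sentence: {seed_text}")
    return "\n".join(lines), shots


# Hypothetical samples of a single intent class.
pool = [
    "book a flight to Boston",
    "I need a plane ticket to Denver",
    "reserve a seat on the next flight to Miami",
    "find me a flight to Chicago tomorrow",
]
prompt, shots = build_augmentation_prompt(pool[0], pool, k=2, rng=random.Random(0))
```

An "informed" strategy would replace only the `rng.sample` line with some ranking of `candidates`, which keeps the comparison between strategies straightforward.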

LLMs vs Established Text Augmentation Techniques for Classification: When do the Benefits Outweight the Costs?

Aug 29, 2024

Abstract:Generative large language models (LLMs) are increasingly being used for data augmentation tasks, where text samples are LLM-paraphrased and then used for classifier fine-tuning. However, research that would confirm a clear cost-benefit advantage of LLMs over more established augmentation methods is largely missing. To study if (and when) LLM-based augmentation is advantageous, we compared the effects of recent LLM augmentation methods with established ones on 6 datasets, 3 classifiers and 2 fine-tuning methods. We also varied the number of seeds and collected samples to better explore the downstream model accuracy space. Finally, we performed a cost-benefit analysis and show that LLM-based methods are worthy of deployment only when a very small number of seeds is used. Moreover, in many cases, established methods lead to similar or better model accuracies.

Human-AI Co-Creation of Worked Examples for Programming Classes

Feb 29, 2024

Abstract:Worked examples (solutions to typical programming problems, presented as source code in a certain language and used to explain topics from a programming class) are among the most popular types of learning content in programming classes. Most approaches and tools for presenting these examples to students are based on line-by-line explanations of the example code. However, instructors rarely have time to provide line-by-line explanations for the large number of examples typically used in a programming class. In this paper, we explore and assess a human-AI collaboration approach to authoring worked examples for Java programming. We introduce an authoring system for creating Java worked examples that generates a starting version of code explanations and presents it to the instructor to edit if necessary. We also present a study that assesses the quality of explanations created with this approach.

Effects of diversity incentives on sample diversity and downstream model performance in LLM-based text augmentation

Jan 12, 2024

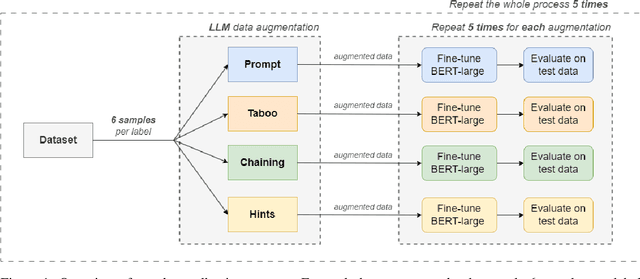

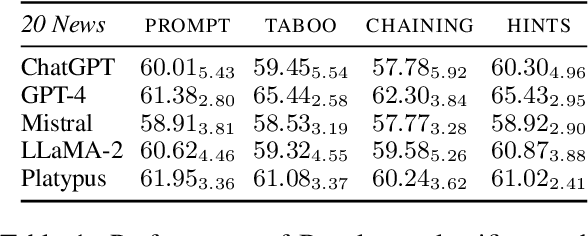

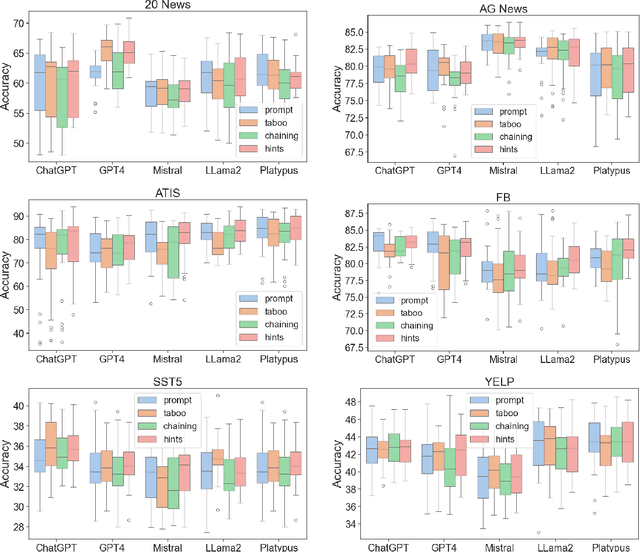

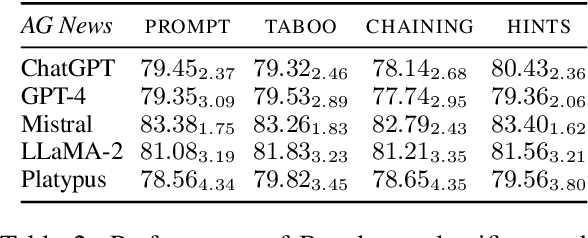

Abstract:The latest generative large language models (LLMs) have found their application in data augmentation tasks, where small numbers of text samples are LLM-paraphrased and then used to fine-tune the model. However, more research is needed to assess how different prompts, seed data selection strategies, filtering methods, or model settings affect the quality of paraphrased data (and downstream models). In this study, we investigate three text diversity incentive methods well established in crowdsourcing: taboo words, hints by previous outlier solutions, and chaining on previous outlier solutions. Using these incentive methods as part of instructions to LLMs augmenting text datasets, we measure their effects on generated texts' lexical diversity and downstream model performance. We compare the effects over 5 different LLMs and 6 datasets. We show that diversity is most increased by taboo words, while downstream model performance is highest when previously created paraphrases are used as hints.
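To make the taboo-words incentive and the diversity measurement concrete, here is a small sketch. It rests on assumptions that are not from the abstract: lexical diversity is approximated by a type-token ratio, taboo words are taken to be the most frequent non-stopword tokens in earlier paraphrases, and the stopword list and instruction wording are invented for illustration.

```python
from collections import Counter

# Hypothetical, deliberately tiny stopword list.
STOPWORDS = frozenset({"a", "the", "to", "i", "me", "my", "please"})


def tokenize(text):
    """Lowercase whitespace tokenizer that strips basic punctuation."""
    tokens = (word.strip(".,!?").lower() for word in text.split())
    return [token for token in tokens if token]


def type_token_ratio(texts):
    """Unique tokens divided by total tokens: a simple lexical diversity proxy."""
    tokens = [token for text in texts for token in tokenize(text)]
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def taboo_words(texts, n=3):
    """Return the n most frequent non-stopword tokens in earlier paraphrases."""
    counts = Counter(
        token for text in texts for token in tokenize(text) if token not in STOPWORDS
    )
    return [word for word, _ in counts.most_common(n)]


paraphrases = [
    "Book a flight to Boston",
    "Please book me a flight to Boston",
    "I need a flight to Boston",
]
taboo = taboo_words(paraphrases, n=2)
instruction = "Paraphrase the sentence without using these words: " + ", ".join(taboo)
ratio = type_token_ratio(paraphrases)
```

Forbidding the most repeated content words pushes the next round of paraphrases toward new vocabulary, which is what a rising type-token ratio would register.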

Authoring Worked Examples for Java Programming with Human-AI Collaboration

Dec 04, 2023

Abstract:Worked examples (solutions to typical programming problems, presented as source code in a certain language and used to explain topics from a programming class) are among the most popular types of learning content in programming classes. Most approaches and tools for presenting these examples to students are based on line-by-line explanations of the example code. However, instructors rarely have time to provide line-by-line explanations for the large number of examples typically used in a programming class. In this paper, we explore and assess a human-AI collaboration approach to authoring worked examples for Java programming. We introduce an authoring system for creating Java worked examples that generates a starting version of code explanations and presents it to the instructor to edit if necessary. We also present a study that assesses the quality of explanations created with this approach.

ChatGPT to Replace Crowdsourcing of Paraphrases for Intent Classification: Higher Diversity and Comparable Model Robustness

May 22, 2023

Abstract:The emergence of generative large language models (LLMs) raises the question: what will be their impact on crowdsourcing? Traditionally, crowdsourcing has been used for acquiring solutions to a wide variety of human-intelligence tasks, including ones involving text generation, manipulation or evaluation. For some of these tasks, models like ChatGPT can potentially substitute human workers. In this study, we investigate whether this is the case for the task of paraphrase generation for intent classification. We quasi-replicated the data collection methodology of an existing crowdsourcing study (similar scale, prompts and seed data) using ChatGPT. We show that ChatGPT-created paraphrases are more diverse and lead to more robust models.

From Ranked Lists to Carousels: A Carousel Click Model

Sep 27, 2022

Abstract:Carousel-based recommendation interfaces allow users to explore recommended items in a structured, efficient, and visually-appealing way. This made them a de-facto standard approach to recommending items to end users in many real-life recommenders. In this work, we try to explain the efficiency of carousel recommenders using a carousel click model, a generative model of user interaction with carousel-based recommender interfaces. We study this model both analytically and empirically. Our analytical results show that the user can examine more items in the carousel click model than in a single ranked list, due to the structured way of browsing. These results are supported by a series of experiments, where we integrate the carousel click model with a recommender based on matrix factorization. We show that the combined recommender performs well on held-out test data, and leads to higher engagement with recommendations than a traditional single ranked list.

Does Order Matter? An Empirical Study on Generating Multiple Keyphrases as a Sequence

Oct 20, 2019

Abstract:Recently, concatenating multiple keyphrases as a target sequence has been proposed as a new learning paradigm for keyphrase generation. Existing studies concatenate target keyphrases in different orders but no study has examined the effects of ordering on models' behavior. In this paper, we propose several orderings for concatenation and inspect the important factors for training a successful keyphrase generation model. By running comprehensive comparisons, we observe one preferable ordering and summarize a number of empirical findings and challenges, which can shed light on future research on this line of work.
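The idea of concatenating keyphrases into one target sequence under different orderings can be illustrated with a short sketch. The orderings, the `<sep>` delimiter, and the function name `build_target` below are assumptions chosen for illustration; they are not necessarily the orderings the paper proposes or the one it finds preferable.

```python
def build_target(keyphrases, source_text, order="original", sep=" <sep> "):
    """Join keyphrases into one training target under a chosen ordering.

    All ordering names here are hypothetical examples.
    """
    source = source_text.lower()
    if order == "original":
        ordered = list(keyphrases)
    elif order == "alphabetical":
        ordered = sorted(keyphrases, key=str.lower)
    elif order == "length":
        # Fewest words first; ties keep their original relative order.
        ordered = sorted(keyphrases, key=lambda phrase: len(phrase.split()))
    elif order == "present_first":
        # Phrases found in the source come first, by position of first
        # occurrence; phrases absent from the source follow.
        present = [p for p in keyphrases if p.lower() in source]
        absent = [p for p in keyphrases if p.lower() not in source]
        present.sort(key=lambda phrase: source.find(phrase.lower()))
        ordered = present + absent
    else:
        raise ValueError(f"unknown ordering: {order}")
    return sep.join(ordered)


source_text = "We study keyphrase generation with an encoder-decoder model."
keyphrases = ["sequence learning", "encoder-decoder", "keyphrase generation"]

alphabetical = build_target(keyphrases, source_text, order="alphabetical")
present_first = build_target(keyphrases, source_text, order="present_first")
```

Because a sequence model is trained to reproduce the target token by token, each ordering yields a different training signal for the same set of keyphrases, which is why the choice of ordering can affect model behavior.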

Deep Keyphrase Generation

Sep 18, 2018

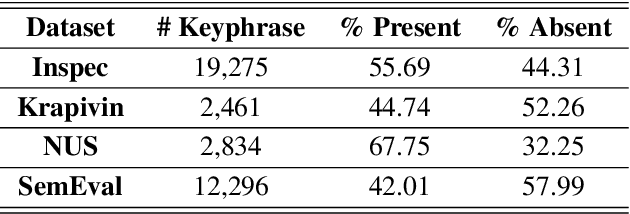

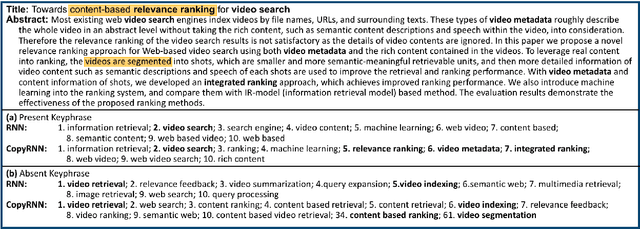

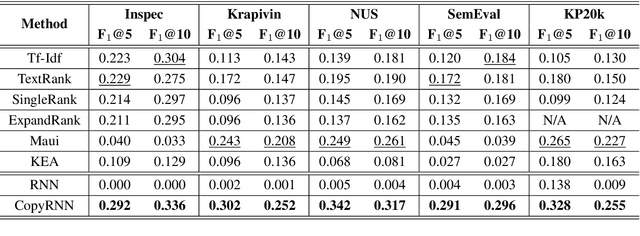

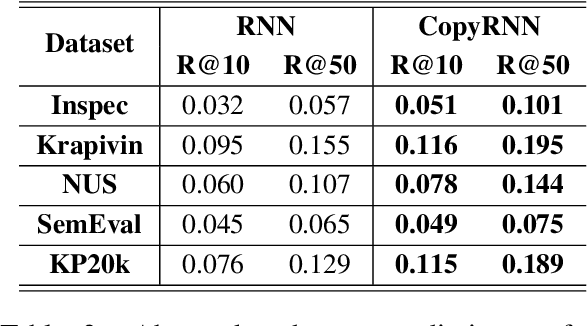

Abstract:Keyphrases provide highly summative information that can be effectively used for understanding, organizing and retrieving text content. Though previous studies have provided many workable solutions for automated keyphrase extraction, they commonly divide the to-be-summarized content into multiple text chunks, then rank and select the most meaningful ones. These approaches can neither identify keyphrases that do not appear in the text, nor capture the real semantic meaning behind the text. We propose a generative model for keyphrase prediction with an encoder-decoder framework, which can effectively overcome the above drawbacks. We name it deep keyphrase generation since it attempts to capture the deep semantic meaning of the content with a deep learning method. Empirical analysis on six datasets demonstrates that our proposed model not only achieves a significant performance boost on extracting keyphrases that appear in the source text, but also can generate absent keyphrases based on the semantic meaning of the text. Code and dataset are available at https://github.com/memray/seq2seq-keyphrase.
