Pedro H. C. Avelar

Solving the Kidney-Exchange Problem via Graph Neural Networks with No Supervision

Apr 19, 2023

Abstract:This paper introduces a new learning-based approach for approximately solving the Kidney-Exchange Problem (KEP), an NP-hard problem on graphs. Given a pool of kidney donors and patients waiting for kidney donations, the problem consists of selecting a set of donations that optimizes the quantity and quality of transplants performed while respecting a set of constraints on the arrangement of these donations. The proposed technique consists of two main steps: the first is a Graph Neural Network (GNN) trained without supervision; the second is a deterministic non-learned search heuristic that uses the output of the GNN to find paths and cycles. To allow for comparisons, we also implemented and tested an exact solution method using integer programming, two greedy search heuristics without the machine learning module, and the GNN alone without a heuristic. We analyze and compare the methods and conclude that the learning-based two-stage approach yields the best solution quality, outputting approximate solutions on average 1.1 times more valuable than those from the deterministic heuristic alone.

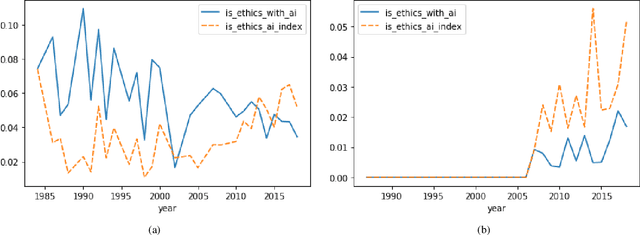

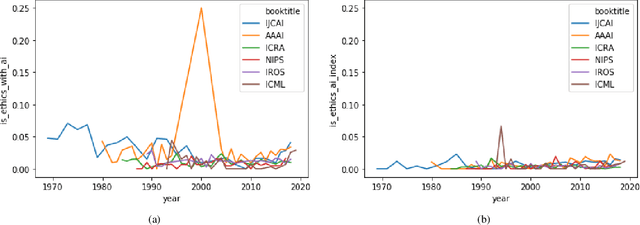

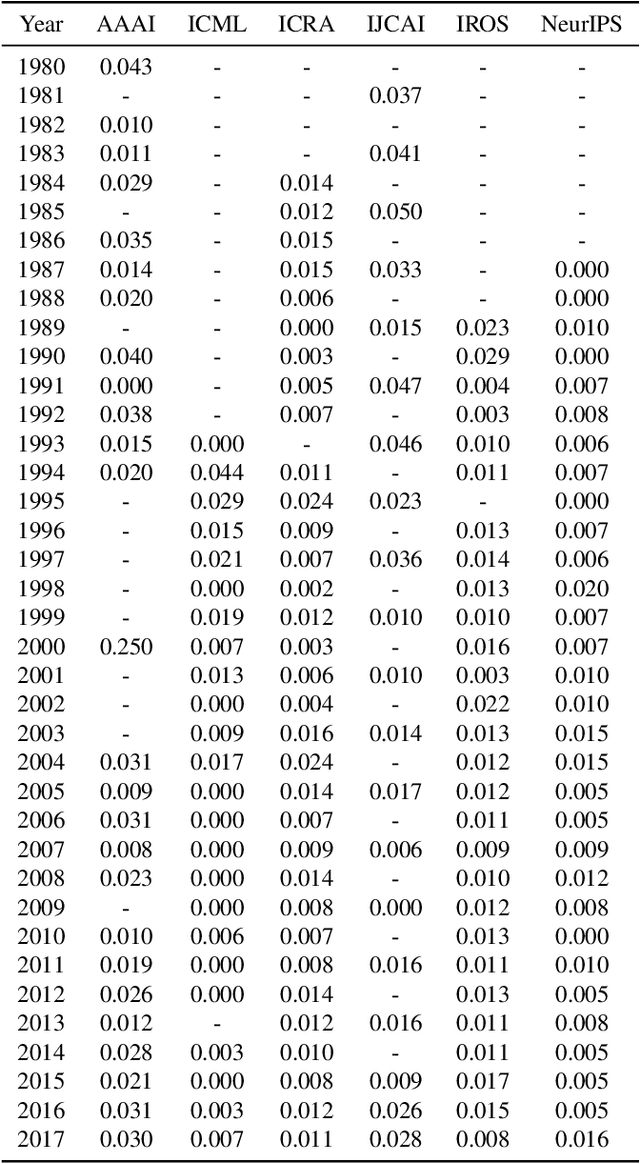

Measuring Ethics in AI with AI: A Methodology and Dataset Construction

Jul 26, 2021

Abstract:Recently, the use of sound measures and metrics in Artificial Intelligence has become a subject of interest for academia, government, and industry. Efforts towards measuring different phenomena have gained traction in the AI community, as illustrated by the publication of several influential field reports and policy documents. These metrics are designed to help decision makers inform themselves about the fast-moving and impactful influences of key advances in Artificial Intelligence in general and Machine Learning in particular. In this paper we propose to use such newfound capabilities of AI technologies to augment our AI measuring capabilities. We do so by training a model to classify publications related to ethical issues and concerns. In our methodology we use an expert, manually curated dataset as the training set and then evaluate a large set of research papers. Finally, we highlight the implications of AI metrics, in particular their contribution towards developing trustful and fair AI-based tools and technologies. Keywords: AI Ethics; AI Fairness; AI Measurement; Ethics in Computer Science.

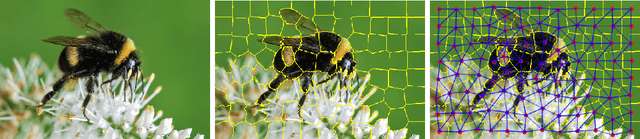

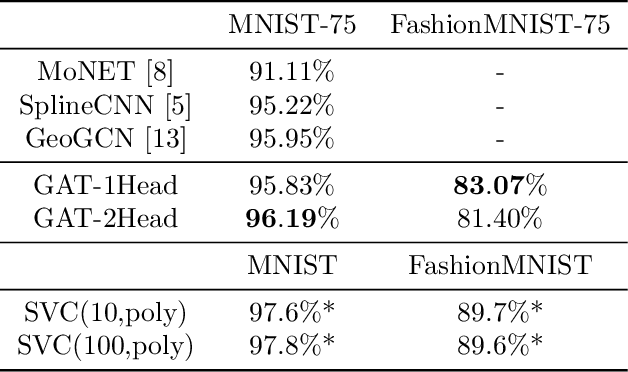

Superpixel Image Classification with Graph Attention Networks

Feb 13, 2020

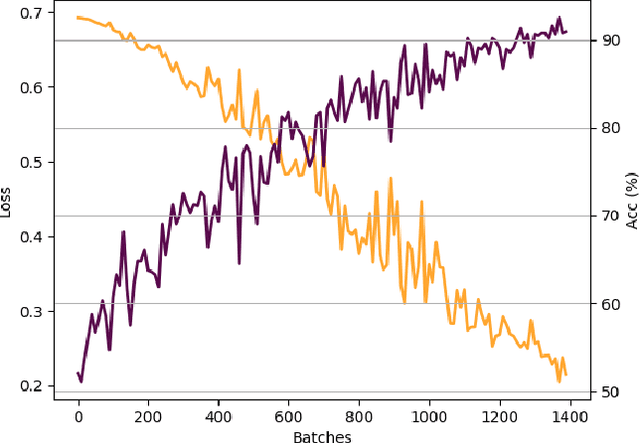

Abstract:This document reports the use of Graph Attention Networks for classifying oversegmented images, as well as a general procedure for generating oversegmented versions of image-based datasets. The code and learnt models for/from the experiments are available on GitHub. The experiments were run from June 2019 until December 2019. By using self-attention in a more sparsely connected graph network, we obtained better results than the baseline models that use geometric distance-based attention.

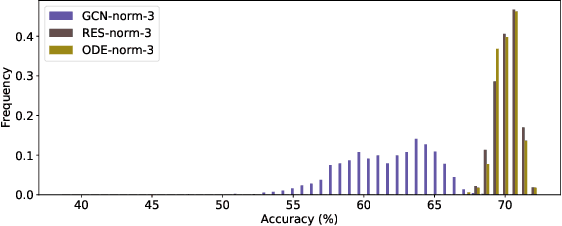

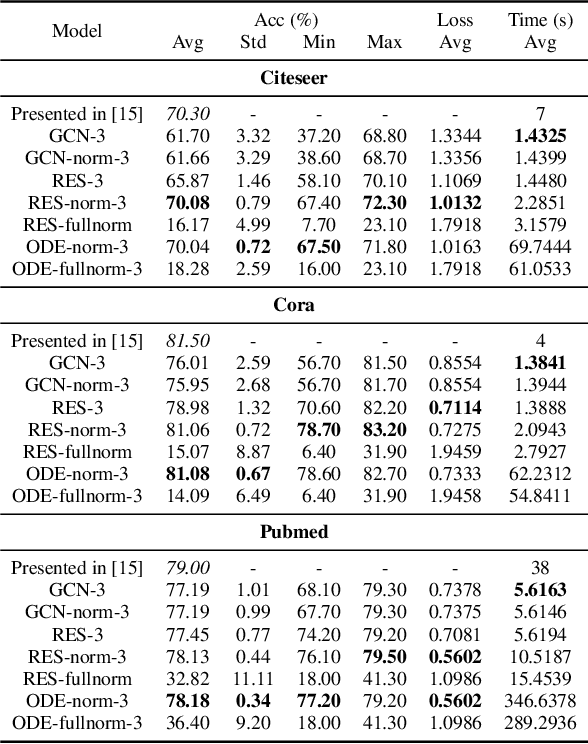

Discrete and Continuous Deep Residual Learning Over Graphs

Nov 26, 2019

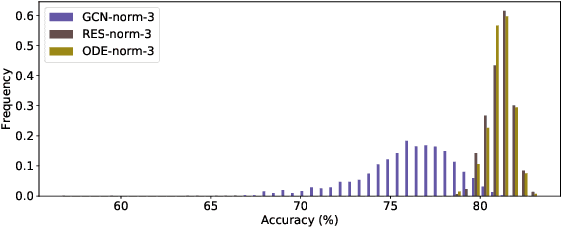

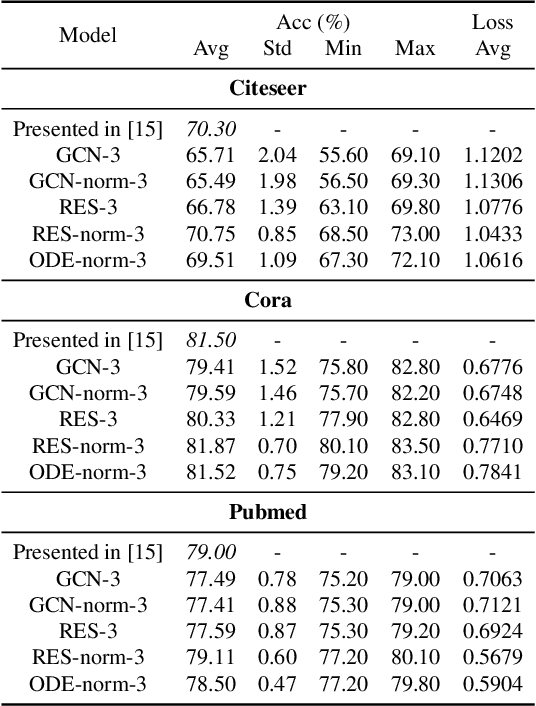

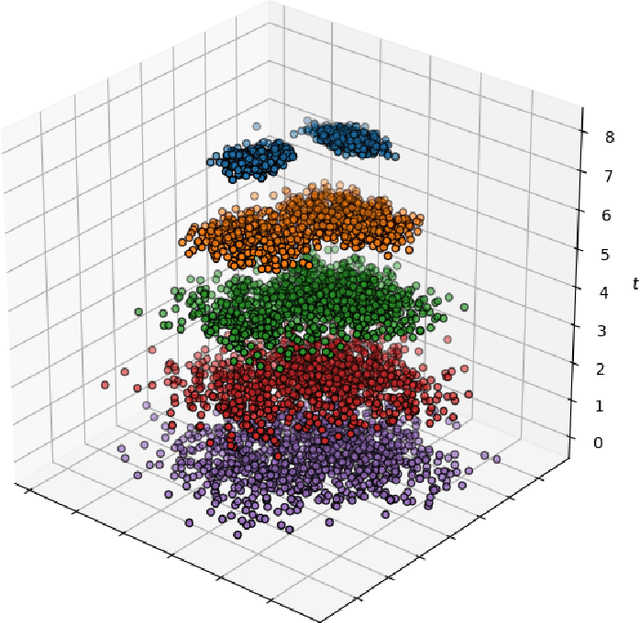

Abstract:In this paper we propose the use of continuous residual modules for graph kernels in Graph Neural Networks. We show how both discrete and continuous residual layers allow for more robust training, where continuous residual layers are those which produce their output by integrating through an Ordinary Differential Equation (ODE) solver. We experimentally show that these residual modules achieve better results than non-residual modules when multiple layers are used, mitigating the low-pass filtering effect of GCN-based models. Finally, we apply and analyse the behaviour of these techniques and give pointers to how this technique can be useful in other domains by allowing more predictable behaviour under dynamic times of computation.
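
The relationship between the discrete and continuous residual layers described above can be illustrated with a small sketch. It is not the paper's implementation: the propagation function `propagate`, the scalar vertex features, the `weight` parameter, and the fixed-step Euler integrator (standing in for a general ODE solver) are all assumptions made for illustration.

```python
from math import tanh

def propagate(h, neighbours, weight=0.5):
    """Hypothetical graph kernel f(h): average each vertex with its
    neighbours, scale by a fixed weight, and squash with tanh."""
    out = []
    for v, nbrs in enumerate(neighbours):
        group = [h[v]] + [h[u] for u in nbrs]
        out.append(tanh(weight * sum(group) / len(group)))
    return out

def residual_layer(h, neighbours):
    """Discrete residual layer: h <- h + f(h)."""
    return [x + dx for x, dx in zip(h, propagate(h, neighbours))]

def continuous_residual(h, neighbours, t_end=1.0, steps=10):
    """Continuous residual layer: integrate dh/dt = f(h) from 0 to t_end.
    A fixed-step Euler scheme is assumed here in place of an ODE solver."""
    dt = t_end / steps
    for _ in range(steps):
        h = [x + dt * dx for x, dx in zip(h, propagate(h, neighbours))]
    return h

# Path graph 0 - 1 - 2, one scalar feature per vertex.
neighbours = [[1], [0, 2], [1]]
h0 = [1.0, 0.0, -1.0]
one_step = continuous_residual(h0, neighbours, t_end=1.0, steps=1)
many_steps = continuous_residual(h0, neighbours, t_end=1.0, steps=100)
```

Under these assumptions, a single Euler step of size 1 coincides with the discrete residual layer, while a larger number of steps approximates the continuous flow more closely.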

Typed Graph Networks

Feb 05, 2019

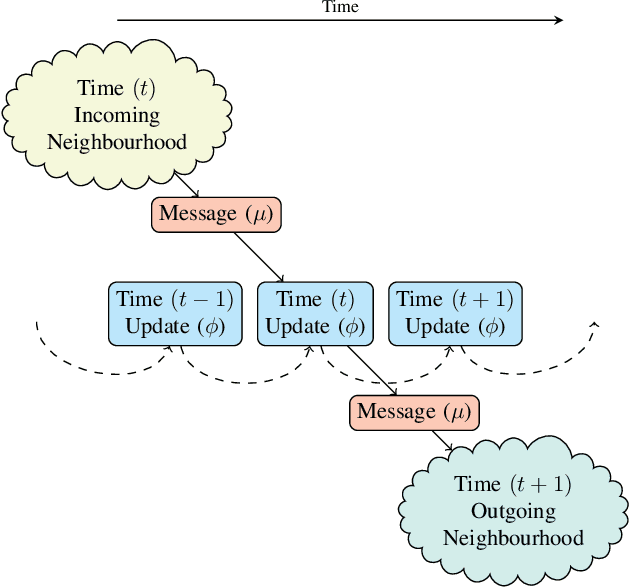

Abstract:Recently, the deep learning community has given growing attention to neural architectures engineered to learn problems in relational domains. Convolutional Neural Networks employ parameter sharing over the image domain, tying the weights of neural connections on a grid topology and thus enforcing the learning of a number of convolutional kernels. By instantiating trainable neural modules and assembling them in varied configurations (apart from grids), one can enforce parameter sharing over graphs, yielding models which can effectively be fed with relational data. In this context, vertices in a graph can be projected into a hyperdimensional real space and iteratively refined over many message-passing iterations in an end-to-end differentiable architecture. Architectures of this family have been referred to with several definitions in the literature, such as Graph Neural Networks, Message-passing Neural Networks, Relational Networks and Graph Networks. In this paper, we revisit the original Graph Neural Network model and show that it generalises many of the recent models, which in turn benefit from the insight of thinking about vertex **types**. To illustrate the generality of the original model, we present a Graph Neural Network formalisation, which partitions the vertices of a graph into a number of types. Each type represents an entity in the ontology of the problem one wants to learn. This allows, for instance, one to assign embeddings to edges, hyperedges, and any number of global attributes of the graph. As a companion to this paper we provide a Python/TensorFlow library to facilitate the development of such architectures, with which we instantiate the formalisation to reproduce a number of models proposed in the current literature.

Assessing Gender Bias in Machine Translation -- A Case Study with Google Translate

Nov 05, 2018

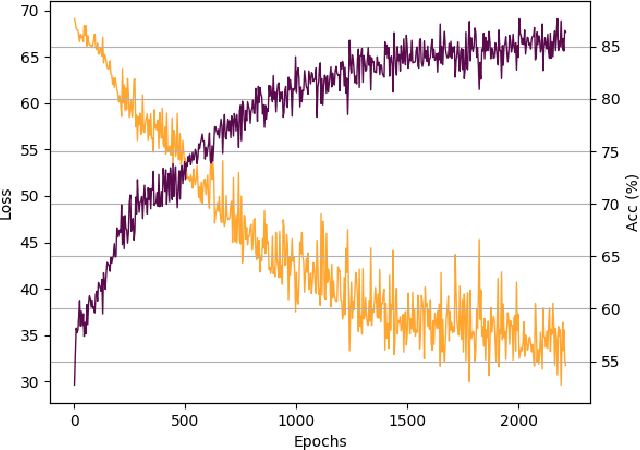

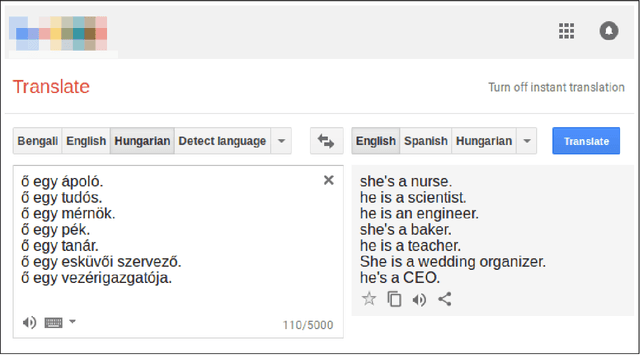

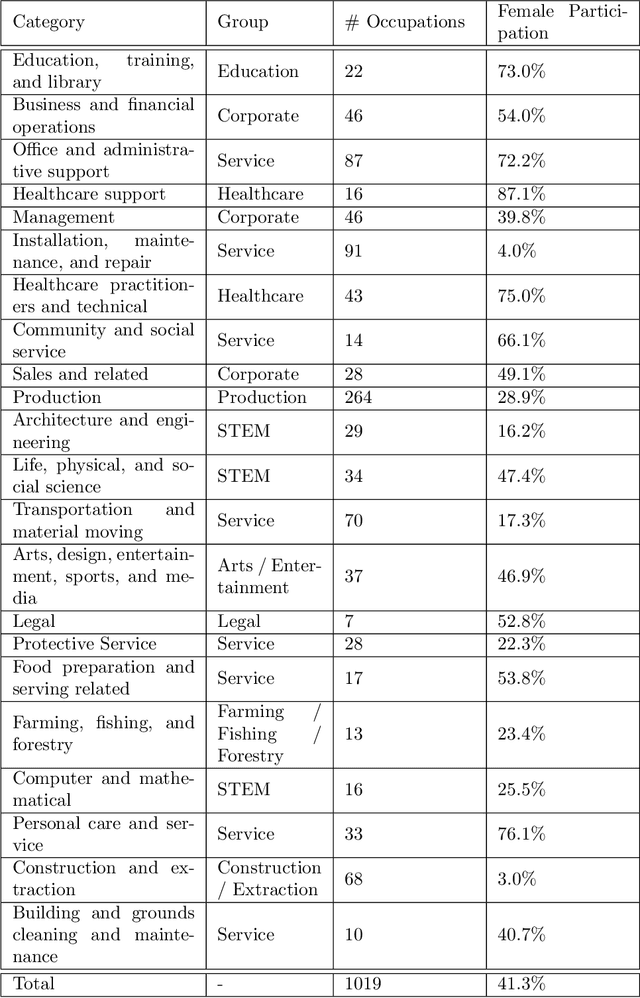

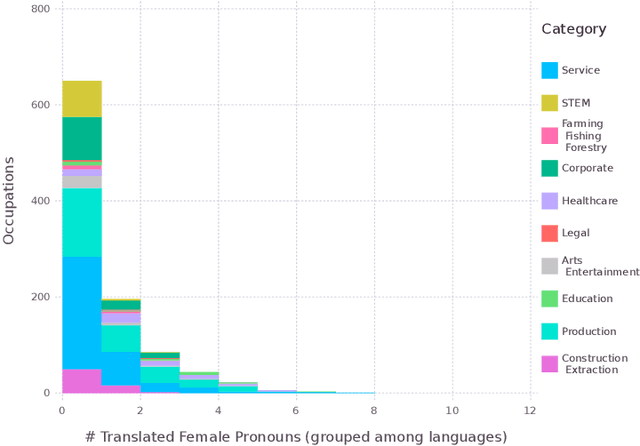

Abstract:Recently there has been a growing concern about machine bias, where trained statistical models grow to reflect controversial societal asymmetries, such as gender or racial bias. A significant number of AI tools have recently been suggested to be harmfully biased towards some minority, with reports of racist criminal behavior predictors, the iPhone X failing to differentiate between two Asian people, and Google Photos mistakenly classifying black people as gorillas. Although a systematic study of such biases can be difficult, we believe that automated translation tools can be exploited through gender-neutral languages to yield a window into the phenomenon of gender bias in AI. In this paper, we start with a comprehensive list of job positions from the U.S. Bureau of Labor Statistics (BLS) and use it to build sentences in constructions like "He/She is an Engineer" in 12 different gender-neutral languages such as Hungarian, Chinese, Yoruba, and several others. We translate these sentences into English using the Google Translate API, and collect statistics about the frequency of female, male and gender-neutral pronouns in the translated output. We show that GT exhibits a strong tendency towards male defaults, in particular for fields linked to unbalanced gender distribution such as STEM jobs. We run these statistics against BLS data for the frequency of female participation in each job position, showing that GT fails to reproduce a real-world distribution of female workers. We provide experimental evidence that even if one does not expect in principle a 50:50 pronominal gender distribution, GT yields male defaults much more frequently than what would be expected from demographic data alone. We are hopeful that this work will ignite a debate about the need to augment current statistical translation tools with debiasing techniques which can already be found in the scientific literature.
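
The pronoun-frequency statistic described above can be sketched as follows. This is only an illustration: the pronoun table, the function names, and the sample sentences are hypothetical placeholders (not outputs of Google Translate and not data from the paper), and no translation API is called.

```python
import re
from collections import Counter

# Assumed mapping from English pronouns to gender categories.
PRONOUNS = {
    "he": "male", "him": "male", "his": "male",
    "she": "female", "her": "female", "hers": "female",
    "it": "neutral", "they": "neutral", "them": "neutral",
}

def classify(sentence):
    """Return the category of the first pronoun found, or 'none'."""
    for token in re.findall(r"[a-z']+", sentence.lower()):
        if token in PRONOUNS:
            return PRONOUNS[token]
    return "none"

def pronoun_frequencies(sentences):
    """Relative frequency of each pronoun category over the sentences."""
    counts = Counter(classify(s) for s in sentences)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}

# Made-up English sentences standing in for translated output.
sample = [
    "He is an engineer",
    "She is a nurse",
    "He is a doctor",
    "They are a teacher",
]
frequencies = pronoun_frequencies(sample)
```

Frequencies computed this way per occupation could then be compared against a reference rate of female participation, which is the comparison the abstract describes.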

Learning to Solve NP-Complete Problems - A Graph Neural Network for the Decision TSP

Oct 31, 2018

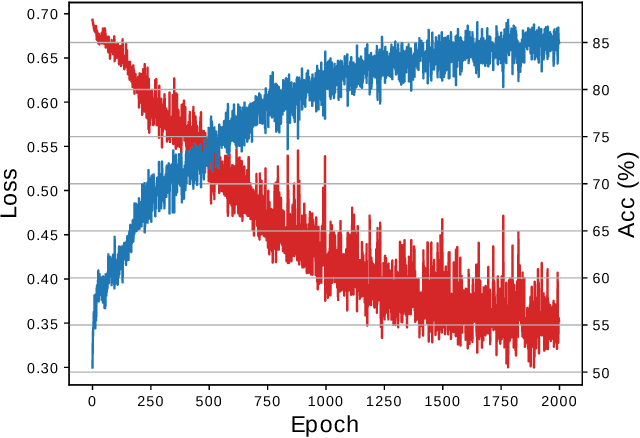

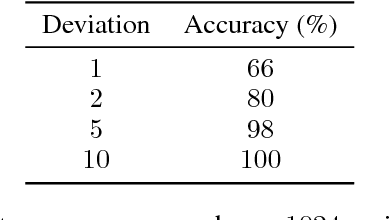

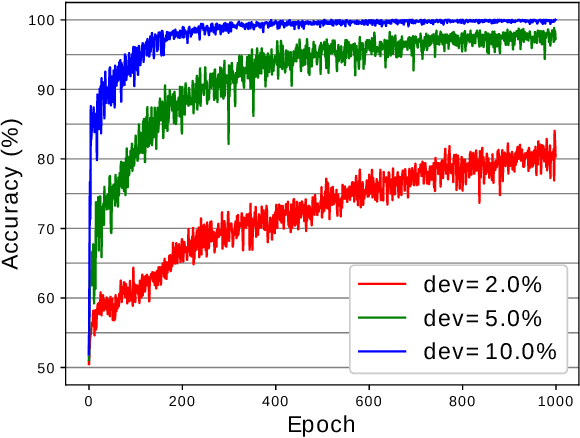

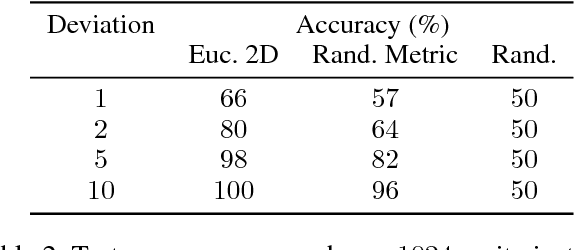

Abstract:Graph Neural Networks (GNN) are a promising technique for bridging differential programming and combinatorial domains. GNNs employ trainable modules which can be assembled in different configurations that reflect the relational structure of each problem instance. In this paper, we show that GNNs can learn to solve, with very little supervision, the decision variant of the Traveling Salesperson Problem (TSP), a highly relevant $\mathcal{NP}$-Complete problem. Our model is trained to function as an effective message-passing algorithm in which edges (embedded with their weights) communicate with vertices for a number of iterations after which the model is asked to decide whether a route with cost $<C$ exists. We show that such a network can be trained with sets of dual examples: given the optimal tour cost $C^{*}$, we produce one decision instance with target cost $x\%$ smaller and one with target cost $x\%$ larger than $C^{*}$. We were able to obtain $80\%$ accuracy training with $-2\%,+2\%$ deviations, and the same trained model can generalize for more relaxed deviations with increasing performance. We also show that the model is capable of generalizing for larger problem sizes. Finally, we provide a method for predicting the optimal route cost within $2\%$ deviation from the ground truth. In summary, our work shows that Graph Neural Networks are powerful enough to solve $\mathcal{NP}$-Complete problems which combine symbolic and numeric data.
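
The dual-example construction described above can be sketched in a few lines. The sketch assumes Euclidean instances given as 2D points and finds the optimal cost $C^{*}$ by brute force, which is only feasible for tiny instances; the function names and the `deviation` parameter are illustrative and not taken from the paper.

```python
from itertools import permutations
from math import dist

def optimal_tour_cost(points):
    """Brute-force optimal tour cost C* (illustrative, tiny instances only)."""
    n = len(points)
    best = float("inf")
    for perm in permutations(range(1, n)):
        tour = (0, *perm, 0)  # fix vertex 0 as the start and end
        cost = sum(dist(points[a], points[b]) for a, b in zip(tour, tour[1:]))
        best = min(best, cost)
    return best

def dual_examples(points, deviation=0.02):
    """Build two decision instances (points, target cost, label) from one graph.
    The label answers: does a route with cost < target exist?"""
    c_star = optimal_tour_cost(points)
    return [
        (points, (1 - deviation) * c_star, False),  # target below C*: no
        (points, (1 + deviation) * c_star, True),   # target above C*: yes
    ]

# Unit square: the optimal tour walks the perimeter.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
negative, positive = dual_examples(square, deviation=0.02)
```

Each instance therefore contributes one positive and one negative example that differ only in the target cost, so the labels cannot be predicted from the graph alone.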

Multitask Learning on Graph Neural Networks - Learning Multiple Graph Centrality Measures with a Unified Network

Oct 03, 2018

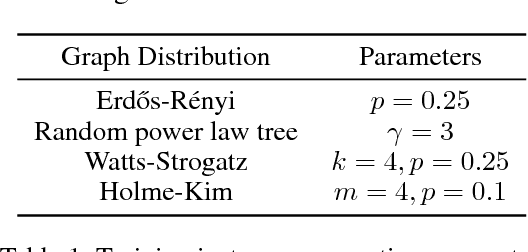

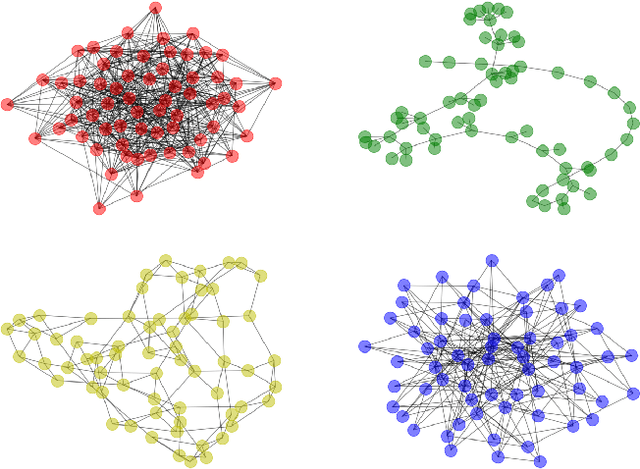

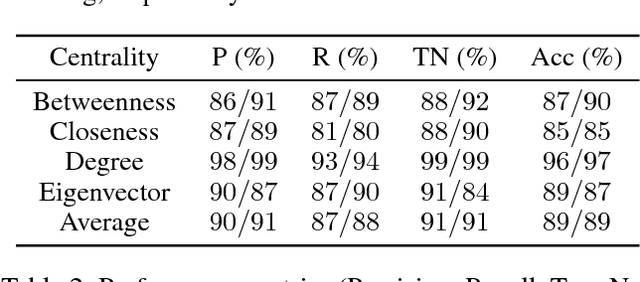

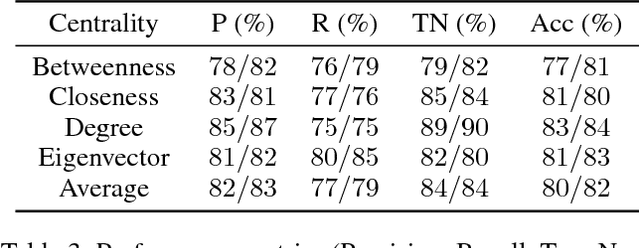

Abstract:The application of deep learning to symbolic domains remains an active research endeavour. Graph neural networks (GNN), consisting of trained neural modules which can be arranged in different topologies at run time, are sound alternatives to tackle relational problems which lend themselves to graph representations. In this paper, we show that GNNs are capable of multitask learning, which can be naturally enforced by training the model to refine a single set of multidimensional embeddings $\in \mathbb{R}^d$ and decode them into multiple outputs by connecting MLPs at the end of the pipeline. We demonstrate the multitask learning capability of the model in the relevant relational problem of estimating network centrality measures, i.e. answering the question "is vertex $v_1$ more central than vertex $v_2$ given centrality $c$?". We then show that a GNN can be trained to develop a *lingua franca* of vertex embeddings from which all relevant information about any of the trained centrality measures can be decoded. The proposed model achieves $89\%$ accuracy on a test dataset of random instances with up to 128 vertices and is shown to generalise to larger problem sizes. The model is also shown to obtain reasonable accuracy on a dataset of real world instances with up to 4k vertices, vastly surpassing the sizes of the largest instances with which the model was trained ($n=128$). Finally, we believe that our contributions attest to the potential of GNNs in symbolic domains in general and in relational learning in particular.
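
The pairwise question "is $v_1$ more central than $v_2$ given centrality $c$?" can be turned into training labels along the following lines. This is a sketch under assumptions: the choice of degree and closeness as the measures, the adjacency-list representation, and all function names are illustrative rather than taken from the paper.

```python
from collections import deque
from itertools import permutations

def degree(adj):
    """Degree centrality (unnormalised): number of neighbours."""
    return {v: len(nbrs) for v, nbrs in adj.items()}

def closeness(adj):
    """Closeness centrality: reachable vertices divided by total BFS distance."""
    scores = {}
    for src in adj:
        hops = {src: 0}
        queue = deque([src])
        while queue:
            v = queue.popleft()
            for u in adj[v]:
                if u not in hops:
                    hops[u] = hops[v] + 1
                    queue.append(u)
        total = sum(hops.values())
        scores[src] = (len(hops) - 1) / total if total else 0.0
    return scores

def comparison_labels(adj, measures):
    """Label every ordered pair (v1, v2) per measure: is v1 more central?"""
    labels = {}
    for name, measure in measures.items():
        score = measure(adj)
        for v1, v2 in permutations(adj, 2):
            labels[(name, v1, v2)] = score[v1] > score[v2]
    return labels

# Path graph 0 - 1 - 2: the middle vertex is the most central.
path = {0: [1], 1: [0, 2], 2: [1]}
labels = comparison_labels(path, {"degree": degree, "closeness": closeness})
```

In a multitask setting, one decoder per measure would be trained against the labels for that measure while all decoders share the same vertex embeddings.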
