Paul Verschure

High-fidelity social learning via shared episodic memories enhances collaborative foraging through mnemonic convergence

Dec 28, 2024

Abstract: Social learning, a cornerstone of cultural evolution, enables individuals to acquire knowledge by observing and imitating others. At the heart of its efficacy lies episodic memory, which encodes specific behavioral sequences to facilitate learning and decision-making. This study explores the interrelation between episodic memory and social learning in collective foraging. Using Sequential Episodic Control (SEC) agents capable of sharing complete behavioral sequences stored in episodic memory, we investigate how variations in the frequency and fidelity of social learning influence collaborative foraging performance. Furthermore, we analyze the effects of social learning on the content and distribution of episodic memories across the group. High-fidelity social learning is shown to consistently enhance resource collection efficiency and distribution, with benefits sustained across memory lengths. In contrast, low-fidelity learning fails to outperform nonsocial learning, spreading diverse but ineffective mnemonic patterns. Novel analyses using mnemonic metrics reveal that high-fidelity social learning also fosters mnemonic group alignment and equitable resource distribution, while low-fidelity conditions increase mnemonic diversity without translating to performance gains. Additionally, we identify an optimal range for episodic memory length in this task, beyond which performance plateaus. These findings underscore the critical effects of social learning on mnemonic group alignment and distribution and highlight the potential of neurocomputational models to probe the cognitive mechanisms driving cultural evolution.

Awareness in robotics: An early perspective from the viewpoint of the EIC Pathfinder Challenge "Awareness Inside"

Feb 14, 2024

Abstract: Consciousness has been historically a heavily debated topic in engineering, science, and philosophy. On the contrary, awareness had less success in raising the interest of scholars in the past. However, things are changing as more and more researchers are getting interested in answering questions concerning what awareness is and how it can be artificially generated. The landscape is rapidly evolving, with multiple voices and interpretations of the concept being conceived and techniques being developed. The goal of this paper is to summarize and discuss the ones among these voices connected with projects funded by the EIC Pathfinder Challenge called "Awareness Inside", a nonrecurring call for proposals within Horizon Europe designed specifically for fostering research on natural and synthetic awareness. In this perspective, we dedicate special attention to challenges and promises of applying synthetic awareness in robotics, as the development of mature techniques in this new field is expected to have a special impact on generating more capable and trustworthy embodied systems.

Distributed Adaptive Control: An ideal Cognitive Architecture candidate for managing a robotic recycling plant

Dec 23, 2020

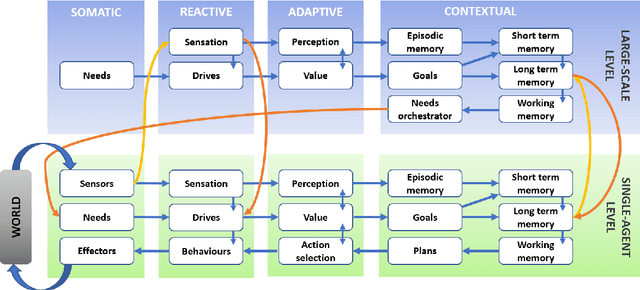

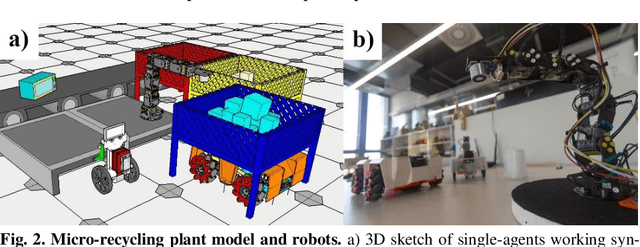

Abstract: In the past decade, society has experienced notable growth in a variety of technological areas. However, the Fourth Industrial Revolution has not been embraced yet. Industry 4.0 imposes several challenges which include the necessity of new architectural models to tackle the uncertainty that open environments represent to cyber-physical systems (CPS). Waste Electrical and Electronic Equipment (WEEE) recycling plants stand for one of such open environments. Here, CPSs must work harmoniously in a changing environment, interacting with similar and not so similar CPSs, and adaptively collaborating with human workers. In this paper, we support the Distributed Adaptive Control (DAC) theory as a suitable Cognitive Architecture for managing a recycling plant. Specifically, a recursive implementation of DAC (between both single-agent and large-scale levels) is proposed to meet the expected demands of the European Project HR-Recycler. Additionally, with the aim of having a realistic benchmark for future implementations of the recursive DAC, a micro-recycling plant prototype is presented.

Modeling Theory of Mind in Multi-Agent Games Using Adaptive Feedback Control

May 29, 2019

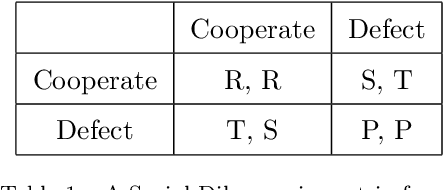

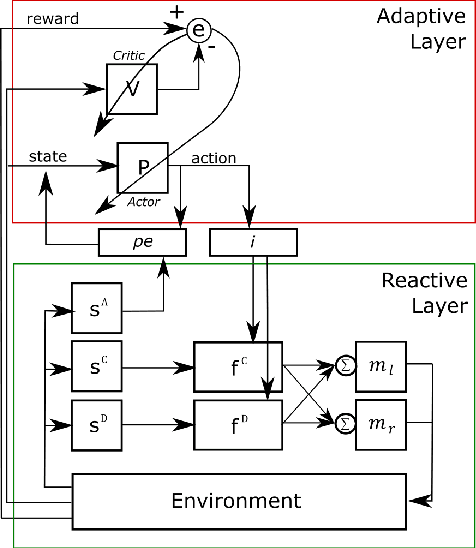

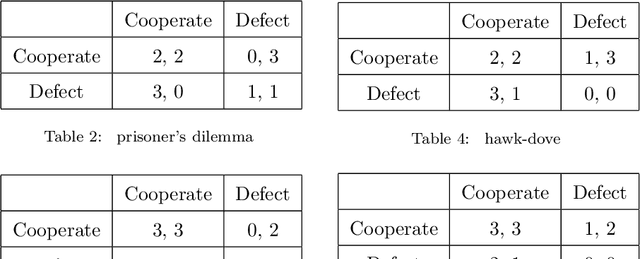

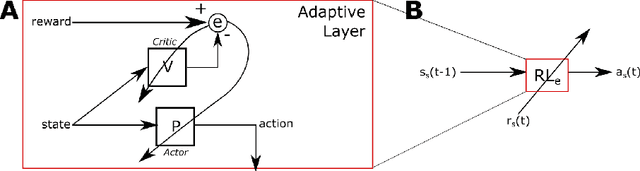

Abstract: A major challenge in cognitive science and AI has been to understand how autonomous agents might acquire and predict behavioral and mental states of other agents in the course of complex social interactions. How does such an agent model the goals, beliefs, and actions of other agents it interacts with? What are the computational principles to model a Theory of Mind (ToM)? Deep learning approaches to address these questions fall short of a better understanding of the problem. In part, this is due to the black-box nature of deep networks, wherein computational mechanisms of ToM are not readily revealed. Here, we consider alternative hypotheses seeking to model how the brain might realize a ToM. In particular, we propose embodied and situated agent models based on distributed adaptive control theory to predict actions of other agents in five different game theoretic tasks (Harmony Game, Hawk-Dove, Stag-Hunt, Prisoner's Dilemma and Battle of the Exes). Our multi-layer control models implement top-down predictions from adaptive to reactive layers of control and bottom-up error feedback from reactive to adaptive layers. We test cooperative and competitive strategies among seven different agent models (cooperative, greedy, tit-for-tat, reinforcement-based, rational, predictive and other's-model agents). We show that, compared to pure reinforcement-based strategies, probabilistic learning agents modeled on rational, predictive and other's-model phenotypes perform better in game-theoretic metrics across tasks. Our autonomous multi-agent models capture systems-level processes underlying a ToM and highlight architectural principles of ToM from a control-theoretic perspective.

Modeling the Formation of Social Conventions in Multi-Agent Populations

Feb 16, 2018

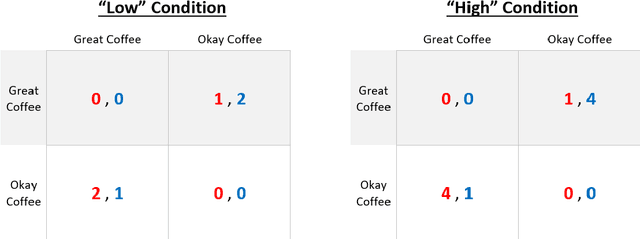

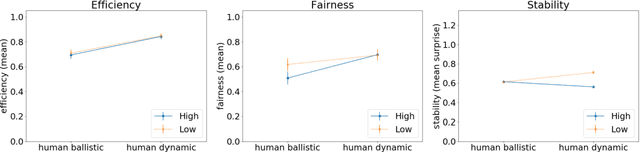

Abstract: In order to understand the formation of social conventions we need to know the specific role of control and learning in multi-agent systems. To advance in this direction, we propose, within the framework of the Distributed Adaptive Control (DAC) theory, a novel Control-based Reinforcement Learning architecture (CRL) that can account for the acquisition of social conventions in multi-agent populations that are solving a benchmark social decision-making problem. Our new CRL architecture, as a concrete realization of DAC multi-agent theory, implements a low-level sensorimotor control loop handling the agent's reactive behaviors (pre-wired reflexes), along with a layer based on model-free reinforcement learning that maximizes long-term reward. We apply CRL in a multi-agent game-theoretic task in which coordination must be achieved in order to find an optimal solution. We show that our CRL architecture is able to both find optimal solutions in discrete and continuous time and reproduce human experimental data on standard game-theoretic metrics such as efficiency in acquiring rewards, fairness in reward distribution and stability of convention formation.

The Morphospace of Consciousness

Jun 08, 2017

Abstract: Given recent proposals to synthesize consciousness, how many forms of conscious machines can one distinguish and on what grounds? Based on current clinical scales of consciousness, that measure cognitive awareness and wakefulness, we take a perspective on how contemporary artificially intelligent machines and synthetically engineered life forms would measure on these scales. To do so, we argue that awareness and wakefulness can be associated to computational and autonomous complexity respectively. Then, building on insights from cognitive robotics, we ask what function consciousness serves, and interpret it as an evolutionary game-theoretic strategy. We make the case for a third type of complexity necessary for describing consciousness, namely, social complexity. Having identified these complexity types, allows us to represent both, biological and synthetic systems in a common morphospace. This suggests an embodiment-based taxonomy of consciousness. In particular, we distinguish four forms of consciousness, based on embodiment: biological, synthetic, group (resulting from group interactions) and simulated consciousness (embodied by virtual agents within a simulated reality). Such a taxonomy is useful for studying comparative signatures of consciousness across domains, in order to highlight design principles necessary to engineer conscious machines. This is particularly relevant in the light of recent developments at the crossroads of neuroscience, biomedical engineering, artificial intelligence and biomimetics.

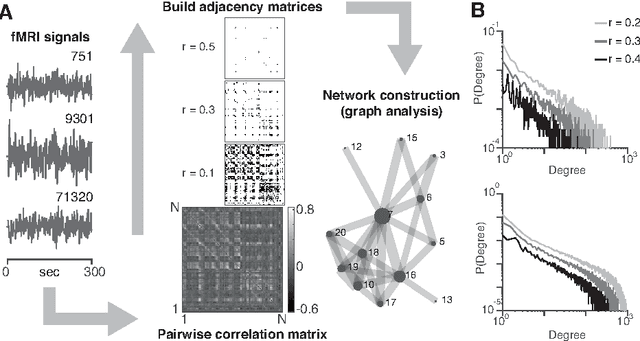

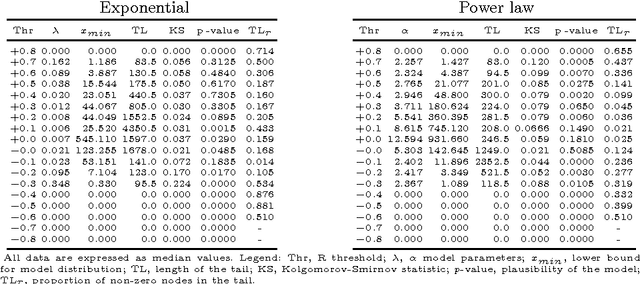

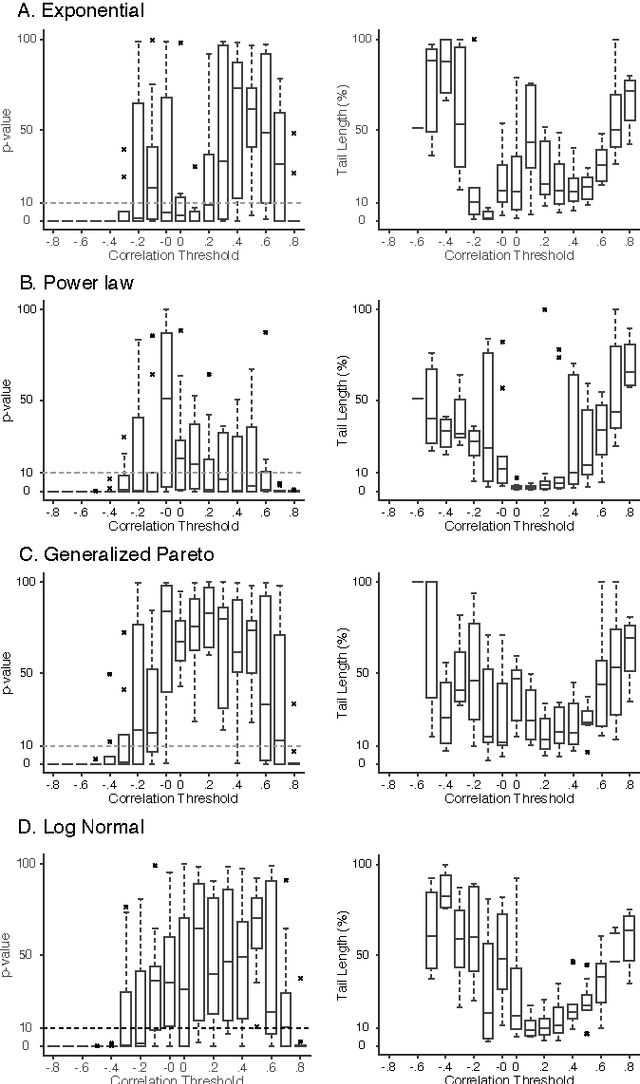

Scaling Properties of Human Brain Functional Networks

Feb 02, 2017

Abstract: We investigate scaling properties of human brain functional networks in the resting-state. Analyzing network degree distributions, we statistically test whether their tails scale as power-law or not. Initial studies, based on least-squares fitting, were shown to be inadequate for precise estimation of power-law distributions. Subsequently, methods based on maximum-likelihood estimators have been proposed and applied to address this question. Nevertheless, no clear consensus has emerged, mainly because results have shown substantial variability depending on the data-set used or its resolution. In this study, we work with high-resolution data (10K nodes) from the Human Connectome Project and take into account network weights. We test for the power-law, exponential, log-normal and generalized Pareto distributions. Our results show that the statistics generally do not support a power-law, but instead these degree distributions tend towards the thin-tail limit of the generalized Pareto model. This may have implications for the number of hubs in human brain functional networks.

* International Conference on Artificial Neural Networks - ICANN 2016
