Xerxes D. Arsiwalla

Qualia and the Formal Structure of Meaning

May 02, 2024

Abstract: This work explores the hypothesis that subjectively attributed meaning constitutes the phenomenal content of conscious experience. That is, phenomenal content is semantic. This form of subjective meaning manifests as an intrinsic and non-representational character of qualia. Empirically, subjective meaning is ubiquitous in conscious experiences. We point to phenomenological studies that lend evidence to support this. Furthermore, this notion of meaning closely relates to what Frege refers to as "sense", in metaphysics and philosophy of language. It also aligns with Peirce's "interpretant", in semiotics. We discuss how Frege's sense can also be extended to the raw feels of consciousness. Sense and reference both play a role in phenomenal experience. Moreover, within the context of the mind-matter relation, we provide a formalization of subjective meaning associated to one's mental representations. Identifying the precise maps between the physical and mental domains, we argue that syntactic and semantic structures transcend language, and are realized within each of these domains. Formally, meaning is a relational attribute, realized via a map that interprets syntactic structures of a formal system within an appropriate semantic space. The image of this map within the mental domain is what is relevant for experience, and thus comprises the phenomenal content of qualia. We conclude with possible implications this may have for experience-based theories of consciousness.

A Cognitive Account of the Puzzle of Ideography

Apr 29, 2023

Abstract: In this commentary article on 'The Puzzle of Ideography' by Morin, we put forth a new cognitive account of the puzzle of ideography that complements Morin's standardization account. Efficient standardization of spoken language is phenomenologically attributed to a modality effect coupled with chunking of cognitive representations, further aided by multi-sensory integration and the serialized nature of attention. These cognitive mechanisms are crucial for explaining why languages dominate graphic codes for general-purpose human communication.

Moral Dilemmas for Artificial Intelligence: a position paper on an application of Compositional Quantum Cognition

Nov 22, 2019

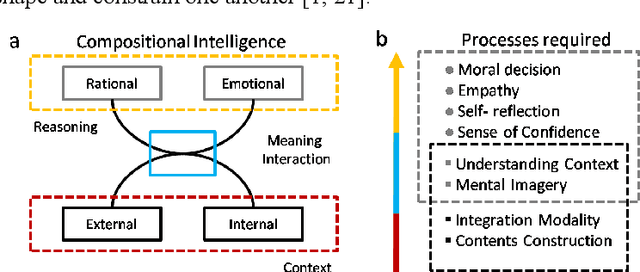

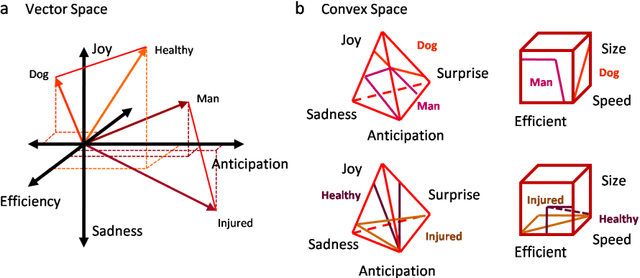

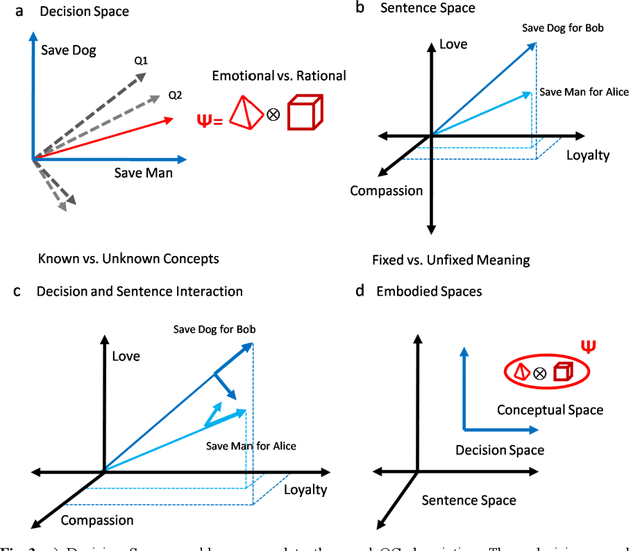

Abstract: Traditionally, the way one evaluates the performance of an Artificial Intelligence (AI) system is via a comparison to human performance in specific tasks, treating humans as a reference for high-level cognition. However, these comparisons leave out important features of human intelligence: the capability to transfer knowledge and make complex decisions based on emotional and rational reasoning. These decisions are influenced by current inferences as well as prior experiences, making the decision process strongly subjective and apparently biased. In this context, a definition of compositional intelligence is necessary to incorporate these features in future AI tests. Here, a concrete implementation of this will be suggested, using recent developments in quantum cognition, natural language and compositional meaning of sentences, thanks to categorical compositional models of meaning.

* 15 pages, 3 figures, Conference paper at Quantum Interaction 2018, Nice, France. Published in Lecture Notes in Computer Science, vol 11690, Springer, Cham. Online ISBN 978-3-030-35895-2

Modeling Theory of Mind in Multi-Agent Games Using Adaptive Feedback Control

May 29, 2019

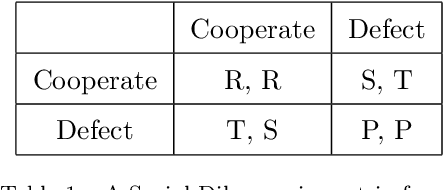

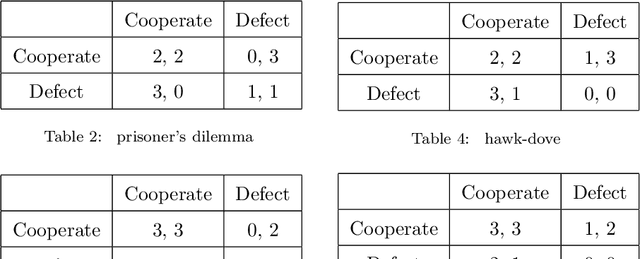

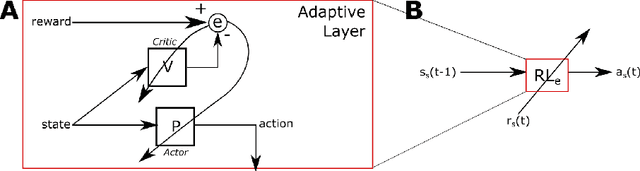

Abstract: A major challenge in cognitive science and AI has been to understand how autonomous agents might acquire and predict behavioral and mental states of other agents in the course of complex social interactions. How does such an agent model the goals, beliefs, and actions of other agents it interacts with? What are the computational principles to model a Theory of Mind (ToM)? Deep learning approaches to address these questions fall short of a better understanding of the problem. In part, this is due to the black-box nature of deep networks, wherein computational mechanisms of ToM are not readily revealed. Here, we consider alternative hypotheses seeking to model how the brain might realize a ToM. In particular, we propose embodied and situated agent models based on distributed adaptive control theory to predict actions of other agents in five different game theoretic tasks (Harmony Game, Hawk-Dove, Stag-Hunt, Prisoner's Dilemma and Battle of the Exes). Our multi-layer control models implement top-down predictions from adaptive to reactive layers of control and bottom-up error feedback from reactive to adaptive layers. We test cooperative and competitive strategies among seven different agent models (cooperative, greedy, tit-for-tat, reinforcement-based, rational, predictive and other's-model agents). We show that, compared to pure reinforcement-based strategies, probabilistic learning agents modeled on rational, predictive and other's-model phenotypes perform better in game-theoretic metrics across tasks. Our autonomous multi-agent models capture systems-level processes underlying a ToM and highlight architectural principles of ToM from a control-theoretic perspective.

Modeling the Formation of Social Conventions in Multi-Agent Populations

Feb 16, 2018

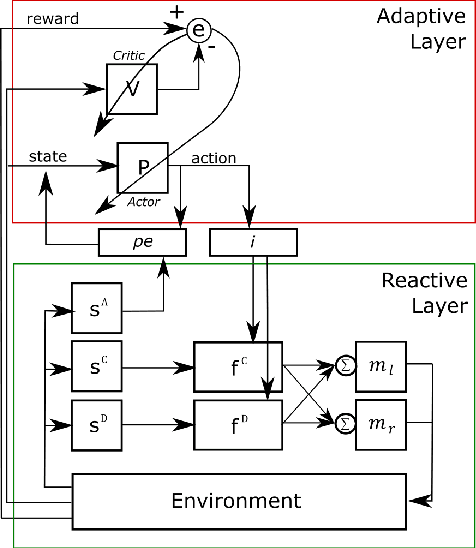

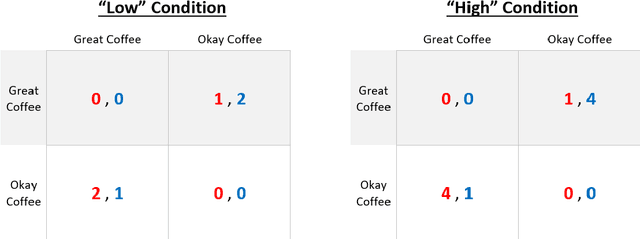

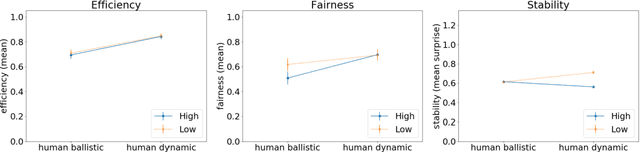

Abstract: In order to understand the formation of social conventions, we need to know the specific role of control and learning in multi-agent systems. To advance in this direction, we propose, within the framework of the Distributed Adaptive Control (DAC) theory, a novel Control-based Reinforcement Learning architecture (CRL) that can account for the acquisition of social conventions in multi-agent populations that are solving a benchmark social decision-making problem. Our new CRL architecture, as a concrete realization of DAC multi-agent theory, implements a low-level sensorimotor control loop handling the agent's reactive behaviors (pre-wired reflexes), along with a layer based on model-free reinforcement learning that maximizes long-term reward. We apply CRL in a multi-agent game-theoretic task in which coordination must be achieved in order to find an optimal solution. We show that our CRL architecture is able to both find optimal solutions in discrete and continuous time and reproduce human experimental data on standard game-theoretic metrics such as efficiency in acquiring rewards, fairness in reward distribution and stability of convention formation.

Embodied Artificial Intelligence through Distributed Adaptive Control: An Integrated Framework

Sep 18, 2017

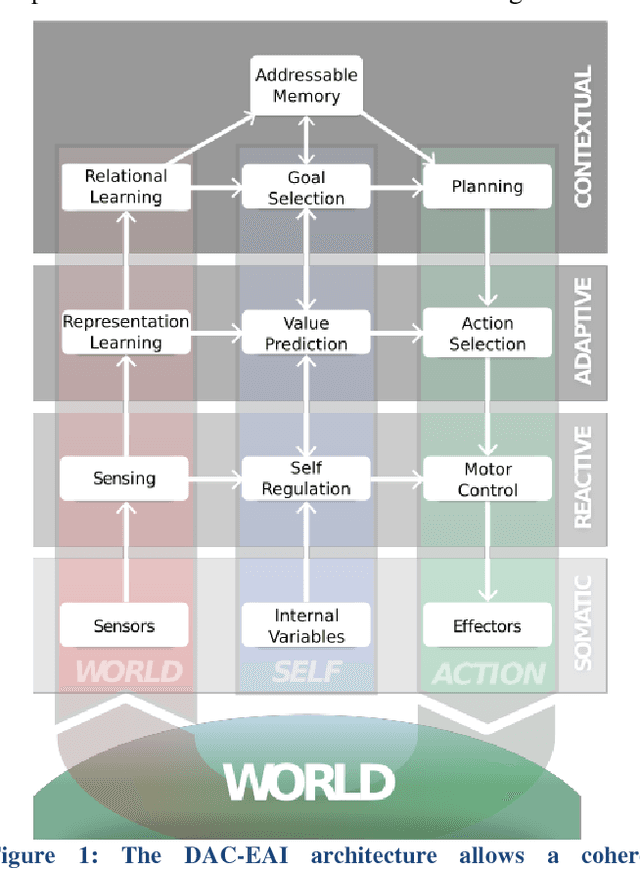

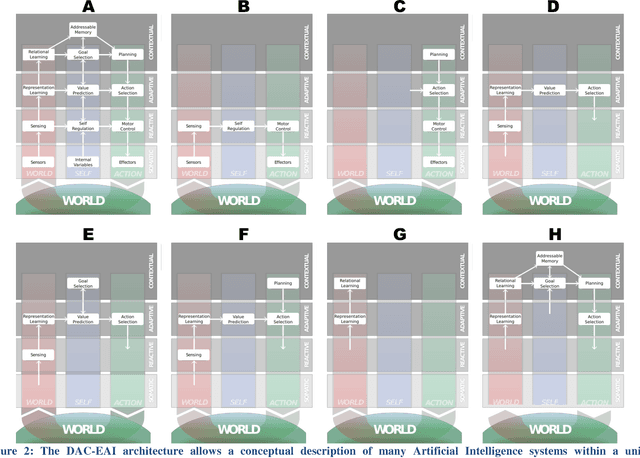

Abstract: In this paper, we argue that the future of Artificial Intelligence research resides in two keywords: integration and embodiment. We support this claim by analyzing the recent advances of the field. Regarding integration, we note that the most impactful recent contributions have been made possible through the integration of recent Machine Learning methods (based in particular on Deep Learning and Recurrent Neural Networks) with more traditional ones (e.g. Monte-Carlo tree search, goal babbling exploration or addressable memory systems). Regarding embodiment, we note that the traditional benchmark tasks (e.g. visual classification or board games) are becoming obsolete as state-of-the-art learning algorithms approach or even surpass human performance in most of them, which has recently encouraged the development of first-person 3D game platforms embedding realistic physics. Building upon this analysis, we first propose an embodied cognitive architecture integrating heterogeneous sub-fields of Artificial Intelligence into a unified framework. We demonstrate the utility of our approach by showing how major contributions of the field can be expressed within the proposed framework. We then claim that benchmarking environments need to reproduce ecologically-valid conditions for bootstrapping the acquisition of increasingly complex cognitive skills through the concept of a cognitive arms race between embodied agents.

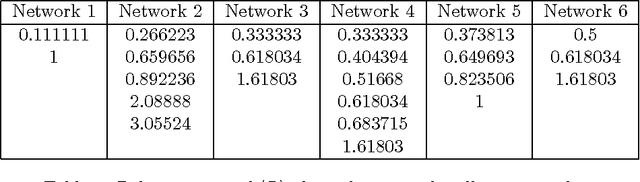

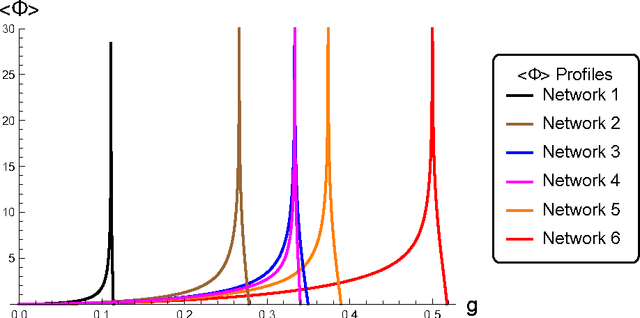

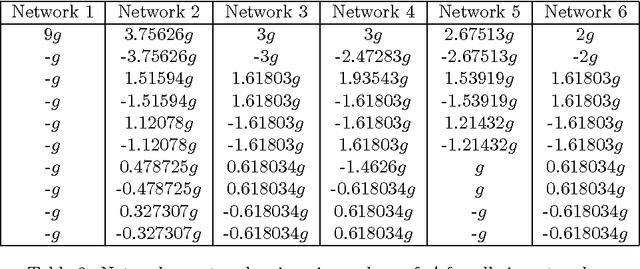

Spectral Modes of Network Dynamics Reveal Increased Informational Complexity Near Criticality

Jul 05, 2017

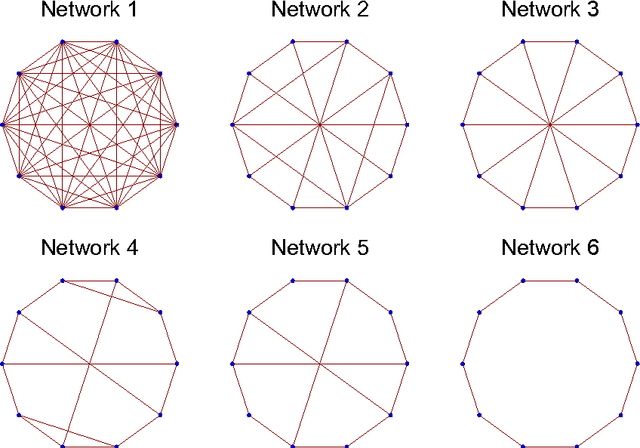

Abstract: What does the informational complexity of dynamical networked systems tell us about intrinsic mechanisms and functions of these complex systems? Recent complexity measures such as integrated information have sought to operationalize this problem taking a whole-versus-parts perspective, wherein one explicitly computes the amount of information generated by a network as a whole over and above that generated by the sum of its parts during state transitions. While several numerical schemes for estimating network integrated information exist, it is instructive to pursue an analytic approach that computes integrated information as a function of network weights. Our formulation of integrated information uses a Kullback-Leibler divergence between the multi-variate distribution on the set of network states versus the corresponding factorized distribution over its parts. Implementing stochastic Gaussian dynamics, we perform computations for several prototypical network topologies. Our findings show increased informational complexity near criticality, which remains consistent across network topologies. Spectral decomposition of the system's dynamics reveals how informational complexity is governed by eigenmodes of both the network's covariance and adjacency matrices. We find that as the dynamics of the system approach criticality, high integrated information is exclusively driven by the eigenmode corresponding to the leading eigenvalue of the covariance matrix, while sub-leading modes get suppressed. The implication of this result is that it might be favorable for complex dynamical networked systems such as the human brain or communication systems to operate near criticality so that efficient information integration might be achieved.
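As a rough illustration of the whole-versus-parts idea in this abstract, the sketch below computes the Kullback-Leibler divergence between a zero-mean Gaussian over network states and the product of its single-node marginals. This quantity (often called total correlation) is a simplified stand-in and not necessarily the integrated-information measure defined in the paper. The linear update x(t+1) = A x(t) + noise, the all-to-all topology, the coupling parameter `g`, and all function names are assumptions made for this example.

```python
import numpy as np

def stationary_covariance(A, noise_var=1.0, iters=5000):
    """Fixed point of Sigma = A Sigma A^T + noise_var * I.

    Assumes the spectral radius of A is below 1, so the iteration converges.
    """
    Q = noise_var * np.eye(A.shape[0])
    sigma = Q.copy()
    for _ in range(iters):
        sigma = A @ sigma @ A.T + Q
    return sigma

def total_correlation(sigma):
    """KL( N(0, Sigma) || prod_i N(0, Sigma_ii) ) in nats.

    For Gaussians this reduces to 0.5 * (sum_i log Sigma_ii - log det Sigma).
    """
    _, logdet = np.linalg.slogdet(sigma)
    return 0.5 * (np.sum(np.log(np.diag(sigma))) - logdet)

def coupled_network(n, g):
    """Hypothetical all-to-all network rescaled to spectral radius g."""
    W = np.ones((n, n))
    radius = np.max(np.abs(np.linalg.eigvals(W)))
    return g * W / radius

if __name__ == "__main__":
    # g -> 1 moves the linear dynamics toward the critical point.
    for g in (0.2, 0.5, 0.9, 0.99):
        sigma = stationary_covariance(coupled_network(4, g))
        print(f"g={g:.2f}  KL={total_correlation(sigma):.4f} nats")
```

In this toy setting the divergence grows as `g` approaches 1, because the leading eigenvalue of the covariance matrix diverges while the remaining eigenvalues stay fixed.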

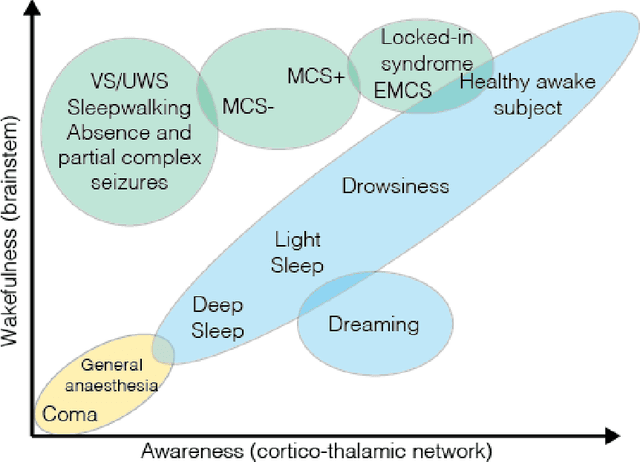

The Morphospace of Consciousness

Jun 08, 2017

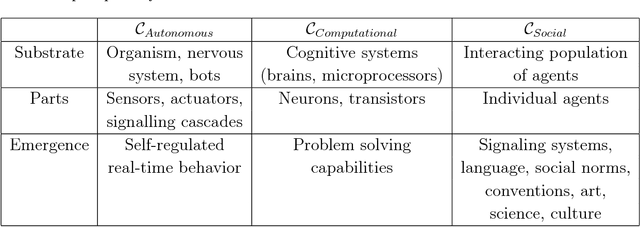

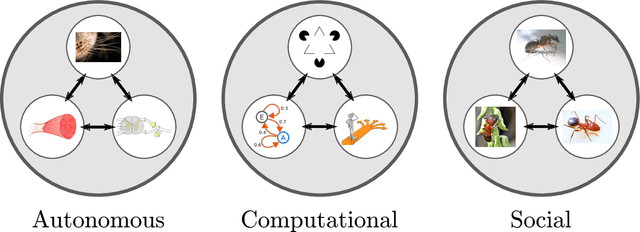

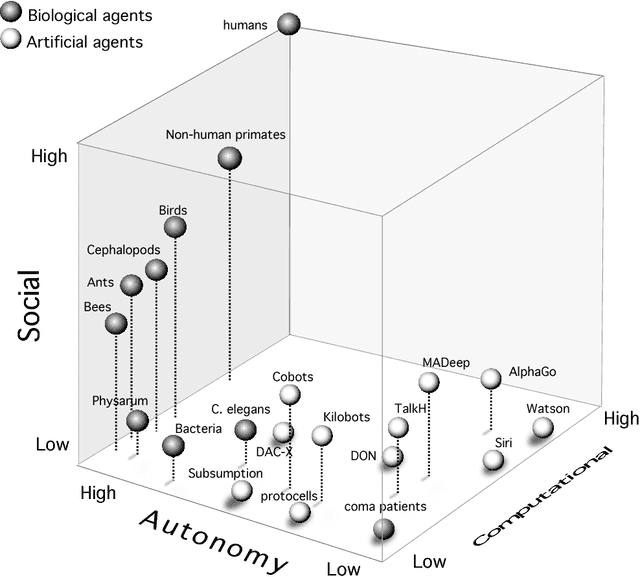

Abstract: Given recent proposals to synthesize consciousness, how many forms of conscious machines can one distinguish and on what grounds? Based on current clinical scales of consciousness that measure cognitive awareness and wakefulness, we take a perspective on how contemporary artificially intelligent machines and synthetically engineered life forms would measure on these scales. To do so, we argue that awareness and wakefulness can be associated with computational and autonomous complexity respectively. Then, building on insights from cognitive robotics, we ask what function consciousness serves, and interpret it as an evolutionary game-theoretic strategy. We make the case for a third type of complexity necessary for describing consciousness, namely, social complexity. Identifying these complexity types allows us to represent both biological and synthetic systems in a common morphospace. This suggests an embodiment-based taxonomy of consciousness. In particular, we distinguish four forms of consciousness, based on embodiment: biological, synthetic, group (resulting from group interactions) and simulated consciousness (embodied by virtual agents within a simulated reality). Such a taxonomy is useful for studying comparative signatures of consciousness across domains, in order to highlight design principles necessary to engineer conscious machines. This is particularly relevant in the light of recent developments at the crossroads of neuroscience, biomedical engineering, artificial intelligence and biomimetics.

Scaling Properties of Human Brain Functional Networks

Feb 02, 2017

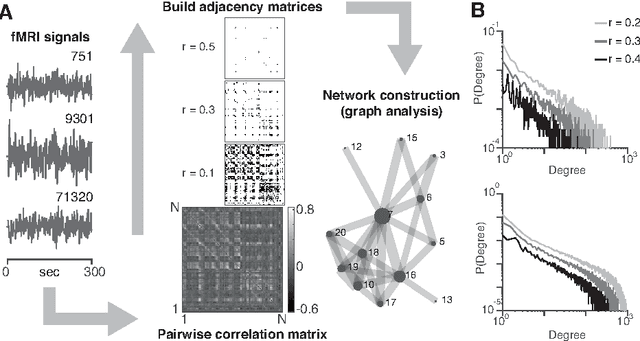

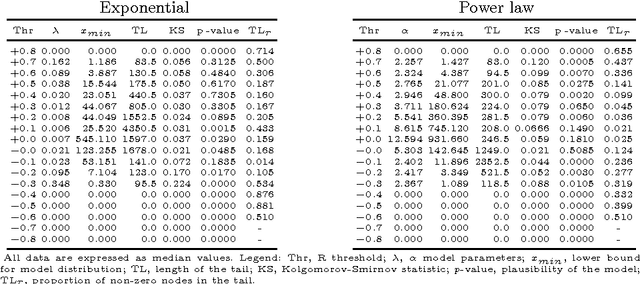

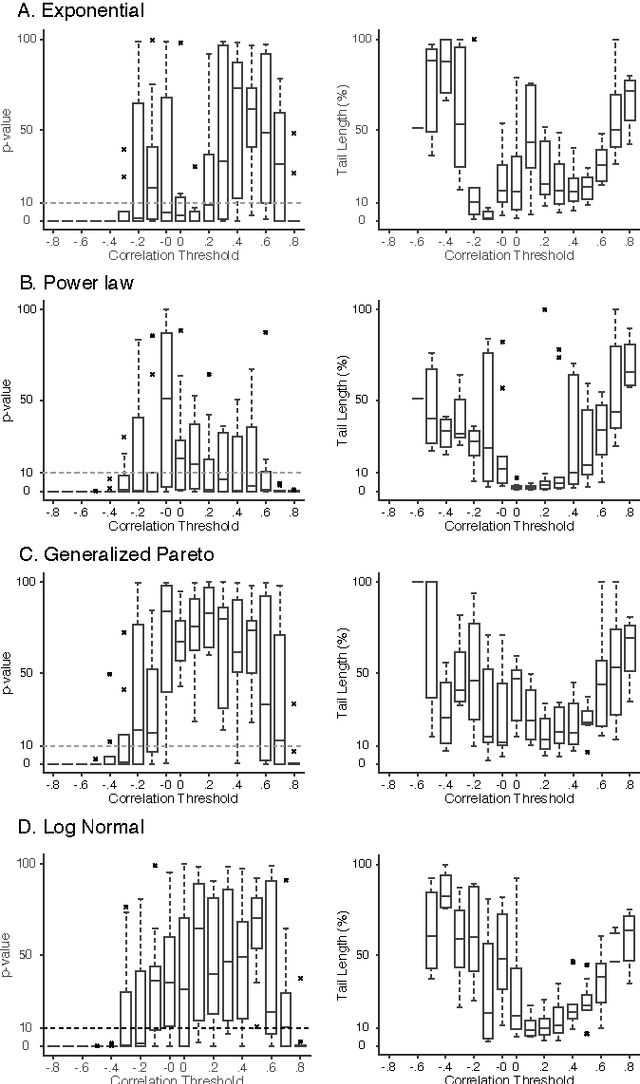

Abstract: We investigate scaling properties of human brain functional networks in the resting-state. Analyzing network degree distributions, we statistically test whether their tails follow a power law. Initial studies, based on least-squares fitting, were shown to be inadequate for precise estimation of power-law distributions. Subsequently, methods based on maximum-likelihood estimators have been proposed and applied to address this question. Nevertheless, no clear consensus has emerged, mainly because results have shown substantial variability depending on the data-set used or its resolution. In this study, we work with high-resolution data (10K nodes) from the Human Connectome Project and take into account network weights. We test for the power-law, exponential, log-normal and generalized Pareto distributions. Our results show that the statistics generally do not support a power-law, but instead these degree distributions tend towards the thin-tail limit of the generalized Pareto model. This may have implications for the number of hubs in human brain functional networks.

* International Conference on Artificial Neural Networks - ICANN 2016
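The abstract above contrasts least-squares fitting with maximum-likelihood estimation of power-law tails. As a minimal sketch of the latter, the code below applies the standard continuous maximum-likelihood estimator for a power-law exponent, alpha = 1 + n / sum(ln(x_i / x_min)), to synthetic data. It does not reproduce the paper's pipeline: the comparison against exponential, log-normal and generalized Pareto models is omitted, `xmin` is taken as known, and the function names and test values are assumptions for illustration.

```python
import numpy as np

def powerlaw_alpha_mle(x, xmin):
    """Continuous power-law MLE for the exponent of p(x) ~ x^(-alpha), x >= xmin.

    Returns the estimate and its asymptotic standard error (alpha - 1) / sqrt(n).
    """
    tail = np.asarray(x, dtype=float)
    tail = tail[tail >= xmin]  # only the tail enters the likelihood
    n = tail.size
    alpha = 1.0 + n / np.sum(np.log(tail / xmin))
    return alpha, (alpha - 1.0) / np.sqrt(n)

def sample_powerlaw(alpha, xmin, size, rng):
    """Inverse-transform sampling from a continuous power law (synthetic data)."""
    u = rng.random(size)
    return xmin * (1.0 - u) ** (-1.0 / (alpha - 1.0))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    data = sample_powerlaw(alpha=2.5, xmin=1.0, size=20000, rng=rng)
    alpha_hat, stderr = powerlaw_alpha_mle(data, xmin=1.0)
    print(f"estimated alpha = {alpha_hat:.3f} +/- {stderr:.3f}")
```

A good exponent estimate alone does not show that a power law is the right model; that requires the goodness-of-fit and model-comparison tests the abstract refers to.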
