Jordi-Ysard Puigbò

Modeling Theory of Mind in Multi-Agent Games Using Adaptive Feedback Control

May 29, 2019

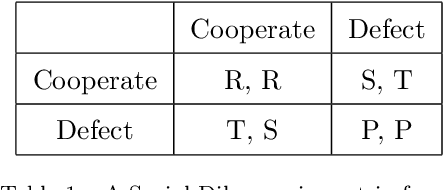

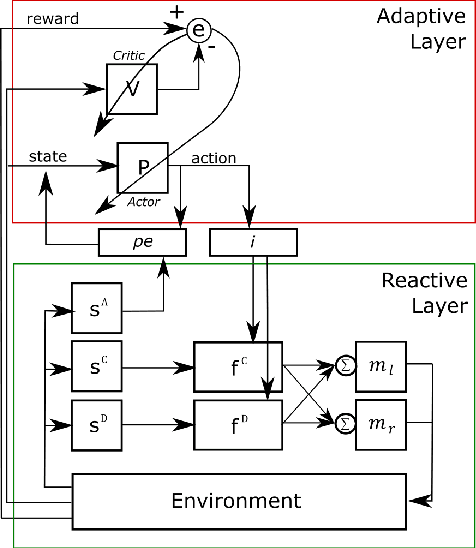

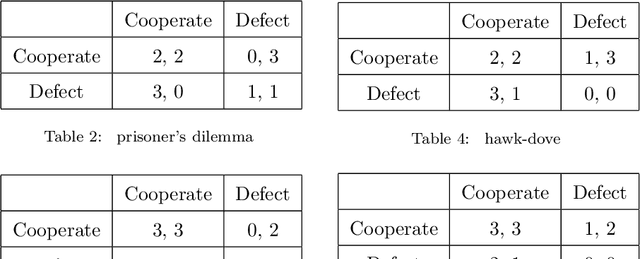

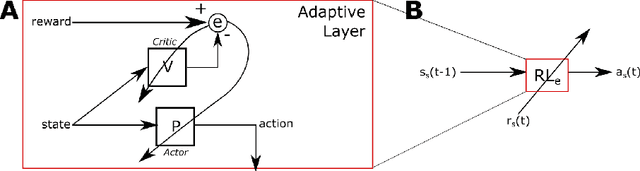

Abstract: A major challenge in cognitive science and AI has been to understand how autonomous agents might acquire and predict behavioral and mental states of other agents in the course of complex social interactions. How does such an agent model the goals, beliefs, and actions of other agents it interacts with? What are the computational principles to model a Theory of Mind (ToM)? Deep learning approaches to address these questions fall short of a better understanding of the problem. In part, this is due to the black-box nature of deep networks, wherein computational mechanisms of ToM are not readily revealed. Here, we consider alternative hypotheses seeking to model how the brain might realize a ToM. In particular, we propose embodied and situated agent models based on distributed adaptive control theory to predict actions of other agents in five different game theoretic tasks (Harmony Game, Hawk-Dove, Stag-Hunt, Prisoner's Dilemma and Battle of the Exes). Our multi-layer control models implement top-down predictions from adaptive to reactive layers of control and bottom-up error feedback from reactive to adaptive layers. We test cooperative and competitive strategies among seven different agent models (cooperative, greedy, tit-for-tat, reinforcement-based, rational, predictive and other's-model agents). We show that, compared to pure reinforcement-based strategies, probabilistic learning agents modeled on rational, predictive and other's-model phenotypes perform better in game-theoretic metrics across tasks. Our autonomous multi-agent models capture systems-level processes underlying a ToM and highlight architectural principles of ToM from a control-theoretic perspective.

Embodied Artificial Intelligence through Distributed Adaptive Control: An Integrated Framework

Sep 18, 2017

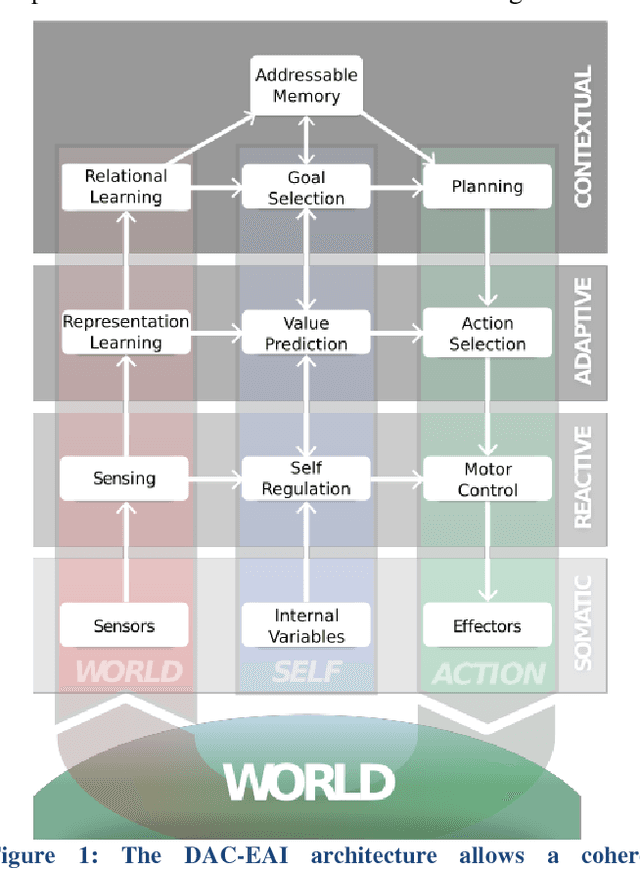

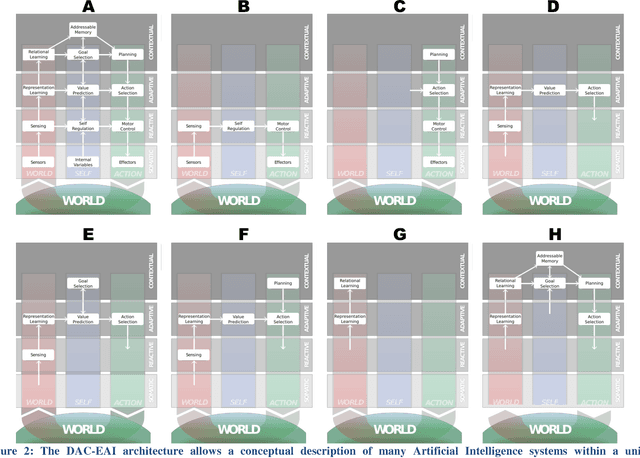

Abstract: In this paper, we argue that the future of Artificial Intelligence research resides in two keywords: integration and embodiment. We support this claim by analyzing the recent advances of the field. Regarding integration, we note that the most impactful recent contributions have been made possible through the integration of recent Machine Learning methods (based in particular on Deep Learning and Recurrent Neural Networks) with more traditional ones (e.g. Monte-Carlo tree search, goal babbling exploration or addressable memory systems). Regarding embodiment, we note that the traditional benchmark tasks (e.g. visual classification or board games) are becoming obsolete as state-of-the-art learning algorithms approach or even surpass human performance in most of them, having recently encouraged the development of first-person 3D game platforms embedding realistic physics. Building upon this analysis, we first propose an embodied cognitive architecture integrating heterogeneous sub-fields of Artificial Intelligence into a unified framework. We demonstrate the utility of our approach by showing how major contributions of the field can be expressed within the proposed framework. We then claim that benchmarking environments need to reproduce ecologically-valid conditions for bootstrapping the acquisition of increasingly complex cognitive skills through the concept of a cognitive arms race between embodied agents.
