Paolo Dini

Self-Supervised Learning at the Edge: The Cost of Labeling

Jul 09, 2025

Abstract:Contrastive learning (CL) has recently emerged as an alternative to traditional supervised machine learning solutions by enabling rich representations from unstructured and unlabeled data. However, CL and, more broadly, self-supervised learning (SSL) methods often demand a large amount of data and computational resources, posing challenges for deployment on resource-constrained edge devices. In this work, we explore the feasibility and efficiency of SSL techniques for edge-based learning, focusing on trade-offs between model performance and energy efficiency. In particular, we analyze how different SSL techniques adapt to limited computational, data, and energy budgets, evaluating their effectiveness in learning robust representations under resource-constrained settings. Moreover, we also consider the energy costs involved in labeling data and assess how semi-supervised learning may assist in reducing the overall energy consumed to train CL models. Through extensive experiments, we demonstrate that tailored SSL strategies can achieve competitive performance while reducing resource consumption by up to 4X, underscoring their potential for energy-efficient learning at the edge.

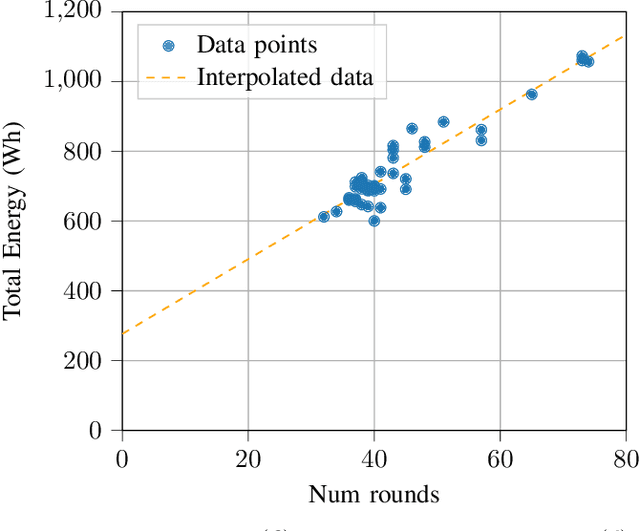

Energy-Efficient Federated Learning for AIoT using Clustering Methods

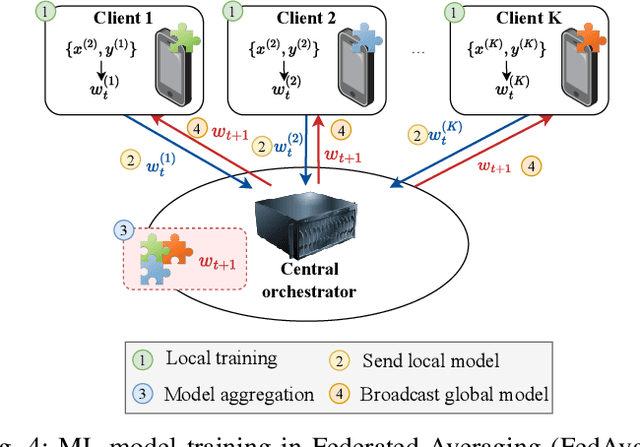

May 14, 2025

Abstract:While substantial research has been devoted to optimizing model performance, convergence rates, and communication efficiency, the energy implications of federated learning (FL) within Artificial Intelligence of Things (AIoT) scenarios are often overlooked in the existing literature. This study examines the energy consumed during the FL process, focusing on three main energy-intensive processes: pre-processing, communication, and local learning, all contributing to the overall energy footprint. We rely on the observation that device/client selection is crucial for speeding up the convergence of model training in a distributed AIoT setting and propose two clustering-informed methods. These clustering solutions are designed to group AIoT devices with similar label distributions, resulting in clusters composed of nearly homogeneous devices. Hence, our methods alleviate the heterogeneity often encountered in real-world distributed learning applications. Through extensive numerical experimentation, we demonstrate that our clustering strategies typically achieve high convergence rates while maintaining low energy consumption when compared to other recent approaches available in the literature.
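The idea of grouping devices by label distribution can be illustrated with a small sketch. The abstract does not specify the two clustering methods, so plain k-means over per-device label histograms is swapped in here as an assumption; the helper names (`label_distribution`, `kmeans`) and the toy `DEVICE_LABELS` data are hypothetical.

```python
from collections import Counter

def label_distribution(labels, num_classes):
    """Normalized histogram of the class labels held by one device."""
    counts = Counter(labels)
    total = len(labels)
    return [counts.get(c, 0) / total for c in range(num_classes)]

def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def kmeans(points, init_centroids, iterations=10):
    """Plain k-means (an assumed stand-in, not the paper's method)."""
    centroids = [list(c) for c in init_centroids]
    assignment = [0] * len(points)
    for _ in range(iterations):
        # Assign each device to the nearest centroid.
        assignment = [
            min(range(len(centroids)),
                key=lambda k: squared_distance(p, centroids[k]))
            for p in points
        ]
        # Move each centroid to the mean of its members (if any).
        for k in range(len(centroids)):
            members = [p for p, a in zip(points, assignment) if a == k]
            if members:
                centroids[k] = [sum(col) / len(members) for col in zip(*members)]
    return assignment

# Hypothetical toy data: four devices, two classes.
DEVICE_LABELS = [
    [0, 0, 0, 1],
    [0, 0, 1, 0],
    [1, 1, 1, 0],
    [1, 1, 0, 1],
]
POINTS = [label_distribution(labels, num_classes=2) for labels in DEVICE_LABELS]
# Seed the two centroids with devices 0 and 2 to keep the run deterministic.
CLUSTERS = kmeans(POINTS, init_centroids=[POINTS[0], POINTS[2]])
print(CLUSTERS)
```

Devices that end up in the same cluster hold similar label mixes, which is the property the abstract relies on when selecting clients.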

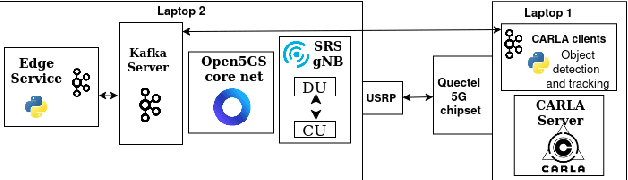

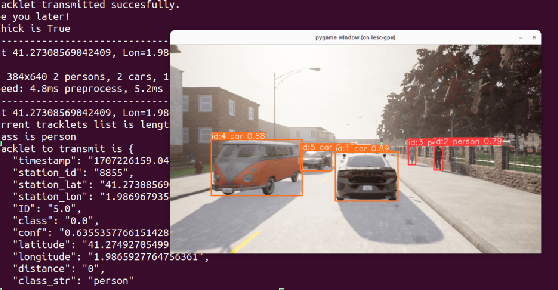

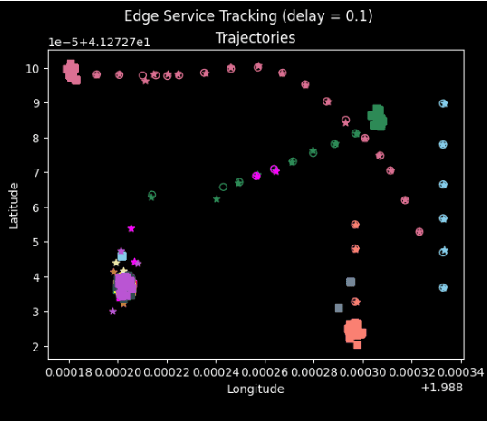

Multi-Object Tracking for Collision Avoidance Using Multiple Cameras in Open RAN Networks

Apr 09, 2025

Abstract:This paper deals with the multi-object detection and tracking problem, within the scope of open Radio Access Network (RAN), for collision avoidance in vehicular scenarios. To this end, a set of distributed intelligent agents collocated with cameras is considered. The fusion of detected objects is done at an edge service, considering Open RAN connectivity. Then, the edge service predicts the objects' trajectories for collision avoidance. Compared to related work, a more realistic Open RAN network is implemented and multiple cameras are used.

Energy Minimization for Participatory Federated Learning in IoT Analyzed via Game Theory

Mar 27, 2025

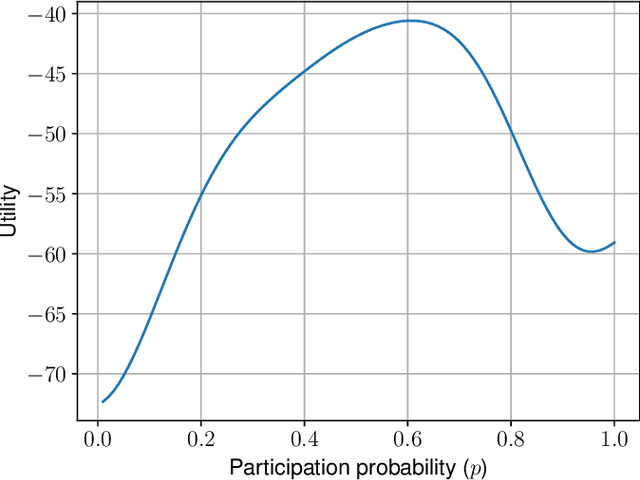

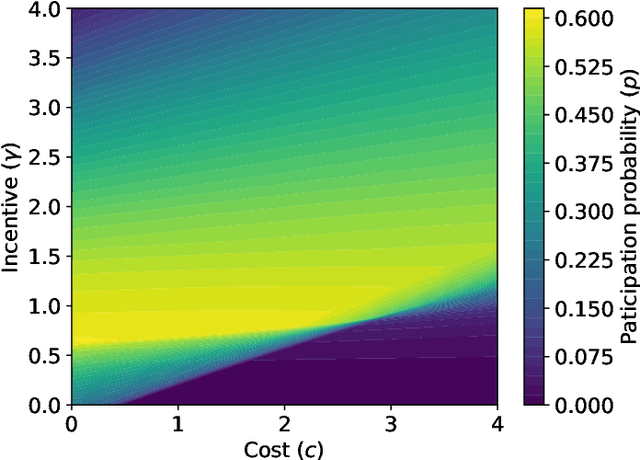

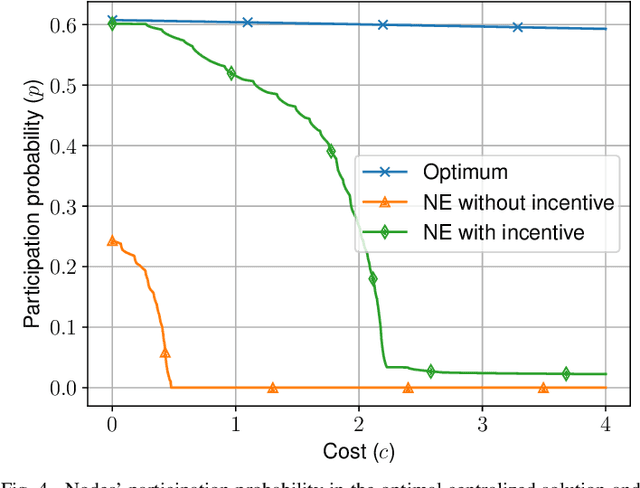

Abstract:The Internet of Things requires intelligent decision making in many scenarios. To this end, resources available at the individual nodes for sensing or computing, or both, can be leveraged. This results in approaches known as participatory sensing and federated learning, respectively. We investigate the simultaneous implementation of both, through a distributed approach based on empowering local nodes with game theoretic decision making. A global objective of energy minimization is combined with the individual node's optimization of local expenditure for sensing and transmitting data over multiple learning rounds. We present extensive evaluations of this technique, based on both a theoretical framework and experiments in a simulated network scenario with real data. Such a distributed approach can reach a desired level of accuracy for federated learning without centralized supervision of the data collector. However, depending on the weight attributed to the local costs of the single node, it may also result in a significantly high Price of Anarchy (from 1.28 onwards). Thus, we argue for the need for incentive mechanisms, possibly based on the Age of Information of the single nodes.

* 6 pages, 6 figures, 2 tables, conference
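For readers unfamiliar with the Price of Anarchy mentioned in the abstract above: it is commonly defined as the ratio between the social cost of the worst Nash equilibrium and the minimum achievable social cost. The sketch below computes it for a hypothetical two-node cost game; the cost table, the action meanings, and the function names are illustrative assumptions and are not taken from the paper.

```python
from itertools import product

# Hypothetical cost table: action 0 = contribute, action 1 = free-ride.
# COSTS[(a0, a1)] = (cost paid by node 0, cost paid by node 1).
COSTS = {
    (0, 0): (2, 2),
    (0, 1): (4, 1),
    (1, 0): (1, 4),
    (1, 1): (3, 3),
}

def pure_nash_equilibria(costs, n_actions):
    """Profiles where no single node can lower its own cost by deviating."""
    equilibria = []
    for profile in product(range(n_actions), repeat=2):
        stable = True
        for player in range(2):
            for alternative in range(n_actions):
                deviated = list(profile)
                deviated[player] = alternative
                if costs[tuple(deviated)][player] < costs[profile][player]:
                    stable = False
        if stable:
            equilibria.append(profile)
    return equilibria

def price_of_anarchy(costs, n_actions):
    """Worst-equilibrium social cost divided by the optimal social cost."""
    social = {profile: sum(c) for profile, c in costs.items()}
    equilibria = pure_nash_equilibria(costs, n_actions)
    if not equilibria:
        raise ValueError("no pure Nash equilibrium in this game")
    worst_equilibrium = max(social[p] for p in equilibria)
    return worst_equilibrium / min(social.values())

print(pure_nash_equilibria(COSTS, 2), price_of_anarchy(COSTS, 2))
```

In this toy game free-riding is each node's best response, so the only equilibrium costs 6 in total while coordinated play costs 4, giving a ratio of 1.5. A ratio above 1 is the kind of inefficiency that motivates incentive mechanisms.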

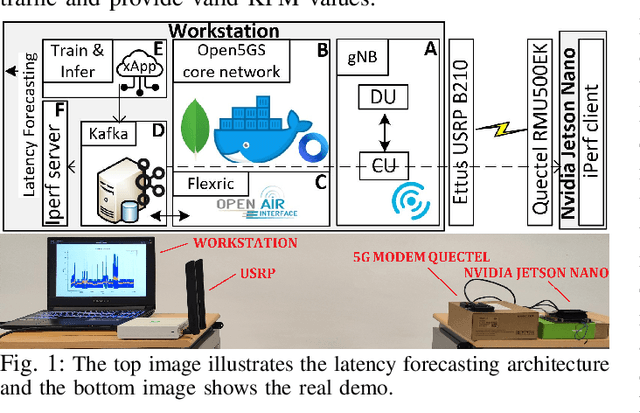

Enhancing 5G O-RAN Communication Efficiency Through AI-Based Latency Forecasting

Feb 25, 2025

Abstract:The increasing complexity and dynamic nature of 5G open radio access networks (O-RAN) pose significant challenges to maintaining low latency, high throughput, and resource efficiency. While existing methods leverage machine learning for latency prediction and resource management, they often lack real-world scalability and hardware validation. This paper addresses these limitations by presenting an artificial intelligence-driven latency forecasting system integrated into a functional O-RAN prototype. The system uses a bidirectional long short-term memory model to predict latency in real time within a scalable, open-source framework built with FlexRIC. Experimental results demonstrate the model's efficacy, achieving a loss metric below 0.04, thus validating its applicability in dynamic 5G environments.

Federated Learning in Mobile Networks: A Comprehensive Case Study on Traffic Forecasting

Dec 05, 2024

Abstract:The increasing demand for efficient resource allocation in mobile networks has catalyzed the exploration of innovative solutions that could enhance the task of real-time cellular traffic prediction. Under these circumstances, federated learning (FL) stands out as a distributed and privacy-preserving solution to foster collaboration among different sites, thus enabling responsive near-the-edge solutions. In this paper, we comprehensively study the potential benefits of FL in telecommunications through a case study on federated traffic forecasting using real-world data from base stations (BSs) in Barcelona (Spain). Our study encompasses relevant aspects within the federated experience, including model aggregation techniques, outlier management, the impact of individual clients, personalized learning, and the integration of exogenous sources of data. The performed evaluation is based on both prediction accuracy and sustainability, thus showcasing the environmental impact of employed FL algorithms in various settings. The findings from our study highlight FL as a promising and robust solution for mobile traffic prediction, emphasizing its twin merits as a privacy-conscious and environmentally sustainable approach, while also demonstrating its capability to overcome data heterogeneity and ensure high-quality predictions, marking a significant stride towards its integration in mobile traffic management systems.

Energy-Aware Decentralized Learning with Intermittent Model Training

Jul 01, 2024

Abstract:Decentralized learning (DL) offers a powerful framework where nodes collaboratively train models without sharing raw data and without the coordination of a central server. In the iterative rounds of DL, models are trained locally, shared with neighbors in the topology, and aggregated with other models received from neighbors. Sharing and merging models contribute to convergence towards a consensus model that generalizes better across the collective data captured at training time. In addition, the energy consumption while sharing and merging model parameters is negligible compared to the energy spent during the training phase. Leveraging this fact, we present SkipTrain, a novel DL algorithm, which minimizes energy consumption in decentralized learning by strategically skipping some training rounds and substituting them with synchronization rounds. These training-silent periods, besides saving energy, also allow models to better mix and finally produce models with higher accuracy than typical DL algorithms that train at every round. Our empirical evaluations with 256 nodes demonstrate that SkipTrain reduces energy consumption by 50% and increases model accuracy by up to 12% compared to D-PSGD, the conventional DL algorithm.
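The round structure described above (train locally, share with neighbors, aggregate, and optionally skip the training step) can be sketched on a toy problem. This is not the SkipTrain implementation: the ring topology, the scalar "model", the uniform averaging, the alternating schedule, and the assumption that synchronization costs zero energy are all simplifications introduced for illustration.

```python
def ring_neighbors(i, n):
    """Left and right neighbors of node i on a ring of n nodes (n >= 3)."""
    return [(i - 1) % n, (i + 1) % n]

def local_train(value, target, lr=0.5):
    """One gradient step on the toy loss 0.5 * (value - target) ** 2."""
    return value - lr * (value - target)

def sync(values):
    """Each node averages its model with those of its two ring neighbors."""
    n = len(values)
    return [
        (values[i] + sum(values[j] for j in ring_neighbors(i, n))) / 3
        for i in range(n)
    ]

def run(targets, schedule, energy_per_train=1.0):
    """Run one round per schedule entry: True = train then sync, False = sync only.

    Only training is charged energy; sharing and merging are treated as free,
    following the abstract's observation that their cost is negligible.
    """
    values = [0.0] * len(targets)
    energy = 0.0
    for do_train in schedule:
        if do_train:
            values = [local_train(v, t) for v, t in zip(values, targets)]
            energy += energy_per_train * len(values)
        values = sync(values)
    return values, energy

# Hypothetical setup: 8 nodes whose local optima differ (non-IID data).
targets = [float(i) for i in range(8)]
every_round, energy_full = run(targets, [True] * 8)
alternating, energy_skip = run(targets, [True, False] * 4)
print(energy_full, energy_skip)
```

With the alternating schedule, half of the rounds skip training, so the training energy in this toy setting is halved by construction. Whether accuracy improves depends on the real models and data, which this sketch does not capture.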

Mobile Traffic Prediction at the Edge through Distributed and Transfer Learning

Oct 22, 2023

Abstract:Traffic prediction represents one of the crucial tasks for smartly optimizing the mobile network. Research on this topic has concentrated on making predictions in a centralized fashion, i.e., by collecting data from the different network elements. This translates to a considerable amount of energy for data transmission and processing. In this work, we propose a novel prediction framework based on edge computing which uses datasets obtained on the edge through a large measurement campaign. Two main Deep Learning architectures are designed, based on Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), and tested under different training conditions. In addition, Knowledge Transfer Learning (KTL) techniques are employed to improve the performance of the models while reducing the required computational resources. Simulation results show that the CNN architectures outperform the RNNs. An estimation of the needed training energy is provided, highlighting KTL's ability to reduce the energy footprint of the models by 60% and 90% for CNNs and RNNs, respectively. Finally, two cutting-edge explainable Artificial Intelligence techniques are employed to interpret the derived learning models.

The Implications of Decentralization in Blockchained Federated Learning: Evaluating the Impact of Model Staleness and Inconsistencies

Oct 11, 2023

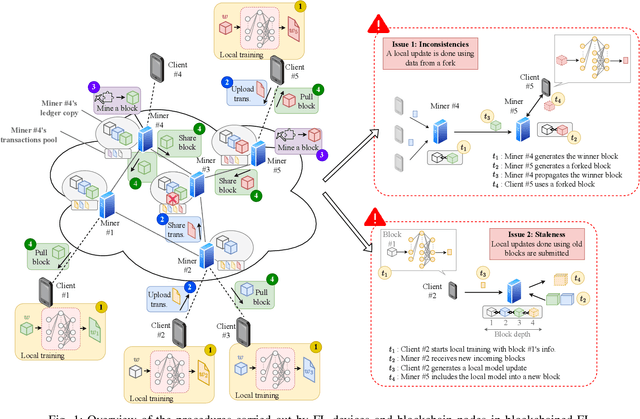

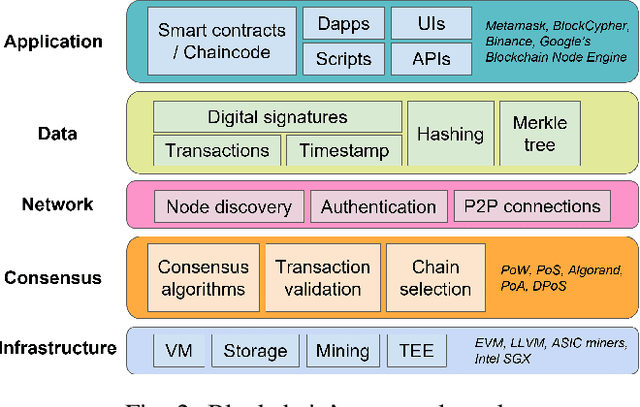

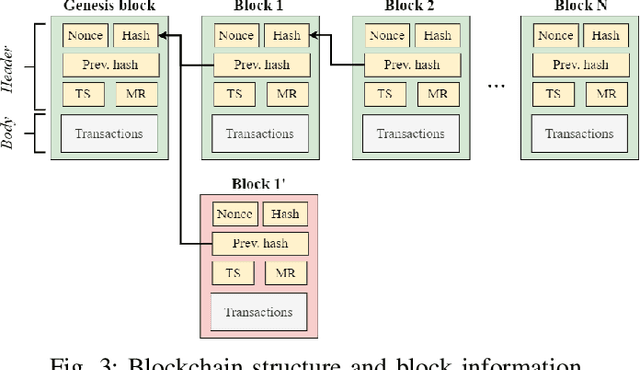

Abstract:Blockchain promises to enhance distributed machine learning (ML) approaches such as federated learning (FL) by providing further decentralization, security, immutability, and trust, which are key properties for enabling collaborative intelligence in next-generation applications. Nonetheless, the intrinsic decentralized operation of peer-to-peer (P2P) blockchain nodes leads to an uncharted setting for FL, whereby the concepts of FL round and global model become meaningless, as devices' synchronization is lost without the figure of a central orchestrating server. In this paper, we study the practical implications of outsourcing the orchestration of FL to a democratic network such as in a blockchain. In particular, we focus on the effects that model staleness and inconsistencies, endorsed by blockchains' modus operandi, have on the training procedure held by FL devices asynchronously. Using simulation, we evaluate the blockchained FL operation on the well-known CIFAR-10 dataset and focus on the accuracy and timeliness of the solutions. Our results show the high impact of model inconsistencies on the accuracy of the models (up to a ~35% decrease in prediction accuracy), which underscores the importance of properly designing blockchain systems based on the characteristics of the underlying FL application.

Towards Energy-Aware Federated Traffic Prediction for Cellular Networks

Sep 19, 2023

Abstract:Cellular traffic prediction is a crucial activity for optimizing networks in fifth-generation (5G) networks and beyond, as accurate forecasting is essential for intelligent network design, resource allocation and anomaly mitigation. Although machine learning (ML) is a promising approach to effectively predict network traffic, the centralization of massive data in a single data center raises issues regarding confidentiality, privacy and data transfer demands. To address these challenges, federated learning (FL) emerges as an appealing ML training framework which offers highly accurate predictions through parallel distributed computations. However, the environmental impact of these methods is often overlooked, which calls into question their sustainability. In this paper, we address the trade-off between accuracy and energy consumption in FL by proposing a novel sustainability indicator that allows assessing the feasibility of ML models. Then, we comprehensively evaluate state-of-the-art deep learning (DL) architectures in a federated scenario using real-world measurements from base station (BS) sites in the area of Barcelona, Spain. Our findings indicate that larger ML models achieve marginally improved performance but have a significant environmental impact in terms of carbon footprint, which makes them impractical for real-world applications.
