Paolo Braca

3D Localization and Tracking Methods for Multi-Platform Radar Networks

Aug 14, 2023

Abstract: Multi-platform radar networks (MPRNs) are an emerging sensing technology due to their ability to provide improved surveillance capabilities over plain monostatic and bistatic systems. The design of advanced detection, localization, and tracking algorithms for efficient fusion of information obtained through multiple receivers has attracted much attention. However, considerable challenges remain. This article provides an overview of recent unconstrained and constrained localization techniques as well as multitarget tracking (MTT) algorithms tailored to MPRNs. In particular, two data-processing methods are illustrated and explored in detail, one aimed at localization tasks and the other at tracking functions. As to the former, assuming an MPRN with one transmitter and multiple receivers, the angular and range constrained estimator (ARCE) algorithm capitalizes on knowledge of the transmitter antenna beamwidth. As to the latter, the scalable sum-product algorithm (SPA)-based MTT technique is presented. Additionally, a solution that combines ARCE and SPA-based MTT is investigated to boost the accuracy of the overall surveillance system. Simulated experiments in a 3D sensing scenario show the benefit of the combined algorithm compared with the conventional baseline SPA-based MTT and stand-alone ARCE localization.
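The abstract does not give the ARCE equations. As a hedged illustration of the unconstrained localization techniques the overview covers, the sketch below fits a 3D target position $x$ to bistatic range sums $\|x - t\| + \|x - r_i\|$ measured by receivers $r_i$ from a single transmitter $t$, using Gauss-Newton least squares. The function names, the noise-free measurements, the receiver geometry, and the Gauss-Newton solver are our assumptions. ARCE's beamwidth-derived angular and range constraints are deliberately left out.

```python
import numpy as np

def bistatic_ranges(x, tx, rxs):
    """Bistatic range sums ||x - tx|| + ||x - rx_i|| for each receiver rx_i (rows of rxs)."""
    return np.linalg.norm(x - tx) + np.linalg.norm(x - rxs, axis=1)

def gauss_newton_localize(meas, tx, rxs, x0, iters=50, tol=1e-9):
    """Hypothetical unconstrained LS fit of a 3D position to bistatic range sums."""
    x = np.asarray(x0, dtype=float)
    for _ in range(iters):
        d_tx = x - tx                         # transmitter-to-target vector
        d_rx = x - rxs                        # receiver-to-target vectors, one per row
        n_tx = np.linalg.norm(d_tx)
        n_rx = np.linalg.norm(d_rx, axis=1)
        residual = meas - (n_tx + n_rx)
        # Jacobian row i = unit vector from tx plus unit vector from rx_i
        J = d_tx / n_tx + d_rx / n_rx[:, None]
        step, *_ = np.linalg.lstsq(J, residual, rcond=None)
        x = x + step
        if np.linalg.norm(step) < tol:
            break
    return x

# Usage with an assumed geometry (metres) and noise-free measurements
tx = np.zeros(3)
rxs = np.array([[1000.0, 0.0, 0.0], [0.0, 1000.0, 0.0],
                [0.0, 0.0, 500.0], [-800.0, 300.0, 100.0]])
target = np.array([300.0, 400.0, 200.0])
est = gauss_newton_localize(bistatic_ranges(target, tx, rxs), tx, rxs,
                            x0=[280.0, 380.0, 180.0])
print(est)
```

Gauss-Newton is local, so it needs a reasonable initial guess. Constraining the search region, which is the idea the abstract attributes to ARCE, is one way to reduce that sensitivity.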

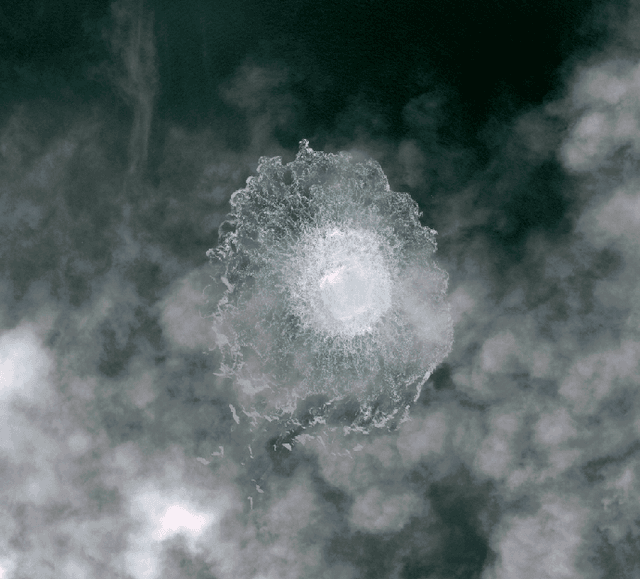

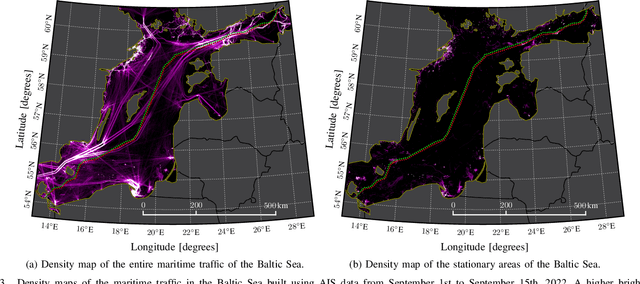

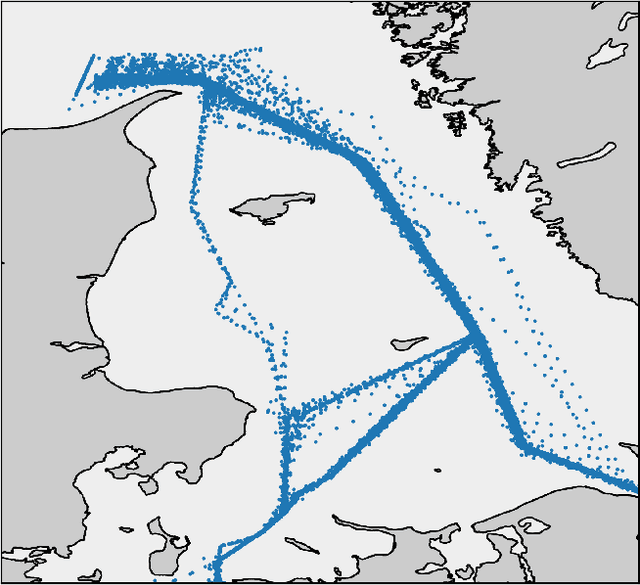

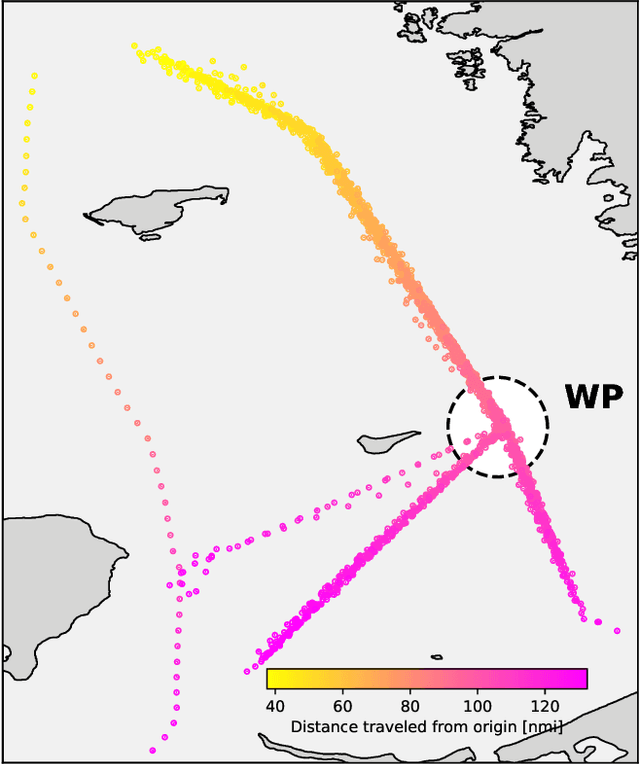

Monitoring of Underwater Critical Infrastructures: the Nord Stream and Other Recent Case Studies

Feb 03, 2023

Abstract: The explosions on September 26th, 2022, which damaged the Nord Stream 1 and Nord Stream 2 gas pipelines, have highlighted the urgent need to improve the resilience of Underwater Critical Infrastructures (UCIs). UCIs, which comprise gas pipelines as well as power and communication cables, connect countries worldwide and are critical for the global economy and stability. An attack targeting several such infrastructures simultaneously could cause significant damage and greatly affect various aspects of daily life. Because of the increasing number and continuous deployment of UCIs, existing underwater surveillance solutions, such as Autonomous Underwater Vehicles (AUVs) or Remotely Operated Vehicles (ROVs), are not adequate to ensure thorough monitoring. We show that combining information from both underwater and above-water surveillance sensors enables Seabed-to-Space Situational Awareness (S3A), mainly thanks to Artificial Intelligence (AI) and Information Fusion (IF) methodologies. These methodologies are designed to process immense volumes of information, fused from a variety of sources and generated by monitoring a very large number of assets on a daily basis. The learned knowledge can be used to anticipate future behaviors, identify threats, and determine critical situations concerning UCIs. To illustrate the capabilities and importance of S3A, we consider three events that occurred in the second half of 2022: the aforementioned Nord Stream explosions, the severing of the SHEFA-2 underwater communication cable connecting the Shetland Islands and the UK mainland, and the suspicious activity of a large vessel in the Adriatic Sea. Specifically, we provide analyses of the available Automatic Identification System (AIS) and satellite data, integrated with possible contextual information, e.g., bathymetry, weather conditions, and human intelligence.

Large Deviations for Classification Performance Analysis of Machine Learning Systems

Jan 16, 2023

Abstract: We study the performance of machine learning binary classification techniques in terms of error probabilities. The statistical test is based on the Data-Driven Decision Function (D3F), learned in the training phase, i.e., what is thresholded before the final binary decision is made. Based on large deviations theory, we show that under appropriate conditions the classification error probabilities vanish exponentially, as $\sim \exp\left(-n\,I + o(n) \right)$, where $I$ is the error rate and $n$ is the number of observations available for testing. We also propose two different approximations for the error probability curves, one based on a refined asymptotic formula (often referred to as exact asymptotics), and another one based on the central limit theorem. The theoretical findings are finally tested using the popular MNIST dataset.
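A minimal sketch of the central-limit-theorem approximation, under assumptions the abstract does not state: the decision statistic is taken to be the sample mean of $n$ i.i.d. D3F outputs, whose per-observation mean and standard deviation under each hypothesis ($\mu_0, \sigma_0$ and $\mu_1, \sigma_1$) are estimated beforehand, e.g., on held-out data. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

_STD = NormalDist()  # standard Gaussian N(0, 1)

def clt_threshold(mu0, sigma0, n, pfa):
    """Threshold on the sample mean of n D3F outputs giving asymptotic false-alarm pfa under H0."""
    return mu0 + sigma0 * _STD.inv_cdf(1.0 - pfa) / sqrt(n)

def clt_exceed_prob(mu, sigma, n, gamma):
    """CLT approximation of P(sample mean > gamma) when each output has mean mu and std sigma."""
    return 1.0 - _STD.cdf(sqrt(n) * (gamma - mu) / sigma)

def clt_miss_prob(mu1, sigma1, n, gamma):
    """CLT approximation of the missed-detection probability P(sample mean <= gamma) under H1."""
    return _STD.cdf(sqrt(n) * (gamma - mu1) / sigma1)

# Usage with assumed D3F statistics: mean -1 under H0, +1 under H1, unit std
gamma = clt_threshold(mu0=-1.0, sigma0=1.0, n=20, pfa=1e-3)
print(gamma, clt_miss_prob(1.0, 1.0, 20, gamma))
```

The CLT curve captures the bulk of the distribution. In the tails, where error probabilities become very small, the exponential rate $I$ from large deviations is the more faithful description.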

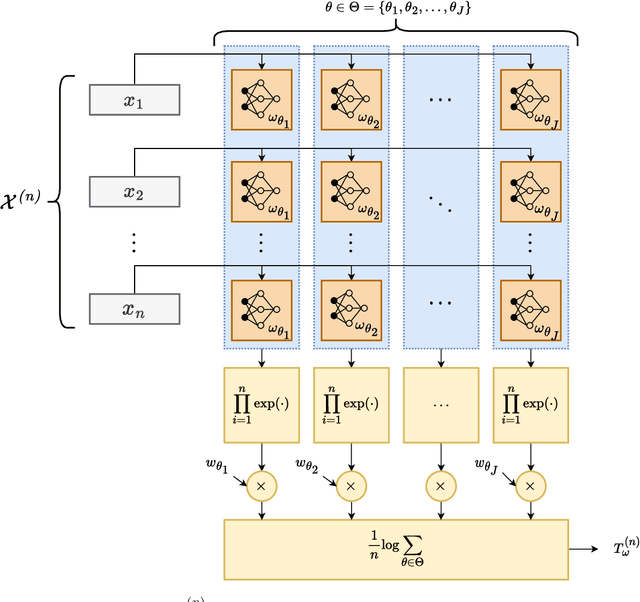

Statistical Hypothesis Testing Based on Machine Learning: Large Deviations Analysis

Jul 22, 2022

Abstract: We study the performance, and specifically the rate at which the error probability converges to zero, of Machine Learning (ML) classification techniques. Leveraging the theory of large deviations, we provide the mathematical conditions for an ML classifier to exhibit error probabilities that vanish exponentially, say $\sim \exp\left(-n\,I + o(n) \right)$, where $n$ is the number of informative observations available for testing (or another relevant parameter, such as the size of the target in an image) and $I$ is the error rate. Such conditions depend on the Fenchel-Legendre transform of the cumulant-generating function of the Data-Driven Decision Function (D3F, i.e., what is thresholded before the final binary decision is made) learned in the training phase. As such, the D3F and, consequently, the related error rate $I$ depend on the given training set, which is assumed to be of finite size. Interestingly, these conditions can be verified and tested numerically by exploiting the available dataset, or a synthetic dataset generated according to the available information on the underlying statistical model. In other words, the convergence of the classification error probability to zero, and its rate, can be computed on a portion of the dataset available for training. Consistently with large deviations theory, we can also establish the convergence, for $n$ large enough, of the normalized D3F statistic to a Gaussian distribution. This property is exploited to set a desired asymptotic false alarm probability, which empirically turns out to be accurate even for quite realistic values of $n$. Furthermore, approximate error probability curves $\sim \zeta_n \exp\left(-n\,I \right)$ are provided, thanks to the refined asymptotic derivation (often referred to as exact asymptotics), where $\zeta_n$ represents the most representative sub-exponential terms of the error probabilities.
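The remark that the rate can be computed on data can be sketched as follows. We estimate the cumulant-generating function $\Lambda(\theta) = \log \mathbb{E}[e^{\theta X}]$ of D3F outputs $X$ from samples, then evaluate its Fenchel-Legendre transform $I(\gamma) = \sup_\theta [\theta\gamma - \Lambda(\theta)]$ on a grid. Several choices are our assumptions: the grid search, the synthetic Gaussian scores, and the restriction to $\theta \ge 0$, which suits a false-alarm exponent at a threshold $\gamma$ above the $H_0$ mean. The function names are hypothetical.

```python
import numpy as np

def empirical_cgf(samples):
    """Return theta -> log mean(exp(theta * X)) estimated from D3F samples (log-sum-exp for stability)."""
    x = np.asarray(samples, dtype=float)
    def cgf(theta):
        z = theta * x
        m = z.max()
        return m + np.log(np.mean(np.exp(z - m)))
    return cgf

def rate_function(cgf, gamma, thetas):
    """Fenchel-Legendre transform I(gamma) = sup_theta [theta * gamma - cgf(theta)] over a grid."""
    return max(t * gamma - cgf(t) for t in thetas)

# Usage: synthetic stand-in for D3F outputs under H0, threshold gamma = 0
rng = np.random.default_rng(0)
h0_scores = rng.normal(-1.0, 1.0, size=100_000)
I0 = rate_function(empirical_cgf(h0_scores), gamma=0.0, thetas=np.linspace(0.0, 5.0, 501))
print(I0)  # should be close to the Gaussian value (0 - (-1))**2 / 2 = 0.5
```

The empirical CGF becomes unreliable for large $\theta$, because it is then dominated by the few largest samples. For this reason, the grid should not extend far beyond the maximizing $\theta$.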

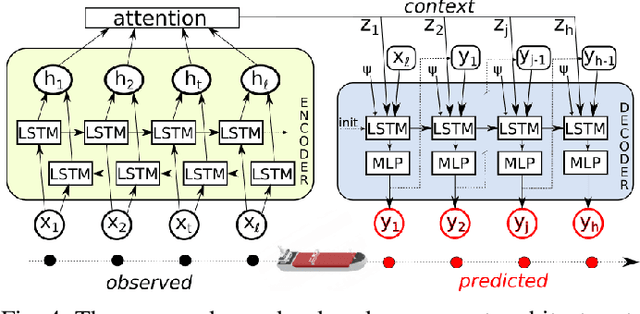

Recurrent Encoder-Decoder Networks for Vessel Trajectory Prediction with Uncertainty Estimation

May 11, 2022

Abstract: Recent deep learning methods for vessel trajectory prediction are able to learn complex maritime patterns from historical Automatic Identification System (AIS) data and accurately predict sequences of future vessel positions with a prediction horizon of several hours. However, in maritime surveillance applications, reliably quantifying the prediction uncertainty can be as important as obtaining high accuracy. This paper extends deep learning frameworks for trajectory prediction by exploring how recurrent encoder-decoder neural networks can be tasked not only with predicting future positions but also with yielding a corresponding prediction uncertainty, via Bayesian modeling of epistemic and aleatoric uncertainties. We compare the prediction performance of two different models, based on labeled or unlabeled input data, to highlight how uncertainty quantification and accuracy can be improved by using, if available, additional information on the ship's intention (e.g., its planned destination).
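One common way to combine the two kinds of uncertainty is sketched below, under assumptions the abstract does not confirm. The network is assumed to output a mean and a variance for each predicted coordinate, and epistemic uncertainty is assumed to come from $S$ stochastic forward passes (e.g., Monte Carlo dropout). By the law of total variance, the predictive variance is then the average predicted variance (aleatoric) plus the spread of the predicted means (epistemic). The function name is hypothetical.

```python
import numpy as np

def decompose_uncertainty(means, variances):
    """Combine S stochastic forward passes into predictive mean and variance.

    means, variances: arrays of shape (S, ...) with the per-pass predicted mean
    and aleatoric variance of each future position coordinate.
    """
    means = np.asarray(means, dtype=float)
    variances = np.asarray(variances, dtype=float)
    pred_mean = means.mean(axis=0)
    aleatoric = variances.mean(axis=0)   # expected observation noise
    epistemic = means.var(axis=0)        # disagreement between the passes' mean predictions
    return pred_mean, aleatoric, epistemic, aleatoric + epistemic

# Usage: two passes predicting one coordinate
print(decompose_uncertainty([1.0, 3.0], [1.0, 1.0]))
```

Keeping the two terms separate is useful in practice. The epistemic term tends to shrink with more or better-matched training data, while the aleatoric term does not.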

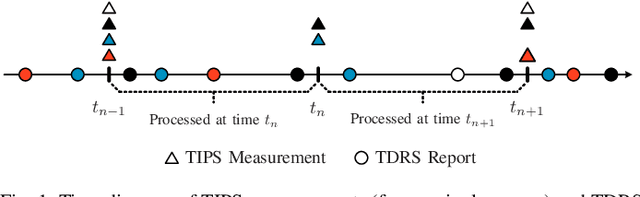

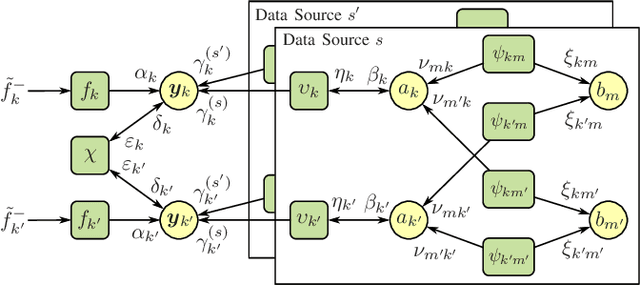

Fusion of Sensor Measurements and Target-Provided Information in Multitarget Tracking

Nov 26, 2021

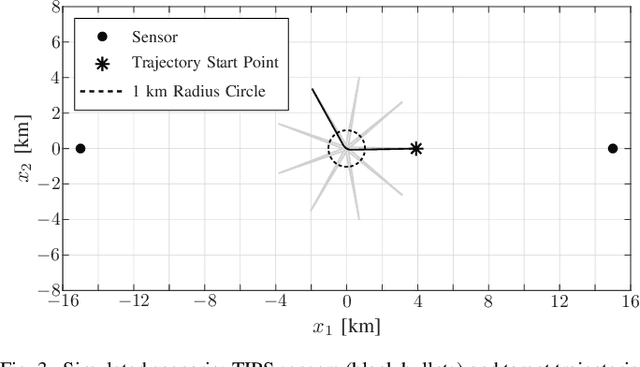

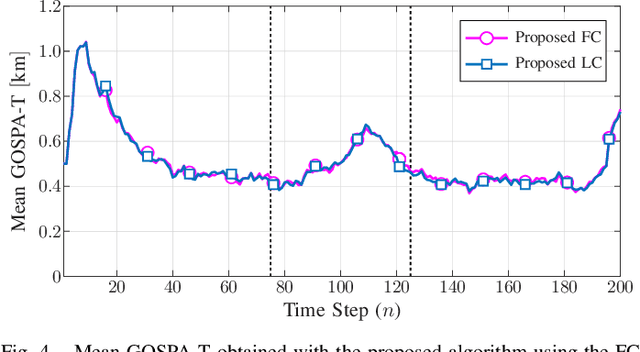

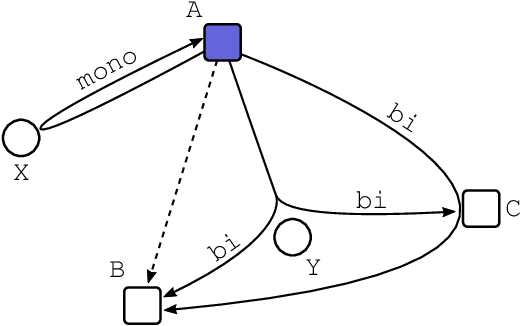

Abstract: Tracking multiple time-varying states based on heterogeneous observations is a key problem in many applications. Here, we develop a statistical model and algorithm for tracking an unknown number of targets based on the probabilistic fusion of observations from two classes of data sources. The first class, referred to as target-independent perception systems (TIPSs), consists of sensors that periodically produce noisy measurements of targets without requiring target cooperation. The second class, referred to as target-dependent reporting systems (TDRSs), relies on cooperative targets that report noisy measurements of their state and their identity. We present a joint TIPS-TDRS observation model that accounts for observation-origin uncertainty, missed detections, false alarms, and asynchronicity. We then establish a factor graph that represents this observation model along with a state evolution model including target identities. Finally, by executing the sum-product algorithm on that factor graph, we obtain a scalable multitarget tracking algorithm with inherent TIPS-TDRS fusion. The performance of the proposed algorithm is evaluated using simulated data as well as real data from a maritime surveillance experiment.
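Observation-origin uncertainty is what makes the sum-product algorithm scale in such trackers. The sketch below shows the iterative data-association message passing widely used in SPA-based multitarget tracking. It is not the TIPS-TDRS model of the paper, and the input weights are assumed. Row $i$ of `beta` holds, for target $i$, a missed-detection weight in column 0 and, in column $m \ge 1$, a weight for generating measurement $m$ (e.g., detection probability times measurement likelihood divided by clutter density). Missed-detection weights are assumed positive. The function name is hypothetical.

```python
import numpy as np

def spa_data_association(beta, iters=100, tol=1e-10):
    """Approximate marginal association probabilities by loopy message passing.

    beta: array (num_targets, num_meas + 1); column 0 = missed-detection weight (> 0),
    column m >= 1 = weight of target i generating measurement m.
    Returns an array of the same shape whose rows sum to 1.
    """
    beta = np.asarray(beta, dtype=float)
    b0, b = beta[:, :1], beta[:, 1:]
    nu = np.ones_like(b)                                  # measurement-to-target messages
    for _ in range(iters):
        weighted = b * nu
        # target-to-measurement: exclude the message coming back from measurement m itself
        mu = b / (b0 + weighted.sum(axis=1, keepdims=True) - weighted)
        # measurement-to-target: exclude target i's own message
        nu_new = 1.0 / (1.0 + mu.sum(axis=0, keepdims=True) - mu)
        converged = np.max(np.abs(nu_new - nu)) < tol
        nu = nu_new
        if converged:
            break
    probs = np.hstack([b0, b * nu])
    return probs / probs.sum(axis=1, keepdims=True)

# Usage: two targets competing for a single measurement
print(spa_data_association([[1.0, 1.0], [1.0, 1.0]]))
```

The cost per iteration grows with the product of the number of targets and measurements, not with the number of joint association hypotheses. This is the scalability property the abstract refers to. In the two-target example, the messages enforce that a measurement has at most one origin, and the result matches the exact marginal of 1/3.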

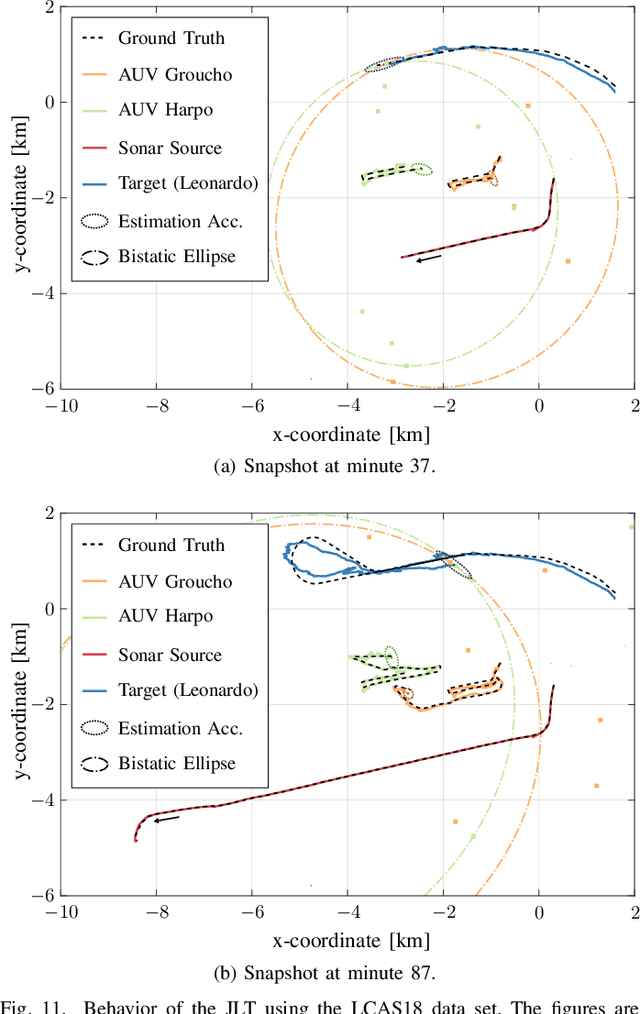

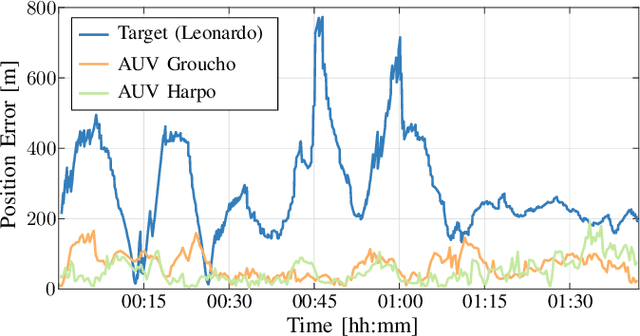

Cooperative Localization and Multitarget Tracking in Agent Networks with the Sum-Product Algorithm

Aug 05, 2021

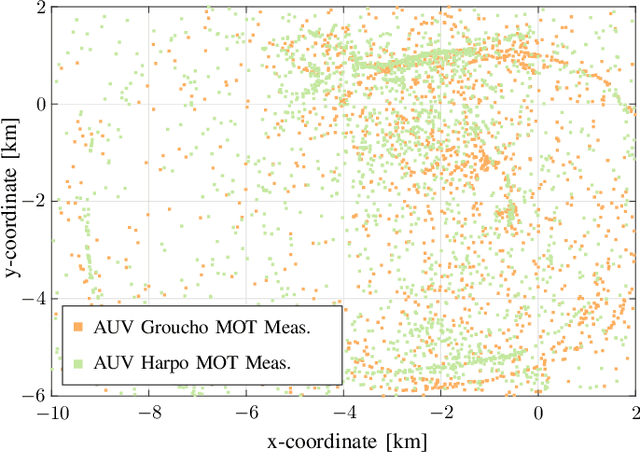

Abstract: This paper addresses the problem of multitarget tracking using a network of sensing agents with unknown positions. Agents have to localize themselves in the sensor network and, at the same time, perform multitarget tracking in the presence of clutter and missed target detections. These two problems are solved jointly in a holistic approach in which graph theory is used to describe the statistical relationships among agent states, target states, and observations. A scalable message-passing scheme, based on the sum-product algorithm, makes it possible to efficiently approximate the marginal posterior distributions of both agent and target states. The proposed solution is general enough to accommodate a fully multistatic network configuration, with multiple transmitters and receivers. Numerical simulations show that the proposed joint approach outperforms the case in which cooperative self-localization and multitarget tracking are performed separately, since the joint approach manages to extract valuable information from targets. Lastly, data acquired in 2018 by the NATO Science and Technology Organization (STO) Centre for Maritime Research and Experimentation (CMRE) through a network of autonomous underwater vehicles demonstrate the effectiveness of the approach in practical applications.
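In the sum-product algorithm, the belief of an agent's state is proportional to the product of its prior and all incoming messages. Some of these messages come from neighboring agents, and others come from targets observed in common. The abstract does not say how messages are represented. In a linear-Gaussian simplification, which is our assumption, that product has a closed form in information form: the information matrices add, and so do the information vectors. The function name is hypothetical.

```python
import numpy as np

def fuse_gaussian_messages(means, covs):
    """Belief proportional to the product of Gaussian messages N(m_k, P_k), in information form."""
    info = np.zeros_like(np.asarray(covs[0], dtype=float))   # accumulated information matrix
    info_vec = np.zeros(info.shape[0])                       # accumulated information vector
    for m, P in zip(means, covs):
        Lam = np.linalg.inv(np.asarray(P, dtype=float))
        info += Lam
        info_vec += Lam @ np.asarray(m, dtype=float)
    cov = np.linalg.inv(info)
    return cov @ info_vec, cov

# Usage: prior on a 2D agent position fused with one message relayed through a shared target
mean, cov = fuse_gaussian_messages([[0.0, 0.0], [2.0, 0.0]], [np.eye(2), np.eye(2)])
print(mean, cov)
```

Each extra message can only add information, so the fused covariance shrinks. This mirrors why joint processing helps self-localization in the abstract's simulations. Real range or bearing measurements are nonlinear, and particle-based messages are a common alternative to this Gaussian simplification.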

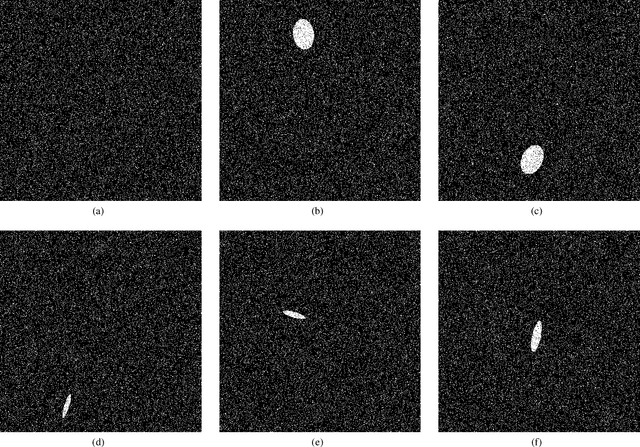

Enhanced Target Localization with Deployable Multiplatform Radar Nodes Based on Non-Convex Constrained Least Squares Optimization

Apr 23, 2021

Abstract: A new algorithm for 3D localization in multiplatform radar networks comprising one transmitter and multiple receivers is proposed. To take advantage of the features of the monostatic sensor's radiation pattern, ad-hoc constraints are imposed in the target localization process. The localization problem is therefore formulated as a non-convex constrained Least Squares (LS) optimization problem, which is globally solved in quasi-closed form by leveraging the Karush-Kuhn-Tucker (KKT) conditions. The performance of the new algorithm is assessed in terms of Root Mean Square Error (RMSE) in comparison with the Cramér-Rao Lower Bound (CRLB) benchmark and some competitors from the open literature. The results corroborate the effectiveness of the new strategy, which ensures a lower RMSE than the counterpart methodologies, especially in the low Signal-to-Noise Ratio (SNR) regime.
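The paper's specific constraints are not given in the abstract. As a simplified stand-in, which is our assumption, the sketch below solves a single non-convex equality-constrained LS problem, $\min_x \|Ax - b\|^2$ subject to $\|x - c\| = \rho$ (e.g., a known range $\rho$ from a point $c$). It shows how KKT conditions yield a global solution. Stationarity gives $(A^\top A + \lambda I)\,y = A^\top(b - Ac)$ with $y = x - c$. The global minimizer additionally satisfies $\lambda \ge -d_{\min}$, where $d_{\min}$ is the smallest eigenvalue of $A^\top A$. On that interval $\|y(\lambda)\|$ is decreasing, so a 1D bisection finds $\lambda$. The sketch assumes the generic ("easy") case and uses a hypothetical function name.

```python
import numpy as np

def sphere_constrained_ls(A, b, c, rho, iters=200):
    """Global minimizer of ||A x - b||^2 subject to ||x - c|| = rho (generic case)."""
    A, b, c = (np.asarray(v, dtype=float) for v in (A, b, c))
    g = A.T @ (b - A @ c)              # shift variables so the sphere is centred at the origin
    d, V = np.linalg.eigh(A.T @ A)     # A^T A = V diag(d) V^T, eigenvalues ascending
    q = V.T @ g

    def y_norm(lam):                   # ||y(lam)|| where (A^T A + lam I) y = g
        return np.linalg.norm(q / (d + lam))

    # KKT plus second-order condition: lam > -d_min, where y_norm is decreasing
    lo = -d[0] + 1e-12 * max(1.0, abs(d[0]))
    hi = max(1.0, -lo)
    while y_norm(hi) > rho:            # grow the bracket until the norm drops below rho
        hi *= 2.0
    for _ in range(iters):             # bisection on the secular equation ||y(lam)|| = rho
        mid = 0.5 * (lo + hi)
        if y_norm(mid) > rho:
            lo = mid
        else:
            hi = mid
    lam = 0.5 * (lo + hi)
    return c + V @ (q / (d + lam)), lam

# Usage: project-like example with A = I, unconstrained optimum at (2, 0, 0), unit sphere
x, lam = sphere_constrained_ls(np.eye(3), [2.0, 0.0, 0.0], np.zeros(3), 1.0)
print(x, lam)
```

Although the constraint set is non-convex, the problem reduces to finding the root of a monotone scalar function. This is the kind of structure that makes a quasi-closed-form global solution possible.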

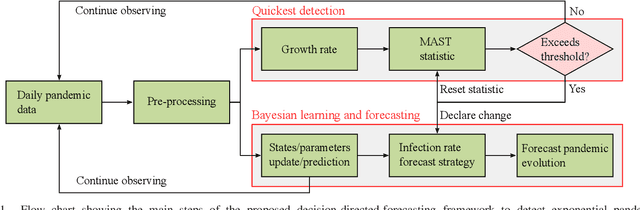

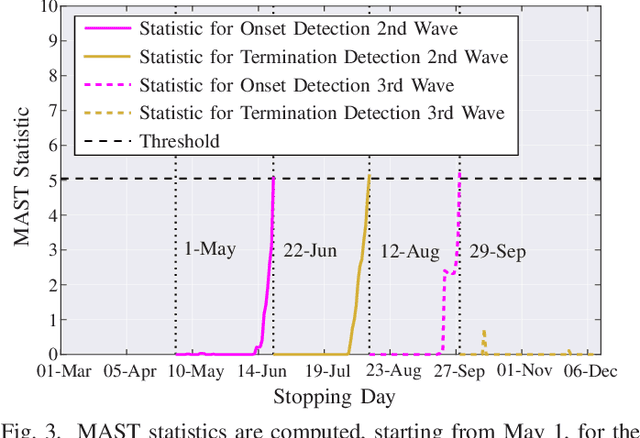

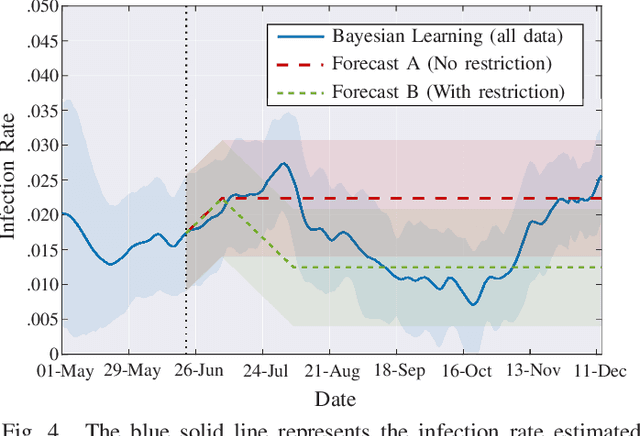

Quickest Detection and Forecast of Pandemic Outbreaks: Analysis of COVID-19 Waves

Jan 12, 2021

Abstract: The COVID-19 pandemic has, worldwide and up to December 2020, caused over 1.7 million deaths and put the world's most advanced healthcare systems under heavy stress. In many countries, drastic restriction measures adopted by political authorities, such as national lockdowns, have not prevented the outbreak of new pandemic waves. In this article, we propose an integrated detection-estimation-forecasting framework that, using publicly available data published by the national authorities, is designed to: (i) learn relevant features of the epidemic (e.g., the infection rate); (ii) detect as quickly as possible the onset (or the termination) of an exponential growth of the contagion; and (iii) reliably forecast the epidemic evolution. The proposed solution is validated by analyzing the second and third COVID-19 waves in the USA.
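The abstract does not name its detector. As an assumption, the sketch below uses the classical CUSUM test, the textbook quickest-detection procedure for a change from $N(\mu_0, \sigma^2)$ to $N(\mu_1, \sigma^2)$. The statistic $S_k = \max(0, S_{k-1} + \ell_k)$ accumulates log-likelihood ratios $\ell_k$ and raises an alarm when $S_k \ge h$. As an illustrative choice, $x_k$ could be the day-to-day difference of log daily new cases. Under exponential growth, log counts rise linearly, so this difference shifts to a positive mean. The function name and all parameter values are hypothetical.

```python
def cusum_alarm(xs, mu0, mu1, sigma, h):
    """Return the index of the first CUSUM alarm for a Gaussian mean shift mu0 -> mu1, or None."""
    s = 0.0
    for k, x in enumerate(xs):
        # log-likelihood ratio of N(mu1, sigma^2) versus N(mu0, sigma^2) for sample x
        llr = (mu1 - mu0) / sigma ** 2 * (x - 0.5 * (mu0 + mu1))
        s = max(0.0, s + llr)          # reset at zero: only evidence for a change accumulates
        if s >= h:
            return k
    return None

# Usage: a noise-free series whose mean jumps from 0 to 1 at index 10
print(cusum_alarm([0.0] * 10 + [1.0] * 10, mu0=0.0, mu1=1.0, sigma=1.0, h=2.0))
```

The threshold $h$ trades detection delay against the false-alarm rate. The termination of a wave can be monitored the same way by swapping the roles of $\mu_0$ and $\mu_1$.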

Deep Learning Methods for Vessel Trajectory Prediction based on Recurrent Neural Networks

Jan 07, 2021

Abstract: Data-driven methods open up unprecedented possibilities for maritime surveillance using Automatic Identification System (AIS) data. In this work, we explore deep learning strategies that use historical AIS observations to address the problem of predicting future vessel trajectories with a prediction horizon of several hours. We propose novel sequence-to-sequence vessel trajectory prediction models based on encoder-decoder recurrent neural networks (RNNs) that are trained on historical trajectory data to predict future trajectory samples given previous observations. The proposed architecture combines Long Short-Term Memory (LSTM) RNNs, which encode the observed data and generate future predictions, with different intermediate aggregation layers that capture space-time dependencies in sequential data. Experimental results on vessel trajectories from an AIS dataset made freely available by the Danish Maritime Authority show the effectiveness of deep learning methods for trajectory prediction based on sequence-to-sequence neural networks, which achieve better performance than baseline approaches based on linear regression or feed-forward networks. The comparative evaluation of results shows: i) the superiority of attention pooling over static pooling for this specific application, and ii) the remarkable performance improvement that can be obtained with labeled trajectories, i.e., when predictions are conditioned on a low-level context representation encoded from the sequence of past observations, as well as on additional inputs (e.g., the port of departure or arrival) about the vessel's high-level intention, which may be available from AIS.
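To make the contrast between static pooling and attention pooling concrete, here is a minimal sketch. The abstract does not define either layer, so the following are our assumptions: static pooling is taken to be the time average of the encoder hidden states, and attention pooling is taken to be scaled dot-product attention with a query vector (e.g., the current decoder state). The function names are hypothetical.

```python
import numpy as np

def mean_pool(H):
    """Static pooling: average the encoder hidden states H of shape (T, d) over time."""
    return H.mean(axis=0)

def attention_pool(H, query):
    """Scaled dot-product attention pooling of hidden states H (T, d) given a query of shape (d,)."""
    scores = H @ query / np.sqrt(H.shape[1])
    w = np.exp(scores - scores.max())   # numerically stable softmax over time steps
    w /= w.sum()
    return w @ H, w

# Usage: 8 encoded AIS time steps with 4 hidden units each (random stand-ins)
rng = np.random.default_rng(0)
H = rng.normal(size=(8, 4))
context, weights = attention_pool(H, rng.normal(size=4))
print(context, weights)
```

With a zero query, all scores are equal and attention pooling reduces exactly to static mean pooling. Attention therefore generalizes static pooling by letting the decoder weight the most relevant past observations more heavily.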
