Olivier Morel

Pola4All: survey of polarimetric applications and an open-source toolkit to analyze polarization

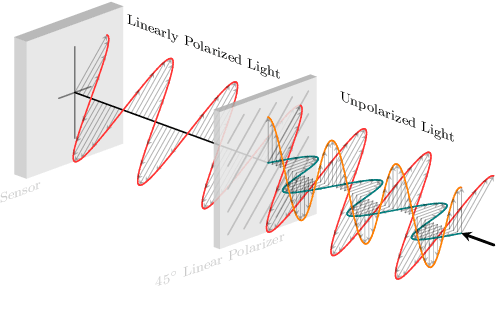

Dec 22, 2023

Abstract: Polarization information of the light can provide rich cues for computer vision and scene understanding tasks, such as the type of material, pose, and shape of the objects. With the advent of new and cheap polarimetric sensors, this imaging modality is becoming accessible to a wider public for solving problems such as pose estimation, 3D reconstruction, underwater navigation, and depth estimation. However, we observe several limitations regarding the usage of this sensing modality, as well as a lack of standards and publicly available tools to analyze polarization images. Furthermore, although polarization camera manufacturers usually provide acquisition tools to interface with their cameras, they rarely include processing algorithms that make use of the polarization information. In this paper, we review applications that involve polarization imaging, including a comprehensive survey of recent advances in polarization for vision and robotic perception tasks. We also introduce a complete software toolkit that provides common standards to communicate with and process information from most of the existing micro-grid polarization cameras on the market. The toolkit also implements several image processing algorithms for this modality, and it is publicly available on GitHub: https://github.com/vibot-lab/Pola4all_JEI_2023.
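As a rough illustration of the kind of processing a micro-grid polarization image calls for, the sketch below computes the linear Stokes parameters, the degree of linear polarization (DoLP), and the angle of linear polarization (AoLP) from a raw mosaic. It is not taken from the Pola4All toolkit: the function names, the 2x2 polarizer layout, and the absence of any calibration or color demosaicing are all assumptions made for the example.

```python
import numpy as np

def stokes_from_microgrid(raw, layout=((90, 45), (135, 0))):
    """Linear Stokes parameters from a monochrome micro-grid mosaic.

    `layout` gives the polarizer angle (degrees) at each position of the
    2x2 super-pixel. This default is an ASSUMPTION; check the sensor
    datasheet. `raw` must have even height and width.
    """
    raw = np.asarray(raw, dtype=np.float64)
    chans = {}
    for r in range(2):
        for c in range(2):
            # Sub-sample every second pixel to isolate one polarizer angle.
            chans[layout[r][c]] = raw[r::2, c::2]
    i0, i45, i90, i135 = chans[0], chans[45], chans[90], chans[135]
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90                       # horizontal vs. vertical
    s2 = i45 - i135                     # +45 deg vs. -45 deg
    return s0, s1, s2

def dolp_aolp(s0, s1, s2, eps=1e-12):
    """Degree (0..1) and angle (radians, in (-pi/2, pi/2]) of linear polarization."""
    dolp = np.sqrt(s1**2 + s2**2) / np.maximum(s0, eps)
    aolp = 0.5 * np.arctan2(s2, s1)
    return dolp, aolp
```

Each output has half the resolution of the raw mosaic, since one value is produced per 2x2 super-pixel; interpolating the four channels back to full resolution is a separate step that this sketch leaves out.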

A Practical Calibration Method for RGB Micro-Grid Polarimetric Cameras

Aug 29, 2022

Abstract: Polarimetric imaging has been applied in a growing number of applications in robotic vision (e.g., underwater navigation, glare removal, de-hazing, object classification, and depth estimation). RGB polarization cameras that capture both the color and the polarimetric state of the light in a single snapshot are now available on the market. Due to the sensor's characteristic dispersion, and the use of lenses, it is crucial to calibrate these types of cameras so as to obtain correct polarization measurements. The calibration methods that have been developed so far are either not adapted to this type of camera, or they require complex equipment and time-consuming experiments in strict setups. In this paper, we propose a new method to overcome the need for complex optical systems to efficiently calibrate these cameras. We show that the proposed calibration method has several advantages: any user can easily calibrate the camera using a uniform, linearly polarized light source without any a priori knowledge of its polarization state, and with a limited number of acquisitions. We will make our calibration code publicly available.

* This is a preprint version of the paper to appear at IEEE Robotics and Automation Letters (RAL). The final journal version will be available at https://doi.org/10.1109/LRA.2022.3192655

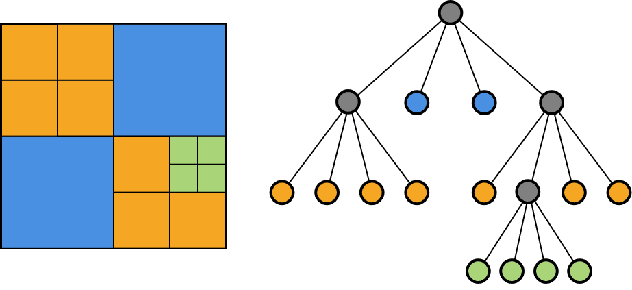

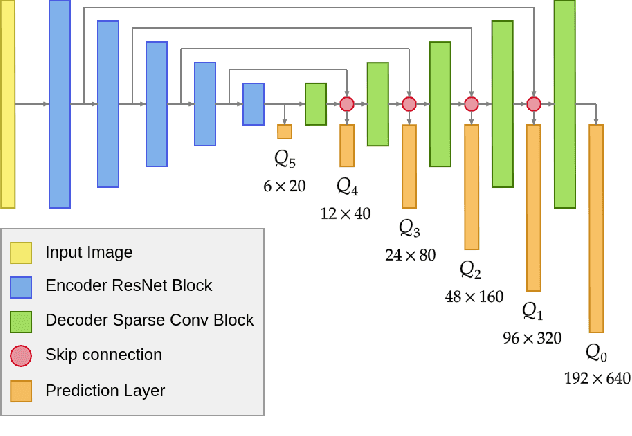

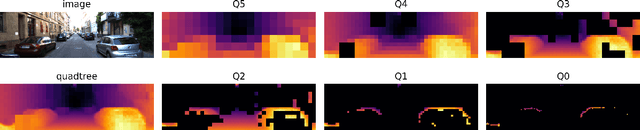

N-QGN: Navigation Map from a Monocular Camera using Quadtree Generating Networks

Feb 24, 2022

Abstract: Monocular depth estimation has been a popular area of research for several years, especially since self-supervised networks have shown increasingly good results in bridging the gap with supervised and stereo methods. However, these approaches focus on dense 3D reconstruction and sometimes on tiny details that are superfluous for autonomous navigation. In this paper, we propose to address this issue by estimating the navigation map under a quadtree representation. The objective is to create an adaptive depth map prediction that only extracts details that are essential for obstacle avoidance. Other regions of 3D space that leave ample room for navigation are assigned an approximate distance. Experiments on the KITTI dataset show that our method can significantly reduce the amount of output information without a major loss of accuracy.
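To make the quadtree representation concrete, here is a minimal sketch of a classical quadtree decomposition applied to an already available dense depth map. It is not the N-QGN network, which predicts the quadtree directly from an image; the function name, the split criterion (depth range within a block), and the threshold values are assumptions chosen for illustration.

```python
import numpy as np

def quadtree_leaves(depth, max_range=0.5, min_size=1):
    """Decompose a square, power-of-two depth map into quadtree leaves.

    A block is split while its depth range exceeds `max_range` (a
    hypothetical criterion) and it is larger than `min_size` pixels.
    Returns a list of (row, col, size, mean_depth) tuples.
    """
    depth = np.asarray(depth, dtype=np.float64)
    n = depth.shape[0]
    if n < 1 or depth.shape != (n, n) or n & (n - 1):
        raise ValueError("depth must be square with a power-of-two side")
    leaves = []

    def split(r, c, size):
        block = depth[r:r + size, c:c + size]
        if size <= min_size or block.max() - block.min() <= max_range:
            # Homogeneous (or minimal) block: one approximate distance suffices.
            leaves.append((r, c, size, float(block.mean())))
            return
        half = size // 2
        for dr in (0, half):
            for dc in (0, half):
                split(r + dr, c + dc, half)

    split(0, 0, n)
    return leaves
```

Large free regions collapse into a few coarse leaves, while blocks containing depth discontinuities such as obstacle boundaries are refined, which is the sense in which the output is smaller than a dense map.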

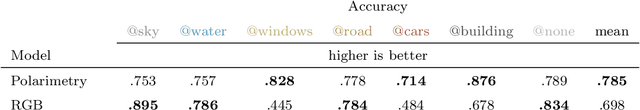

Towards urban scenes understanding through polarization cues

Jun 03, 2021

Abstract: Autonomous robotics is critically affected by the robustness of its scene understanding algorithms. We propose a two-axis pipeline based on polarization indices to analyze dynamic urban scenes. As robots evolve in unknown environments, they are prone to encountering specular obstacles. Specular phenomena are rarely taken into account by algorithms, which causes misinterpretations and erroneous estimates. By exploiting all the light properties, systems can greatly increase their robustness to such events. In addition to the conventional photometric characteristics, we propose to include polarization sensing. We demonstrate in this paper that polarization measurements improve both segmentation performance and the quality of depth estimation. Our polarimetry-based approaches are compared here with other state-of-the-art RGB-centric methods, showing the benefit of using polarization imaging.

P2D: a self-supervised method for depth estimation from polarimetry

Jul 15, 2020

Abstract: Monocular depth estimation is a recurring subject in the field of computer vision. Its ability to describe scenes via a depth map while reducing the constraints related to the formulation of perspective geometry tends to favor its use. However, despite the constant improvement of algorithms, most methods exploit only colorimetric information. Consequently, robustness to events to which this modality is not sensitive, such as specularity or transparency, is neglected. In response, we propose using polarimetry as an input for a self-supervised monodepth network, exploiting polarization cues to encourage accurate reconstruction of scenes. Furthermore, we add a polarimetric regularization term to a state-of-the-art method to take specific advantage of the data. Our method is evaluated both qualitatively and quantitatively, demonstrating that the contribution of this new information, as well as an enhanced loss function, improves depth estimation results, especially for specular areas.

Polarimetric image augmentation

May 22, 2020

Abstract: Robotics applications in urban environments are subject to obstacles that exhibit specular reflections hampering autonomous navigation. On the other hand, these reflections are highly polarized and this extra information can successfully be used to segment the specular areas. In nature, polarized light is obtained by reflection or scattering. Deep Convolutional Neural Networks (DCNNs) have shown excellent segmentation results, but require a significant amount of data to achieve their best performance. The lack of data is usually overcome by using augmentation methods. However, unlike RGB images, polarization images are not only scalar (intensity) images and standard augmentation techniques cannot be applied straightforwardly. We propose to enhance deep learning models through a regularized augmentation procedure applied to polarimetric data in order to characterize scenes more effectively under challenging conditions. We subsequently observe an average of 18.1% improvement in IoU between non-augmented and regularized training procedures on real-world data.
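One reason standard augmentations do not carry over directly is that the angle of linear polarization is tied to the image axes, so a geometric transform must also update the polarization values. The sketch below illustrates this for a horizontal flip and for 90-degree rotations of Stokes images. It is only a geometric illustration under assumed conventions, not the regularized procedure proposed in the paper, and the function names are hypothetical.

```python
import numpy as np

def hflip_stokes(s0, s1, s2):
    """Horizontally flip Stokes images (2-D arrays).

    Mirroring maps a polarization angle theta to -theta (mod pi), so S1
    (proportional to cos 2*theta) is unchanged and S2 (sin 2*theta) flips sign.
    """
    return np.fliplr(s0), np.fliplr(s1), -np.fliplr(s2)

def rot90_stokes(s0, s1, s2, k=1):
    """Rotate Stokes images by k * 90 degrees.

    An odd number of quarter turns shifts the angle by 90 degrees, which
    negates both S1 and S2; an even number leaves them unchanged.
    """
    sign = -1.0 if k % 2 else 1.0
    return np.rot90(s0, k), sign * np.rot90(s1, k), sign * np.rot90(s2, k)
```

In both cases the intensity S0 and the degree of linear polarization are preserved; only the angle changes, which a plain pixel-wise flip or rotation would miss.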
