Ole-Christoffer Granmo

A Methodology for Transparent Logic-Based Classification Using a Multi-Task Convolutional Tsetlin Machine

Oct 02, 2025

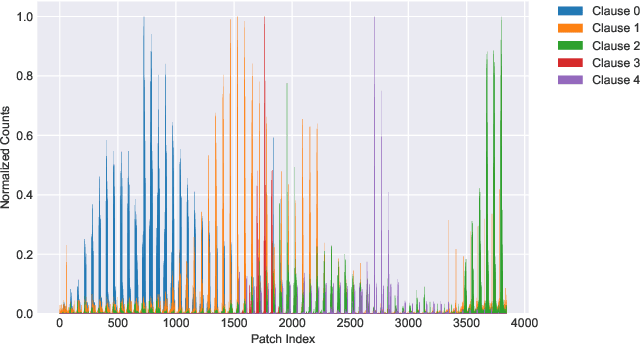

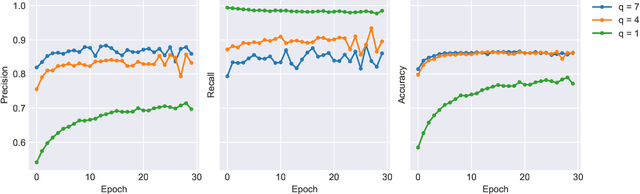

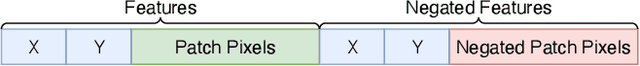

Abstract: The Tsetlin Machine (TM) is a novel machine learning paradigm that employs finite-state automata for learning and uses propositional logic to represent patterns. Because of this simple approach, TMs are inherently more interpretable than learning algorithms based on neural networks. The Convolutional TM has shown comparable performance on various datasets such as MNIST, K-MNIST, F-MNIST and CIFAR-2. In this paper, we explore the applicability of the TM architecture for large-scale multi-channel (RGB) image classification. We propose a methodology to generate both local interpretations and global class representations. The local interpretations can be used to explain the model predictions, while the global class representations aggregate important patterns for each class. These interpretations summarize the knowledge captured by the convolutional clauses, which can be visualized as images. We evaluate our methods on the MNIST and CelebA datasets, using models that achieve 98.5% accuracy on MNIST and an 86.56% F1-score on CelebA (compared to 88.07% for ResNet50), respectively. We show that the TM performs competitively with this deep learning model while maintaining its interpretability, even in large-scale complex training environments. This contributes to a better understanding of TM clauses and provides insights into how these models can be applied to more complex and diverse datasets.

A Comparative Study of Feature Selection in Tsetlin Machines

Aug 09, 2025

Abstract: Feature Selection (FS) is crucial for improving model interpretability, reducing complexity, and sometimes for enhancing accuracy. The recently introduced Tsetlin machine (TM) offers interpretable clause-based learning, but lacks established tools for estimating feature importance. In this paper, we adapt and evaluate a range of FS techniques for TMs, including classical filter and embedded methods as well as post-hoc explanation methods originally developed for neural networks (e.g., SHAP and LIME) and a novel family of embedded scorers derived from TM clause weights and Tsetlin automaton (TA) states. We benchmark all methods across 12 datasets, using evaluation protocols such as Remove and Retrain (ROAR) and Remove and Debias (ROAD) to assess causal impact. Our results show that TM-internal scorers not only perform competitively but also exploit the interpretability of clauses to reveal interacting feature patterns. Simpler TM-specific scorers achieve similar accuracy retention at a fraction of the computational cost. This study establishes the first comprehensive baseline for FS in TMs and paves the way for developing specialized TM-specific interpretability techniques.

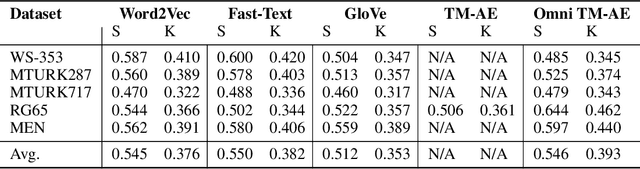

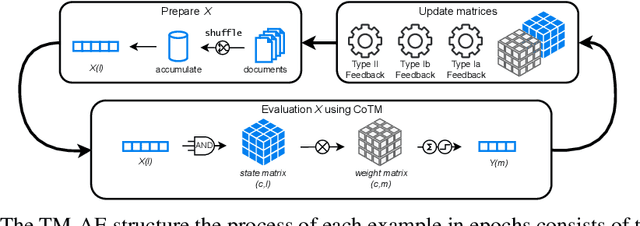

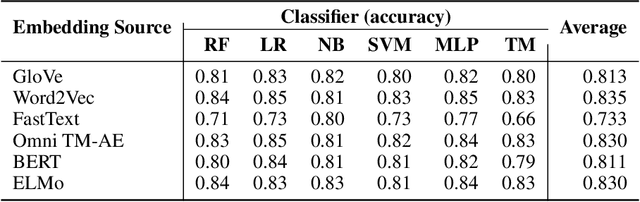

Omni TM-AE: A Scalable and Interpretable Embedding Model Using the Full Tsetlin Machine State Space

May 22, 2025

Abstract: The increasing complexity of large-scale language models has amplified concerns regarding their interpretability and reusability. While traditional embedding models like Word2Vec and GloVe offer scalability, they lack transparency and often behave as black boxes. Conversely, interpretable models such as the Tsetlin Machine (TM) have shown promise in constructing explainable learning systems, though they previously faced limitations in scalability and reusability. In this paper, we introduce Omni Tsetlin Machine AutoEncoder (Omni TM-AE), a novel embedding model that fully exploits the information contained in the TM's state matrix, including literals previously excluded from clause formation. This method enables the construction of reusable, interpretable embeddings through a single training phase. Extensive experiments across semantic similarity, sentiment classification, and document clustering tasks show that Omni TM-AE performs competitively with and often surpasses mainstream embedding models. These results demonstrate that it is possible to balance performance, scalability, and interpretability in modern Natural Language Processing (NLP) systems without resorting to opaque architectures.

An All-digital 65-nm Tsetlin Machine Image Classification Accelerator with 8.6 nJ per MNIST Frame at 60.3k Frames per Second

Jan 31, 2025

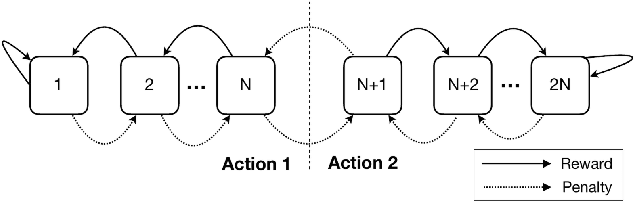

Abstract: We present an all-digital programmable machine learning accelerator chip for image classification, based on Tsetlin machine (TM) principles. The TM is a machine learning algorithm founded on propositional logic, utilizing sub-pattern recognition expressions called clauses. The accelerator implements the coalesced TM version with convolution, and classifies booleanized images of 28$\times$28 pixels into 10 categories. A configuration with 128 clauses is used in a highly parallel architecture. Fast clause evaluation is obtained by keeping all clause weights and Tsetlin automata (TA) action signals in registers. The chip is implemented in a 65 nm low-leakage CMOS technology, and occupies an active area of 2.7 mm$^2$. At a clock frequency of 27.8 MHz, the accelerator achieves 60.3k classifications per second, and consumes 8.6 nJ per classification. The latency for classifying a single image is 25.4 $\mu$s, which includes system timing overhead. The accelerator achieves 97.42%, 84.54% and 82.55% test accuracies for the datasets MNIST, Fashion-MNIST and Kuzushiji-MNIST, respectively, matching the TM software models.
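As a rough sanity check on the figures quoted in this abstract, the sketch below derives the implied average power and the clock-cycle budget per frame. It assumes that the 8.6 nJ figure covers all energy spent per classification and that throughput is sustained; neither assumption is stated in the abstract, and the function names are illustrative only.

```python
# Figures quoted in the abstract above.
ENERGY_PER_FRAME_J = 8.6e-9   # 8.6 nJ per classification
FRAMES_PER_SECOND = 60.3e3    # 60.3k classifications per second
CLOCK_HZ = 27.8e6             # 27.8 MHz clock
LATENCY_S = 25.4e-6           # 25.4 microseconds single-image latency


def average_power_watts(energy_j, rate_hz):
    """Average power, assuming every frame costs the same energy."""
    return energy_j * rate_hz


def cycles_per_frame(clock_hz, rate_hz):
    """Clock cycles available per classified frame at full throughput."""
    return clock_hz / rate_hz


power_w = average_power_watts(ENERGY_PER_FRAME_J, FRAMES_PER_SECOND)
throughput_cycles = cycles_per_frame(CLOCK_HZ, FRAMES_PER_SECOND)
latency_cycles = LATENCY_S * CLOCK_HZ

print(f"implied average power: {power_w * 1e3:.3f} mW")
print(f"cycles per frame at full throughput: {throughput_cycles:.0f}")
print(f"cycles of single-image latency: {latency_cycles:.0f}")
```

Under these assumptions the implied power is roughly half a milliwatt. The single-image latency spans more cycles than the per-frame throughput budget, which is consistent with the abstract's remark that latency includes system timing overhead.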

Adversarial Attacks on AI-Generated Text Detection Models: A Token Probability-Based Approach Using Embeddings

Jan 31, 2025

Abstract: In recent years, text generation tools utilizing Artificial Intelligence (AI) have occasionally been misused across various domains, such as generating student reports or creative writing. This issue prompts plagiarism detection services to enhance their capabilities in identifying AI-generated content. Adversarial attacks are often used to test the robustness of AI-generated text detectors. This work proposes a novel textual adversarial attack on detection models such as Fast-DetectGPT. The method employs embedding models for data perturbation, aiming to reconstruct the AI-generated texts so as to reduce the likelihood that their true origin is detected. Specifically, we employ different embedding techniques for this purpose, including the Tsetlin Machine (TM), an interpretable approach in machine learning. By combining synonyms and embedding similarity vectors, we demonstrate a state-of-the-art reduction in detection scores against Fast-DetectGPT. In particular, on the XSum dataset, the detection score decreased from 0.4431 to 0.2744 AUROC, and on the SQuAD dataset, it dropped from 0.5068 to 0.3532 AUROC.

Scalable Multi-phase Word Embedding Using Conjunctive Propositional Clauses

Jan 31, 2025

Abstract: The Tsetlin Machine (TM) architecture has recently demonstrated effectiveness in Machine Learning (ML), particularly within Natural Language Processing (NLP). It has been utilized to construct word embeddings using conjunctive propositional clauses, thereby significantly enhancing our understanding and interpretation of machine-derived decisions. The previous approach performed the word embedding over a sequence of input words to consolidate the information into a cohesive and unified representation. However, that approach encounters scalability challenges as the input size increases. In this study, we introduce a novel approach incorporating two-phase training to discover contextual embeddings of input sequences. Specifically, this method encapsulates the knowledge for each input word within the dataset's vocabulary, subsequently constructing embeddings for a sequence of input words utilizing the extracted knowledge. This technique not only facilitates the design of a scalable model but also preserves interpretability. Our experimental findings reveal that the proposed method yields competitive performance compared to the previous approaches, with promising results against human-generated benchmarks. Furthermore, we applied the proposed approach to sentiment analysis on the IMDB dataset, where the TM embedding and the TM classifier, along with other interpretable classifiers, offered a transparent end-to-end solution with competitive performance.

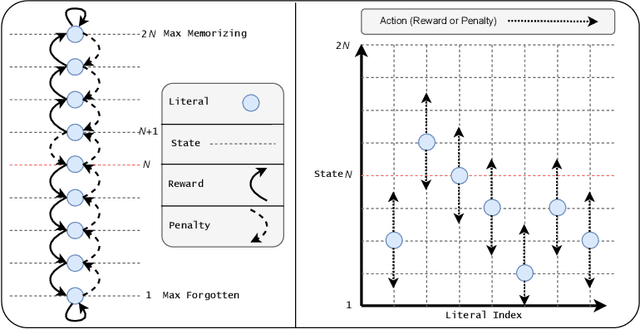

Exploring State Space and Reasoning by Elimination in Tsetlin Machine

Jul 12, 2024

Abstract: The Tsetlin Machine (TM) has gained significant attention in Machine Learning (ML). By employing logical fundamentals, it facilitates pattern learning and representation, offering an alternative approach for developing comprehensible Artificial Intelligence (AI) with a specific focus on pattern classification in the form of conjunctive clauses. In the domain of Natural Language Processing (NLP), the TM is utilised to construct word embeddings and describe target words using clauses. To enhance the descriptive capacity of these clauses, we study the concept of Reasoning by Elimination (RbE) in clause formulation, which involves incorporating feature negations to provide a more comprehensive representation. In more detail, this paper employs the Tsetlin Machine Auto-Encoder (TM-AE) architecture to generate dense word vectors, aiming to capture contextual information by extracting feature-dense vectors for a given vocabulary. Thereafter, the principle of RbE is explored to improve descriptivity and optimise the performance of the TM. Specifically, the specificity parameter s and the voting margin parameter T are leveraged to regulate feature distribution in the state space, resulting in a dense representation of information for each clause. In addition, we investigate the state spaces of TM-AE, especially for the forgotten/excluded features. Empirical investigations on artificially generated data, the IMDB dataset, and the 20 Newsgroups dataset showcase the robustness of the TM, with accuracy reaching 90.62% on IMDB.
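To make the idea of feature negations in a conjunctive clause concrete, here is a minimal sketch of how such a clause could be evaluated on a Boolean bag-of-words vector. The vocabulary, the clause, and the function name are hypothetical illustrations, not taken from the paper, and the sketch covers clause evaluation only, not learning.

```python
def evaluate_clause(features, include_positive, include_negated):
    """Evaluate a conjunctive clause over Boolean features.

    The clause is true when every feature index in `include_positive`
    is 1 and every feature index in `include_negated` is 0.
    """
    positives_hold = all(features[i] == 1 for i in include_positive)
    negations_hold = all(features[i] == 0 for i in include_negated)
    return positives_hold and negations_hold


# Hypothetical toy vocabulary; one Boolean feature per word.
vocabulary = ["good", "great", "bad", "boring"]

# A clause built purely from negations: NOT bad AND NOT boring.
# It describes a pattern by stating what must be absent.
negated = [vocabulary.index("bad"), vocabulary.index("boring")]

doc_a = [1, 0, 0, 0]  # contains "good" only
doc_b = [1, 0, 1, 0]  # contains "good" and "bad"

print(evaluate_clause(doc_a, [], negated))  # clause matches
print(evaluate_clause(doc_b, [], negated))  # clause fails on "bad"
```

The second document fails the clause solely because a negated word is present, which is the sense in which negated literals let a clause characterise a target by what is ruled out.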

Exploring Effects of Hyperdimensional Vectors for Tsetlin Machines

Jun 04, 2024

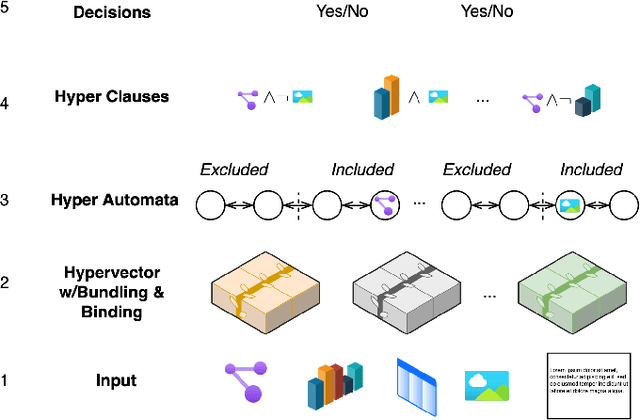

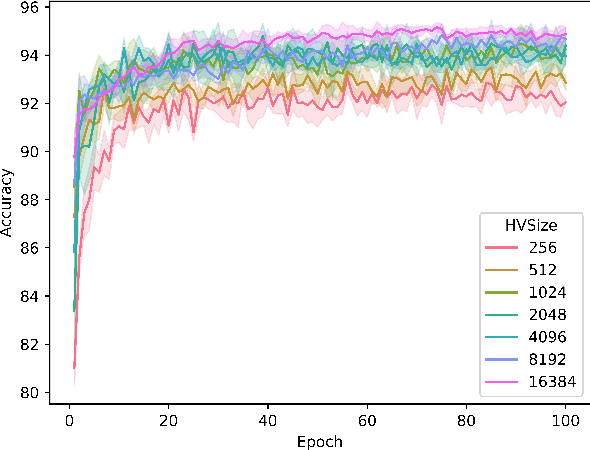

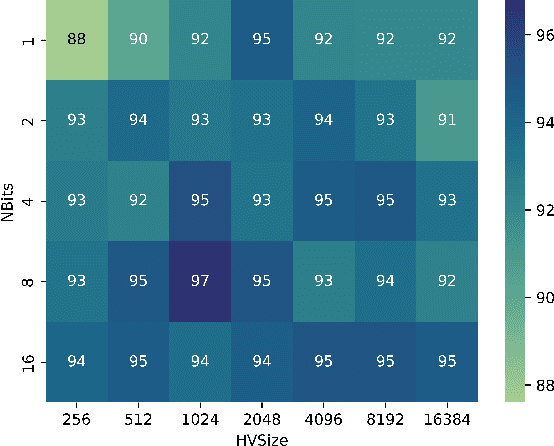

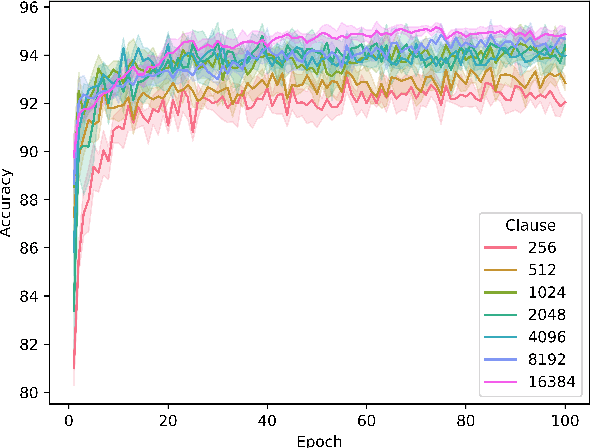

Abstract: Tsetlin machines (TMs) have been successful in several application domains, operating with high efficiency on Boolean representations of the input data. However, Booleanizing complex data structures such as sequences, graphs, images, signal spectra, chemical compounds, and natural language is not trivial. In this paper, we propose a hypervector (HV) based method for expressing arbitrarily large sets of concepts associated with any input data. Using a hyperdimensional space to build vectors drastically expands the capacity and flexibility of the TM. We demonstrate how images, chemical compounds, and natural language text are encoded according to the proposed method, and how the resulting HV-powered TM can achieve significantly higher accuracy and faster learning on well-known benchmarks. Our results open up a new research direction for TMs, namely how to expand and exploit the benefits of operating in hyperspace, including new booleanization strategies, optimization of TM inference and learning, as well as new TM applications.

An Optimized Toolbox for Advanced Image Processing with Tsetlin Machine Composites

Jun 02, 2024

Abstract: The Tsetlin Machine (TM) has achieved competitive results on several image classification benchmarks, including MNIST, K-MNIST, F-MNIST, and CIFAR-2. However, color image classification is arguably still in its infancy for TMs, with CIFAR-10 being a focal point for tracking progress. Over the past few years, TM's CIFAR-10 accuracy has increased from around 61% in 2020 to 75.1% in 2023 with the introduction of Drop Clause. In this paper, we leverage the recently proposed TM Composites architecture and introduce a range of TM Specialists that use various image processing techniques. These include Canny edge detection, Histogram of Oriented Gradients, adaptive mean thresholding, adaptive Gaussian thresholding, Otsu's thresholding, color thermometers, and adaptive color thermometers. In addition, we conduct a rigorous hyperparameter search, where we uncover optimal hyperparameters for several of the TM Specialists. The result is a toolbox that provides new state-of-the-art results on CIFAR-10 for TMs with an accuracy of 82.8%. In conclusion, our toolbox of TM Specialists forms a foundation for new TM applications and a landmark for further research on TM Composites in image analysis.
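For readers unfamiliar with the term, the sketch below illustrates generic thermometer encoding of an RGB pixel into Boolean features. It assumes fixed, evenly spaced thresholds; the exact scheme used by the paper's color thermometers may differ, and the adaptive variant is not attempted here. All function names are illustrative.

```python
def uniform_thresholds(levels, max_value=255):
    """Return `levels` evenly spaced cut points inside [0, max_value]."""
    step = (max_value + 1) / (levels + 1)
    return [step * (i + 1) for i in range(levels)]


def thermometer_encode(value, thresholds):
    """One bit per threshold: 1 if the value exceeds it, else 0."""
    return [1 if value > t else 0 for t in thresholds]


def encode_rgb_pixel(pixel, levels=4):
    """Concatenate the thermometer codes of the R, G and B channels."""
    thresholds = uniform_thresholds(levels)
    bits = []
    for channel_value in pixel:
        bits.extend(thermometer_encode(channel_value, thresholds))
    return bits


# A bright-red pixel: high R, low G, zero B.
print(encode_rgb_pixel((200, 60, 0)))
# [1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0]
```

Brighter channel values switch on more bits from left to right, so the ordering of intensities is preserved in the Boolean representation that a TM operates on.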

Efficient Data Fusion using the Tsetlin Machine

Oct 26, 2023

Abstract: We propose a novel way of assessing and fusing noisy dynamic data using a Tsetlin Machine (TM). Our approach consists of monitoring how the explanations that a TM learns, in the form of logical clauses, change with possible noise in dynamic data. This way, the TM can recognize the noise by lowering the weights of previously learned clauses, or reflect it in the form of new clauses. We also perform a comprehensive experimental study using notably different datasets, which demonstrates the high performance of the proposed approach.
