Oktay Karakuş

Semantic Communities and Boundary-Spanning Lyrics in K-pop: A Graph-Based Unsupervised Analysis

Feb 13, 2026

Abstract: Large-scale lyric corpora present unique challenges for data-driven analysis, including the absence of reliable annotations, multilingual content, and high levels of stylistic repetition. Most existing approaches rely on supervised classification, genre labels, or coarse document-level representations, limiting their ability to uncover latent semantic structure. We present a graph-based framework for unsupervised discovery and evaluation of semantic communities in K-pop lyrics using line-level semantic representations. By constructing a similarity graph over lyric texts and applying community detection, we uncover stable micro-theme communities without genre, artist, or language supervision. We further identify boundary-spanning songs via graph-theoretic bridge metrics and analyse their structural properties. Across multiple robustness settings, boundary-spanning lyrics exhibit higher lexical diversity and lower repetition compared to core community members, challenging the assumption that hook intensity or repetition drives cross-theme connectivity. Our framework is language-agnostic and applicable to unlabeled cultural text corpora.
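
The pipeline described in this abstract (similarity graph, community detection, bridge scoring) can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration: the toy 2-D vectors stand in for lyric embeddings, the 0.75 cosine threshold is arbitrary, label propagation is a swapped-in community detector, and the "share of neighbours in other communities" score is a simple stand-in for the graph-theoretic bridge metrics the abstract mentions but does not specify.

```python
import math
from collections import Counter

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def build_graph(vectors, threshold):
    """Connect two songs when their cosine similarity reaches the threshold."""
    names = list(vectors)
    adj = {n: set() for n in names}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if cosine(vectors[a], vectors[b]) >= threshold:
                adj[a].add(b)
                adj[b].add(a)
    return adj

def label_propagation(adj, max_iter=100):
    """Deterministic label propagation; ties go to the smallest label."""
    labels = {n: i for i, n in enumerate(adj)}
    for _ in range(max_iter):
        changed = False
        for n in adj:
            if not adj[n]:
                continue
            counts = Counter(labels[m] for m in adj[n])
            best = max(counts.values())
            new = min(lab for lab, c in counts.items() if c == best)
            if new != labels[n]:
                labels[n] = new
                changed = True
        if not changed:
            break
    return labels

def outside_share(adj, labels):
    """Fraction of each node's neighbours that sit in a different community."""
    return {
        n: sum(labels[m] != labels[n] for m in adj[n]) / len(adj[n]) if adj[n] else 0.0
        for n in adj
    }

# Hypothetical embeddings: A-C share one theme, D-F another, G sits between.
vectors = {
    "A": (1.0, 0.1), "B": (1.0, 0.0), "C": (0.9, 0.2),
    "D": (0.1, 1.0), "E": (0.0, 1.0), "F": (0.2, 0.9),
    "G": (1.0, 1.0),
}
adj = build_graph(vectors, threshold=0.75)
labels = label_propagation(adj)
scores = outside_share(adj, labels)
top_bridge = max(scores, key=scores.get)
```

In this toy graph, song G links to both themes and receives the highest score; a real analysis would use sentence embeddings and an established community-detection method rather than this simplified loop.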

UniCrop: A Universal, Multi-Source Data Engineering Pipeline for Scalable Crop Yield Prediction

Jan 04, 2026

Abstract: Accurate crop yield prediction relies on diverse data streams, including satellite, meteorological, soil, and topographic information. However, despite rapid advances in machine learning, existing approaches remain crop- or region-specific and require substantial data engineering effort. This limits scalability, reproducibility, and operational deployment. This study introduces UniCrop, a universal and reusable data pipeline designed to automate the acquisition, cleaning, harmonisation, and engineering of multi-source environmental data for crop yield prediction. For any given location, crop type, and temporal window, UniCrop automatically retrieves, harmonises, and engineers over 200 environmental variables (Sentinel-1/2, MODIS, ERA5-Land, NASA POWER, SoilGrids, and SRTM), reducing them to a compact, analysis-ready feature set using a structured feature reduction workflow with minimum redundancy maximum relevance (mRMR). To validate the pipeline, UniCrop was applied to a rice yield dataset comprising 557 field observations. Using only the 15 selected features, four baseline machine learning models (LightGBM, Random Forest, Support Vector Regression, and Elastic Net) were trained. LightGBM achieved the best single-model performance (RMSE = 465.1 kg/ha, $R^2 = 0.6576$), while a constrained ensemble of all baselines further improved accuracy (RMSE = 463.2 kg/ha, $R^2 = 0.6604$). UniCrop contributes a scalable and transparent data-engineering framework that addresses the primary bottleneck in operational crop yield modelling: the preparation of consistent and harmonised multi-source data. By decoupling data specification from implementation and supporting any crop, region, and time frame through simple configuration updates, UniCrop provides a practical foundation for scalable agricultural analytics. The code and implementation documentation are available at https://github.com/CoDIS-Lab/UniCrop.
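
The mRMR step named in this abstract can be sketched as a greedy loop that trades relevance to the target against redundancy with features already chosen. This is a minimal illustration, not UniCrop's implementation: absolute Pearson correlation is assumed as the relevance and redundancy measure (mutual information is also common), and the feature names and values are invented.

```python
import math

def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def mrmr(features, target, k):
    """Greedy mRMR: maximise relevance minus mean redundancy with the picks so far."""
    relevance = {name: abs(pearson(col, target)) for name, col in features.items()}
    selected = []
    while len(selected) < k:
        def score(name):
            if not selected:
                return relevance[name]
            redundancy = sum(
                abs(pearson(features[name], features[s])) for s in selected
            ) / len(selected)
            return relevance[name] - redundancy
        candidates = [n for n in features if n not in selected]
        selected.append(max(candidates, key=score))
    return selected

# Hypothetical data: the target is the sum of two uncorrelated signals,
# and "signal_a_copy" is a near-duplicate of "signal_a".
signal_a = [3, 3, -3, -3, 3, 3, -3, -3]
signal_b = [2, -2, 2, -2, 2, -2, 2, -2]
features = {
    "signal_a": signal_a,
    "signal_a_copy": [3, 3, -3, -3, 3, 3, -3, -2],
    "signal_b": signal_b,
}
target = [a + b for a, b in zip(signal_a, signal_b)]

chosen = mrmr(features, target, k=2)
by_relevance_only = sorted(
    features, key=lambda n: abs(pearson(features[n], target)), reverse=True
)[:2]
```

Ranking by relevance alone keeps the near-duplicate, whereas the mRMR loop skips it in favour of the weaker but complementary feature.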

Adaptive Heterogeneous Mixtures of Normalising Flows for Robust Variational Inference

Oct 02, 2025

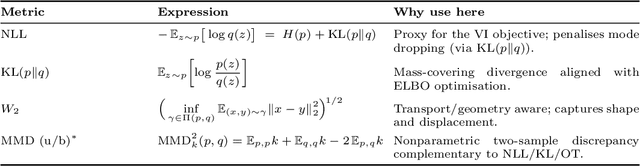

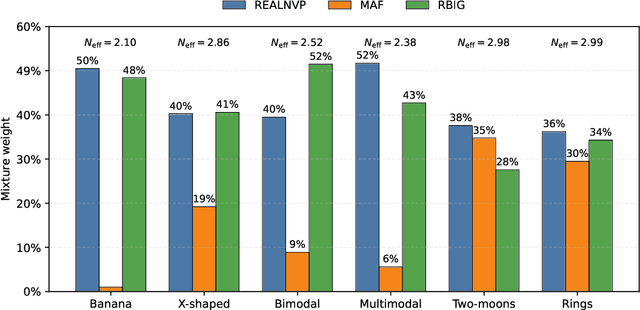

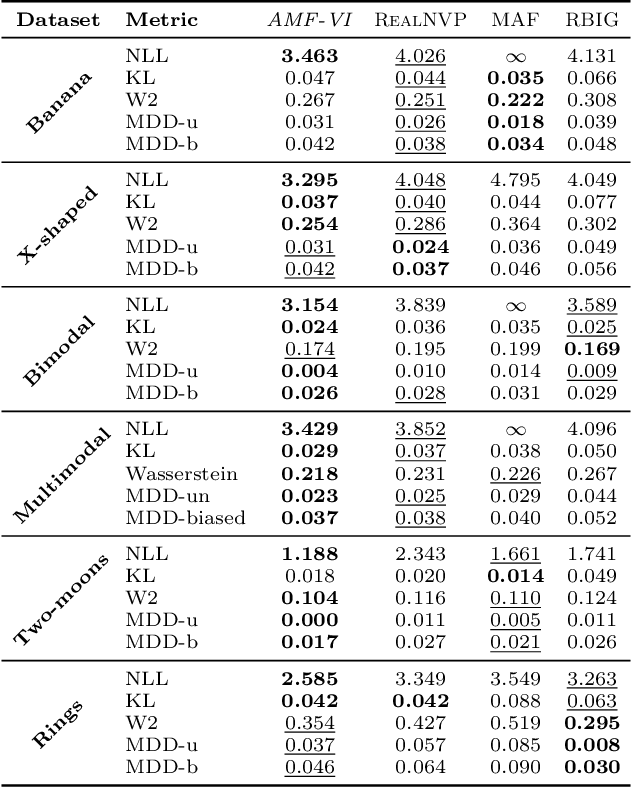

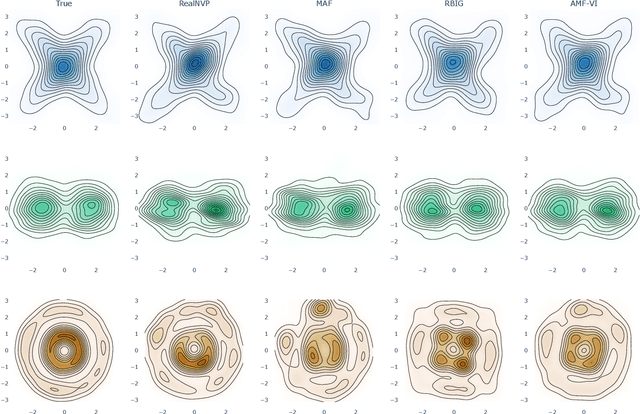

Abstract: Normalising-flow variational inference (VI) can approximate complex posteriors, yet single-flow models often behave inconsistently across qualitatively different distributions. We propose Adaptive Mixture Flow Variational Inference (AMF-VI), a heterogeneous mixture of complementary flows (MAF, RealNVP, RBIG) trained in two stages: (i) sequential expert training of individual flows, and (ii) adaptive global weight estimation via likelihood-driven updates, without per-sample gating or architectural changes. Evaluated on six canonical posterior families (banana, X-shape, two-moons, rings, a bimodal mixture, and a five-mode mixture), AMF-VI achieves consistently lower negative log-likelihood than each single-flow baseline and delivers stable gains in transport metrics (Wasserstein-2) and maximum mean discrepancy (MMD), indicating improved robustness across shapes and modalities. The procedure is efficient and architecture-agnostic, incurring minimal overhead relative to standard flow training, and demonstrates that adaptive mixtures of diverse flows provide a reliable route to robust VI across diverse posterior families whilst preserving each expert's inductive bias.
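
One plausible reading of the second stage, "adaptive global weight estimation via likelihood-driven updates", is an EM-style update of mixture weights over frozen experts. The sketch below assumes that reading; it is not taken from the paper. Fixed 1-D Gaussians stand in for the trained flows, and the sample values are invented.

```python
import math

def gaussian_pdf(x, mean, std):
    """Density of a 1-D Gaussian; a stand-in for a trained flow's density."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))

def fit_global_weights(densities, n_iter=200):
    """EM update of global weights; densities[n][k] = p_k(x_n) for frozen experts."""
    k = len(densities[0])
    w = [1.0 / k] * k
    for _ in range(n_iter):
        totals = [0.0] * k
        for row in densities:
            mix = sum(wj * pj for wj, pj in zip(w, row))
            for j in range(k):
                totals[j] += w[j] * row[j] / mix  # responsibility of expert j
        w = [t / len(densities) for t in totals]
    return w

def mean_nll(densities, w):
    """Average negative log-likelihood of the weighted mixture."""
    return -sum(
        math.log(sum(wj * pj for wj, pj in zip(w, row))) for row in densities
    ) / len(densities)

# Hypothetical bimodal samples and three fixed "experts" (mean, std).
samples = [-2.3, -2.0, -1.7, 1.6, 1.9, 2.0, 2.1, 2.4]
experts = [(-2.0, 1.0), (2.0, 1.0), (0.0, 3.0)]
densities = [[gaussian_pdf(x, m, s) for m, s in experts] for x in samples]

weights = fit_global_weights(densities)
nll_mixture = mean_nll(densities, weights)
nll_single = [
    mean_nll(densities, [1.0 if j == i else 0.0 for j in range(len(experts))])
    for i in range(len(experts))
]
```

Because only one weight vector is learned for the whole dataset, there is no per-sample gating network, which matches the abstract's description; the actual update rule used by AMF-VI may differ.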

Through the Gaps: Uncovering Tactical Line-Breaking Passes with Clustering

Jun 07, 2025

Abstract: Line-breaking passes (LBPs) are crucial tactical actions in football, allowing teams to penetrate defensive lines and access high-value spaces. In this study, we present an unsupervised, clustering-based framework for detecting and analysing LBPs using synchronised event and tracking data from elite matches. Our approach models opponent team shape through vertical spatial segmentation and identifies passes that disrupt defensive lines within open play. Beyond detection, we introduce several tactical metrics, including the space build-up ratio (SBR) and two chain-based variants, LBPCh$^1$ and LBPCh$^2$, which quantify the effectiveness of LBPs in generating immediate or sustained attacking threats. We evaluate these metrics across teams and players in the 2022 FIFA World Cup, revealing stylistic differences in vertical progression and structural disruption. The proposed methodology is explainable, scalable, and directly applicable to modern performance analysis and scouting workflows.
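
The detection idea in this abstract (segment the opponent's shape into lines, then flag passes that cross them) can be sketched as follows. The details are assumptions for illustration only: opponents are clustered into three lines with a simple 1-D k-means on their position along the pitch length, the attack runs toward increasing x, and lateral position is ignored. The coordinates are invented.

```python
def cluster_lines(xs, k=3, n_iter=50):
    """1-D k-means over opponents' positions along the pitch length (k >= 2)."""
    pts = sorted(xs)
    # Deterministic start: evenly spaced order statistics.
    centers = [pts[i * (len(pts) - 1) // (k - 1)] for i in range(k)]
    for _ in range(n_iter):
        groups = [[] for _ in range(k)]
        for x in pts:
            nearest = min(range(k), key=lambda j: abs(x - centers[j]))
            groups[nearest].append(x)
        # Keep the old centre if a cluster ends up empty.
        new = [sum(g) / len(g) if g else centers[j] for j, g in enumerate(groups)]
        if new == centers:
            break
        centers = new
    return sorted(centers)

def lines_broken(pass_start_x, pass_end_x, lines):
    """Lines lying strictly between the pass origin and its forward end point."""
    return [c for c in lines if pass_start_x < c < pass_end_x]

# Hypothetical opponent x-positions in metres: forwards, midfield, defence.
opponent_xs = [40, 42, 55, 56, 57, 58, 74, 75, 76, 77]
lines = cluster_lines(opponent_xs)

short_pass = lines_broken(50, 60, lines)
long_pass = lines_broken(50, 80, lines)
back_pass = lines_broken(60, 50, lines)
```

A fuller treatment would also check that the ball travels between defenders rather than around them, and would restrict detection to open play as the abstract states.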

Core-Set Selection for Data-efficient Land Cover Segmentation

May 02, 2025

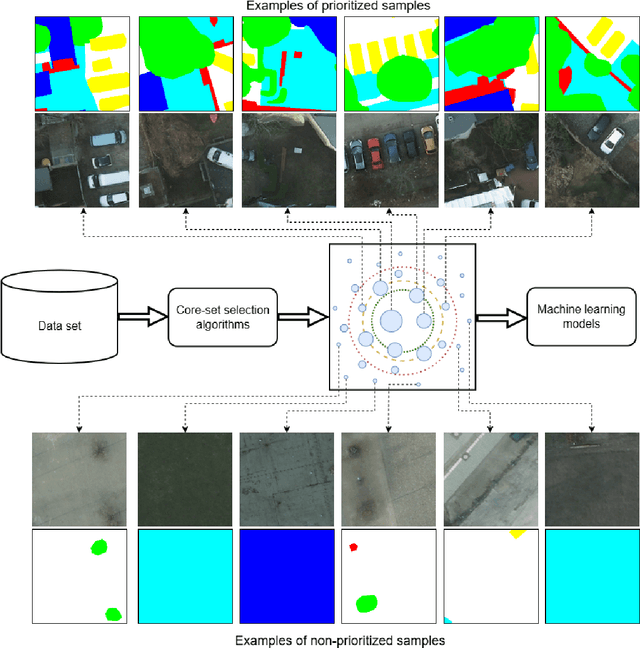

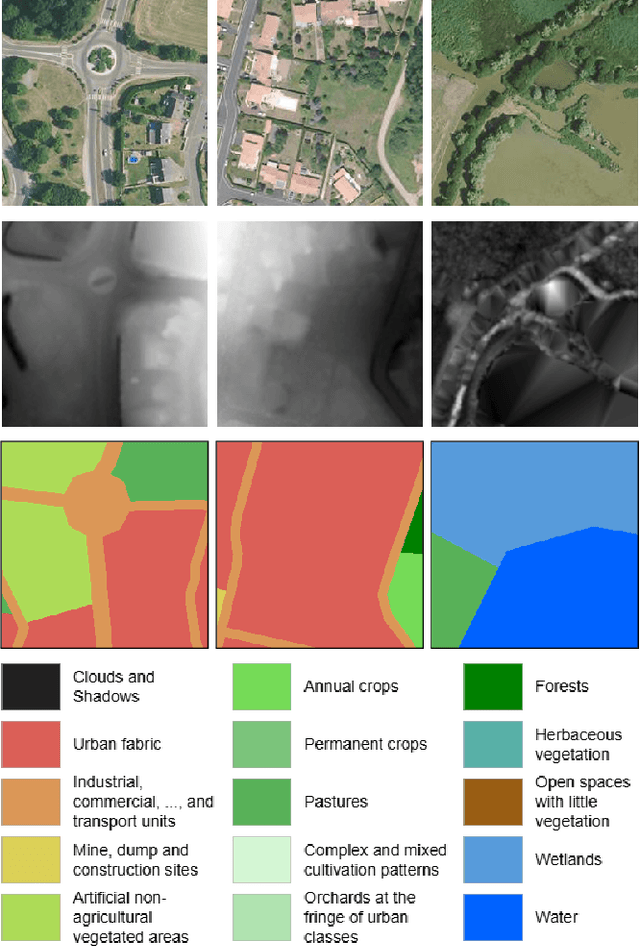

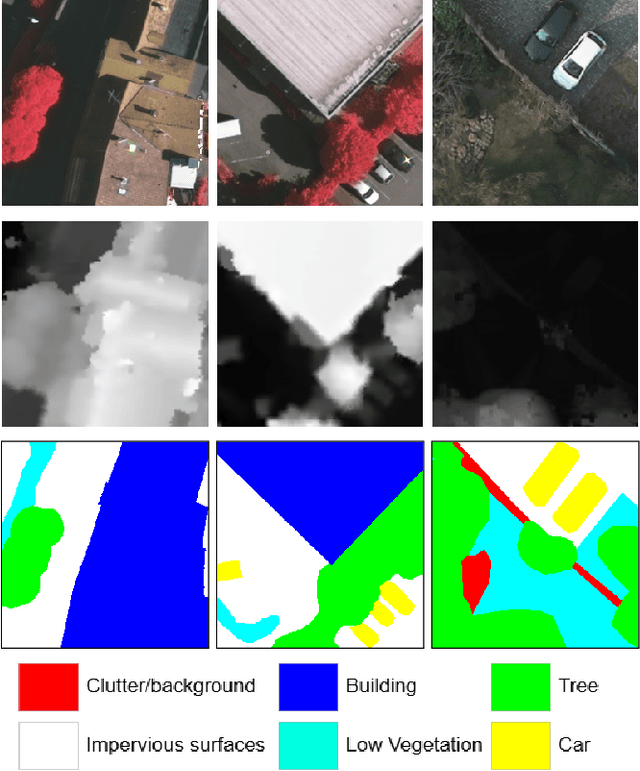

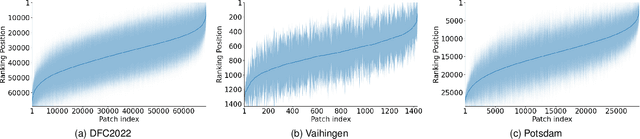

Abstract: The increasing accessibility of remotely sensed data and the potential of such data to inform large-scale decision-making have driven the development of deep learning models for many Earth Observation tasks. Traditionally, such models must be trained on large datasets. However, the common assumption that larger datasets lead to better outcomes tends to overlook the complexities of the data distribution, the potential for introducing biases and noise, and the computational resources required for processing and storing vast datasets. Therefore, effective solutions should consider both the quantity and quality of data. In this paper, we propose six novel core-set selection methods for selecting important subsets of samples from remote sensing image segmentation datasets that rely on imagery only, labels only, or a combination of both. We benchmark these approaches against a random-selection baseline on three commonly used land cover classification datasets: DFC2022, Vaihingen, and Potsdam. In each of the datasets, we demonstrate that training on a subset of samples outperforms the random baseline, and some approaches outperform training on all available data. This result shows the importance and potential of data-centric learning for the remote sensing domain. The code is available at https://github.com/keillernogueira/data-centric-rs-classification/.
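
To make the notion of a label-only core-set concrete, the sketch below ranks samples by the entropy of their label masks and keeps the most class-diverse fraction. This is a hypothetical criterion chosen for illustration; the abstract does not describe the six proposed methods, and this is not claimed to be one of them. Tile names and masks are invented.

```python
import math
from collections import Counter

def label_entropy(mask):
    """Shannon entropy (bits) of the class distribution in a flattened mask."""
    counts = Counter(mask)
    n = len(mask)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def select_core_set(masks, fraction):
    """Keep the given fraction of samples with the highest label entropy."""
    keep = max(1, round(fraction * len(masks)))
    ranked = sorted(masks, key=lambda name: label_entropy(masks[name]), reverse=True)
    return ranked[:keep]

# Hypothetical flattened segmentation masks (class id per pixel).
masks = {
    "tile_a": [0, 0, 0, 0],
    "tile_b": [0, 1, 0, 1],
    "tile_c": [0, 1, 2, 3],
    "tile_d": [0, 0, 0, 1],
}
core_set = select_core_set(masks, fraction=0.5)
```

A random-selection baseline, as used in the benchmark, would instead draw the same number of tiles uniformly at random.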

Multi-modal Data Fusion and Deep Ensemble Learning for Accurate Crop Yield Prediction

Feb 09, 2025

Abstract: This study introduces RicEns-Net, a novel Deep Ensemble model designed to predict crop yields by integrating diverse data sources through multimodal data fusion techniques. The research focuses specifically on the use of synthetic aperture radar (SAR), optical remote sensing data from the Sentinel-1, -2, and -3 satellites, and meteorological measurements such as surface temperature and rainfall. The initial field data for the study were acquired through Ernst & Young's (EY) Open Science Challenge 2023. The primary objective is to enhance the precision of crop yield prediction by developing a machine-learning framework capable of handling complex environmental data. A comprehensive data engineering process was employed to select the most informative features from over 100 potential predictors, reducing the set to 15 features from 5 distinct modalities. This step mitigates the "curse of dimensionality" and enhances model performance. The RicEns-Net architecture combines multiple machine learning algorithms in a deep ensemble framework, integrating the strengths of each technique to improve predictive accuracy. Experimental results demonstrate that RicEns-Net achieves a mean absolute error (MAE) of 341 kg/ha (roughly 5-6% of the lowest average yield in the region), significantly outperforming previous state-of-the-art models, including those developed during the EY challenge.

TopoFormer: Integrating Transformers and ConvLSTMs for Coastal Topography Prediction

Jan 11, 2025

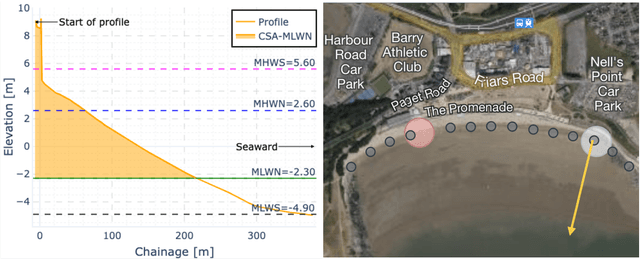

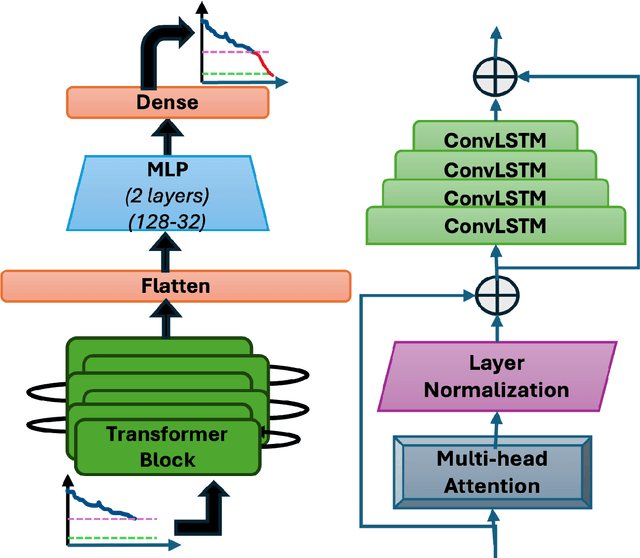

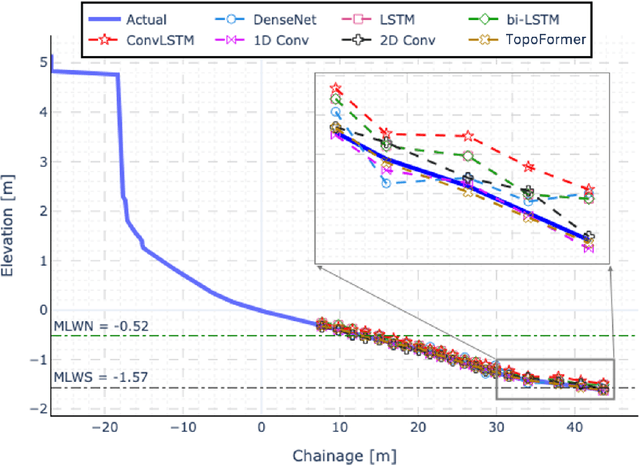

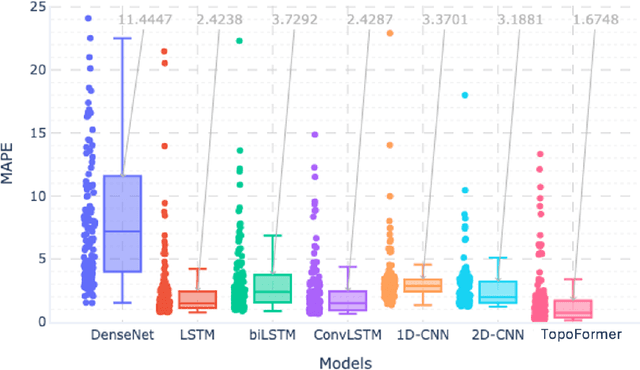

Abstract: This paper presents TopoFormer, a novel hybrid deep learning architecture that integrates transformer-based encoders with convolutional long short-term memory (ConvLSTM) layers for the precise prediction of topographic beach profiles referenced to elevation datums, with a particular focus on Mean Low Water Springs (MLWS) and Mean Low Water Neaps (MLWN). Accurate topographic estimation down to MLWS is critical for coastal management, navigation safety, and environmental monitoring. Leveraging a comprehensive dataset from the Wales Coastal Monitoring Centre (WCMC), consisting of over 2000 surveys across 36 coastal survey units, TopoFormer addresses key challenges in topographic prediction, including temporal variability and data gaps in survey measurements. The architecture combines multi-head attention mechanisms and ConvLSTM layers to capture both long-range dependencies and localized temporal patterns inherent in beach profile data. TopoFormer's predictive performance was evaluated against state-of-the-art models, including DenseNet, 1D/2D CNNs, and LSTMs. While all models demonstrated strong performance, TopoFormer achieved the lowest mean absolute error (MAE), as low as 2 cm, and provided superior accuracy in both in-distribution (ID) and out-of-distribution (OOD) evaluations.

A Multimodal Approach for Fluid Overload Prediction: Integrating Lung Ultrasound and Clinical Data

Sep 13, 2024

Abstract: Managing fluid balance in dialysis patients is crucial, as improper management can lead to severe complications. In this paper, we propose a multimodal approach that integrates visual features from lung ultrasound images with clinical data to enhance the prediction of excess body fluid. Our framework employs independent encoders to extract features for each modality and combines them through a cross-domain attention mechanism to capture complementary information. When the prediction is framed as a classification task, the model achieves significantly better performance than when it is framed as regression. The results demonstrate that multimodal models consistently outperform single-modality models, particularly when attention mechanisms prioritize tabular data. Pseudo-sample generation further helps mitigate the imbalanced classification problem, achieving the highest accuracy of 88.31%. This study underscores the effectiveness of multimodal learning for fluid overload management in dialysis patients, offering valuable insights for improved clinical outcomes.

DUCPS: Deep Unfolding the Cauchy Proximal Splitting Algorithm for B-Lines Quantification in Lung Ultrasound Images

Jul 16, 2024

Abstract: The identification of artefacts, particularly B-lines, in lung ultrasound (LUS) is crucial for assisting clinical diagnosis, prompting the development of innovative methodologies. While the Cauchy proximal splitting (CPS) algorithm has demonstrated effective performance in B-line detection, the process is slow and has limited generalization. This paper addresses these issues with a novel unsupervised deep unfolding network structure (DUCPS). The framework utilizes deep unfolding procedures to merge traditional model-based techniques with deep learning approaches. By unfolding the CPS algorithm into a deep network, DUCPS enables the parameters in the optimization algorithm to be learnable, thus enhancing generalization performance and facilitating rapid convergence. We conducted entirely unsupervised training using the Neighbor2Neighbor (N2N) and the Structural Similarity Index Measure (SSIM) losses. When combined with an improved line identification method proposed in this paper, state-of-the-art performance is achieved, with the recall and F2 score reaching 0.70 and 0.64, respectively. Notably, DUCPS significantly improves computational efficiency and eliminates the need for extensive data labeling, representing a notable advancement over both traditional algorithms and existing deep learning approaches.
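
For readers unfamiliar with the F2 score reported here, the sketch below shows the standard F-beta definition, which weights recall more heavily than precision when beta is 2. The helper `implied_precision` is a hypothetical convenience that inverts the same formula; the abstract itself does not report precision.

```python
def f_beta(precision, recall, beta=2.0):
    """Standard F-beta score; beta > 1 weights recall more than precision."""
    b2 = beta * beta
    denom = b2 * precision + recall
    return (1 + b2) * precision * recall / denom if denom else 0.0

def implied_precision(f_score, recall, beta=2.0):
    """Solve the F-beta formula for precision, given the score and recall."""
    b2 = beta * beta
    return f_score * recall / ((1 + b2) * recall - b2 * f_score)

# Round trip using the rounded figures quoted in the abstract.
p = implied_precision(0.64, 0.70)
check = f_beta(p, 0.70)
```

Taking the rounded recall (0.70) and F2 (0.64) at face value, the formula implies a precision of roughly 0.48; this is a back-of-envelope inference, not a figure from the paper.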

Bayes-xG: Player and Position Correction on Expected Goals using Bayesian Hierarchical Approach

Nov 22, 2023

Abstract: This study employs Bayesian methodologies to explore the influence of player or positional factors in predicting the probability of a shot resulting in a goal, measured by the expected goals (xG) metric. Utilising publicly available data from StatsBomb, Bayesian hierarchical logistic regressions are constructed, analysing approximately 10,000 shots from the English Premier League to ascertain whether positional or player-level effects impact xG. The findings reveal positional effects in a basic model that includes only distance to goal and shot angle as predictors, highlighting that strikers and attacking midfielders exhibit a higher likelihood of scoring. However, these effects diminish when more informative predictors are introduced. Nevertheless, even with additional predictors, player-level effects persist, indicating that certain players possess notable positive or negative xG adjustments, influencing their likelihood of scoring a given chance. The study extends its analysis to data from Spain's La Liga and Germany's Bundesliga, yielding comparable results. Additionally, the paper assesses the impact of prior distribution choices on outcomes, concluding that the priors employed in the models provide sound results but could be refined to improve sampling efficiency, making more complex and extensive models feasible.
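
The structure of the basic model described here (distance and angle as predictors, plus a player-level adjustment) can be sketched as a logistic function with a per-player offset on the log-odds scale. All coefficient values below are made up for illustration; in the study they would be posterior estimates from the hierarchical fit, which this sketch does not perform.

```python
import math

def sigmoid(z):
    """Logistic function mapping log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def xg(distance_m, angle_rad, player_effect=0.0,
       intercept=-1.0, b_distance=-0.1, b_angle=1.2):
    """Shot probability with a player-level offset on the log-odds scale.

    The intercept and slopes are hypothetical placeholders, not fitted values.
    """
    log_odds = intercept + b_distance * distance_m + b_angle * angle_rad + player_effect
    return sigmoid(log_odds)

# Same chance taken by an average, an above-average, and a below-average finisher.
baseline = xg(12.0, 0.6)
strong_finisher = xg(12.0, 0.6, player_effect=0.3)
weak_finisher = xg(12.0, 0.6, player_effect=-0.3)
```

In a hierarchical model the player effects are drawn from a shared distribution, so players with few shots are shrunk toward zero adjustment; the fixed offsets above only show how such an adjustment shifts the xG of a given chance.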
