Nurislam Tursynbek

Unsupervised Discovery of 3D Hierarchical Structure with Generative Diffusion Features

Apr 28, 2023

Abstract:Inspired by recent findings that generative diffusion models learn semantically meaningful representations, we use them to discover the intrinsic hierarchical structure in biomedical 3D images using unsupervised segmentation. We show that features of diffusion models from different stages of a U-Net-based ladder-like architecture capture different hierarchy levels in 3D biomedical images. We design three losses to train a predictive unsupervised segmentation network that encourages the decomposition of 3D volumes into meaningful nested subvolumes that represent a hierarchy. First, we pretrain 3D diffusion models and use the consistency of their features across subvolumes. Second, we use the visual consistency between subvolumes. Third, we use the invariance to photometric augmentations as a regularizer. Our models achieve better performance than prior unsupervised structure discovery approaches on challenging biologically-inspired synthetic datasets and on a real-world brain tumor MRI dataset.

Smoothed Embeddings for Certified Few-Shot Learning

Feb 02, 2022

Abstract:Randomized smoothing is considered to be the state-of-the-art provable defense against adversarial perturbations. However, it heavily exploits the fact that classifiers map input objects to class probabilities and does not address models that learn a metric space in which classification is performed by computing distances to embeddings of class prototypes. In this work, we extend randomized smoothing to few-shot learning models that map inputs to normalized embeddings. We provide an analysis of the Lipschitz continuity of such models and derive a robustness certificate against $\ell_2$-bounded perturbations that may be useful in few-shot learning scenarios. Our theoretical results are confirmed by experiments on different datasets.

CC-Cert: A Probabilistic Approach to Certify General Robustness of Neural Networks

Sep 22, 2021

Abstract:In safety-critical machine learning applications, it is crucial to defend models against adversarial attacks -- small modifications of the input that change the predictions. Besides rigorously studied $\ell_p$-bounded additive perturbations, recently proposed semantic perturbations (e.g. rotation, translation) raise a serious concern about deploying ML systems in the real world. Therefore, it is important to provide provable guarantees for deep learning models against semantically meaningful input transformations. In this paper, we propose a new universal probabilistic certification approach based on Chernoff-Cramer bounds that can be used in general attack settings. We estimate the probability that a model fails if the attack is sampled from a certain distribution. Our theoretical findings are supported by experimental results on different datasets.

Robustness Threats of Differential Privacy

Dec 14, 2020

Abstract:Differential privacy is a powerful, gold-standard concept for measuring and guaranteeing privacy in data analysis. It is well-known that differential privacy reduces the model's accuracy. However, it is unclear how it affects the security of the model from a robustness point of view. In this paper, we empirically observe an interesting trade-off between differential privacy and the security of neural networks. Standard neural networks are vulnerable to input perturbations, either adversarial attacks or common corruptions. We experimentally demonstrate that networks trained with differential privacy might, in some settings, be even more vulnerable than their non-private versions. To explore this, we extensively study different robustness measurements, including FGSM and PGD adversaries, distance to linear decision boundaries, curvature profile, and performance on a corrupted dataset. Finally, we study how the main ingredients of differentially private neural network training, such as gradient clipping and noise addition, affect (decrease and increase) the robustness of the model.

Adversarial Turing Patterns from Cellular Automata

Nov 18, 2020

Abstract:State-of-the-art deep classifiers are intriguingly vulnerable to universal adversarial perturbations: single disturbances of small magnitude that lead to misclassification of most inputs. This phenomenon may potentially result in a serious security problem. Despite the extensive research in this area, there is a lack of theoretical understanding of the structure of these perturbations. In the image domain, there is a certain visual similarity between the patterns that represent these perturbations and classical Turing patterns, which appear as solutions of non-linear partial differential equations and are the underlying concept of many processes in nature. In this paper, we provide a theoretical bridge between these two different theories by mapping a simplified algorithm for crafting universal perturbations to (inhomogeneous) cellular automata, which are known to generate Turing patterns. Furthermore, we propose to use Turing patterns, generated by cellular automata, as universal perturbations, and experimentally show that they significantly degrade the performance of deep learning models. We found this method to be a fast and efficient way to create a data-agnostic quasi-imperceptible perturbation in the black-box scenario.

Black-Box Face Recovery from Identity Features

Jul 30, 2020

Abstract:In this work, we present a novel algorithm based on iterative sampling of random Gaussian blobs for black-box face recovery, given only an output feature vector of deep face recognition systems. We attack the state-of-the-art face recognition system (ArcFace) to test our algorithm. Another network with a different architecture (FaceNet) is used as an independent critic, showing that the target person can be identified with the reconstructed image even with no access to the attacked model. Furthermore, our algorithm requires significantly fewer queries than the state-of-the-art solution.

Follow the bisector: a simple method for multi-objective optimization

Jul 14, 2020

Abstract:This study presents a novel Equiangular Direction Method (EDM) to solve a multi-objective optimization problem. We consider optimization problems, where multiple differentiable losses have to be minimized. The presented method computes descent direction in every iteration to guarantee equal relative decrease of objective functions. This descent direction is based on the normalized gradients of the individual losses. Therefore, it is appropriate to solve multi-objective optimization problems with multi-scale losses. We test the proposed method on the imbalanced classification problem and multi-task learning problem, where standard datasets are used. EDM is compared with other methods to solve these problems.
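
The idea of a direction built from normalized gradients can be illustrated with a small sketch. It is not the paper's implementation: the function name `equiangular_direction` is hypothetical, and "equal relative decrease" is interpreted here, as an assumption, as requiring the same cosine between the direction and every gradient. The gradients are also assumed to be linearly independent.

```python
import numpy as np

def equiangular_direction(grads):
    """Return a unit vector that makes the same angle with every gradient.

    grads: array-like of shape (m, n), one gradient per row.
    Assumption: the rows are linearly independent.
    """
    G = np.asarray(grads, dtype=float)
    # Normalize each gradient so that the scale of a loss does not matter.
    U = G / np.linalg.norm(G, axis=1, keepdims=True)
    # Look for d = U^T a such that U d = 1, i.e. the same inner product
    # with every unit gradient.
    a = np.linalg.solve(U @ U.T, np.ones(len(U)))
    d = U.T @ a
    return d / np.linalg.norm(d)

# Two losses whose gradients differ in scale by a factor of 100.
g1 = np.array([10.0, 0.0])
g2 = np.array([0.0, 0.1])
d = equiangular_direction([g1, g2])

# Cosine between d and each gradient; the two values coincide.
cosines = [g @ d / np.linalg.norm(g) for g in (g1, g2)]
```

With two gradients, `d` is the bisector of the angle between them, and a descent step would move along `-d`. Because only the normalized gradients enter the computation, a loss with a much larger gradient norm does not dominate the direction.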

Geometry-Inspired Top-k Adversarial Perturbations

Jun 28, 2020

Abstract:State-of-the-art deep learning models are untrustworthy due to their vulnerability to adversarial examples. Intriguingly, besides simple adversarial perturbations, there exist Universal Adversarial Perturbations (UAPs), which are input-agnostic perturbations that lead to misclassification of the majority of inputs. The main target of existing adversarial examples (including UAPs) is to replace the correct Top-1 predicted class with an incorrect one, which does not guarantee changing the Top-k prediction. However, in many real-world scenarios dealing with digital data, Top-k predictions are more important. We propose an effective geometry-inspired method of computing Top-k adversarial examples for any k. We evaluate its effectiveness and efficiency by comparing it with other adversarial example crafting techniques. Based on this method, we propose Top-k Universal Adversarial Perturbations, image-agnostic tiny perturbations that cause the true class to be absent from the Top-k predictions. We experimentally show that our approach outperforms baseline methods and even improves existing techniques of generating UAPs.

Data Driven Chiller Plant Energy Optimization with Domain Knowledge

Dec 03, 2018

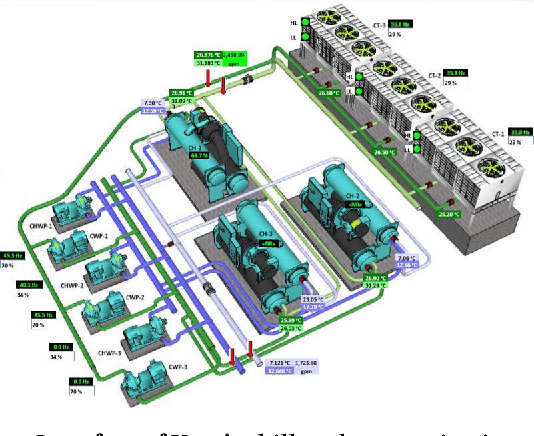

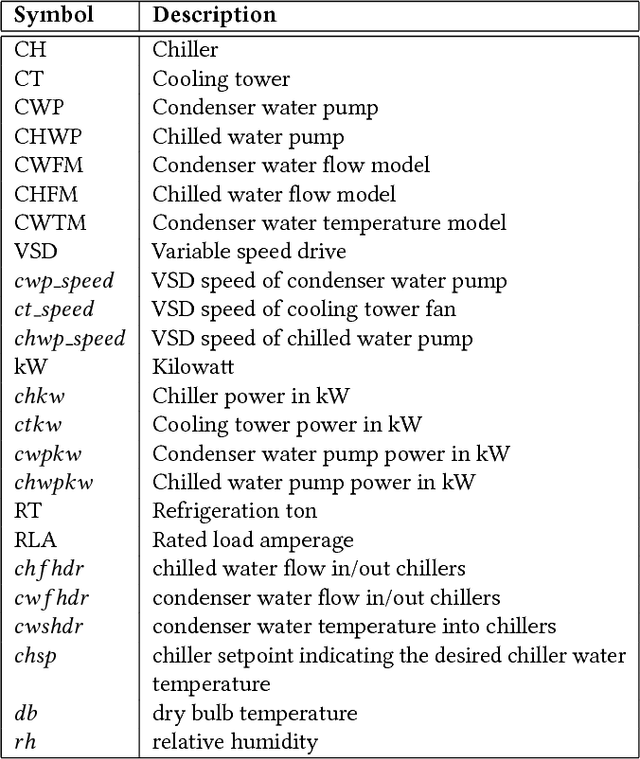

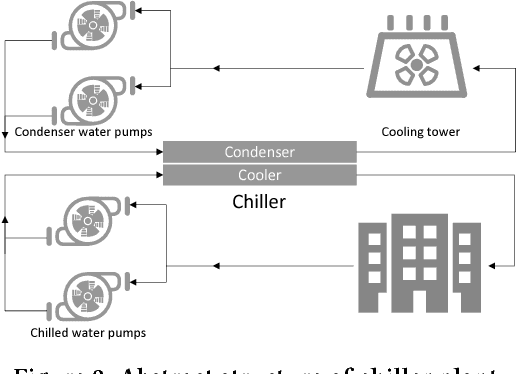

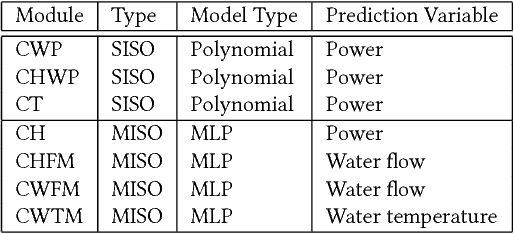

Abstract:Refrigeration and chiller optimization is an important and well-studied topic in mechanical engineering, mostly relying on physical models of the equipment that are built on over-simplified assumptions. Conventional optimization techniques using physical models make online parameter tuning decisions based on very limited information about hardware specifications and external conditions, e.g., outdoor weather. In recent years, a new generation of sensors has become an essential part of new chiller plants, for the first time allowing system administrators to continuously monitor the running status of all equipment in a timely and accurate way. The explosive growth of data flowing to databases, together with the increasing analytical power of machine learning and data mining, unveils new possibilities for data-driven approaches to real-time chiller plant optimization. This paper presents our research and industrial experience on the adoption of data models and optimizations on chiller plants and discusses the lessons learnt from our practice on real-world plants. Instead of employing complex machine learning models, we emphasize the incorporation of appropriate domain knowledge into data analysis tools, which turns out to be the key performance improver over state-of-the-art deep learning techniques by a significant margin. Our empirical evaluation on a real-world chiller plant achieves savings of more than 7% on daily power consumption.
