Nikita Kasatkin

From Broadcast to Minimap: Achieving State-of-the-Art SoccerNet Game State Reconstruction

Apr 08, 2025

Abstract: Game State Reconstruction (GSR), a critical task in Sports Video Understanding, involves precise tracking and localization of all individuals on the football field (players, goalkeepers, referees, and others) in real-world coordinates. This capability enables coaches and analysts to derive actionable insights into player movements, team formations, and game dynamics, ultimately optimizing training strategies and enhancing competitive advantage. Achieving accurate GSR using a single-camera setup is highly challenging due to frequent camera movements, occlusions, and dynamic scene content. In this work, we present a robust end-to-end pipeline for tracking players across an entire match using a single-camera setup. Our solution integrates a fine-tuned YOLOv5m for object detection, a SegFormer-based camera parameter estimator, and a DeepSORT-based tracking framework enhanced with re-identification, orientation prediction, and jersey number recognition. By ensuring both spatial accuracy and temporal consistency, our method delivers state-of-the-art game state reconstruction, securing first place in the SoccerNet Game State Reconstruction Challenge 2024 and significantly outperforming competing methods.

SoccerNet 2024 Challenges Results

Sep 16, 2024

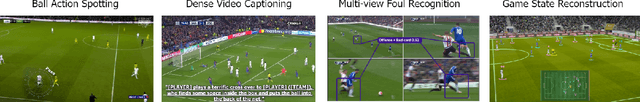

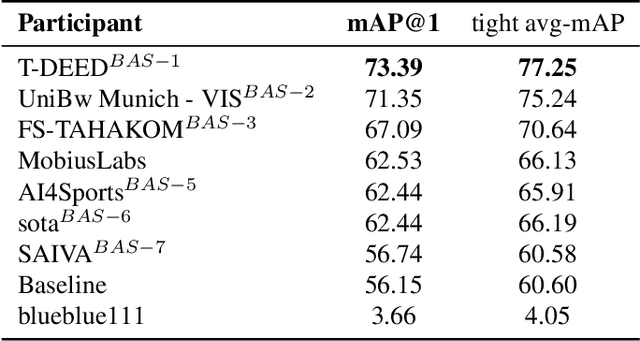

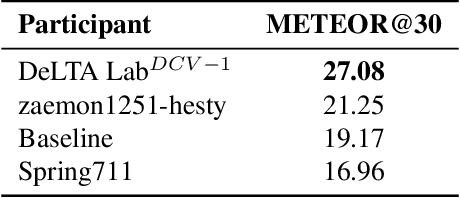

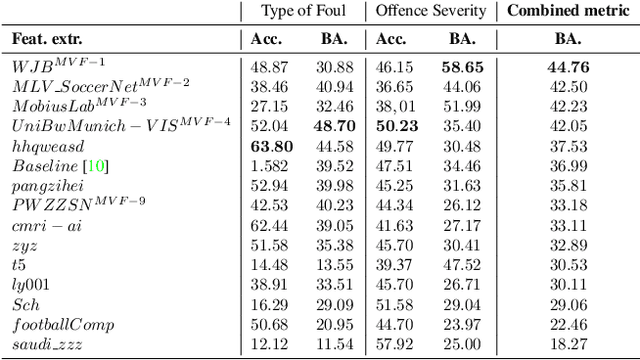

Abstract: The SoccerNet 2024 challenges represent the fourth annual video understanding challenges organized by the SoccerNet team. These challenges aim to advance research across multiple themes in football, including broadcast video understanding, field understanding, and player understanding. This year, the challenges encompass four vision-based tasks: (1) Ball Action Spotting, focusing on precisely localizing when and which soccer actions related to the ball occur; (2) Dense Video Captioning, focusing on describing the broadcast with natural language and anchored timestamps; (3) Multi-View Foul Recognition, a novel task focusing on analyzing multiple viewpoints of a potential foul incident to classify whether a foul occurred and assess its severity; and (4) Game State Reconstruction, another novel task focusing on reconstructing the game state from broadcast videos onto a 2D top-view map of the field. Detailed information about the tasks, challenges, and leaderboards can be found at https://www.soccer-net.org, with baselines and development kits available at https://github.com/SoccerNet.

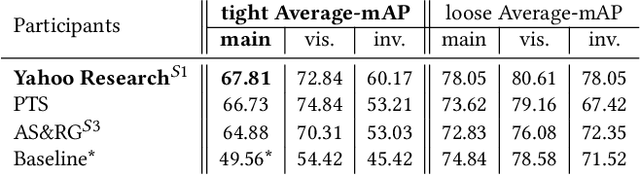

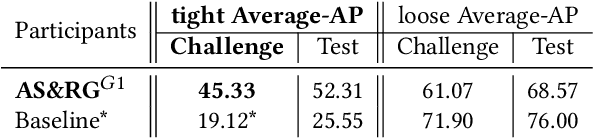

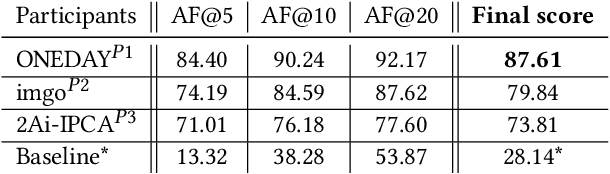

SoccerNet 2022 Challenges Results

Oct 05, 2022

Abstract: The SoccerNet 2022 challenges were the second annual video understanding challenges organized by the SoccerNet team. In 2022, the challenges were composed of six vision-based tasks: (1) action spotting, focusing on retrieving action timestamps in long untrimmed videos, (2) replay grounding, focusing on retrieving the live moment of an action shown in a replay, (3) pitch localization, focusing on detecting line and goal part elements, (4) camera calibration, dedicated to retrieving the intrinsic and extrinsic camera parameters, (5) player re-identification, focusing on retrieving the same players across multiple views, and (6) multiple object tracking, focusing on tracking players and the ball through unedited video streams. Compared to the previous year's challenges, tasks (1-2) had their evaluation metrics redefined to consider tighter temporal accuracies, and tasks (3-6) were novel, including their underlying data and annotations. More information on the tasks, challenges, and leaderboards is available at https://www.soccer-net.org. Baselines and development kits are available at https://github.com/SoccerNet.
