Ngoc-Son Vu

Memory-efficient Continual Learning with Neural Collapse Contrastive

Dec 05, 2024

Abstract: Contrastive learning has significantly improved representation quality, enhancing knowledge transfer across tasks in continual learning (CL). However, catastrophic forgetting remains a key challenge, as contrastive-based methods primarily focus on "soft relationships" or "softness" between samples, which shift with changing data distributions and lead to representation overlap across tasks. Recently, the newly identified Neural Collapse phenomenon has shown promise in CL by focusing on "hard relationships" or "hardness" between samples and fixed prototypes. However, this approach overlooks "softness", which is crucial for capturing intra-class variability, and its rigid focus can also pull old class representations toward current ones, increasing forgetting. Building on these insights, we propose Focal Neural Collapse Contrastive (FNC2), a novel representation learning loss that effectively balances both soft and hard relationships. Additionally, we introduce the Hardness-Softness Distillation (HSD) loss to progressively preserve the knowledge gained from these relationships across tasks. Our method outperforms state-of-the-art approaches, particularly in minimizing memory reliance. Remarkably, even without the use of memory, our approach rivals rehearsal-based methods, offering a compelling solution for data privacy concerns.
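
To make the notion of fixed prototypes concrete: in the Neural Collapse literature, class prototypes are commonly arranged as a simplex equiangular tight frame (ETF), where every pair of unit-norm prototypes has cosine similarity -1/(K-1) for K classes. The sketch below builds such a frame in pure Python. The abstract does not specify how FNC2 constructs its prototypes, so this is an assumption for illustration only, and the names `simplex_etf` and `cosine` are hypothetical.

```python
import math


def simplex_etf(num_classes):
    """Return num_classes unit-norm prototypes forming a simplex ETF.

    Uses the standard construction sqrt(K/(K-1)) * (I - (1/K) * ones),
    embedded in K dimensions (an assumption; any rotation of it also works).
    """
    k = num_classes
    if k < 2:
        raise ValueError("need at least two classes")
    scale = math.sqrt(k / (k - 1))
    return [
        [scale * ((1.0 if i == j else 0.0) - 1.0 / k) for j in range(k)]
        for i in range(k)
    ]


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Four classes: every pair of distinct prototypes should sit at cosine -1/3.
prototypes = simplex_etf(4)
pairwise = cosine(prototypes[0], prototypes[1])
```

Because the prototypes are fixed in advance and maximally separated, a "hard" loss can pull each sample toward its class prototype without the targets drifting as new tasks arrive.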

SeeABLE: Soft Discrepancies and Bounded Contrastive Learning for Exposing Deepfakes

Nov 21, 2022

Abstract: Modern deepfake detectors have achieved encouraging results when training and test images are drawn from the same collection. However, when these detectors are applied to faces manipulated using an unknown technique, considerable performance drops are typically observed. In this work, we propose a novel deepfake detector, called SeeABLE, that formalizes the detection problem as a (one-class) out-of-distribution detection task and generalizes better to unseen deepfakes. Specifically, SeeABLE uses a novel data augmentation strategy to synthesize fine-grained local image anomalies (referred to as soft discrepancies) and pushes those pristine disrupted faces towards predefined prototypes using a novel regression-based bounded contrastive loss. To strengthen the generalization performance of SeeABLE to unknown deepfake types, we generate a rich set of soft discrepancies and train the detector (i) to localize which part of the face was modified and (ii) to identify the alteration type. In extensive experiments on widely used datasets, SeeABLE considerably outperforms existing detectors, with gains of up to +10% in detection accuracy over SoTA methods on the DFDC-preview dataset, while using a simpler model. Code will be made publicly available.

Anomaly Detection via Multi-Scale Contrasted Memory

Nov 16, 2022

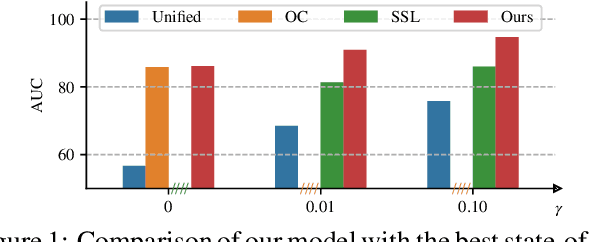

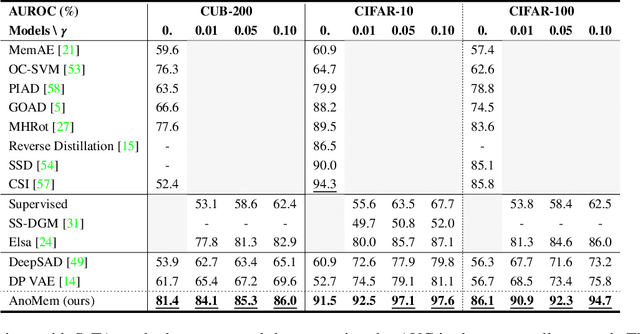

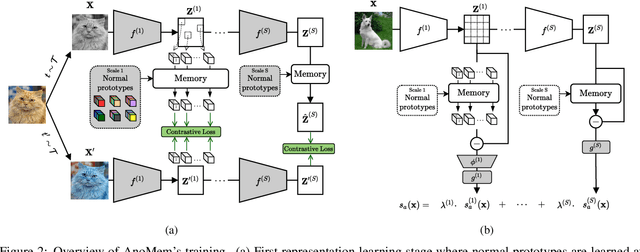

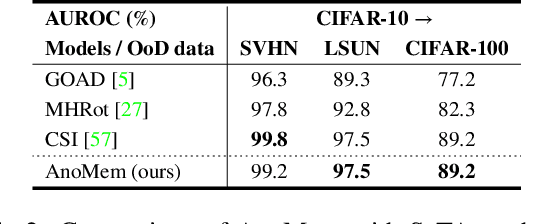

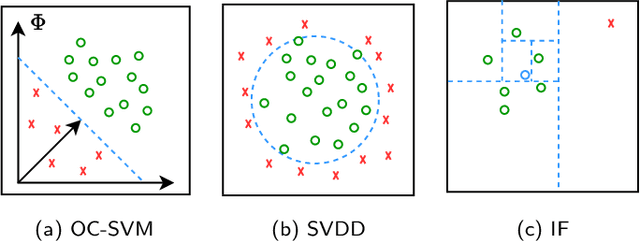

Abstract: Deep anomaly detection (AD) aims to provide robust and efficient classifiers for one-class and unbalanced settings. However, current AD models still struggle on edge-case normal samples and are often unable to maintain high performance across different scales of anomalies. Moreover, there is currently no unified framework that efficiently covers both one-class and unbalanced learning. In light of these limitations, we introduce a new two-stage anomaly detector which memorizes multi-scale normal prototypes during training to compute an anomaly deviation score. First, we simultaneously learn representations and memory modules on multiple scales using a novel memory-augmented contrastive learning. Then, we train an anomaly distance detector on the spatial deviation maps between prototypes and observations. Our model substantially improves the state-of-the-art performance on a wide range of object, style and local anomalies, with up to 35% relative error improvement on CIFAR-10. It is also the first model to maintain high performance across the one-class and unbalanced settings.

Efficient Anomaly Detection Using Self-Supervised Multi-Cue Tasks

Nov 24, 2021

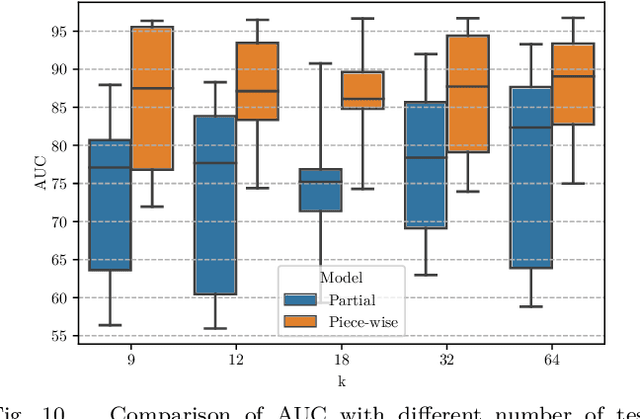

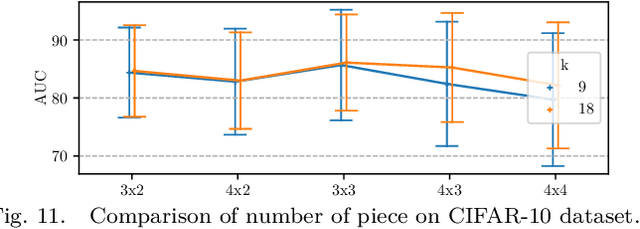

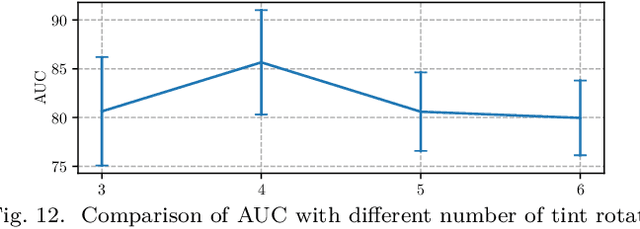

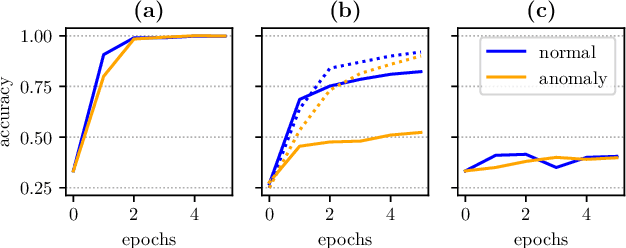

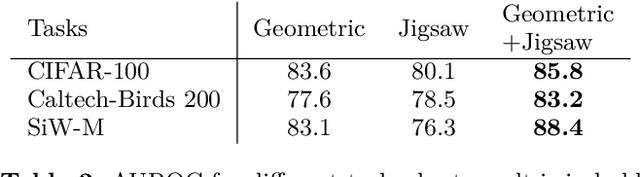

Abstract: Deep anomaly detection has proven to be an efficient and robust approach in several fields. The introduction of self-supervised learning has greatly helped many methods, including anomaly detection, where simple geometric transformation recognition tasks are used. However, these methods do not perform well on fine-grained problems since they lack finer features and are usually highly dependent on the anomaly type. In this paper, we explore each step of self-supervised anomaly detection with pretext tasks. First, we introduce novel discriminative and generative tasks which focus on different visual cues. A piece-wise jigsaw puzzle task focuses on structure cues, while a tint rotation recognition task is used on each piece for colorimetry and a partial re-colorization task is performed. In order for the re-colorization task to focus more on the object than on the background, we propose to include the contextual color information of the image border. Then, we present a new out-of-distribution detection function and highlight its better stability compared to other out-of-distribution detection methods. Along with it, we also experiment with different score fusion functions. Finally, we evaluate our method on a comprehensive anomaly detection protocol composed of object anomalies with classical object recognition, style anomalies with fine-grained classification and local anomalies with face anti-spoofing datasets. Our model can more accurately learn highly discriminative features using these self-supervised tasks. It outperforms the state of the art with up to 36% relative error improvement on object anomalies and 40% on face anti-spoofing problems.

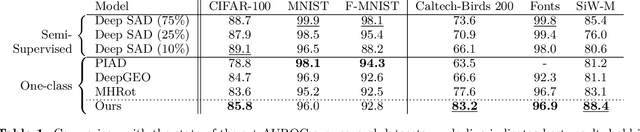

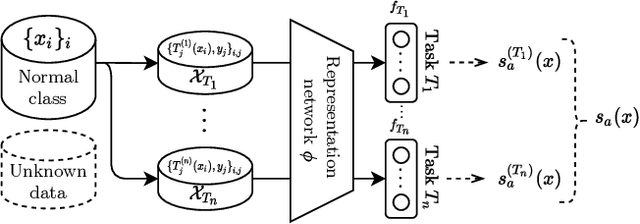

Fine-grained Anomaly Detection via Multi-task Self-Supervision

Apr 20, 2021

Abstract: Detecting anomalies using deep learning has become a major challenge in recent years and is increasingly promising in several fields. The introduction of self-supervised learning has greatly helped many methods, including anomaly detection, where simple geometric transformation recognition tasks are used. However, these methods do not perform well on fine-grained problems since they lack finer features. By combining, in a multi-task framework, a task oriented toward high-scale shape features with a task oriented toward low-scale fine features, our method greatly improves fine-grained anomaly detection. It outperforms the state of the art with up to 31% relative error reduction measured with AUROC on various anomaly detection problems.
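
A "relative error reduction measured with AUROC" is usually read as follows: treat 1 - AUROC as the error, then report the fraction of the baseline error that the new model removes. The abstract does not spell out this definition, so the sketch below assumes it. The function name and the AUROC values are hypothetical and are not results from the paper.

```python
def relative_error_reduction(auroc_baseline, auroc_new):
    """Fraction of the baseline error (1 - AUROC) removed by the new model."""
    err_base = 1.0 - auroc_baseline
    err_new = 1.0 - auroc_new
    if err_base <= 0.0:
        raise ValueError("baseline AUROC must be below 1.0")
    return (err_base - err_new) / err_base


# Hypothetical numbers for illustration only: the error drops from 0.100
# to 0.069, which is a relative reduction of about 31%.
reduction = relative_error_reduction(0.90, 0.931)
```

Under this reading, a large relative reduction can correspond to a small absolute AUROC gain when the baseline is already strong.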

Improving Texture Categorization with Biologically Inspired Filtering

Nov 30, 2013

Abstract: Within the domain of texture classification, a lot of effort has been spent on local descriptors, leading to many powerful algorithms. However, preprocessing techniques have received much less attention despite their important potential for improving the overall classification performance. We address this question by proposing a novel, simple, yet very powerful biologically-inspired filtering (BF) which simulates the performance of the human retina. In the proposed approach, given a texture image, after applying a DoG filter to detect the "edges", we first split the filtered image into two "maps" alongside the sides of its edges. The feature extraction step is then carried out on the two "maps" instead of the input image. Our algorithm has several advantages such as simplicity, robustness to illumination and noise, and discriminative power. Experimental results on three large texture databases show that, at an extremely low computational cost, the proposed method significantly improves the performance of many texture classification systems, notably in noisy environments. The source code of the proposed algorithm can be downloaded from https://sites.google.com/site/nsonvu/code.
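
The two-map idea can be sketched in a few lines. The version below applies a difference-of-Gaussians (DoG) filter and then splits the response by sign, so that one map holds the positive side of each edge and the other the negative side. Splitting by sign is an assumption about what "alongside the sides of its edges" means. The sigma values, the replicate-border handling and all function names are illustrative, and this is not the authors' released code.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma))
    weights = [math.exp(-(x * x) / (2 * sigma * sigma))
               for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(values, kernel):
    """Convolve a 1-D sequence with the kernel, replicating border values."""
    radius = len(kernel) // 2
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)
            acc += w * values[j]
        out.append(acc)
    return out


def gaussian_blur(image, sigma):
    """Separable Gaussian blur of a 2-D list of floats (rows, then columns)."""
    kernel = gaussian_kernel(sigma)
    rows = [blur_1d(row, kernel) for row in image]
    cols = [blur_1d(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


def dog_two_maps(image, sigma_inner=1.0, sigma_outer=2.0):
    """Return (positive, negative) half-wave rectified DoG responses."""
    narrow = gaussian_blur(image, sigma_inner)
    wide = gaussian_blur(image, sigma_outer)
    dog = [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(narrow, wide)]
    positive = [[max(v, 0.0) for v in row] for row in dog]
    negative = [[max(-v, 0.0) for v in row] for row in dog]
    return positive, negative


# Toy image: dark left half, bright right half, so one vertical edge.
image = [[0.0] * 8 + [1.0] * 8 for _ in range(8)]
pos_map, neg_map = dog_two_maps(image)
```

On this toy image the bright side of the edge shows up only in `pos_map` and the dark side only in `neg_map`. A texture descriptor would then be computed on each map separately, as the abstract describes.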
