Anomaly Detection via Multi-Scale Contrasted Memory


Nov 16, 2022

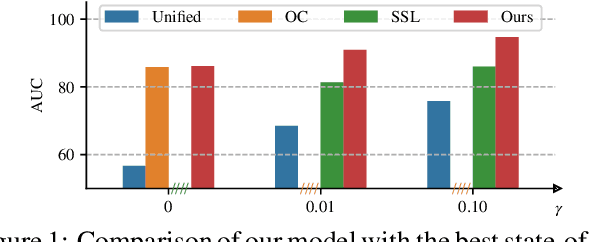

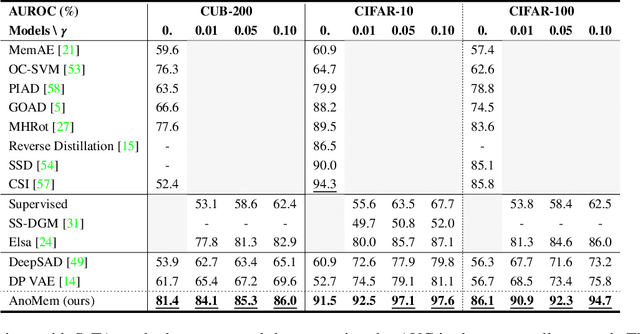

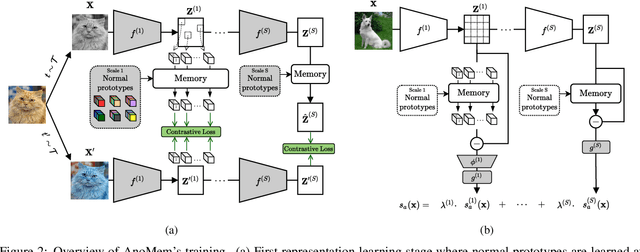

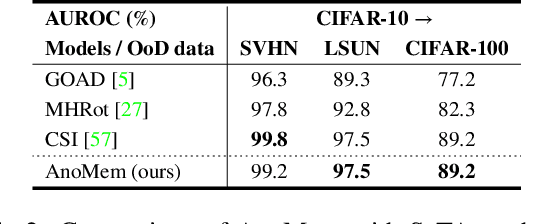

Deep anomaly detection (AD) aims to provide robust and efficient classifiers for one-class and unbalanced settings. However, current AD models still struggle with edge-case normal samples and often fail to maintain high performance across different scales of anomalies. Moreover, no unified framework currently covers both one-class and unbalanced learning efficiently. In light of these limitations, we introduce a new two-stage anomaly detector that memorizes multi-scale normal prototypes during training and uses them to compute an anomaly deviation score. First, we simultaneously learn representations and memory modules at multiple scales using a novel memory-augmented contrastive learning scheme. Then, we train an anomaly distance detector on the spatial deviation maps between prototypes and observations. Our model substantially improves state-of-the-art performance on a wide range of object, style, and local anomalies, with up to 35% relative error improvement on CIFAR-10. It is also the first model to maintain high performance across both the one-class and unbalanced settings.
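To make the idea of a spatial deviation map between prototypes and observations more concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the paper's implementation: the function names `deviation_map` and `anomaly_score` are hypothetical, the prototypes are passed in as plain arrays rather than learned with memory-augmented contrastive learning, Euclidean distance to the nearest prototype is an assumed choice of deviation, and the trained anomaly distance detector of the second stage is replaced by a fixed aggregation (maximum over locations, mean over scales).

```python
import numpy as np


def deviation_map(features, prototypes):
    """Distance from each spatial location to its nearest normal prototype.

    features:   (H, W, D) feature map at one scale (assumed layout).
    prototypes: (K, D) memory of normal prototypes at the same scale.
    Returns an (H, W) deviation map.
    """
    h, w, d = features.shape
    flat = features.reshape(-1, d)                      # (H*W, D)
    diff = flat[:, None, :] - prototypes[None, :, :]    # (H*W, K, D)
    dists = np.sqrt((diff ** 2).sum(axis=-1))           # (H*W, K)
    return dists.min(axis=1).reshape(h, w)


def anomaly_score(feature_maps, memories):
    """Stand-in for the trained detector: max deviation per scale, averaged.

    feature_maps and memories are lists with one entry per scale.
    """
    per_scale = [deviation_map(f, m).max() for f, m in zip(feature_maps, memories)]
    return float(np.mean(per_scale))


# Toy data: two scales with different resolutions and feature dimensions.
rng = np.random.default_rng(0)
memories = [rng.normal(size=(4, 8)), rng.normal(size=(4, 16))]

# A "normal" observation: every location coincides with some prototype.
normal = [m[rng.integers(0, 4, size=(s, s))] for m, s in zip(memories, (8, 4))]

# A "local anomaly": perturb a single location at the finest scale.
anomalous = [f.copy() for f in normal]
anomalous[0][2, 3] += 5.0

print(anomaly_score(normal, memories), anomaly_score(anomalous, memories))
```

In this toy setup the perturbed location is the only one with a nonzero entry in the fine-scale deviation map, which is why a per-location map (rather than a single global distance) helps localize small anomalies.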
