Loic Jezequel

Anomaly Detection via Multi-Scale Contrasted Memory

Nov 16, 2022

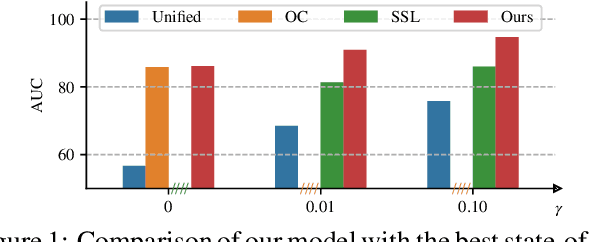

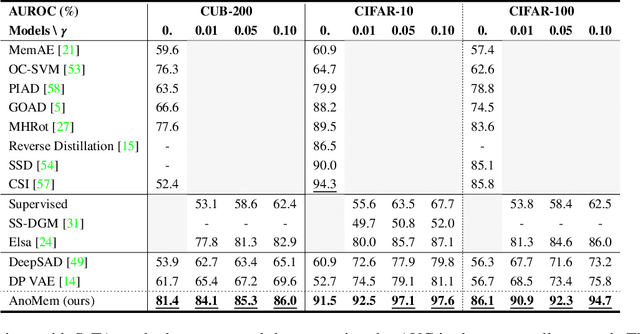

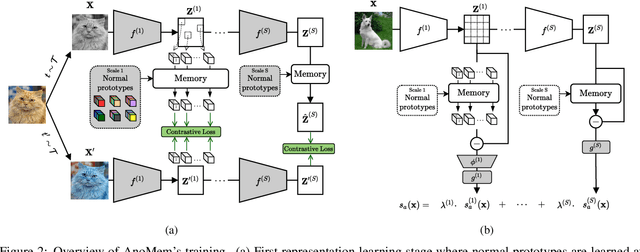

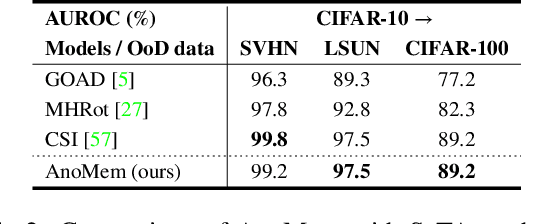

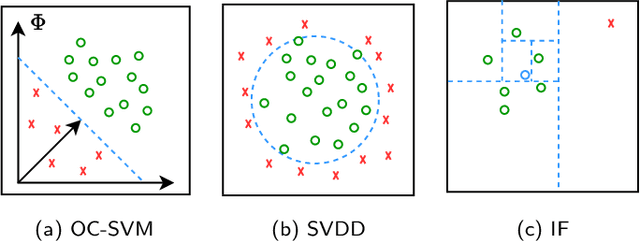

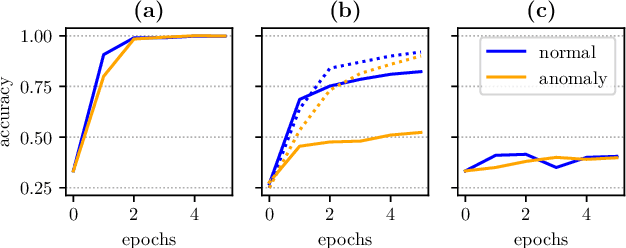

Abstract: Deep anomaly detection (AD) aims to provide robust and efficient classifiers for one-class and unbalanced settings. However, current AD models still struggle on edge-case normal samples and often fail to maintain high performance across different scales of anomalies. Moreover, no unified framework yet covers both one-class and unbalanced learning efficiently. In light of these limitations, we introduce a new two-stage anomaly detector that memorizes multi-scale normal prototypes during training and uses them to compute an anomaly deviation score. First, we simultaneously learn representations and memory modules on multiple scales using a novel memory-augmented contrastive learning scheme. Then, we train an anomaly distance detector on the spatial deviation maps between prototypes and observations. Our model substantially improves on state-of-the-art performance across a wide range of object, style, and local anomalies, with up to 35% relative error improvement on CIFAR-10. It is also the first model to maintain high performance across both the one-class and unbalanced settings.
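To make the idea of a spatial deviation map between prototypes and observations concrete, here is a minimal NumPy sketch. It is not the authors' implementation. It assumes a fixed set of already-learned prototypes per scale and uses Euclidean distance to the nearest prototype. The learned second-stage detector is replaced by a plain average, and the names `deviation_map` and `anomaly_score` are hypothetical.

```python
import numpy as np

def deviation_map(features, prototypes):
    """Distance from each spatial location to its nearest prototype.

    features:   array of shape (H, W, D), one feature vector per location
    prototypes: array of shape (K, D), memorized "normal" prototypes
    returns:    array of shape (H, W)
    """
    # Broadcast to (H, W, K, D), then reduce over D to get all pairwise distances.
    diffs = features[:, :, None, :] - prototypes[None, None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)  # (H, W, K)
    return dists.min(axis=-1)               # nearest prototype per location

def anomaly_score(features_per_scale, prototypes_per_scale):
    """Assumed aggregation: mean deviation per scale, averaged over scales.

    The abstract describes a trained detector operating on these maps;
    the average here is only a stand-in for illustration.
    """
    per_scale = [
        deviation_map(f, p).mean()
        for f, p in zip(features_per_scale, prototypes_per_scale)
    ]
    return float(np.mean(per_scale))
```

With prototypes at `[0, 0]` and `[1, 1]`, a feature map made entirely of `[0, 0]` vectors scores zero, while the same map with one location moved to `[5, 5]` scores strictly higher, which is the behavior a deviation-based score is meant to capture.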

Efficient Anomaly Detection Using Self-Supervised Multi-Cue Tasks

Nov 24, 2021

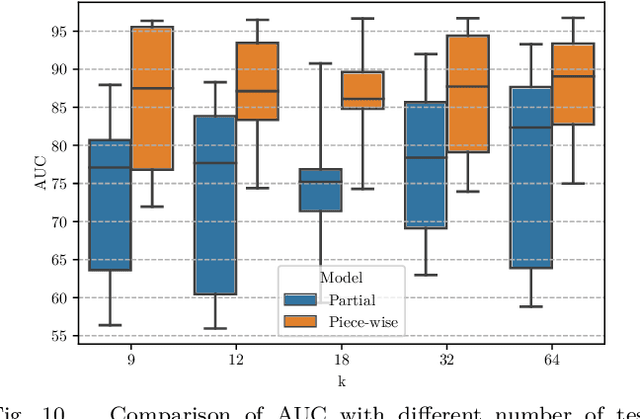

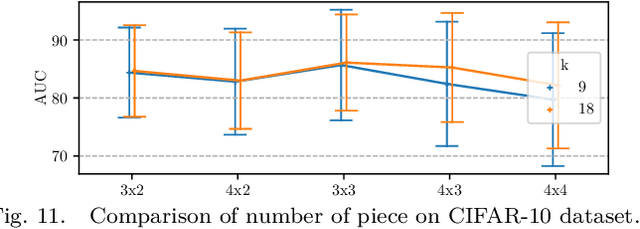

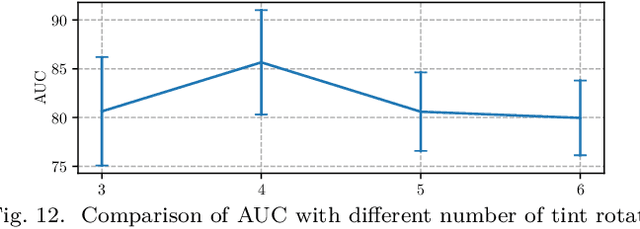

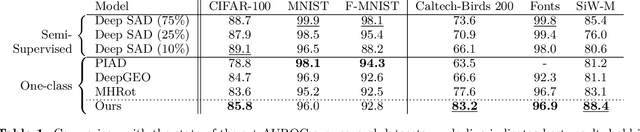

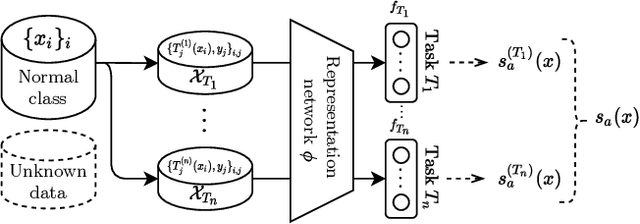

Abstract: Deep anomaly detection has proven to be an efficient and robust approach in several fields. The introduction of self-supervised learning has greatly helped many methods, including anomaly detection, where simple geometric transformation recognition tasks are used. However, these methods do not perform well on fine-grained problems, since they lack finer features and are usually highly dependent on the anomaly type. In this paper, we explore each step of self-supervised anomaly detection with pretext tasks. First, we introduce novel discriminative and generative tasks that focus on different visual cues. A piece-wise jigsaw puzzle task focuses on structural cues, a tint rotation recognition task applied to each piece targets colorimetry, and a partial re-colorization task is also performed. So that the re-colorization task focuses more on the object than on the background, we propose to include the contextual color information of the image border. Then, we present a new out-of-distribution detection function and highlight its better stability compared to other out-of-distribution detection methods. Alongside it, we also experiment with different score fusion functions. Finally, we evaluate our method on a comprehensive anomaly detection protocol composed of object anomalies with classical object recognition datasets, style anomalies with fine-grained classification datasets, and local anomalies with face anti-spoofing datasets. Our model can more accurately learn highly discriminative features using these self-supervised tasks. It outperforms the state of the art with up to 36% relative error improvement on object anomalies and 40% on face anti-spoofing problems.
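As an illustration of what a jigsaw puzzle pretext task can look like, the following NumPy sketch splits an image into a grid of pieces and reorders them according to a permutation. The index of that permutation then serves as the classification label. This is a generic jigsaw formulation, not the paper's piece-wise variant. The function name `make_jigsaw_sample`, the 2x2 grid, and the use of all 24 permutations are assumptions made for the example.

```python
import itertools
import numpy as np

# All 24 orderings of the four pieces of a 2x2 grid (assumed label set).
PERMS = list(itertools.permutations(range(4)))

def make_jigsaw_sample(image, grid, perm):
    """Split an (H, W, C) image into grid x grid pieces and reorder them.

    perm[i] is the index (row-major) of the original piece placed at slot i.
    Assumes H and W are divisible by grid; any remainder is cropped.
    """
    h, w = image.shape[0] // grid, image.shape[1] // grid
    pieces = [
        image[r * h:(r + 1) * h, c * w:(c + 1) * w]
        for r in range(grid)
        for c in range(grid)
    ]
    shuffled = [pieces[i] for i in perm]
    # Reassemble the shuffled pieces row by row.
    rows = [
        np.concatenate(shuffled[r * grid:(r + 1) * grid], axis=1)
        for r in range(grid)
    ]
    return np.concatenate(rows, axis=0)

# Usage: pick a permutation; its index is the self-supervised label.
image = np.arange(4 * 4 * 3).reshape(4, 4, 3)
label = 6
puzzle = make_jigsaw_sample(image, grid=2, perm=PERMS[label])
```

A network trained to predict `label` from `puzzle` has to reason about how the pieces fit together, which is why such tasks are associated with structural cues.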

Fine-grained Anomaly Detection via Multi-task Self-Supervision

Apr 20, 2021

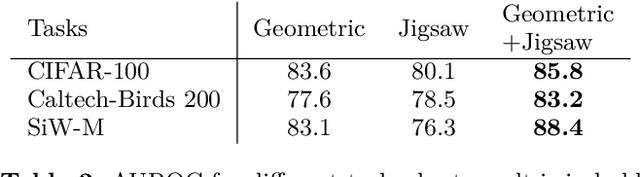

Abstract: Detecting anomalies using deep learning has become a major challenge over the last few years, and is becoming increasingly promising in several fields. The introduction of self-supervised learning has greatly helped many methods, including anomaly detection, where simple geometric transformation recognition tasks are used. However, these methods do not perform well on fine-grained problems, since they lack finer features. By combining, in a multi-task framework, a task oriented toward high-scale shape features with a task oriented toward low-scale fine features, our method greatly improves fine-grained anomaly detection. It outperforms the state of the art with up to 31% relative error reduction, measured with AUROC, on various anomaly detection problems.
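For readers unfamiliar with the metric, a relative error reduction measured with AUROC can be computed as sketched below. The sketch assumes the error is defined as 1 - AUROC, which the abstract does not spell out, and the two AUROC values in the usage lines are hypothetical numbers chosen for illustration, not results from the paper.

```python
def relative_error_reduction(auroc_baseline, auroc_new):
    """Fraction of the baseline error removed by the new model.

    Assumes error = 1 - AUROC, with both AUROC values in [0, 1)
    so that the baseline error is nonzero.
    """
    err_base = 1.0 - auroc_baseline
    err_new = 1.0 - auroc_new
    return (err_base - err_new) / err_base

# Hypothetical values: a baseline AUROC of 0.900 and a new AUROC of 0.931.
# The error drops from 0.100 to 0.069, a reduction of roughly 31%.
reduction = relative_error_reduction(0.900, 0.931)
```

Under this definition, a gain of only a few AUROC points can still be a large relative reduction when the baseline is already close to 1.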
