Nathan O. Lambert

MBRL-Lib: A Modular Library for Model-based Reinforcement Learning

Apr 20, 2021

Abstract: Model-based reinforcement learning is a compelling framework for data-efficient learning of agents that interact with the world. This family of algorithms has many subcomponents that need to be carefully selected and tuned. As a result, the barrier to entry for researchers approaching the field, and for practitioners deploying it in real-world tasks, can be daunting. In this paper, we present MBRL-Lib, a PyTorch-based machine learning library for model-based reinforcement learning in continuous state-action spaces. MBRL-Lib is designed as a platform both for researchers, who can easily develop, debug, and compare new algorithms, and for non-expert users, for whom it lowers the barrier to deploying state-of-the-art algorithms. MBRL-Lib is open-source at https://github.com/facebookresearch/mbrl-lib.

Learning Accurate Long-term Dynamics for Model-based Reinforcement Learning

Dec 16, 2020

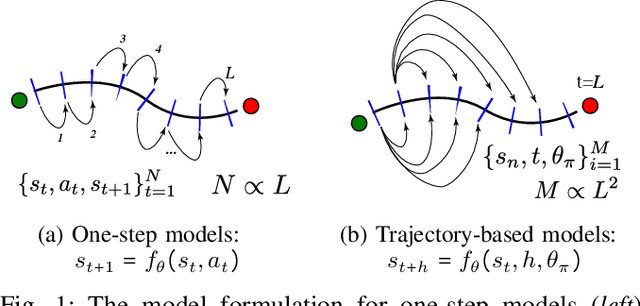

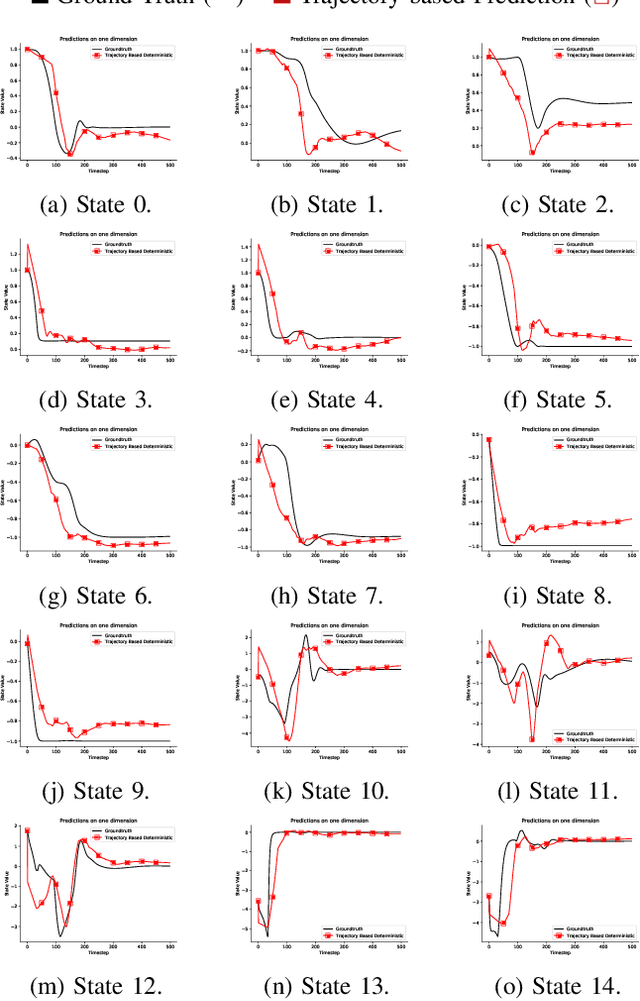

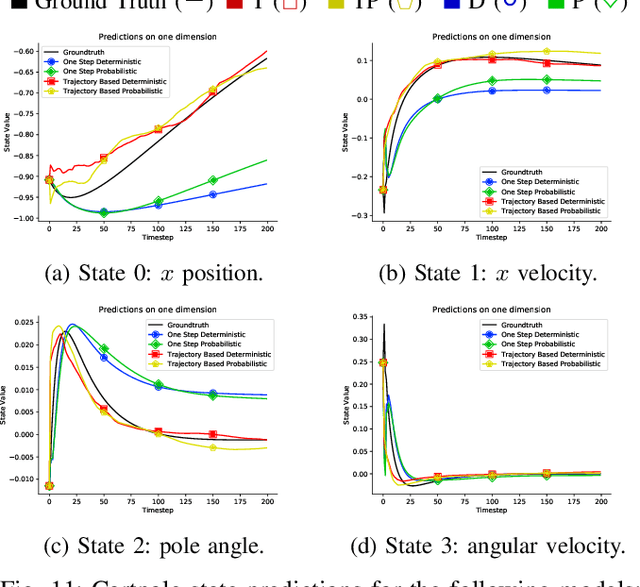

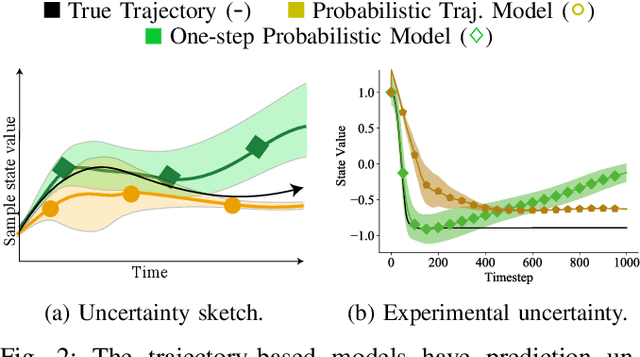

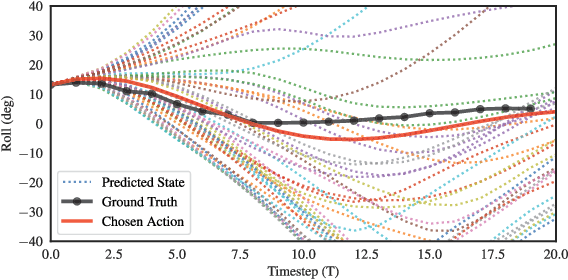

Abstract: Accurately predicting the dynamics of robotic systems is crucial for model-based control and reinforcement learning. The most common way to estimate dynamics is to fit a one-step-ahead prediction model and use it to recursively propagate the predicted state distribution over long horizons. Unfortunately, this approach is known to compound even small prediction errors, making long-term predictions inaccurate. In this paper, we propose a new parametrization of supervised learning on state-action data that predicts stably at longer horizons, which we call a trajectory-based model. This trajectory-based model takes an initial state, a future time index, and control parameters as inputs, and predicts the state at the future time. Our results in simulated and experimental robotic tasks show that our trajectory-based models yield significantly more accurate long-term predictions, improved sample efficiency, and the ability to predict task reward.
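The compounding effect described in this abstract can be illustrated with a toy example. The sketch below is not the paper's model: it assumes a hypothetical scalar system under a proportional controller, a one-step model whose parameter `a_hat` is off by 1%, and a closed-form `trajectory_model` that only stands in for the interface (initial state, time index, control parameter) of a learned trajectory-based model. All names and constants are illustrative assumptions.

```python
def true_step(state, gain, a=1.0, b=0.1):
    """Toy scalar dynamics under a proportional controller u = -gain * state."""
    control = -gain * state
    return a * state + b * control


def learned_step(state, gain, a_hat=1.01, b=0.1):
    """Hypothetical one-step model: same form, but a_hat is 1% too large."""
    control = -gain * state
    return a_hat * state + b * control


def rollout(step_fn, s0, gain, horizon):
    """Recursively apply a one-step function, feeding predictions back in."""
    states = [s0]
    for _ in range(horizon):
        states.append(step_fn(states[-1], gain))
    return states


def trajectory_model(s0, t, gain, a=1.0, b=0.1):
    """Interface of a trajectory-based model: (s0, t, control params) -> s_t.

    Assumption: this is the closed-form solution of the toy system, used
    only as a stand-in for a learned model, so it is exact by construction.
    """
    return s0 * (a - b * gain) ** t


horizon = 50
truth = rollout(true_step, 1.0, 1.0, horizon)
recursive = rollout(learned_step, 1.0, 1.0, horizon)

# Relative error of the recursive rollout at every time index.
rel_err = [abs(p - q) / abs(q) for p, q in zip(recursive, truth)]

# A single query at the final time index; no intermediate predictions needed.
direct = trajectory_model(1.0, horizon, 1.0)
```

In this toy setting the relative error of the recursive rollout grows geometrically with the horizon, because each step multiplies the previous error by the ratio of the modeled to the true closed-loop factor. The direct query avoids feeding predictions back into the model, which is the structural property the abstract highlights; how accurate a learned trajectory-based model is in practice depends on training and is not shown here.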

Learning for Microrobot Exploration: Model-based Locomotion, Sparse-robust Navigation, and Low-power Deep Classification

Apr 27, 2020

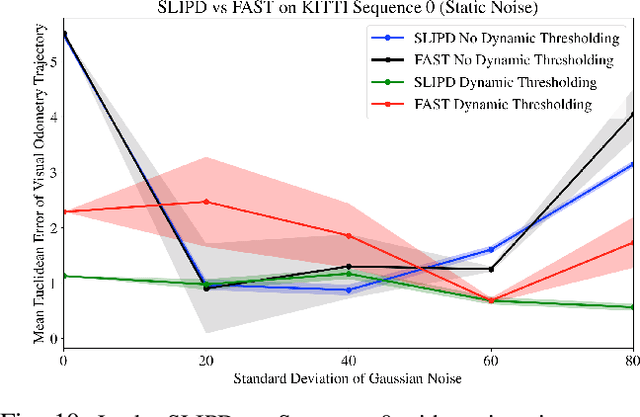

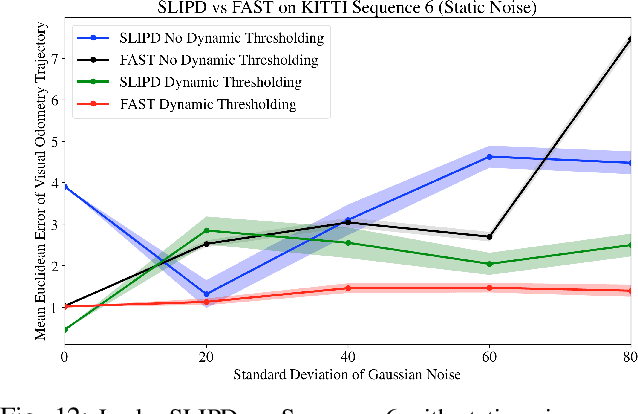

Abstract: Building intelligent autonomous systems at any scale is challenging. The sensing and computation constraints of a microrobot platform make these problems harder. We present improvements to learning-based methods for on-board learning of locomotion, classification, and navigation of microrobots. We show how simulated locomotion can be achieved with model-based reinforcement learning via on-board sensor data distilled into control. Next, we introduce a sparse, linear detector and a Dynamic Thresholding method to FAST Visual Odometry for improved navigation in the noisy regime of mm-scale imagery. We end with a new image classifier capable of classification with fewer than one million multiply-and-accumulate (MAC) operations by combining fast downsampling, efficient layer structures, and hard activation functions. These are promising steps toward using state-of-the-art algorithms in the power-limited world of edge intelligence and microrobots.

Low Level Control of a Quadrotor with Deep Model-Based Reinforcement Learning

Jan 11, 2019

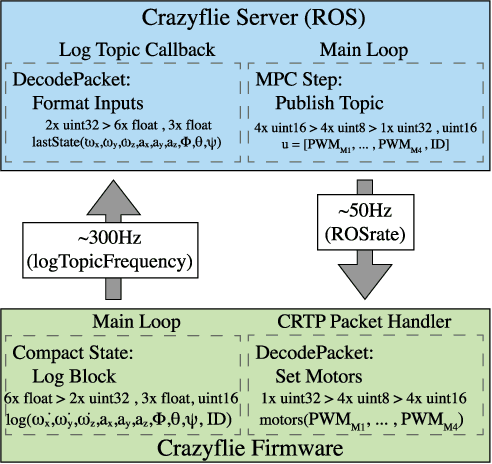

Abstract: Generating low-level robot controllers often requires manual parameter tuning and significant system knowledge, which can result in long design times for highly specialized controllers. With the growth of automation, the need for such controllers might grow faster than the number of expert designers. To address the problem of rapidly generating low-level controllers without domain knowledge, we propose using model-based reinforcement learning (MBRL) trained on a few minutes of automatically generated data. In this paper, we explore the capabilities of MBRL on a Crazyflie quadrotor with rapid dynamics, where existing classical control schemes offer a baseline against the new method's performance. To our knowledge, this is the first use of MBRL for low-level controlled hover of a quadrotor using only on-board sensors, direct motor input signals, and no initial dynamics knowledge. Our forward dynamics model for prediction is a neural network tuned to predict the state variables at the next time step, with a regularization term on the variance of predictions. The model predictive controller then transmits the best actions from a GPU-enabled base station to the quadrotor firmware via radio. In our experiments, the quadrotor achieved hovering capability of up to 6 seconds with 3 minutes of experimental training data.
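The pairing of a forward dynamics model with a model predictive controller can be sketched as follows. The abstract does not say how the controller searches for actions, so this sketch assumes random shooting as one common choice. The hand-written `dynamics_model` (a 1D double integrator) is a hypothetical stand-in for the learned neural network, and the cost function, bounds, and all other names are illustrative assumptions rather than the paper's setup.

```python
import random


def dynamics_model(state, action, dt=0.1):
    """Hypothetical stand-in for a learned one-step model.

    state = (position error, velocity); action = commanded acceleration.
    """
    pos, vel = state
    return (pos + vel * dt, vel + action * dt)


def cost(state):
    """Assumed hover-style cost: penalize position error and velocity."""
    pos, vel = state
    return pos ** 2 + 0.1 * vel ** 2


def random_shooting_mpc(state, model, n_candidates=200, horizon=10,
                        action_bounds=(-5.0, 5.0), rng=None):
    """Sample random action sequences, roll each through the model, and
    return the first action of the cheapest sequence plus its planned cost."""
    rng = rng or random.Random(0)
    low, high = action_bounds
    best_action, best_cost = None, float("inf")
    for _ in range(n_candidates):
        actions = [rng.uniform(low, high) for _ in range(horizon)]
        s, total = state, 0.0
        for a in actions:
            s = model(s, a)      # predicted next state
            total += cost(s)     # accumulate cost along the predicted path
        if total < best_cost:
            best_cost, best_action = total, actions[0]
    return best_action, best_cost


# Plan from a state that is 1 unit away from the target and at rest.
start = (1.0, 0.0)
action, planned_cost = random_shooting_mpc(start, dynamics_model)

# Cost of doing nothing over the same horizon, for comparison.
s, idle_cost = start, 0.0
for _ in range(10):
    s = dynamics_model(s, 0.0)
    idle_cost += cost(s)
```

Only the first action of the best sequence is returned, and planning is repeated at the next time step from the newly observed state. In a setup like the one the abstract describes, that planning loop would run on the base station, with the selected action sent to the vehicle.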
