Nathalie Majcherczyk

Scalable Multi-Robot Task Allocation and Coordination under Signal Temporal Logic Specifications

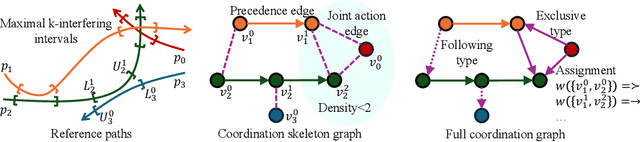

Mar 04, 2025

Abstract: Motion planning with simple objectives, such as collision-avoidance and goal-reaching, can be solved efficiently using modern planners. However, the complexity of the allowed tasks for these planners is limited. On the other hand, signal temporal logic (STL) can specify complex requirements, but STL-based motion planning and control algorithms often face scalability issues, especially in large multi-robot systems with complex dynamics. In this paper, we propose an algorithm that leverages the best of the two worlds. We first use a single-robot motion planner to efficiently generate a set of alternative reference paths for each robot. Then coordination requirements are specified using STL, which is defined over the assignment of paths and robots' progress along those paths. We use a Mixed Integer Linear Program (MILP) to compute task assignments and robot progress targets over time such that the STL specification is satisfied. Finally, a local controller is used to track the target progress. Simulations demonstrate that our method can handle tasks with complex constraints and scales to large multi-robot teams and intricate task allocation scenarios.

Reliable and Efficient Multi-Agent Coordination via Graph Neural Network Variational Autoencoders

Mar 04, 2025

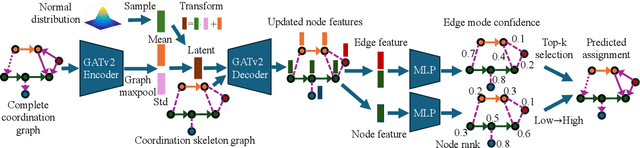

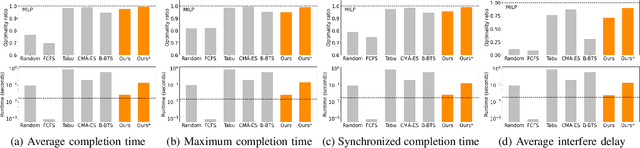

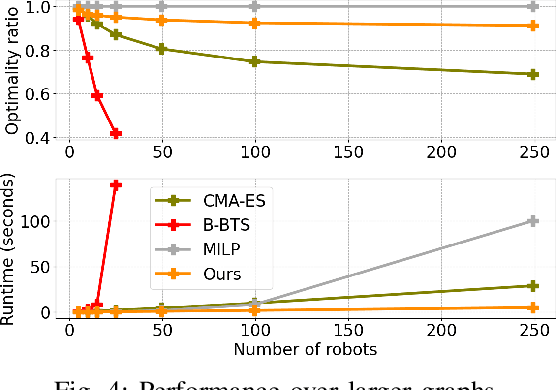

Abstract: Multi-agent coordination is crucial for reliable multi-robot navigation in shared spaces such as automated warehouses. In regions of dense robot traffic, local coordination methods may fail to find a deadlock-free solution. In these scenarios, it is appropriate to let a central unit generate a global schedule that decides the passing order of robots. However, the runtime of such centralized coordination methods increases significantly with the problem scale. In this paper, we propose to leverage Graph Neural Network Variational Autoencoders (GNN-VAE) to solve the multi-agent coordination problem at scale faster than through centralized optimization. We formulate the coordination problem as a graph problem and collect ground truth data using a Mixed-Integer Linear Program (MILP) solver. During training, our learning framework encodes good-quality solutions of the graph problem into a latent space. At inference time, solution samples are decoded from the sampled latent variables, and the lowest-cost sample is selected for coordination. Finally, the feasible proposal with the highest performance index is selected for deployment. By construction, our GNN-VAE framework returns solutions that always respect the constraints of the considered coordination problem. Numerical results show that our approach trained on small-scale problems can achieve high-quality solutions even for large-scale problems with 250 robots, being much faster than other baselines. Project page: https://mengyuest.github.io/gnn-vae-coord

Learning to View: Decision Transformers for Active Object Detection

Jan 23, 2023

Abstract: Active perception describes a broad class of techniques that couple planning and perception systems to move the robot so that it gathers more information about the environment. In most robotic systems, perception is typically independent of motion planning. For example, traditional object detection is passive: it operates only on the images it receives. However, we have a chance to improve the results if we allow planning to consume detection signals and move the robot to collect views that maximize the quality of the results. In this paper, we use reinforcement learning (RL) methods to control the robot in order to obtain images that maximize the detection quality. Specifically, we propose using a Decision Transformer with online fine-tuning, which first optimizes the policy with a pre-collected expert dataset and then improves the learned policy by exploring better solutions in the environment. We evaluate the performance of the proposed method on an interactive dataset collected from an indoor scenario simulator. Experimental results demonstrate that our method outperforms all baselines, including expert policy and pure offline RL methods. We also provide exhaustive analyses of the reward distribution and observation space.

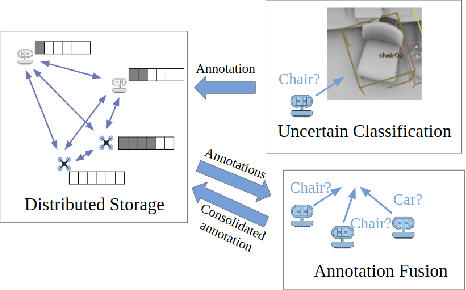

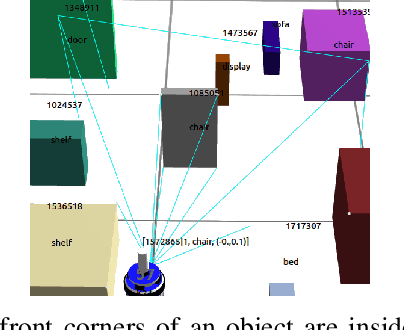

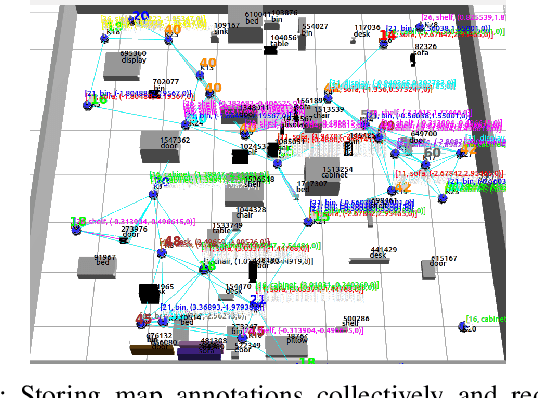

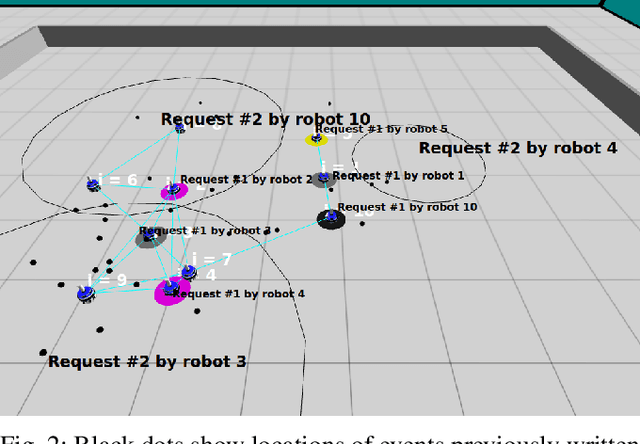

Distributed Data Storage and Fusion for Collective Perception in Resource-Limited Mobile Robot Swarms

Dec 15, 2020

Abstract: In this paper, we propose an approach to the distributed storage and fusion of data for collective perception in resource-limited robot swarms. We demonstrate our approach in a distributed semantic classification scenario. We consider a team of mobile robots, in which each robot runs a pre-trained classifier of known accuracy to annotate objects in the environment. We provide two main contributions: (i) a decentralized, shared data structure for efficient storage and retrieval of the semantic annotations, specifically designed for low-resource mobile robots; and (ii) a voting-based, decentralized algorithm to reduce the variance of the calculated annotations in the presence of imperfect classification. We discuss the theory and implementation of both contributions, and perform an extensive set of realistic simulated experiments to evaluate the performance of our approach.
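As a rough illustration of the voting idea mentioned in the abstract, the sketch below fuses the labels that several robots assign to the same object by plain majority vote. This is not the paper's algorithm: the function name `majority_vote`, the label strings, and the agreement ratio it returns are all assumptions made for this example, and the decentralized storage side is not modeled at all.

```python
from collections import Counter
from typing import Sequence, Tuple


def majority_vote(annotations: Sequence[str]) -> Tuple[str, float]:
    """Return the most frequent label and the fraction of votes it received.

    `annotations` holds one label per robot observation of the same object
    (hypothetical input format). Ties are broken in favor of the label that
    was seen first.
    """
    if not annotations:
        raise ValueError("at least one annotation is required")
    counts = Counter(annotations)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(annotations)


if __name__ == "__main__":
    # Three robots annotate the same object; one classifier output is wrong.
    label, agreement = majority_vote(["chair", "table", "chair"])
    print(label, round(agreement, 2))
```

Combining several imperfect votes this way tends to give a more stable label than any single classifier output, which is the intuition behind using voting to reduce annotation variance.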

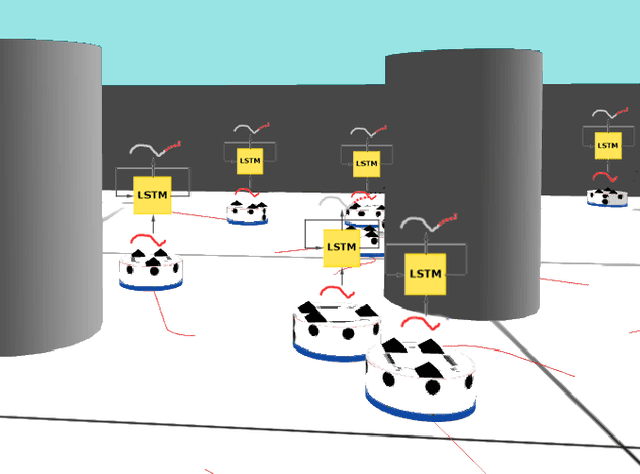

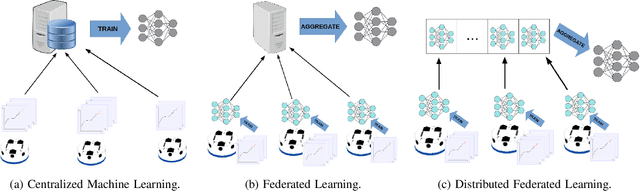

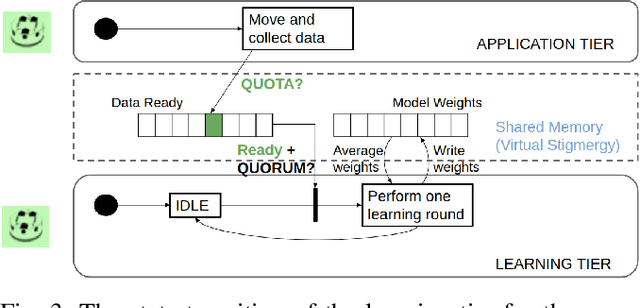

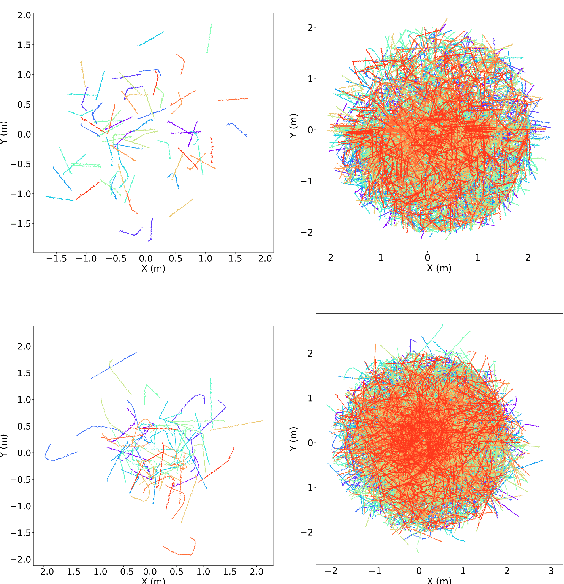

Flow-FL: Data-Driven Federated Learning for Spatio-Temporal Predictions in Multi-Robot Systems

Oct 16, 2020

Abstract: In this paper, we show how the Federated Learning (FL) framework enables learning collectively from distributed data in connected robot teams. This framework typically works with clients collecting data locally, updating neural network weights of their model, and sending updates to a server for aggregation into a global model. We explore the design space of FL by comparing two variants of this concept. The first variant follows the traditional FL approach in which a server aggregates the local models. In the second variant, which we call Flow-FL, the aggregation process is serverless thanks to the use of a gossip-based shared data structure. In both variants, we use a data-driven mechanism to synchronize the learning process in which robots contribute model updates when they collect sufficient data. We validate our approach with an agent trajectory forecasting problem in a multi-agent setting. Using a centralized implementation as a baseline, we study the effects of staggered online data collection, and variations in data flow, number of participating robots, and time delays introduced by the decentralization of the framework in a multi-robot setting.
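The server-side aggregation step described in the abstract can be sketched as a sample-weighted average of client weight vectors, in the style of standard federated averaging. This is a minimal sketch under stated assumptions, not the Flow-FL implementation: the names `federated_average` and `ready_to_contribute`, the flat-list weight format, and the `min_samples` threshold are hypothetical, and the gossip-based serverless variant is not shown.

```python
from typing import List, Sequence


def ready_to_contribute(num_samples: int, min_samples: int) -> bool:
    """Data-driven gate (assumed form): contribute only with enough local data."""
    return num_samples >= min_samples


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client weight vectors, weighting each by its local sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client weight vector")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all weight vectors must have the same length")
        for i, w in enumerate(weights):
            averaged[i] += w * size / total
    return averaged


if __name__ == "__main__":
    # Two robots with 1 and 3 local samples; both pass a threshold of 1.
    weights = [[1.0, 2.0], [3.0, 4.0]]
    sizes = [1, 3]
    active = [i for i, n in enumerate(sizes) if ready_to_contribute(n, 1)]
    print(federated_average([weights[i] for i in active],
                            [sizes[i] for i in active]))
```

Weighting by sample count lets robots that have collected more data influence the global model more, which is one common choice; a uniform average is another.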

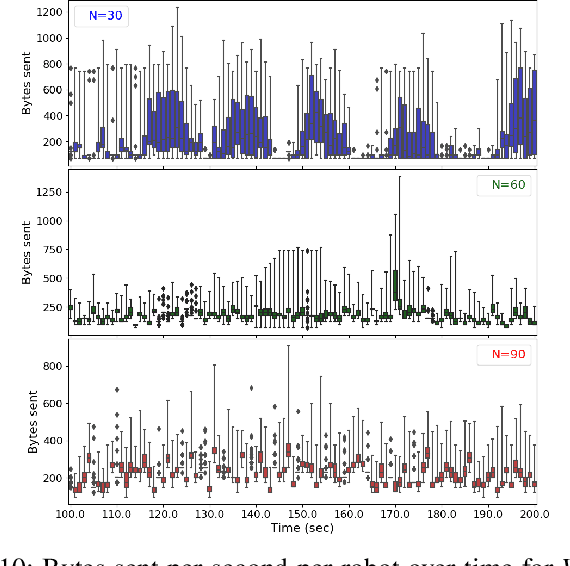

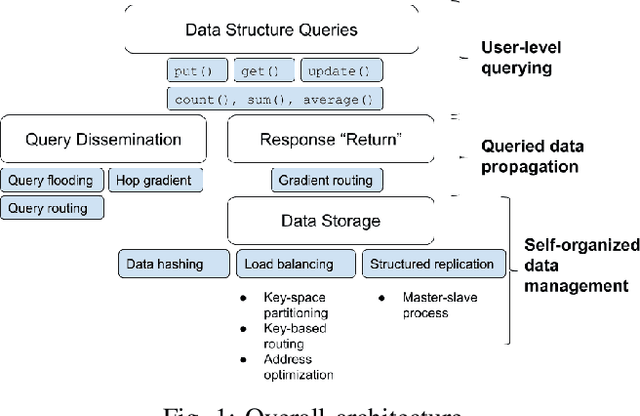

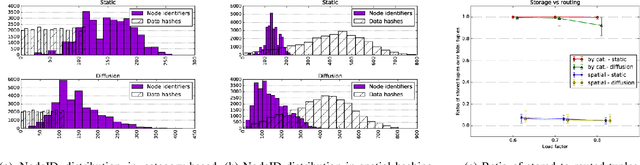

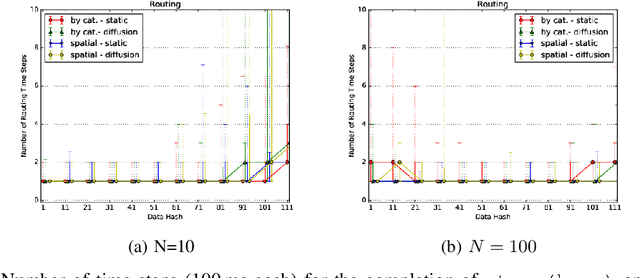

SwarmMesh: A Distributed Data Structure for Cooperative Multi-Robot Applications

Sep 11, 2019

Abstract: We present an approach to the distributed storage of data across a swarm of mobile robots that forms a shared global memory. We assume that external storage infrastructure is absent, and that each robot is capable of devoting a quota of memory and bandwidth to distributed storage. Our approach is motivated by the insight that in many applications data is collected at the periphery of a swarm topology, but the periphery also happens to be the most dangerous location for storing data, especially in exploration missions. Our approach is designed to promote data storage in the locations in the swarm that best suit a specific feature of interest in the data, while accounting for the constantly changing topology due to individual motion. We analyze two possible features of interest: the data type and the data item position in the environment. We assess the performance of our approach in a large set of simulated experiments. The evaluation shows that our approach is capable of storing quantities of data that exceed the memory of individual robots, while maintaining near-perfect data retention in high-load conditions.

Decentralized Connectivity-Preserving Deployment of Large-Scale Robot Swarms

Jun 01, 2018

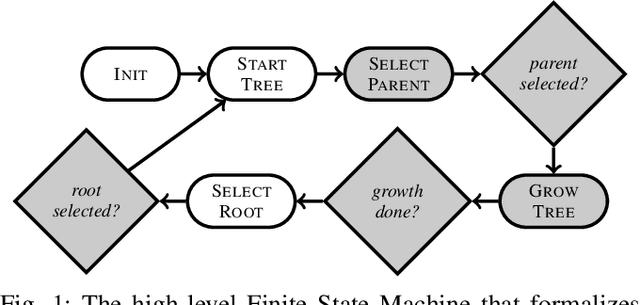

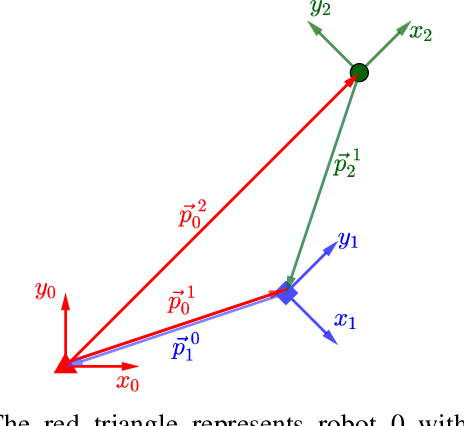

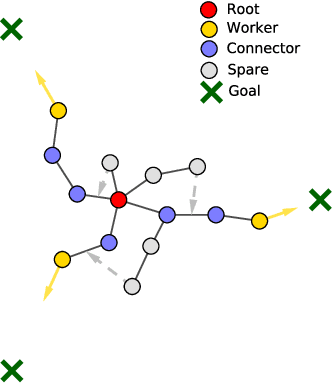

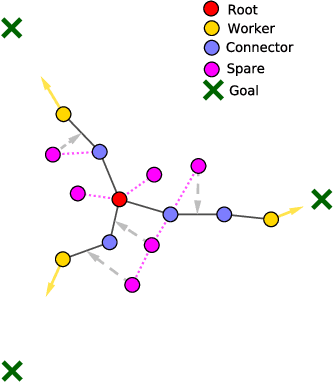

Abstract: We present a decentralized and scalable approach for deployment of a robot swarm. Our approach tackles scenarios in which the swarm must reach multiple spatially distributed targets, and enforce the constraint that the robot network cannot be split. The basic idea behind our work is to construct a logical tree topology over the physical network formed by the robots. The logical tree acts as a backbone used by robots to enforce connectivity constraints. We study and compare two algorithms to form the logical tree: outwards and inwards. These algorithms differ in the order in which the robots join the tree: the outwards algorithm starts at the tree root and grows towards the targets, while the inwards algorithm proceeds in the opposite manner. Both algorithms perform periodic reconfiguration, to prevent suboptimal topologies from halting the growth of the tree. Our contributions are: (i) the formulation of the two algorithms; (ii) a comparison of the algorithms in extensive physics-based simulations; (iii) a validation of our findings through real-robot experiments.
